This virtual Viking battle was the most immersive VR I've ever seen

Putting reality back in VR

There's never been a better time to be a VR enthusiast. With the growing presence of standalone, wireless headsets, plus motion tracking and screen resolutions advanced enough to keep VR experiences at least loosely in sync with our body's own senses, we're now reaching a point where VR simulations are starting to feel, if not real, certainly more realistic than they used to.

We’re still far from the Matrix, however. Developers are still trying to get around basic physical issues like motion sickness and eye strain, while the kinds of human character models generated for VR game engines – or any game engine, for that matter – are anything but lifelike.

With volumetric capture, though, that might start to change.

I visited Dimension, a VR production studio working with this new video capture technology, to find out how a simulated battle aboard a Viking ship could signal the future of interactive VR experiences.

What is volumetric capture?

Volumetric capture is a relatively new video capture technology for recreating people and objects in virtual reality. Microsoft holds the patents on the version used here, and only two studios worldwide currently license it, Dimension being one of them. It has the potential to change the level of immersion and emotional engagement we get from VR.

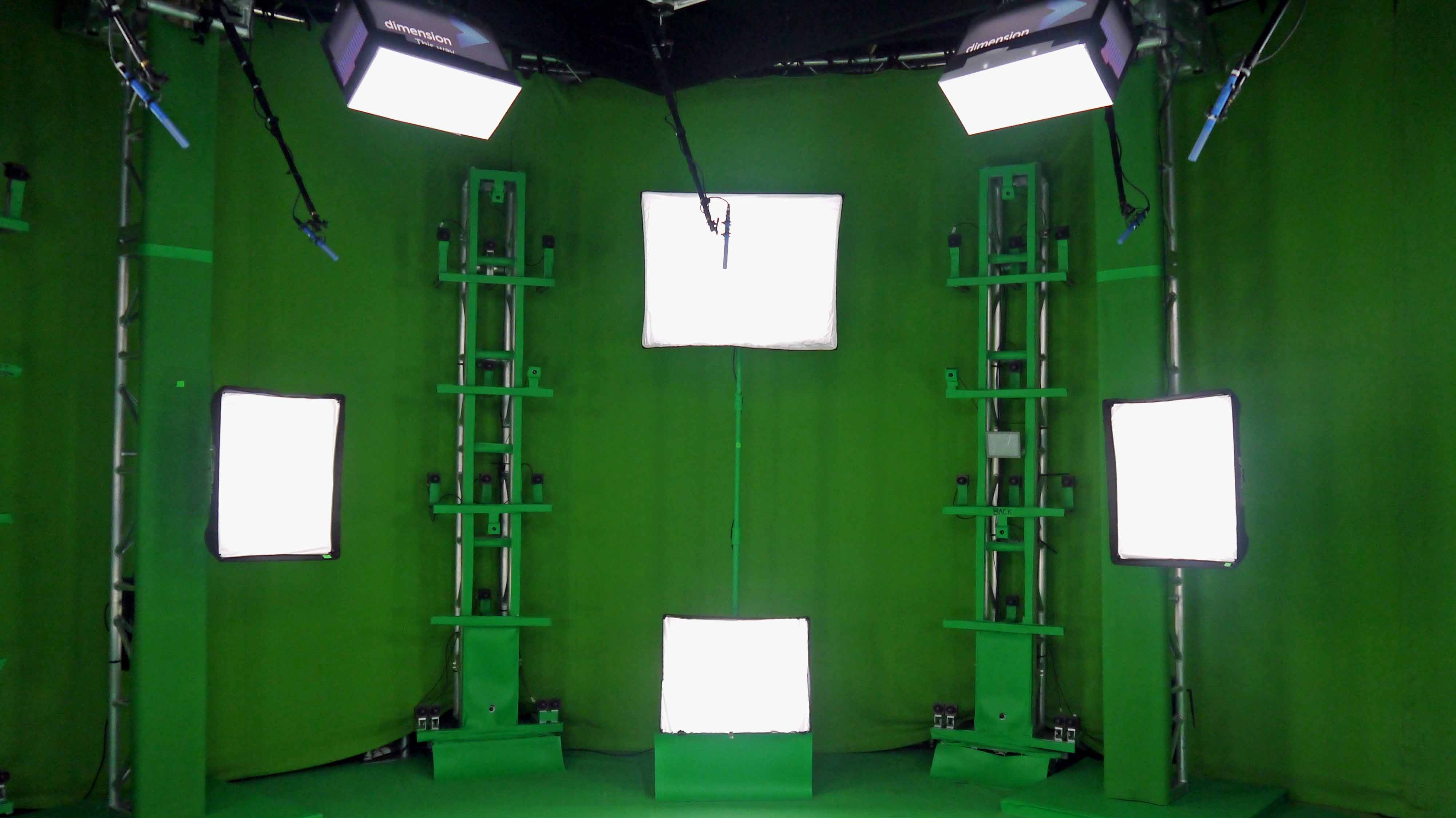

Instead of using a 360-degree camera that snaps real-life footage in all directions, or recreating an entire scene in a computer physics engine, volumetric capture uses a vast array of cameras in a dedicated capture studio, recording from multiple angles to capture an incredible amount of detail, which is then scanned into a CGI environment.

Dimension’s capture stage has 106 individual cameras (53 RGB, 53 infra-red) as well as eight directional microphones to capture audio in real-time, instead of adding in separately in post-production. The full array is able to capture over 10GB of detail per second, at 30 frames per second – or 20GB/s at 60 frames per second.


Steve Jelley, managing director of Hammerhead (which owns and operates Dimension), ran me through how the process works:

“Half of [the cameras] are shooting visible light, and the other half are shooting infra-red light, which is lit by these lasers here… and that helps our algorithms figure out form as well as color.

“We take those images, and then we run them through a massive computer farm over the road, which basically computes every position of every single pixel in space, creates a mesh, and wraps the video footage over the top of it.”

The precision of the mapping method, which uses “thousands of tiny dots” to capture 3D objects, means that the cameras can even recreate details as small as the folds in your clothes – far more detail than you’d get with traditional motion capture methods, which rely on recreating gesture and movement within a computer-generated ‘puppet’.

“That’s the problem [with motion capture],” said Jelley. “You can make it look fantastic, if you got a lot of money, and you’re outputting a 2D frame, but you always lose something in translation.”

What do Vikings have to do with anything?

We arrived at Dimension’s studio to try out a preview of Virtual Viking: The Ambush. A collaboration between Ridley Scott’s production studio RSA Films and the interaction entertainment center The Viking Planet in Olso, it’s one of the latest examples of how immersive the VR experiences of tomorrow could be.

The Ambush is a historically accurate recreation of a Viking battle ship in VR, drawing on expertise from a host of era-appropriate museums ("wherever there are Viking boats", we were told) and research texts such as Kim Hjardar's Vikings at War. Produced for The Viking Planet center in Oslo, Norway, the demo is set to be part of a wider exhibition on the lives of Norse seafarers, using a number of VR headsets to bring budding historians onto a Viking ship in the heat of battle.

Given the involvement of Microsoft, it’s unsurprising that The Ambush runs on a Windows Mixed Reality headset: the HP Reverb.

The Reverb also has one of the sharpest displays on the market, with 2,160 x 2,160 resolution per-eye panels, delivering twice the display resolution of the HTC Vive Pro and Samsung Odyssey+. It also offers six degrees of freedom (6DoF), tracking both the rotation of your head and your movement through space.

We’re told the end experience will have full haptic feedback for the seats you’re in too, recreating the gentle rock of the boat to minimise motion sickness – another recurring obstacle for seamless VR.

Making the virtual feel real

We’ve been to a lot of VR demos – everything from 8K batman helmets to nausea-inducing paragliding – but The Ambush felt incredibly fresh.

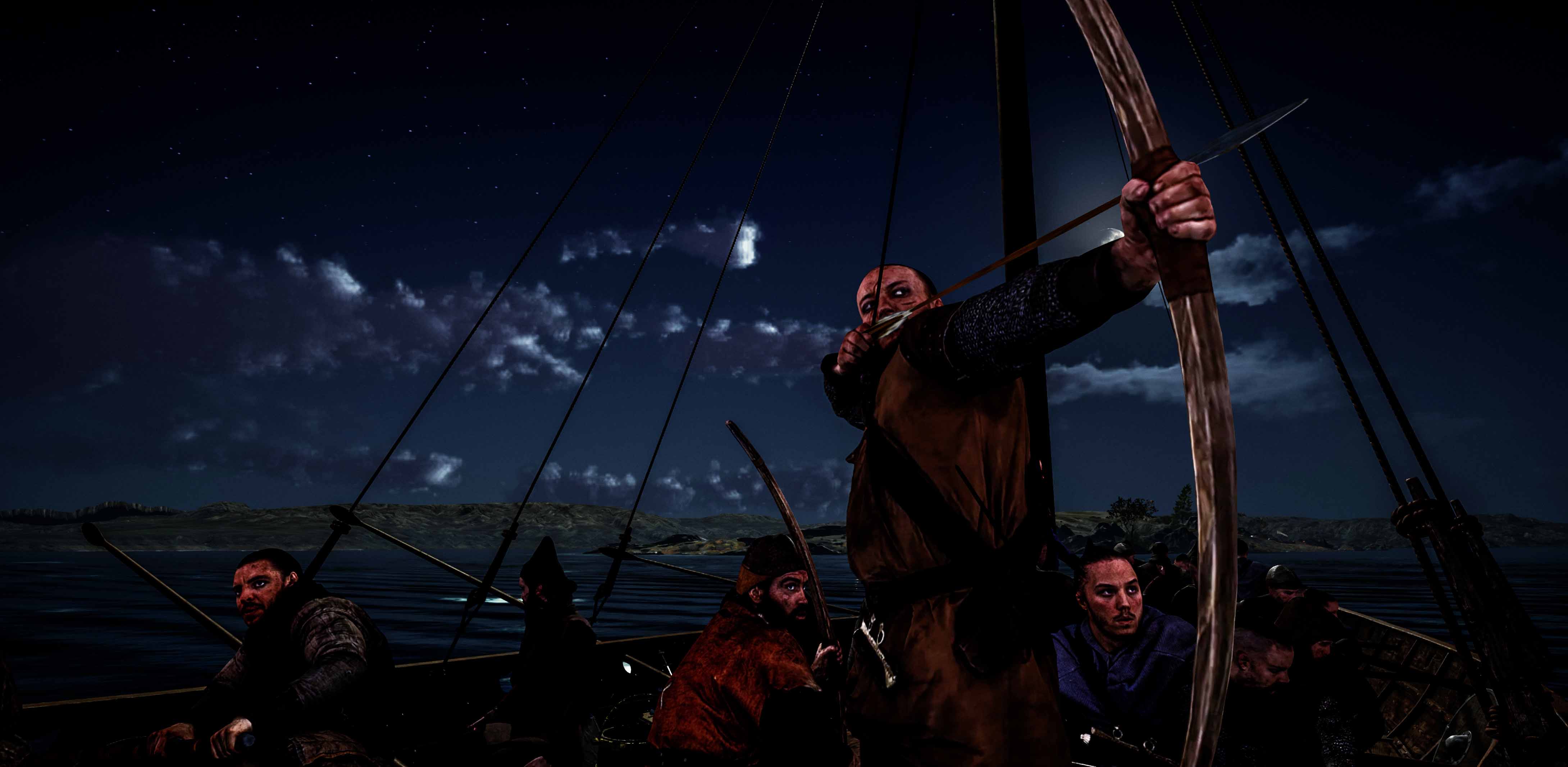

Starting out at a dozy campfire and ending up under siege from flaming arrows on an ancient Viking boat, Dimension has pieced together a simple narrative along Norway's west coast, with historically accurate recreations of boats, weapons and Vikings lending the scene some weight.

The closest comparison I can think of is sitting onstage in a theatre, with the actors only a few feet away from you. I could see the Vikings in front of me heaving their chests as they rowed their ship down the river at night, squinting their eyes to see better in the dark, knuckles tensing around their CGI oars, and flinching as projectiles began to rain down on their allies.

While many of the objects, and the ship itself, were generated in Unreal Engine, it was the actors that really made the virtual space feel peopled, and made the resulting destruction of the ship's crew all the more affecting.

It’s those small details, in a look, a tightening grip on an oar, or the twitch of a facial muscle, that make a person feel real – without the ‘uncanny valley’ effect with digital characters that simply aren’t as expressive as a human face.

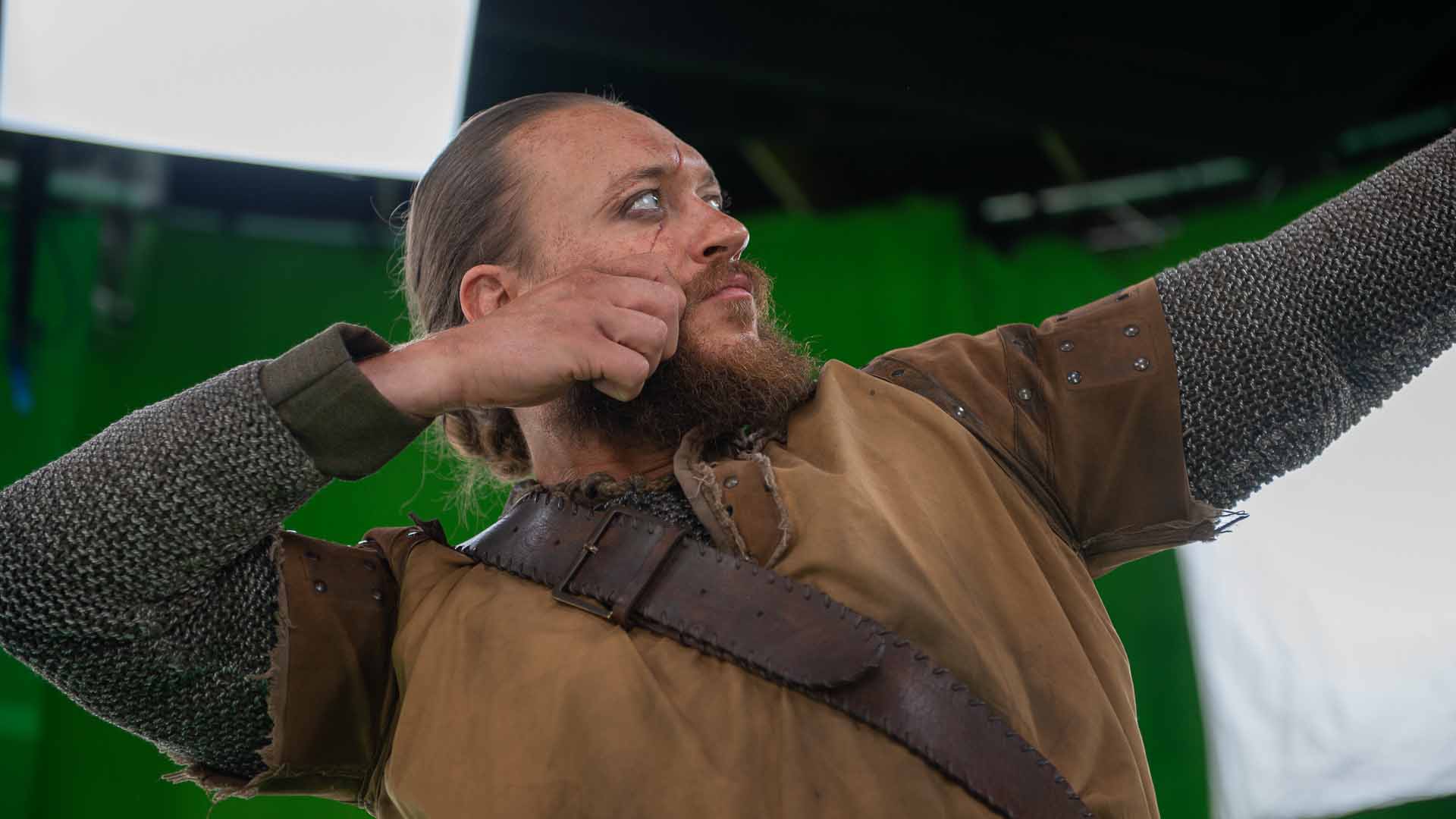

The challenge with capturing human performances, though, is that you can’t blame the technology for bad acting. Lisa Joseph, producer at RSA Films, started her career in the theatre, and is only too aware of how important this aspect is.

“You’re taking real people, and putting them into a computer generated world,” says Joseph. “So they really need to be able to act.”

Dimension had to run "rigorous casting" over several days to make sure the result was worth the trouble of the new capture method. The process was made easier by adding NPCs (non-player characters) in the background, and using real, captured actors only for the characters closest to the viewer, where the difference in detail would really count.

There are certainly big applications in VR games: imagine an open-world Fallout or Skyrim with complex human expressions instead of rote facial animations. It could completely transform how engaging our interactions in games can be.

Those at Dimension won’t be baited on the topic, but their use of Unreal Engine – which powers titles ranging from Fortnite and Gears of War to the Final Fantasy VII remake – gives us hope that it isn’t too long before volumetric capture catches on in the wider industry. We have a lot more VR demos ahead of us, and we want them to feel a lot more like this.

Henry is a freelance technology journalist, and former News & Features Editor for TechRadar, where he specialized in home entertainment gadgets such as TVs, projectors, soundbars, and smart speakers. Other bylines include Edge, T3, iMore, GamesRadar, NBC News, Healthline, and The Times.