SecondLight explained: MS Surface on steroids

We get hands on with Microsoft's 3D interface and display

One of the tech demos that caught our eye at last week's visit to Microsoft Research was SecondLight. We had already seen a demo of it on the web (some even wrongly branded it a replacement for Surface), but David Molyneaux from Microsoft Research Cambridge took us through how it actually works.

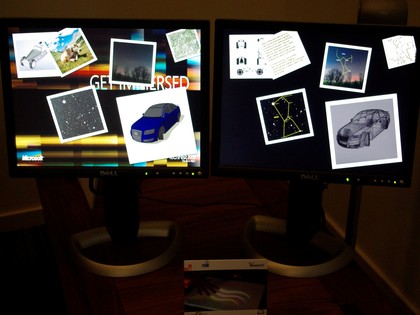

"It's a very simple concept," explains Molyneaux, showing us a Surface-type display atop what looks, essentially, like a tall amplifier's flight case. Inside are two projectors, each hooked up to a separate monitor so that those observing the demo can see the output of each projector.

"[Surface is] a multi-touch computer. [SecondLight] is very similar, so again we can have single points of interaction, we can have multiple points of interaction and we can all interact simultaneously if you wanted to. But this is different. Additionally to the Surface computer we can reveal extra information than what's already on display."

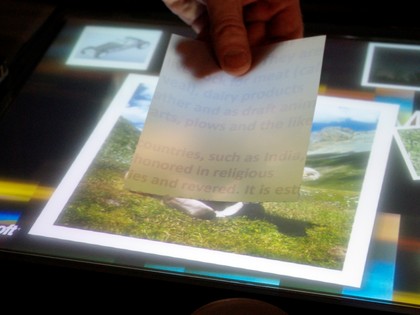

Molyneaux showed us an image of a star constellation, then held a simple piece of plastic over it to reveal the constellation lines and the names of the stars. It's easy to imagine this being put to good use in education.

Next came a map demo that worked the same way, with street names revealed under the plastic, and a picture of a cow that revealed some text about the animal. "These are totally passive objects. We're not doing any kind of tracking," says Molyneaux.

Diffused or transparent?

So how does it work? "It's all down to a special diffusing surface that we're using. It's a switchable diffuser like that on privacy glass. So it can be in two different states, diffused or transparent."


"What we're doing is applying a voltage to change it between the two different states so fast that you can't actually see that switching happening."

"[The two projectors] are actually synchronised with the two different states of the surface. When it's in the diffused state, like the tracing paper, we only project with one projector. That's the one you can see if you walk up and look at it (as shown on the monitor on the left)."

The change happens extremely rapidly. Molyneaux then explained how we're able to see the overlay image. "When we apply the voltage to the surface and it goes to transparent, we actually see a second image.

"The content stays in the same location in both projectors. But as the surface is now transparent, the rays of light pass through and project onto the ceiling if you don't catch them [with an object]." Molyneaux pointed out the fuzzy projection of the overlay image on the ceiling.
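The synchronisation Molyneaux describes is a form of time multiplexing: each switching cycle is shared between a diffuse phase and a transparent phase, and each phase has its own projector. The following is a minimal sketch of that schedule, not Microsoft's code. The 60 Hz cycle rate and the even 50/50 split between the two states are assumptions made purely for illustration (the article gives neither figure), and the projector labels "surface" and "through" are hypothetical.

```python
# Hypothetical model of SecondLight-style time multiplexing.
# ASSUMPTIONS: SWITCH_HZ and the 50/50 duty split are invented for illustration;
# the real switching rate is not stated in the article.
from dataclasses import dataclass

SWITCH_HZ = 60.0  # assumed number of full diffuse + transparent cycles per second


@dataclass(frozen=True)
class Phase:
    diffuser: str   # "diffuse" or "transparent"
    projector: str  # hypothetical label for the projector allowed to emit light


def phase_at(t: float, switch_hz: float = SWITCH_HZ) -> Phase:
    """Return the diffuser state and active projector at time t (in seconds).

    The first half of each cycle is diffuse, so the "surface" projector's image
    appears on the tabletop. The second half is transparent, so the "through"
    projector's image passes the surface and only shows up on an object held
    above it (or, uncaught, on the ceiling).
    """
    period = 1.0 / switch_hz
    position = (t % period) / period  # fraction of the way through the current cycle
    if position < 0.5:
        return Phase(diffuser="diffuse", projector="surface")
    return Phase(diffuser="transparent", projector="through")


if __name__ == "__main__":
    # Step through two cycles in quarter-cycle increments.
    for k in range(8):
        t = k / (4 * SWITCH_HZ)
        print(f"{t * 1000:6.2f} ms -> {phase_at(t)}")
```

If the cycle repeats faster than the eye can follow, each projector's image appears continuous even though it is only emitted for half the time.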

Tracking objects

SecondLight can also track objects and display information accordingly, and as Molyneaux demonstrated, this is where the technology could really find a niche.

"When you do the touch detection on the surface, we have rows and rows of infrared LEDs and they shoot infrared light into the acrylic that we have on top of this diffuser. [The light] bounces around inside the acrylic through total internal reflection until something stops it - if I touch the surface it will break that internal reflection."
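Snell's law shows why the infrared light stays trapped. Assume a refractive index of about 1.49 for acrylic. This is a typical textbook value, not a figure from Microsoft. Light striking the acrylic/air boundary at more than the critical angle $\theta_c$ is reflected back inside the sheet:

```latex
\theta_c = \arcsin\!\left(\frac{n_{\text{air}}}{n_{\text{acrylic}}}\right)
         \approx \arcsin\!\left(\frac{1.00}{1.49}\right)
         \approx 42.2^\circ
```

A fingertip has a much higher refractive index than air. Where it touches the acrylic, that condition no longer holds at the point of contact, and some of the infrared light is scattered out of the sheet. That escaping light is what gets detected as a touch.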

So how can this be used to track objects? "As you'll remember our diffuser is transparent for [only] part of the cycle. If I put my fingers down it can see them or my hand, or potentially if I lean over it can see my face and recognise me."

Molyneaux then showed us what he calls a portable interaction panel. "What we have here is a piece of acrylic with a battery and a strip of infrared LEDs with two lines etched into the acrylic - that breaks the total internal reflection. These [the etched lines] show as two lines of infrared light and that allows us to track it."
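Fingertips and the panel's etched lines both show up as bright patches of infrared light, so one plausible way to track them is to find connected bright regions in an infrared image. The sketch below is a generic illustration under several assumptions, not SecondLight's actual pipeline:

- It assumes an infrared camera image is available as a grid of 0 to 255 values. The article says the system "can see" but does not describe the sensor.
- The threshold of 200 is made up.
- The two largest blobs are assumed to be the two etched lines on opposite edges of the panel.
- All function names are hypothetical.

```python
# Hypothetical blob tracker for an IR frame; threshold, frame format and the
# "two largest blobs are the etched lines" rule are illustrative assumptions.
import math
from typing import List, Tuple

Frame = List[List[int]]       # grayscale IR image, 0-255 per pixel, indexed [y][x]
Pixel = Tuple[int, int]       # (x, y)
Point = Tuple[float, float]   # (x, y) in pixel coordinates


def find_blobs(frame: Frame, threshold: int = 200) -> List[List[Pixel]]:
    """Group bright pixels into 4-connected components with an iterative flood fill."""
    height, width = len(frame), len(frame[0])
    seen = [[False] * width for _ in range(height)]
    blobs: List[List[Pixel]] = []
    for y in range(height):
        for x in range(width):
            if seen[y][x] or frame[y][x] < threshold:
                continue
            seen[y][x] = True
            stack, pixels = [(x, y)], []
            while stack:
                cx, cy = stack.pop()
                pixels.append((cx, cy))
                for nx, ny in ((cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)):
                    if (0 <= nx < width and 0 <= ny < height
                            and not seen[ny][nx] and frame[ny][nx] >= threshold):
                        seen[ny][nx] = True
                        stack.append((nx, ny))
            blobs.append(pixels)
    return blobs


def centroid(pixels: List[Pixel]) -> Point:
    """Mean position of a blob's pixels."""
    xs, ys = zip(*pixels)
    return sum(xs) / len(xs), sum(ys) / len(ys)


def panel_pose(frame: Frame, threshold: int = 200) -> Tuple[Point, float]:
    """Estimate the panel centre and the angle (degrees) of the line joining
    the two etched-line centroids, taking the two largest blobs as those lines."""
    blobs = sorted(find_blobs(frame, threshold), key=len, reverse=True)[:2]
    if len(blobs) < 2:
        raise ValueError("panel not visible: fewer than two IR lines found")
    (x1, y1), (x2, y2) = sorted(centroid(b) for b in blobs)  # left line first
    centre = ((x1 + x2) / 2, (y1 + y2) / 2)
    angle = math.degrees(math.atan2(y2 - y1, x2 - x1))
    return centre, angle
```

Recomputing the pose every frame is what lets the projected content follow the panel as it moves. A real system would also have to tell the panel's lines apart from fingertip blobs, which this sketch ignores.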

When Molyneaux held the panel over the surface, an animation of a running man appeared on it. As he tilted the panel, the image moved and adjusted with it. "What we're doing is pre-distorting the image so that when [the image] hits the interaction panel it's actually undistorted," says Molyneaux.

"That enables us to have a lot more movement on the surface without having the distortion you typically get with all projectors."
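A standard way to pre-distort an image for a tilted flat surface is a planar homography. This is a 3x3 projective transform fitted from four corner correspondences. We don't know how Microsoft computes its warp, so treat this as a swapped-in, textbook technique rather than SecondLight's method. The sketch also assumes the tracker already knows where the panel's four corners land in projector pixels. The helper names are hypothetical.

```python
# Hypothetical pre-distortion sketch using a planar homography (textbook technique,
# not SecondLight's documented method). Assumes the panel's four corners are
# already known in projector pixel coordinates.
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Matrix = List[List[float]]


def solve(a: Matrix, b: List[float]) -> List[float]:
    """Solve a @ x = b with Gauss-Jordan elimination and partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate corner layout")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= factor * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


def homography(src: Sequence[Point], dst: Sequence[Point]) -> Matrix:
    """Fit H (with h33 = 1) so that each src corner maps to its dst corner.

    Each correspondence gives two linear equations from
    u = (h0*x + h1*y + h2) / (h6*x + h7*y + 1), and likewise for v.
    """
    rows: List[List[float]] = []
    rhs: List[float] = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([x, y, 1.0, 0.0, 0.0, 0.0, -u * x, -u * y])
        rhs.append(u)
        rows.append([0.0, 0.0, 0.0, x, y, 1.0, -v * x, -v * y])
        rhs.append(v)
    h = solve(rows, rhs)
    return [h[0:3], h[3:6], h[6:8] + [1.0]]


def apply(h: Matrix, p: Point) -> Point:
    """Map a point through H, dividing by the projective coordinate w."""
    x, y = p
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)


if __name__ == "__main__":
    content = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]   # unit-square image
    panel = [(100.0, 120.0), (400.0, 100.0), (420.0, 380.0), (90.0, 350.0)]  # example corners
    H = homography(content, panel)
    print(apply(H, (0.5, 0.5)))  # where the image centre must be drawn
```

To draw the warped image, each point of the flat content is projected at `apply(H, point)`. Because the warp already matches the panel's tilt, the result looks rectangular on the panel. A production renderer would usually run the inverse mapping per projector pixel instead, to avoid gaps.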

"You can imagine many use scenarios, collaborative working scenarios when you have a lot of people round the surface, scoop information off it and drop it back on there." Wait, scoop? Well yes. Molyneaux says it's not beyond the realms of possibility that we might be able to use a portable interaction panel to literally 'pick up' information off the display and replace it elsewhere.

And the possibilities could yet be more groundbreaking. "Quite a lot of medical machines like MRI scanners produce a 3D volume of data. You can imagine this data set could be here virtually above the surface.

"A portable interaction panel could enable you to slice through this data set at different angles, you could even turn it around and get different views through this data set in real time."
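Slicing a volume "at different angles" amounts to sampling the 3D data set along a plane, whose position and orientation would come from the tracked panel. Here is a minimal sketch under assumptions of our own:

- The volume is stored as `volume[z][y][x]`.
- The panel's pose has already been converted into a plane origin and two in-plane step vectors, in voxel units.
- Nearest-neighbour sampling is used for simplicity, where real viewers typically interpolate.
- `slice_volume` is a hypothetical name.

```python
# Hypothetical oblique-slice sampler for a voxel volume (e.g. an MRI scan).
# Volume layout, plane parametrisation and nearest-neighbour sampling are assumptions.
from typing import List, Tuple

Volume = List[List[List[float]]]   # indexed volume[z][y][x]
Vec3 = Tuple[float, float, float]  # (x, y, z) in voxel units


def slice_volume(volume: Volume, origin: Vec3, u_axis: Vec3, v_axis: Vec3,
                 width: int, height: int, fill: float = 0.0) -> List[List[float]]:
    """Sample a height x width image on the plane origin + i*u_axis + j*v_axis.

    Points that fall outside the volume are given the value `fill`.
    """
    depth, rows, cols = len(volume), len(volume[0]), len(volume[0][0])
    image: List[List[float]] = []
    for j in range(height):
        line: List[float] = []
        for i in range(width):
            x = origin[0] + i * u_axis[0] + j * v_axis[0]
            y = origin[1] + i * u_axis[1] + j * v_axis[1]
            z = origin[2] + i * u_axis[2] + j * v_axis[2]
            xi, yi, zi = round(x), round(y), round(z)  # nearest voxel
            if 0 <= xi < cols and 0 <= yi < rows and 0 <= zi < depth:
                line.append(volume[zi][yi][xi])
            else:
                line.append(fill)
        image.append(line)
    return image
```

Tilting the panel changes `u_axis` and `v_axis`, and moving it changes `origin`. Re-sampling on every tracked frame would give the real-time, turn-it-around views Molyneaux describes.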

Dan is TechRadar's former Deputy Editor and is now in charge at our sister site T3.com. Covering all things computing, internet and mobile, he's a seasoned regular at major tech shows such as CES, IFA and Mobile World Congress. Dan has also been a tech expert for many outlets including BBC Radio 4, 5Live and the World Service, The Sun and ITV News.