Generative AI is stealing the valor of human intelligence

The tainted core of generative AI is the devaluing of human culture

With this week's Google I/O 2023 event, we got an extensive preview of how AI tools will transform our day-to-day lives in ways few could have predicted just a few years ago. And even those who did see it coming would never have guessed it would happen so soon.

Within a few weeks or months, Google AI tools will be writing your emails, reading and summarizing other people's emails to you, creating your spreadsheets and presentation notes, and even responding to text messages with appropriate emotional tones (so long as you instruct them to respond in such a way). The whole affair left me rather pessimistic.

And it's not just Google, obviously. Microsoft has partnered with OpenAI - the once non-profit startup turned for-profit AI venture - to reinvigorate its laughingstock of a search engine, Bing, with new generative powers. For a time at least, Bing AI Chat puts Microsoft back on even footing with the internet's Eye of Sauron camped out in Mountain View, California.

Far be it from Google to let that stand, and Google I/O 2023 confirmed what our US Editor-in-Chief Lance Ulanoff predicted months ago: that Google might have been caught flat-footed by Bing's OpenAI integration, but that lasted about as long as it took for Google to open the LaMDA floodgates.

Now, the AI arms race is full-speed ahead, with normally sensible-ish tech companies throwing caution and social responsibility to the wind to leverage their ubiquity in everything from emails to getting directions to ordering pizza so they can cram an AI assistant into every corner of our lives.

I wrote earlier this week about how our work and interpersonal lives are already fractured and atomized from one another to the point of near-isolation, so the idea of putting up more barriers to human interaction, even in something as banal as a work email, struck me as sacrilegious. It was hard to really put my finger on why, but a few days later I'm starting to understand what it is about the rapid march of generative AI that has me so shaken by its progress.

It's anger, fundamentally, at the presumptuousness of the technology being passed off as human-enough that we will accept it as a permanent part of our lives. But more than that, it's the understanding that countless billions of human interactions, bursts of creativity, and painstaking work have been lifted wholesale, stripped of their value, and presented back to us as if the result were just as good as all of that human effort.

Ultimately, these models mimic the composite parts of millennia of human thought and creativity and reassemble them in a place beyond the uncanny valley that is, at its core, fundamentally more offensive. If we were smart, we would burn these models to ash.

Generative AI models are fundamentally anti-human

The first breakout generative AI model to hit the scene was DALL-E, back in January 2021. An amalgamation of Salvador Dalí and the Pixar character WALL-E, DALL-E distills down two recognizable human cultural touchstones, strips them of their value, and repackages them as a bastardization of both.

WALL-E, for those who haven't seen the film, is a sanitation robot abandoned on a ruined planet, condemned to sort and organize the trash of humanity after it has long left the planet behind. It does so tirelessly and alone, until it meets another robot, one with personality and genuine human-ness. We then get to watch as the relationship WALL-E forms with another consciousness helps it discover within itself the capacity to break out beyond its original purpose to find its own meaning. In doing so, it becomes more human than the humans who left it behind, forgotten among their waste.

Salvador Dalí, meanwhile, was one of the twentieth century's greatest artists. Not because he was especially skilled such that no one could paint like he could; Dalí's brilliance as an artist came through seeing the world differently than the rest of us. He excelled at creating surreal visions that challenged our natural perception and expectations, and through dedication to craft was able to translate that vision onto a canvas so the rest of us could share in this small corner of his mind long after he died.

DALL-E, meanwhile, has neither soul nor vision, and it can create nothing on its own. Its output is visually interesting because its source material, its ingredients, are what is interesting, and it can create grotesque caricatures of human art, photography, or illustrations.

But an algorithm isn't a remix (as some might argue, in defense of DALL-E and others), where some artist takes the work of others and adds a new element to provide a different perspective or experience that enriches the original. Generative AI like DALL-E, ChatGPT, and the various voice generators that can produce songs by your favorite artists who never actually performed them is something else, and it definitely isn't human.

It can play the part at a passing glance, but so could the alien in John Carpenter's The Thing. To pretend that the output of a generative AI is anything more than a curiosity is fundamentally antithetical to human culture. It's an anticulture, and it threatens human thought itself with a crude imitation of what it means to think.

AI is stealing the valor of human intelligence, and it can't be forgiven for that

All of this, meanwhile, wouldn't be possible without you, the human being, the one who produced the training data that all these AI models have used to get so good at writing an email. Google's models probably read countless emails to understand what an email is supposed to sound like, and yours was possibly among them.

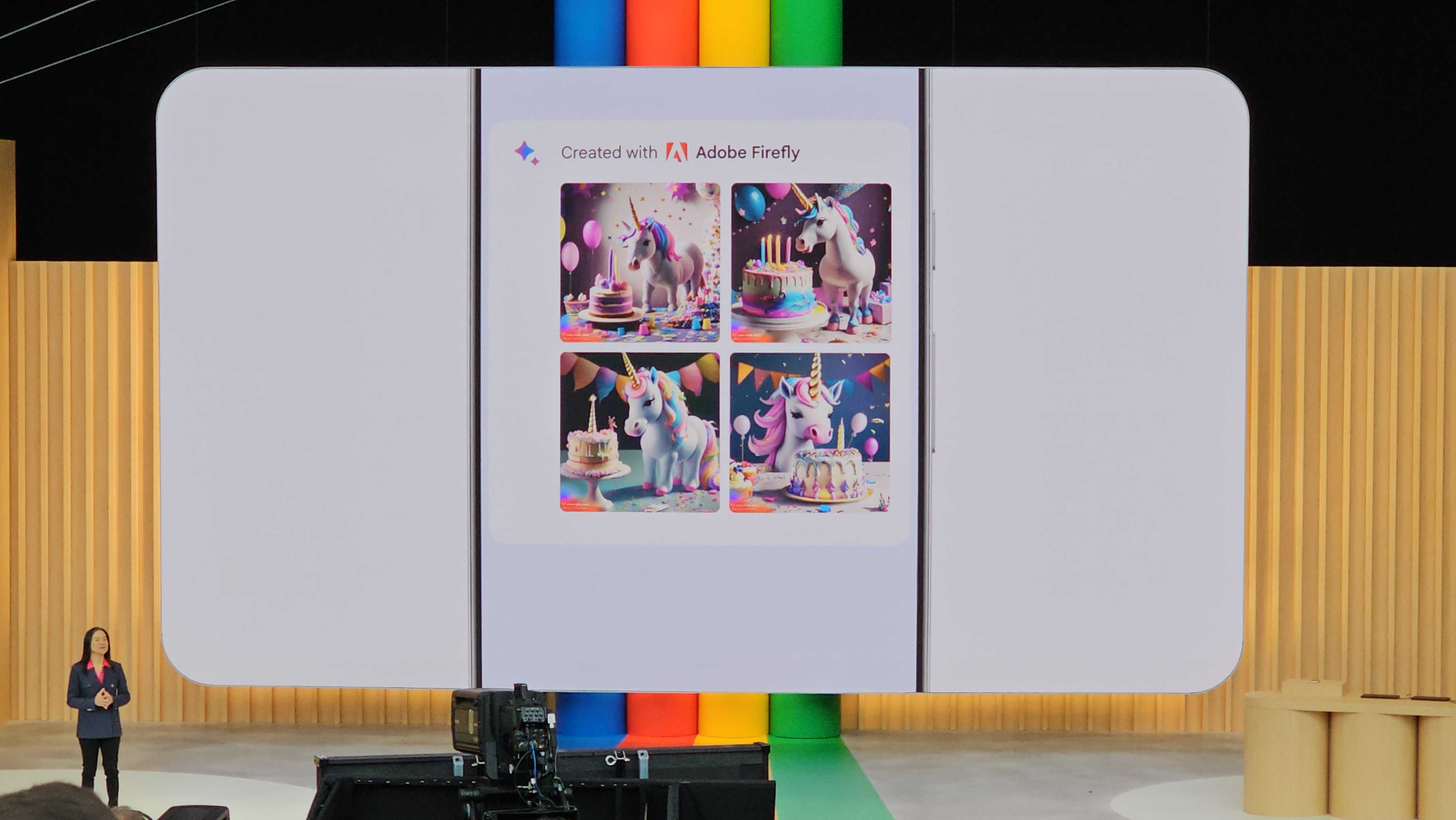

AI image-generation tools like DALL-E, Midjourney, and soon Google's AI image generation, likewise, wouldn't be anywhere without the grueling, often unpaid and thankless work of the photographers, painters, and other artists whose works were used to train these models, almost entirely without the artists' consent or even knowledge.

Hilariously, so much Shutterstock image data has been scraped for these models that the company's watermarks are clearly visible in any number of AI-generated images and even videos. At least a photographer got paid by Shutterstock, but AI image generation won't have to pay you anything, even though it will inevitably have been trained on copyright-protected material that you and others like you will never be paid for.

It might be one JPEG among a few billion, but your creative product will be drawn upon to some degree to produce an image that someone will go on to use for free, or even worse, pay Google or some other company to use without you ever knowing your connection to it. All the while, the existence of these tools will make it impossible for you to continue making a living as an artist, since who is going to pay you for something that they can generate for free that looks enough like what you would have produced?

The only analogy I can think of for this is someone donning a military uniform and claiming to have fought in a war they did not fight in, or claiming some medal or act of heroism that they did not personally earn or perform. This is called stolen valor, and it banks on the respect and admiration given to those who do something productive and heroic by taking credit for something without having to actually do it.

AI generation, whether text, voice, or imagery, can produce nothing on its own; it can only take what you and countless other humans have created, and combine it all into a shape dictated by random chance. It does so without contributing a single new thing of its own to the mix, so at its core, it's more than a form of intellectual theft; it's the theft of intelligence and creativity itself.

Human culture, for better or worse, is something we've earned and have a right, as humans, to participate in and foster. But the proliferation of generative AI content will all but cut off any avenue for genuine human creativity and thought to develop in a meaningful, constructive way.

Artists spend a lifetime developing the skills of their medium through unpaid labors of passion with the promise of one day adding to the mosaic of the human experience, or at least of making enough to live off their work. If an AI comes along and steals that labor and redistributes that profit to some nameless group of shareholders or a select few tech founders, what human will ever want to work to produce art under such a system? And for those who do continue despite the circumstances, the continued theft of their work simply exacerbates the social harm.

This is not the end any of us should want. Humanity, for all its faults, has produced something unique in the universe, as far as we know. Surrendering it to a cohort of tech idealists for profit only makes us complicit in the fundamental crime these AI models commit against the human spirit.

John (He/Him) is the Components Editor here at TechRadar and he is also a programmer, gamer, activist, and Brooklyn College alum currently living in Brooklyn, NY.

Named by the CTA as a CES 2020 Media Trailblazer for his science and technology reporting, John specializes in all areas of computer science, including industry news, hardware reviews, PC gaming, as well as general science writing and the social impact of the tech industry.

You can find him online on Bluesky @johnloeffler.bsky.social