Google's AI plans will soon sever what little human connection we still have at work

Hey Google, there's no such thing as responsible AI

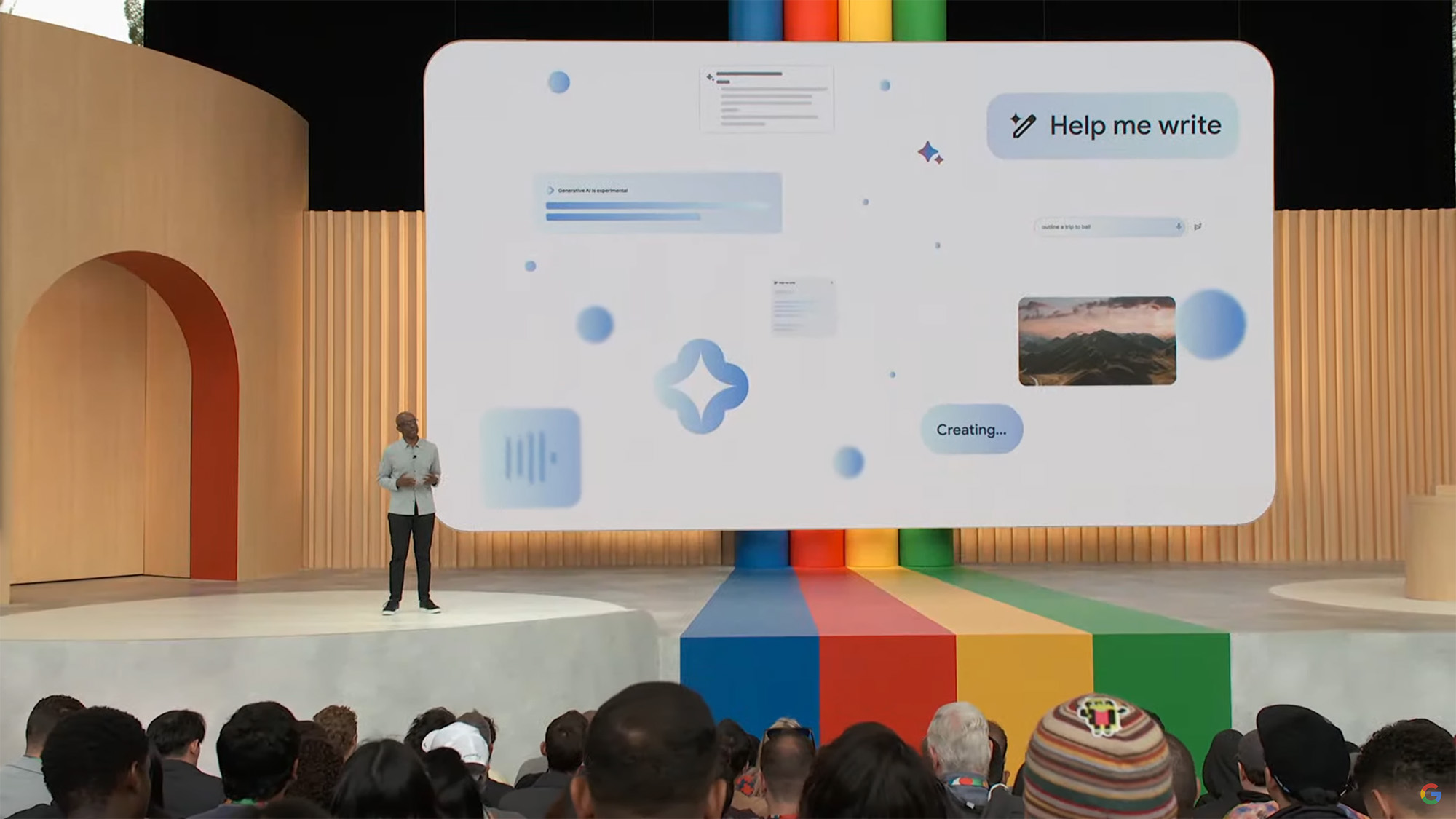

Google I/O 2023 is a wrap, and the company showed off some exciting new hardware and cool new features for Android devices; but the bulk of the presentation was devoted to how Google was integrating AI into all of its major services, from Maps to Gmail to Docs to Photos.

Even its major Android device releases, the Google Pixel 7a, Google Pixel Tablet, and Google Pixel Fold, aren't spared the ever-encroaching presence of AI integration, like AI-generated wallpapers and AI chat integration at your fingertips.

For those excited about AI, this is all golden, and I have no doubt that Google will accomplish much, if not all, of what it is setting out to do with its AI plans. But one of the points Google's presenters emphasized is how they would be doing the company's AI rollout responsibly – and on that, I call BS.

The very premise of what Google and other companies like OpenAI have done around AI is, at its core, irresponsible to say the least, and detrimental to human health and safety in many respects – and there's nothing an upbeat, happy presentation about it can do to erase this original sin.

Human connection was fraying already

Being an elder millennial, I have a rather different perspective to that of my younger and older cohorts. My childhood years (I was born in 1981) were distinctly pre-internet. I grew up in Queens, NY, and I was privileged enough to live in a place where I could play outside for hours as a child, and grow up without a lot of the pressures that the internet age puts on kids today.

For example, there was no need to always watch your step and act perfectly, or in an artificial manner, because there were a gajillion moments in life, and while your embarrassing misstep in school might single you out for ruthless mockery in the moment, memories fade, and the mistakes of youth can be quietly packed away as learning experiences and nothing more.

The internet, which landed when I was in junior high school, changed all that. Things on the internet could be permanent in a way they weren't beforehand, but we didn't know it then. It wasn't until social media hit the scene in the late aughts that the full implications of this permanence became clear to see.


Every post on social media could be cause for social rupture, and while I don't think this is inherently bad (racist social media posts only reveal that someone is a racist, for example, something that they would be with or without social media, and which is actually a good thing to know), without question it has changed how we approach the world.

In many ways, it has amplified our personas over ourselves, making us wear the masks that we make for our public selves (which is normal and timeless) more often, so that we inhabit these personas more than we used to, and interact with personas rather than people more than ever before.

And, as we live much of our lives online nowadays, this has inevitably had mental health consequences for many people who are trying to adjust to a way of living that isn't healthy for humans, who are inherently social animals that need actual human-to-human connections to thrive.

Social media, by contrast, replaces genuine human connection with an ongoing dialog of personas that simply isn't healthy for us in the long term – and now Google looks prepared to extend this same problem into more aspects of our day-to-day lives and declare it the future. For the sake of humanity, I hope to hell it isn't.

Google, OpenAI, and all the rest have made an algorithm in the shape of a person

To start with, Google is in a bind as a business. It's not necessarily Google's fault that OpenAI and others have taken generative AIs and set them loose on the world for profit. Google, in classic prisoner's dilemma style, is simply adapting to the marketplace.

That doesn't change the fact that this marketplace ought to be destroyed for the sake of humanity. It is a marketplace created by people who have founded tech startups, and whose only social interactions are with other 'elite' and often asocial tech founders and associated staff members. It's a marketplace created by people who feel like they could just create an AI agent to do their swiping for them on a dating app, and pick their perfect match for them so they don't have to go through the messy human cycle of excitement and disappointment that comes with dating.

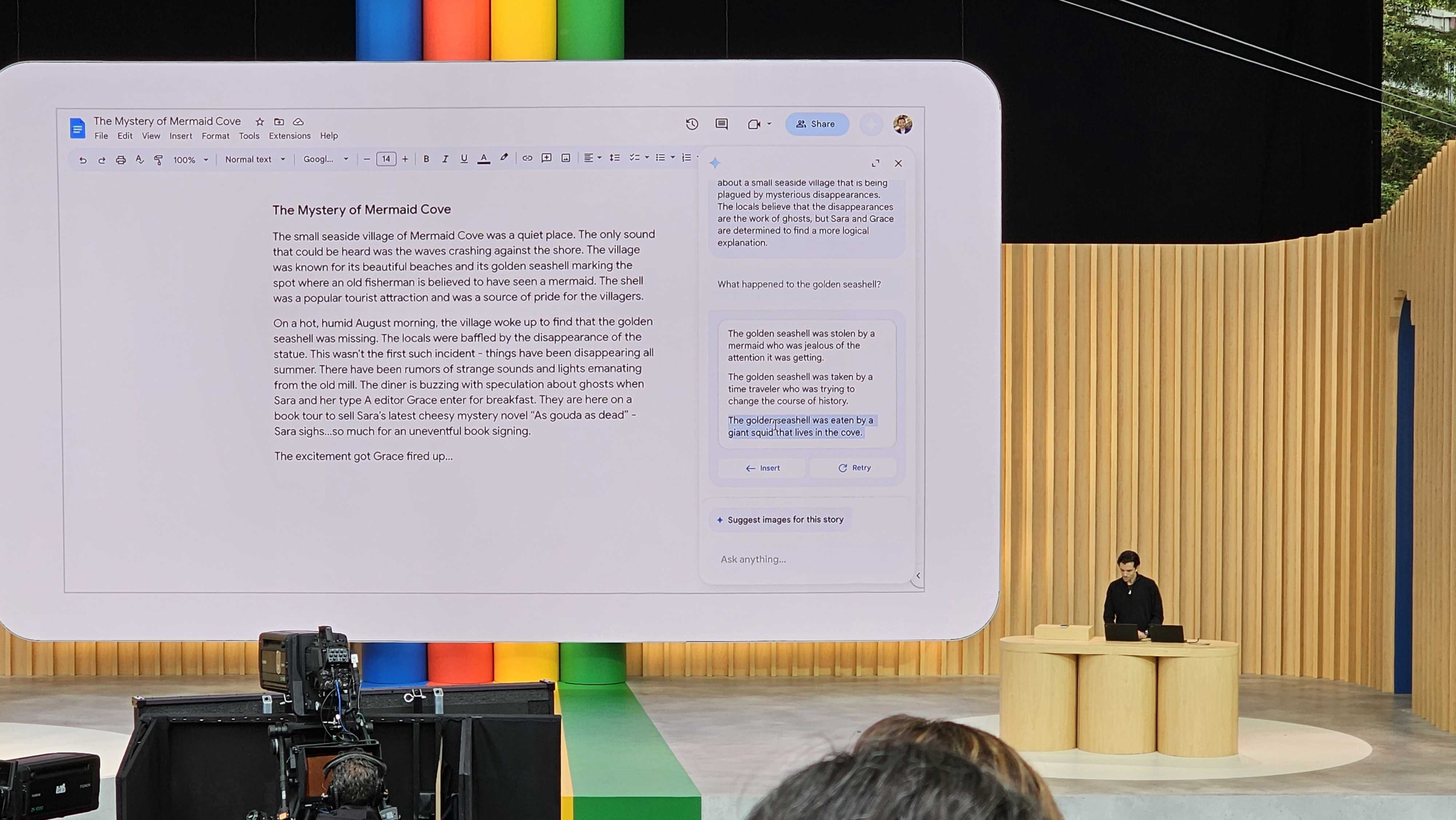

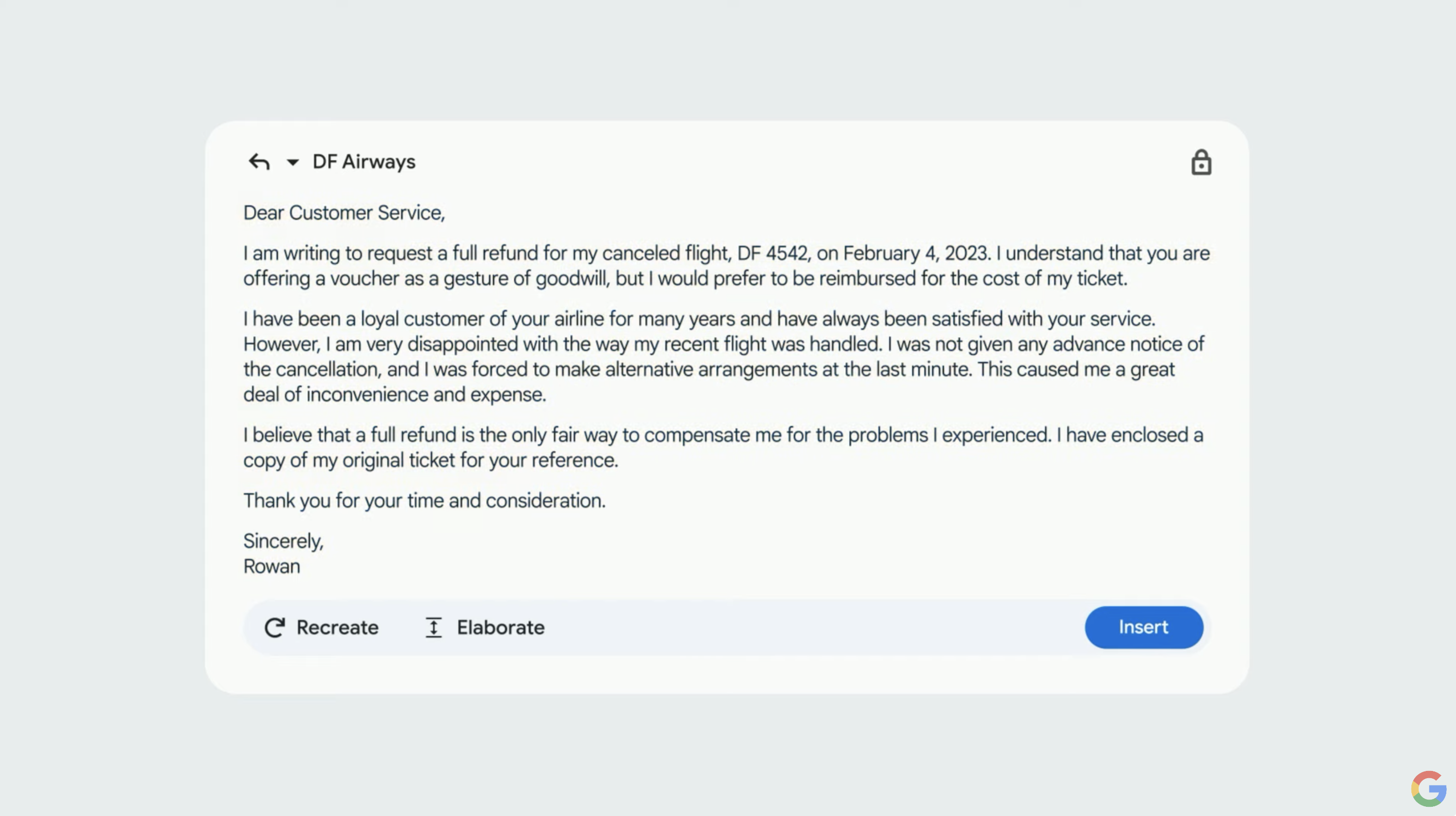

Google is taking that approach to its entire line of productivity apps. An AI will soon be able to write you an email for work with just a prompt or two, based on the content of an email chain. It can even read an email chain and condense it into a brief summary, so you won't actually have to read what others have written. All those in-jokes between colleagues that help tie us together as people at work will presumably not make the cut.

I have a lot of experience with PR emails coming to me reading as if they have been written by an impersonal AI, and let me warn you all, your inbox is about to become a hell-hole. Mine is set to become unusable, as AI-generated pitches by the hundreds and possibly even thousands about topics I do not cover professionally drown out the dozen or so emails I get a week about those topics I do. Whatever you do for work, the result will still be the same, and if this isn't already your experience, my condolences for what's coming.

Then there's Google Docs, and Sheets, and Slides, where you can check your creativity and personality at the prompt and let Google's AI just do it all for you. As a 'starting place', of course.

And of course, no one is going to put so much pressure on you at work in the future that you end up cutting corners and just quickly have Google draft up some work product for you because you don't have the time to actually take that starting place and infuse it with your own intelligence, personality, and dedication.

And it's definitely not going to be an issue where your boss will just tell you to use Google AI to generate your work because it's quicker and "good enough" for the time being (and the time being is ultimately every time), sucking out whatever enjoyment you got from your job. If you hate your job though, maybe Google gets that and is giving you the tools you need to just mail it in at work. It won't be long, though, before your boss starts wondering why they're even paying you when AI can do your job just fine.

Don't want to write a paper for school and actually learn something in the process? Don't worry, Google's Project Tailwind can pretty much do your school papers for you; just give it some sites to pilfer for source material and submit your work.

Your teacher doesn't have the time to thoroughly check your work anymore anyway, since teachers are already overworked as it is, so as long as your essay doesn't contain the words "As a large language model, I can't say personally how I spent my summer vacation, but if I were, I..." you'll probably be fine.

Something tells me that no teacher got into the profession just to check the work of a large language model for terrible pay; but maybe they can find a large language model that can check their students' work for them, and no one has to teach anyone anything, and the models can just work it out amongst themselves.

Google wants you to know its language model is so good that it can pass medical-exam-style questions now, so soon doctors can just text you for a list of symptoms, and you can google the symptoms you need for a doctor's note for your boss, and your doctor can give you the diagnosis you need to get out of work for a few days.

Even your family photos aren't safe, since that one snapshot you took of your kids can be touched up and altered to make it perfect, right on your phone, rather than being the normal snapshot in time of a person you love as they actually were when you took it, bad lighting and all. It will totally help you to be an influencer on Instagram though, since only perfect kids will do for social media.

The list of potential use cases for these new AI tools from Google and others is truly limitless, and they all have one thing in common: they put one more remove between ourselves and the people we used to have to interact with to get anything done, and in the process they devalue us all.

John (He/Him) is the Components Editor here at TechRadar and he is also a programmer, gamer, activist, and Brooklyn College alum currently living in Brooklyn, NY.

Named by the CTA as a CES 2020 Media Trailblazer for his science and technology reporting, John specializes in all areas of computer science, including industry news, hardware reviews, PC gaming, as well as general science writing and the social impact of the tech industry.

You can find him online on Bluesky @johnloeffler.bsky.social