Now Google’s AI can navigate labyrinths faster than humans

Mirroring our brains and achieving better results

Google taught its DeepMind AI to remember things like a human would.

This is unusual. Most AIs specialize in a single area, such as defeating the world’s best Go players, but DeepMind was programmed to apply previous knowledge and skills to new tasks, drawing on a neural network of programmed skills and “memories”.

Now, DeepMind is teaching itself how to organize its own “brain” network. And Google researchers were shocked when, without any input from them, the AI chose to make part of its brain look nearly identical to a human’s.

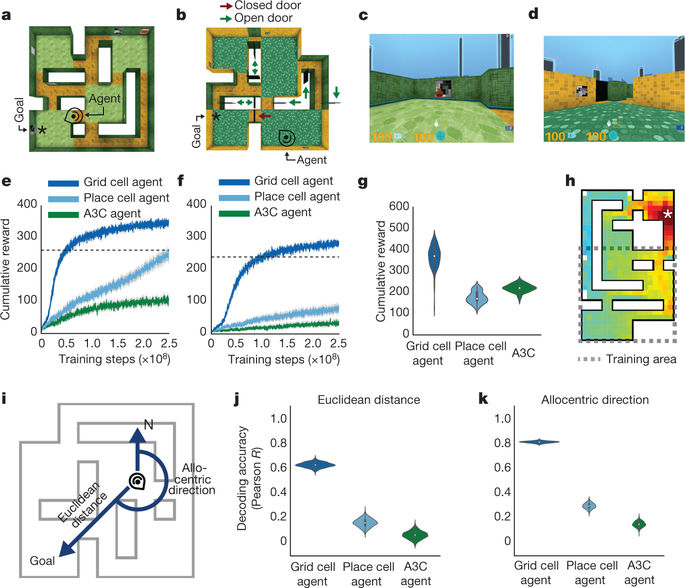

Google’s DeepMind team, in collaboration with University College London (UCL) researchers, stuck the AI in a virtual reality maze to teach it spatial awareness and memorization of patterns, publishing their findings in Nature.

As time went on, it rearranged its electrical grid into a hexagonal pattern (think beehives).

What’s significant about that pattern is that it mirrors the pattern of neural cells found in our own entorhinal cortex, which handles our internal navigation and memory of places—essentially our personal GPS, complete with saved locations of our favorite hangouts.

In 2014, Norwegian researchers won a Nobel prize for their research on grid cells. Arranged in hexagonal grids, these cells fire signals in different directions as you move forward or change directions.

With these cells, the entorhinal cortex forms a mini-map in your head, helping you remember the quickest route to work or guess which direction faces north.

And without any guidance, DeepMind created its own hexagonal, mental mini-map to get out of maze puzzles even faster than human brains can.

“We were surprised how well it worked,” Caswell Barry, a UCL neuroscientist, told the Guardian. “The degree of similarity is absolutely striking.”

Using this human-style network, the AI learned to recognize the most direct route to a goal, and even figured out how to cheat and take shortcuts.

AI brains: a map to understanding our own?

DeepMind naturally emulated an aspect of human brain structure that wasn’t widely known until four years ago.

As Google’s AI gets more intelligent and takes on other tasks, it will likely develop new neural networks that efficiently handle these tasks.

These networks could help researchers better understand how our own brains work. Or, as Barry told the Guardian, “It could be a testbed for experiments you wouldn’t otherwise do.”

Alzheimer’s targets the entorhinal cortex first, then moves on to the hippocampus and other brain regions.

Comparing the grid cells of healthy and diseased brains with the firings of DeepMind’s electrical nodes could provide an interesting avenue of study for neuroscientists hoping to better understand the disease.

For now, DeepMind’s impact is more centered on short-term tech improvements than long-term health breakthroughs.

During the Google I/O 2018 conference this week, Google announced that Android P would have improved battery life and adaptive brightness, based on DeepMind AI technology.

Plus, of course, now that robots can chase after us and we can't even escape them in a maze, we're basically doomed.

Via The Guardian

Michael Hicks began his freelance writing career with TechRadar in 2016, covering emerging tech like VR and self-driving cars. Nowadays, he works as a staff editor for Android Central, but still writes occasional TR reviews, how-tos and explainers on phones, tablets, smart home devices, and other tech.