Microsoft unveils Maia 200, its 'powerhouse' accelerator looking to unlock the power of large-scale AI

Maia 200 looks to push Azure ahead of AI competition

- Microsoft unveils Maia 200 AI hardware

- Maia 200 reportedly offers more performance and efficiency than AWS and GCP rivals

- Microsoft will use it to help improve Copilot in-house, but it will be available for customers too

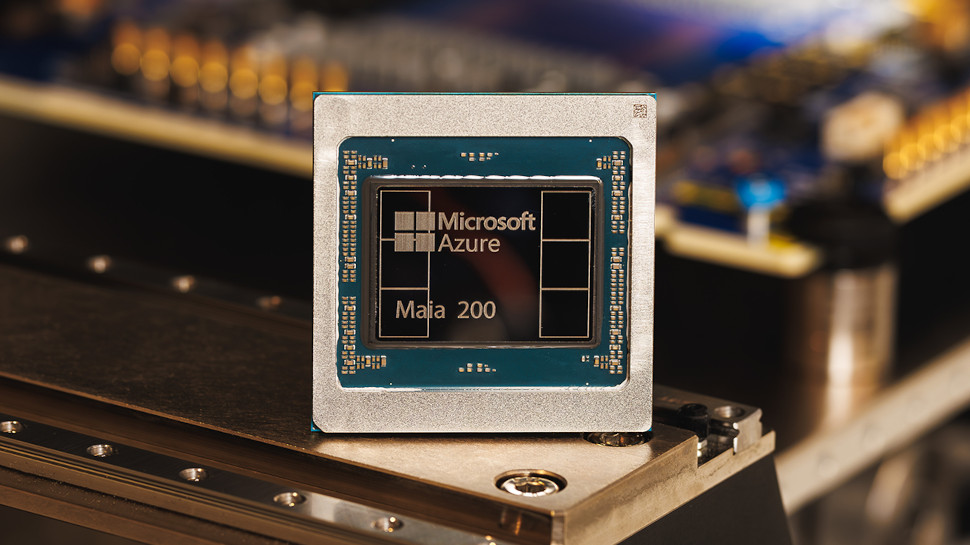

Microsoft has revealed Maia 200, its "next major milestone" in supporting the next generation of AI and inference technology.

The company's new hardware, the successor to the Maia 100, will "dramatically shift the economics of large-scale AI," offering a significant upgrade in performance and efficiency as Microsoft looks to stake a claim in the market.

The launch is also intended to position Microsoft Azure as a faster and more efficient place to run AI models, as the company takes on its major rivals Amazon Web Services and Google Cloud.

Microsoft Maia 200

Microsoft says Maia 200 contains over 100 billion transistors and is built on TSMC's 3nm process, with native FP8/FP4 tensor cores, a redesigned memory system with 216GB of HBM3e at 7TB/s, and 272MB of on-chip SRAM.

This all contributes to the ability to deliver over 10 PFLOPS in 4-bit precision (FP4) and around 5 PFLOPS of 8-bit (FP8) performance - easily enough to run even the largest AI models around today, and with space to grow as the technology evolves.
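To put those headline figures in context, the short sketch below divides the quoted peak throughput by the quoted HBM3e bandwidth to estimate how many operations the chip can perform for each byte it reads from memory. It treats the article's "over 10" and "around 5" PFLOPS figures as exact values, which is an assumption, and the function and variable names are illustrative rather than anything Microsoft has published.

```python
# Figures quoted in the article; "over" and "around" values are treated as exact here.
PEAK_FLOPS = {"FP4": 10e15, "FP8": 5e15}  # operations per second
HBM_BANDWIDTH = 7e12  # bytes per second (7TB/s)


def machine_balance(peak_flops: float, bandwidth: float) -> float:
    """Return the FLOPs available per byte read from HBM at peak rates."""
    return peak_flops / bandwidth


# Hypothetical helper output: one ratio per number format.
balance = {fmt: machine_balance(flops, HBM_BANDWIDTH) for fmt, flops in PEAK_FLOPS.items()}

for fmt, value in balance.items():
    print(f"{fmt}: about {value:.0f} FLOPs per byte of HBM traffic")
```

A high ratio like this suggests that workloads which reuse little data per byte fetched are likely to be limited by memory bandwidth rather than compute, which may be part of why the design leans on on-chip SRAM.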

Microsoft says Maia 200 delivers 3x the FP4 performance of the third-generation Amazon Trainium hardware, and FP8 performance above Google’s seventh-generation TPU, making it Microsoft's most efficient inference system yet.

And thanks to its optimized design, which sees the memory subsystem centered on narrow-precision datatypes, a specialized DMA engine, on-die SRAM, and a specialized NoC fabric for high‑bandwidth data movement, Maia 200 is able to keep more of a model’s weights and data local, meaning fewer devices are required to run a model.
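The link between narrow-precision datatypes and device count can be illustrated with some rough arithmetic. The sketch below estimates the minimum number of accelerators needed just to hold a model's weights in the 216GB of HBM3e quoted above. The 1-trillion-parameter model is hypothetical, decimal gigabytes are assumed, and the estimate ignores the KV cache, activations, and any runtime overhead, so real deployments would need more headroom.

```python
import math

HBM_BYTES = 216e9  # 216GB per Maia 200, per the article (decimal GB assumed)


def weight_bytes(num_params: int, bits_per_param: int) -> float:
    """Bytes needed to store the weights alone at the given precision."""
    return num_params * bits_per_param / 8


def min_devices(num_params: int, bits_per_param: int, hbm_bytes: float = HBM_BYTES) -> int:
    """Lower bound on devices needed to hold the weights (ignores KV cache and activations)."""
    return math.ceil(weight_bytes(num_params, bits_per_param) / hbm_bytes)


PARAMS = 1_000_000_000_000  # hypothetical 1-trillion-parameter model

for bits in (16, 8, 4):
    print(f"{bits}-bit weights: at least {min_devices(PARAMS, bits)} device(s)")
```

Under these assumptions, halving the precision roughly halves the number of devices needed for the weights, which is the effect the article describes.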


Microsoft is already using the new hardware to power its AI workloads in Microsoft Foundry and Microsoft 365 Copilot, with wider customer availability coming soon.

It is also rolling out Maia 200 to its US Central datacenter region now, with further deployments coming to its US West 3 datacenter region near Phoenix, Arizona soon, and additional regions set to follow.

For those looking to get an early look, Microsoft is inviting academics, developers, frontier AI labs and open source model project contributors to sign up for a preview of the new Maia 200 software development kit (SDK) now.


Mike Moore is Deputy Editor at TechRadar Pro. He has worked as a B2B and B2C tech journalist for nearly a decade, including at one of the UK's leading national newspapers and fellow Future title ITProPortal, and when he's not keeping track of all the latest enterprise and workplace trends, can most likely be found watching, following or taking part in some kind of sport.
