“The AI data centers of 2036 won’t be filled with GPUs”: FuriosaAI’s CEO on the future of silicon

We talk with FuriosaAI’s June Paik about AI hardware, startup survival, and the future of inference chips


AI acceleration hardware is becoming increasingly expensive, with next-generation chips demanding high power budgets and major infrastructure investment. For many startups and smaller enterprises, the cost and complexity of deploying AI at scale creates barriers long before software development even begins.

New silicon players such as FuriosaAI are trying to rethink that equation, focusing on efficiency, performance, and alternative approaches to the GPU-dominated market.

Founded in South Korea, FuriosaAI develops AI inference chips designed to deliver high performance while reducing power consumption and data center strain. Its latest processor, RNGD, is built around the company’s Tensor Contraction Processor architecture and aims to run demanding AI models without relying on traditional GPU frameworks.

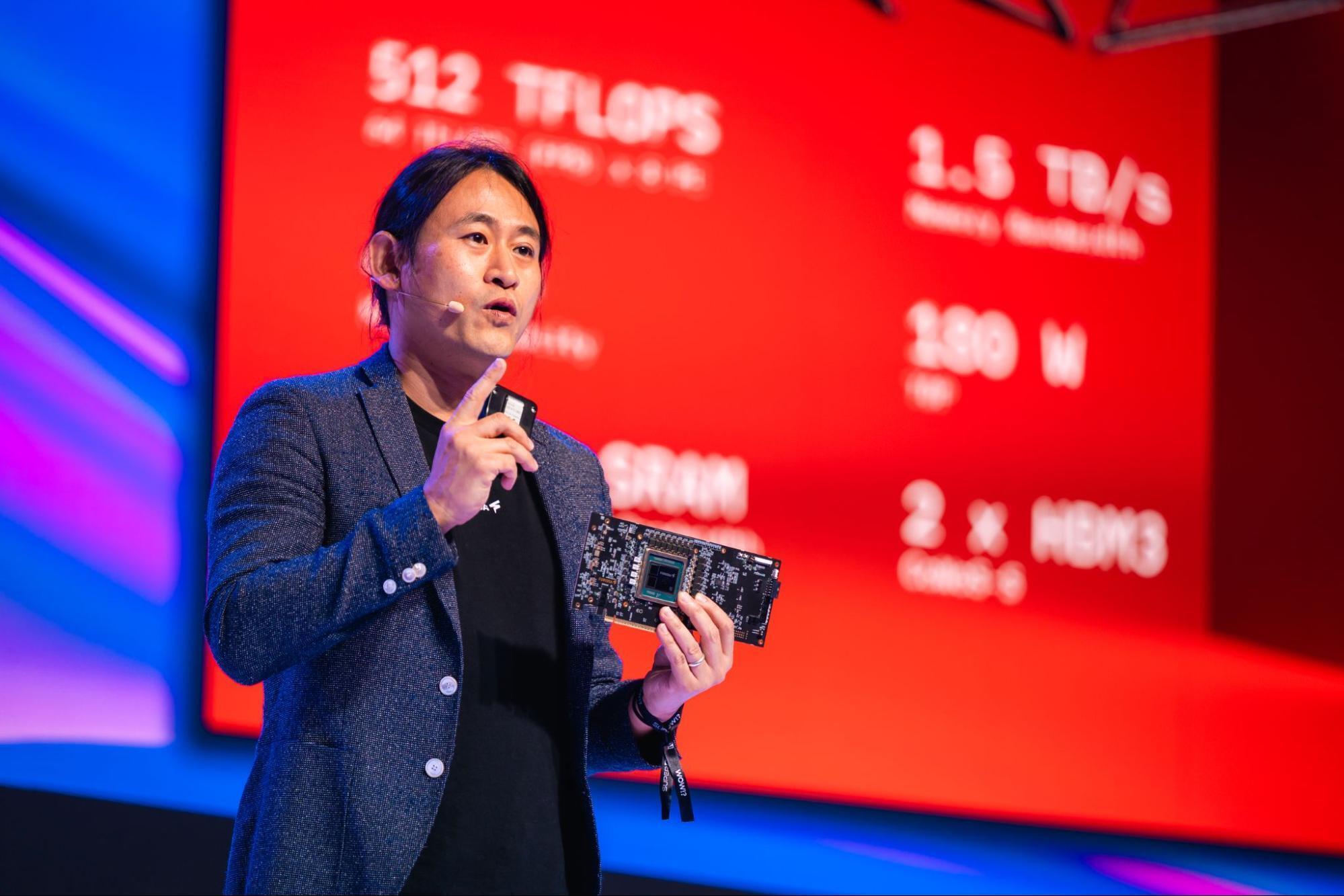

June Paik, CEO and co-founder of FuriosaAI, previously worked as a hardware and software engineer at AMD and Samsung before launching the company in 2017. We spoke with him about the challenges facing AI silicon startups, the future of data centers, and how FuriosaAI plans to compete in an industry shaped by energy limits, infrastructure costs, and Nvidia’s long-standing dominance.

- Historically, Japan and Europe produced some great chip companies (STMicroelectronics, NEC, etc.). Why are there so few AI silicon players outside of China and the US?

First, I’d challenge your premise, at least slightly: Very few hardware startups anywhere have made inroads against Nvidia’s dominance in AI. Even a decade and a half after AlexNet, we are still in the early days of this industry. And some AI hardware innovators, such as Hailo and Axelera, have in fact emerged outside of the U.S. or China.

But the list is short for structural reasons.

Unlike crypto mining, where the algorithms are fixed and simple ASICs work well, AI is advancing and evolving rapidly.


Building a chip for a moving target requires both hardware and compiler expertise, which tends to cluster in places with a strong semiconductor heritage. There are also only a few places in the world that have deep relationships with fabs and chip manufacturing partners.

This is why being based in South Korea was actually a competitive advantage for Furiosa.

We had access to talent from world-class engineering programs at Korean universities and Korean tech giants. We were also able to develop partnerships with leading companies in Korea (like SK Hynix, which provides HBM3 for our second-generation inference chip, RNGD) and elsewhere in Asia (like our foundry partner, TSMC, in Taiwan).

Distance from Silicon Valley also forced us to take a very disciplined approach. Furiosa launched with just $1 million in seed funding, and we spent several years refining our ideas before shipping silicon.

We committed fully to our tensor contraction-based approach and were able to ignore conventional wisdom and hype in the Valley.

But hardware must compete in a global marketplace; we can't just be a regional provider. Now that we are shipping RNGD in volume, we are working with enterprise customers all around the world.

- Hardware is only part of the equation and Nvidia has taken years to build its now infamous software moat (CUDA). What is Furiosa doing to compete with its formidable adversary?

Trying to replicate Nvidia’s massive CUDA library is a strategic dead end.

We took a bolder path by co-designing our hardware and software from first principles specifically for AI, so we wouldn’t have to recreate CUDA.

Our chips use Furiosa’s proprietary Tensor Contraction Processor (TCP) architecture, which natively executes the multidimensional math of deep learning rather than forcing it into the legacy structures used by GPUs.

This allows our compiler to optimize models without needing thousands of hand-tuned kernels.
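To make "tensor contraction" concrete: most deep learning operators can be written as a single contraction over shared tensor axes. The sketch below uses NumPy's `einsum` purely as a generic illustration; it is not Furiosa's SDK or compiler API, and the function names `dense_layer` and `attention_scores` are hypothetical. It shows that a dense layer and multi-head attention scores are the same kind of operation, just with different index patterns.

```python
import numpy as np

# Generic illustration only: this is NumPy, not Furiosa's TCP toolchain.
rng = np.random.default_rng(0)

def dense_layer(x, w):
    # (batch, in) x (in, out) -> (batch, out): contract over the shared "i" axis.
    return np.einsum("bi,io->bo", x, w)

def attention_scores(q, k):
    # (batch, heads, seq_q, dim) x (batch, heads, seq_k, dim)
    # -> (batch, heads, seq_q, seq_k): contract over the head dimension "d",
    # then apply the usual 1/sqrt(d) scaling.
    return np.einsum("bhqd,bhkd->bhqk", q, k) / np.sqrt(q.shape[-1])

x = rng.standard_normal((2, 8))
w = rng.standard_normal((8, 4))
q = rng.standard_normal((2, 3, 5, 16))
k = rng.standard_normal((2, 3, 7, 16))

print(dense_layer(x, w).shape, attention_scores(q, k).shape)  # (2, 4) (2, 3, 5, 7)
```

On a GPU, each index pattern like these is typically mapped onto matrix-multiply kernels; the argument in the interview is that hardware which treats the contraction itself as the native primitive leaves more of that mapping to the compiler.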

RNGD, which is now in mass production, demonstrates the advantages of our approach. It delivers high-performance inference for the world's most demanding models while consuming just 180 watts (compared to the 600 watts or more required by GPUs).

Global partners like LG AI Research have verified this breakthrough efficiency in production.

We’ve also broken the lock-in of CUDA by building our software stack to integrate seamlessly with standard tools like PyTorch and vLLM so developers can access this performance without changing their workflow.

- The biggest hyperscalers (Google, Microsoft, Amazon) are gradually building their own AI chips to reduce their reliance on third parties. Where does Furiosa (and others) fit in that picture?

The current GPU paradigm is creating extreme energy challenges and infrastructure bottlenecks for the entire industry, including hyperscalers. We see a future defined by heterogeneous compute, where different architectures work side-by-side to serve different needs (such as training vs. inference) most effectively.

Because we prioritize total cost of ownership (TCO), power efficiency, and flexibility, our technology will play a central role in solving these challenges for everyone.

Our focus right now is on four specific sectors that are feeling the power and infra headaches most acutely:

● Nations and regulated industries need to work with sensitive data on-premise, not in a public cloud. RNGD allows them to deploy high-performance inference within their existing power envelopes, ensuring data sovereignty without requiring massive new infrastructure projects.

● For enterprise customers, TCO and flexibility are king. RNGD fits into standard, 15kW air-cooled racks and avoids the expensive liquid-cooling retrofits required by legacy GPUs, making it the fastest, most cost-effective way to scale.

● Regional and specialized clouds need to compete with the Big 3 on margins. RNGD’s high compute density allows these cloud service providers (CSPs) to maximize revenue per rack while keeping OpEx low.

● Other sectors, like telcos, are working with power-constrained data center environments at the network edge. RNGD’s power efficiency addresses their needs, too.
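The rack argument above comes down to simple power-budget arithmetic. The sketch below plugs in the figures quoted in this interview (a 15 kW air-cooled rack, about 180 W per RNGD card, 600 W or more per GPU). It assumes, unrealistically, that the whole rack budget goes to accelerators; real racks also power host CPUs, memory, networking, and cooling, so the results are upper bounds, not deployment guidance. The helper name `max_accelerators` is hypothetical.

```python
# Figures from the interview; the "all power goes to accelerators" simplification is an assumption.
RACK_BUDGET_W = 15_000

def max_accelerators(rack_budget_w: float, accel_power_w: float) -> int:
    """Upper bound on how many accelerators a rack's power budget can feed."""
    if accel_power_w <= 0:
        raise ValueError("accelerator power must be positive")
    return int(rack_budget_w // accel_power_w)

for name, watts in [("RNGD (180 W)", 180), ("GPU (600 W)", 600)]:
    print(f"{name}: up to {max_accelerators(RACK_BUDGET_W, watts)} per rack")
# RNGD (180 W): up to 83 per rack
# GPU (600 W): up to 25 per rack
```

Card count alone says nothing about throughput per card; the comparison only becomes meaningful once it is combined with measured performance for a given model.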

Our next-generation chip, currently in development, will target hyperscalers directly alongside these key sectors.

- How do you see the data center evolving? If you visited a data center in 2036, what would you see inside?

In 10 years, “data center” will mean many different things, just as “computer” has come to refer to everything from your smart watch to a high-performance server.

Some data centers will be the kind of futuristic setup you’re imagining: enormous and (probably) fusion-powered or orbiting the Earth. But others will be small and hyperefficient.

For example, your local hospital will have an on-prem AI data center to run intelligent assistants for doctors and nurses, keeping latency low and data local and secure.

Telcos will have many high-performance “edge” AI data centers optimized for very low latency.

One thing I’m sure of is that the AI data centers of 2036 won’t be filled with GPUs. They will hold a variety of AI-specific silicon for different needs.

That’s in part because of GPUs’ power inefficiencies. But fundamentally there’s broad agreement that the GPU architecture isn’t ideal for AI.

GPU makers have worked to bridge that gap with innovations like adding tensor cores to their chips, but ultimately the benefits of moving from GPUs to AI-first architectures will be too great to ignore.

- Without giving away too many details of your roadmap, will Furiosa silicon follow the traditional path of AI products?

Our focus now and with future products is delivering what enterprise customers need most: high-performance data center inference that is also power efficient, cost-effective, and easy to deploy without huge infrastructure upgrades.

That means prioritizing metrics like tokens per watt and tokens per rack for greater compute density.
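One common way to express these metrics is throughput divided by power (tokens per second per watt, equivalently tokens per joule) and throughput multiplied by the number of accelerators a rack can hold. The sketch below is an assumption about how such metrics are computed, not Furiosa's published methodology; the `Measurement` class and its fields are hypothetical, and the inputs must come from your own benchmarks for a specific model, batch size, and sequence length.

```python
from dataclasses import dataclass

@dataclass
class Measurement:
    # Hypothetical inputs you would measure yourself for one accelerator.
    tokens_per_second: float  # sustained generation throughput
    power_w: float            # average power draw while serving

def tokens_per_watt(m: Measurement) -> float:
    # (tokens/s) / W, which is the same quantity as tokens per joule.
    return m.tokens_per_second / m.power_w

def tokens_per_rack(m: Measurement, accelerators_per_rack: int) -> float:
    # Aggregate rack throughput, assuming near-linear scaling across cards.
    return m.tokens_per_second * accelerators_per_rack
```

Tokens per watt rewards efficient chips, while tokens per rack also rewards density, which is why a lower-power chip that fits more cards into a fixed power envelope can come out ahead even if each card is slower.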

We will also aggressively leverage new industry advances, like smaller nodes and new memory technologies.

Our first-generation chip used a 14-nanometer node. Our RNGD chip uses HBM3 and a 5-nanometer node, and we’ll continue to advance. But it’s our architectural innovation that enables us to achieve better, more energy-efficient performance than GPUs built on the same node.

One more thing to note about our roadmap is that it’s as much about software as hardware. Furiosa has more software engineers than hardware engineers because we must continue to support new models and deployment tools quickly and effectively.

We released three major SDK updates in 2025 and will keep that pace in 2026 and beyond.

- Furiosa hails from South Korea. How do the huge chaebols (Samsung, LG, Hyundai, SK Hynix) view Furiosa? What partnerships currently exist?

We’ve worked with major Korean tech leaders as both partners and customers: Samsung Foundry manufactured our first-generation chip, SK Hynix supplies the HBM3 memory for RNGD, and LG is a flagship enterprise customer currently deploying our silicon. These are deep relationships forged over many years.

Beyond conglomerates, we are working with globally competitive, Korea-based AI startups like Upstage, and we benefit from Korea’s AI-savvy consumers and large industrial base across auto, shipbuilding, and other sectors.

AI is also the top policy priority of the Korean government. Korea’s president has set forth ambitious initiatives to become a global AI powerhouse, with AI chips at the center.

We’re very excited about Korea’s momentum in AI, and this will enhance the new ecosystem we are building to move the industry beyond GPUs.


Désiré has been musing and writing about technology during a career spanning four decades. He dabbled in website builders and web hosting when DHTML and frames were in vogue and started narrating about the impact of technology on society just before the start of the Y2K hysteria at the turn of the last millennium.

- Wayne Williams, Editor
