Do more with graphics: power up with GPGPU

The GPGPU revolution is more like a slow spin.

If you're an average home user, what has GPGPU ever done for you other than provide another bullet point on a graphics card manufacturer's box?

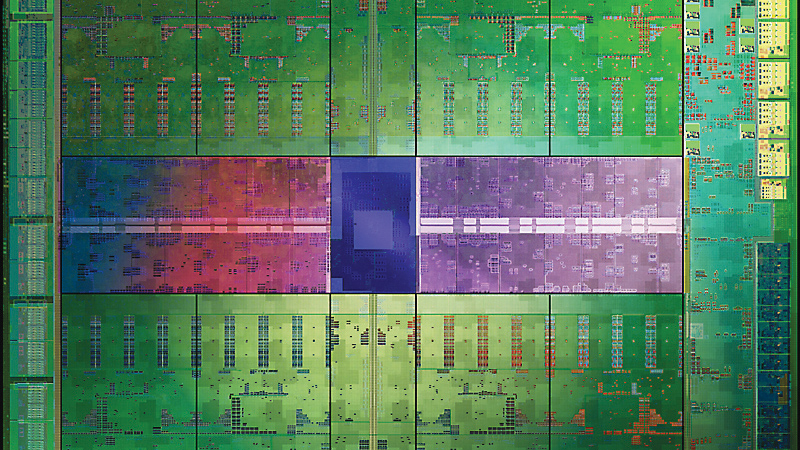

The truth is that professional high-performance computing offered via GPGPU is big business. Around a quarter of Nvidia's $1 billion turnover comes from 'professional' services, if you know what we mean. And we mean supercomputing. For specific applications – often 3D rendering and scientific modelling – GPGPU networked render farms provide a cost-effective way of delivering massive computational power.

In days gone by, if you wanted teraflops of processing power you'd trundle over to the likes of IBM or Cray with your cheque book open, and let them get on with designing a bespoke supercomputer system with cryogenic cooling. Today, off-the-shelf components can do the same work thanks to bespoke software solutions.

All this hard development work eventually trickles down to the lowly individual users like you and me.

The bad news, depending on your outlook, is that even after being trickled, GPGPU tools all tend to be heavily maths biased. Great if you love maths, but who does?

Do the maths

Maths isn't a bad thing; ultimately 3D games are maths in the form of matrix transformations. It's just that any GPGPU function needs to work at a similar level, applying the same arithmetic across large batches of data. This limits applications until conditional branching becomes mainstream, which is happening, but slowly.
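To make the matrix point concrete, here's a minimal sketch in plain Python of the sort of work a GPU shader does: one rotation matrix applied to every vertex in a list. The function names and the sample vertices are our own, made up for illustration. A real GPU would run the per-vertex step across hundreds of shader units at once, rather than in a loop.

```python
import math

def rotation_z(angle_degrees):
    """Build a 3x3 matrix that rotates points around the Z axis."""
    a = math.radians(angle_degrees)
    c, s = math.cos(a), math.sin(a)
    return [
        [c, -s, 0.0],
        [s, c, 0.0],
        [0.0, 0.0, 1.0],
    ]

def transform(matrix, vertex):
    """Multiply one 3D vertex by the matrix (three dot products)."""
    return tuple(sum(m * v for m, v in zip(row, vertex)) for row in matrix)

def transform_all(matrix, vertices):
    """Apply the same matrix to every vertex.

    Each vertex is handled independently with identical arithmetic and
    no branching, which is why this kind of job suits a GPU so well.
    """
    return [transform(matrix, v) for v in vertices]

# Hypothetical sample data: three vertices rotated by 90 degrees.
vertices = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (1.0, 1.0, 2.0)]
rotated = transform_all(rotation_z(90), vertices)

for before, after in zip(vertices, rotated):
    print(before, "->", tuple(round(x, 3) for x in after))
```

Notice that there isn't a single `if` in the per-vertex code. The moment each item needs its own decision path, the work stops mapping neatly onto a bank of shaders all doing the same thing.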

From the other direction, most GPGPU programs support all cards. With the exception of dedicated Nvidia CUDA builds, the main difference is the amount of work the card is capable of, and so its ultimate top speed. In some cases it's quite possible that an older graphics card could be outperformed by a more modern processor, even though our encoding tests still saw a fairly poor Nvidia 6600 GT doing relatively well.

So what programs can you hunt down that will take advantage of your lazy, good-for-nothing GPU shaders? Well, to start, WinZip offers OpenCL acceleration for compressing and decompressing files with a 20-30 per cent increase in speed.

One of the original uses, and one that remains strong, is cracking encryption and passwords. Have a look at CRARk. A clever play on Crack RAR, this unfriendly-looking command line program is in fact a hardcore RAR password cracker, which uses GPGPU to increase attack speeds by up to 20-fold. In its benchmark mode, password checks jumped from 283 per second to 4,281 per second, a gain of about 15 times. We doubt it'll be particularly useful, but it's a real example of what can be achieved. If you want something slightly easier to use, try Parallel Recovery.
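For a feel of what those benchmark figures mean in practice, here's a rough back-of-envelope sketch in Python. The two rates are the ones quoted above. The six-letter, lowercase-only password is purely our own assumption for illustration, and a real attack would rarely need to try every candidate.

```python
CPU_RATE = 283    # password checks per second without GPGPU (benchmark figure)
GPU_RATE = 4281   # password checks per second with GPGPU (benchmark figure)

def speedup(before, after):
    """How many times faster the second rate is than the first."""
    return after / before

def seconds_to_exhaust(alphabet_size, length, rate):
    """Worst-case time to try every password of a fixed length."""
    candidates = alphabet_size ** length
    return candidates / rate

# Assumed example: six characters, lowercase letters only (26 symbols).
cpu_seconds = seconds_to_exhaust(26, 6, CPU_RATE)
gpu_seconds = seconds_to_exhaust(26, 6, GPU_RATE)

print(f"Speed-up: {speedup(CPU_RATE, GPU_RATE):.1f}x")
print(f"Without GPGPU: {cpu_seconds / 86400:.1f} days")
print(f"With GPGPU: {gpu_seconds / 3600:.1f} hours")
```

Under those assumptions, the worst case shrinks from the better part of a fortnight to something under a day.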

Another oldie but goodie is Folding@Home. This was – and still is – one of the best known applications of GPGPU, which was made extra famous by taking advantage of the PlayStation 3 Cell processor. Equally clever is the distributed modelling system that hands out work tasks to individual systems. However, despite its cleverness and the fact that it could be helping humanity advance, Folding@Home doesn't actually do anything practical.

Practical uses

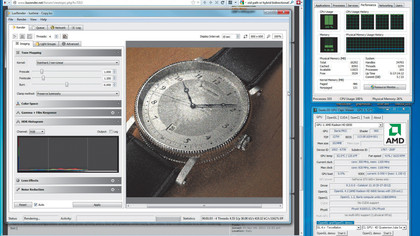

The first truly useful program is Musemage, an image processor written from the ground up around GPGPU acceleration. This makes it lightning-fast, and enables it to apply filters, effects and image manipulations in real time. It's an impressively swift package, and it's interesting how a little load on the GPU makes for a huge gain in program performance. For example, adjusting blur levels adds just a 5 per cent GPGPU load.

The same thing is coming to GIMP via a technology called GEGL, but this isn't due to be fully implemented until version 2.10. There was talk of it being partially implemented on certain filters for 2.8 RC1, but that seems to be unavailable for now.