Adobe's new AI tools could be a ChatGPT moment for video editing

Video editing is about to get a whole lot easier (and quicker)

ChatGPT and Midjourney may be the frontrunners of the AI-powered revolution in chatbots and image creation, but giants like Adobe aren't going to be left behind – and the company behind Photoshop has previewed some new AI tools that could completely democratize video editing.

In March we saw the arrival of Adobe Firefly, the company's new family of generative AI tools. And now Adobe has previewed exactly how Firefly will power its video, audio, animation and motion graphics apps, which could include beginner-friendly video editors like Adobe Premiere Rush or Spark Video.

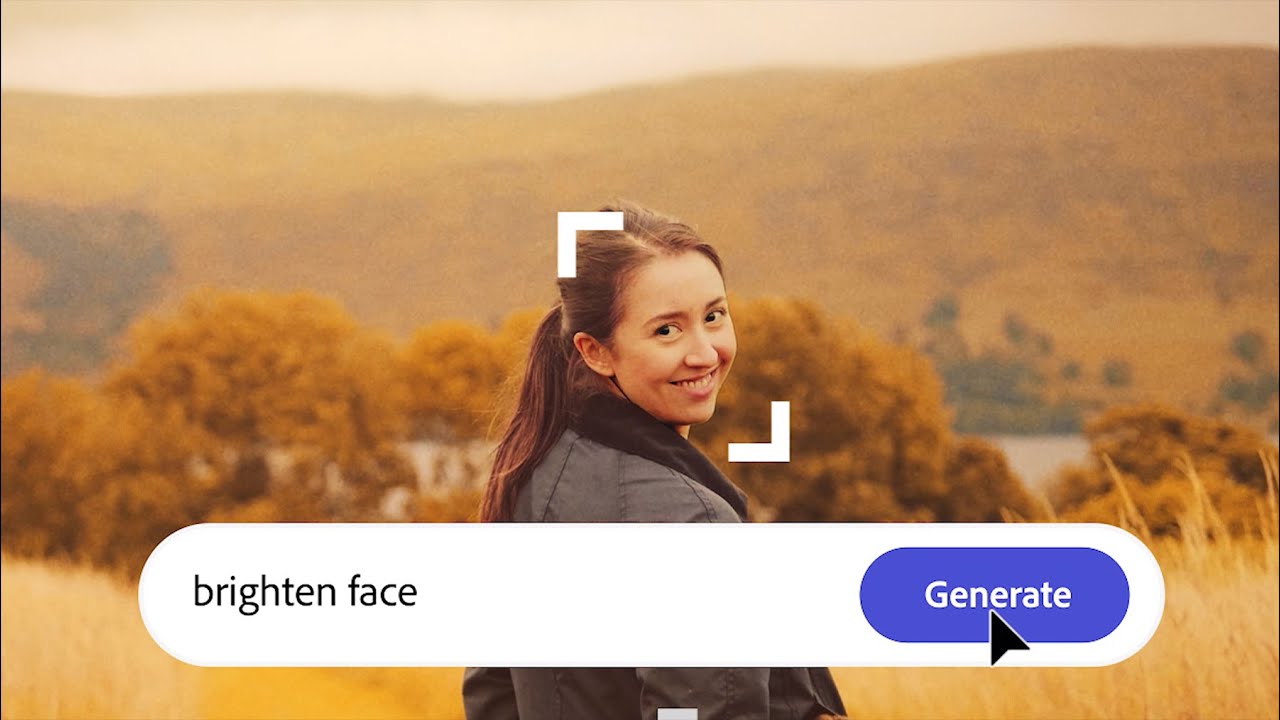

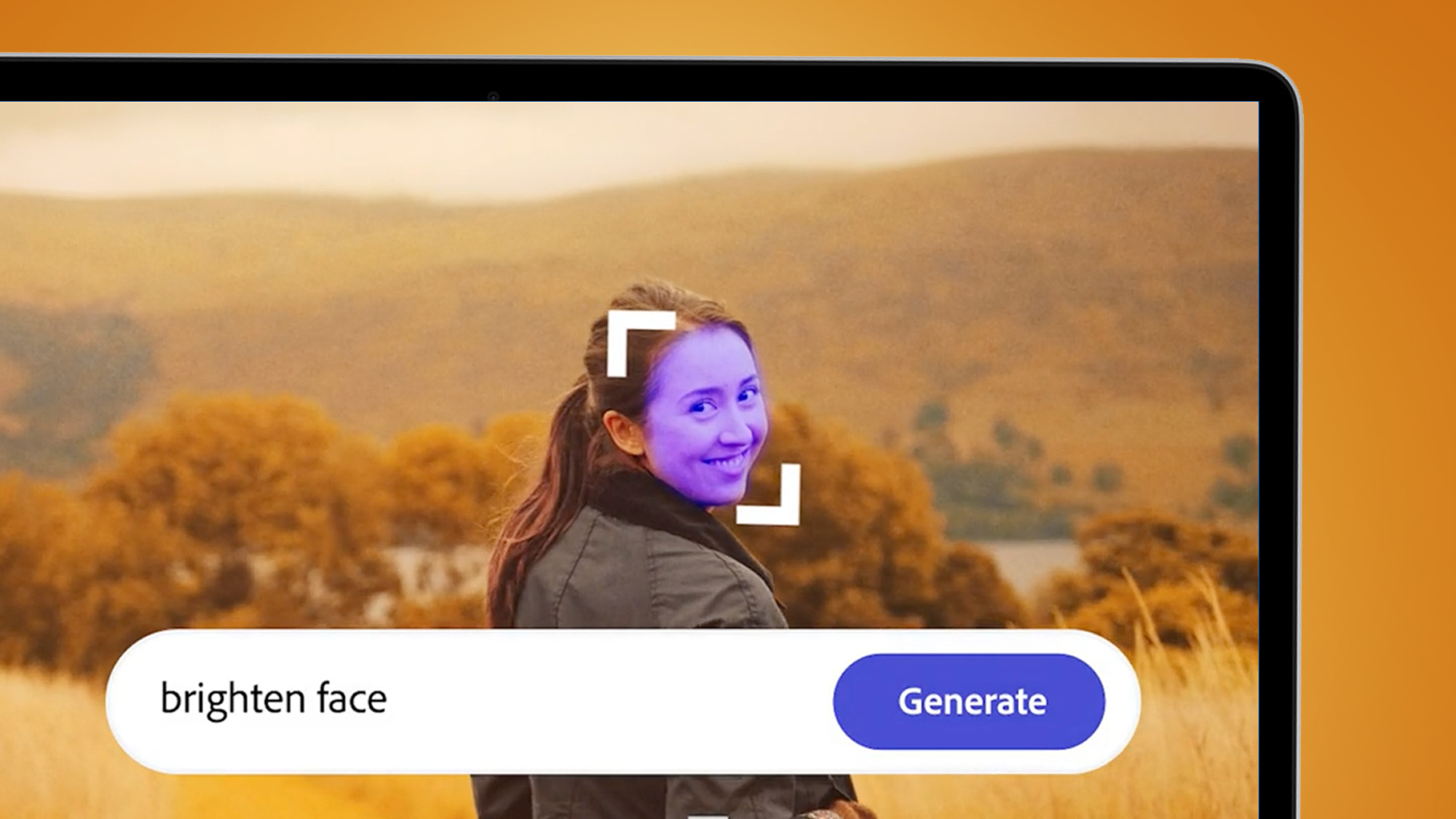

While the features are only previews, rather than imminent updates, it's clear how powerful these text-driven tools are going to become. We've already seen how useful text-based editing is going to be in Premiere Pro, but these new tools look particularly suitable for novices – in fact, they could completely negate the need to learn complex video editing tools in most situations.

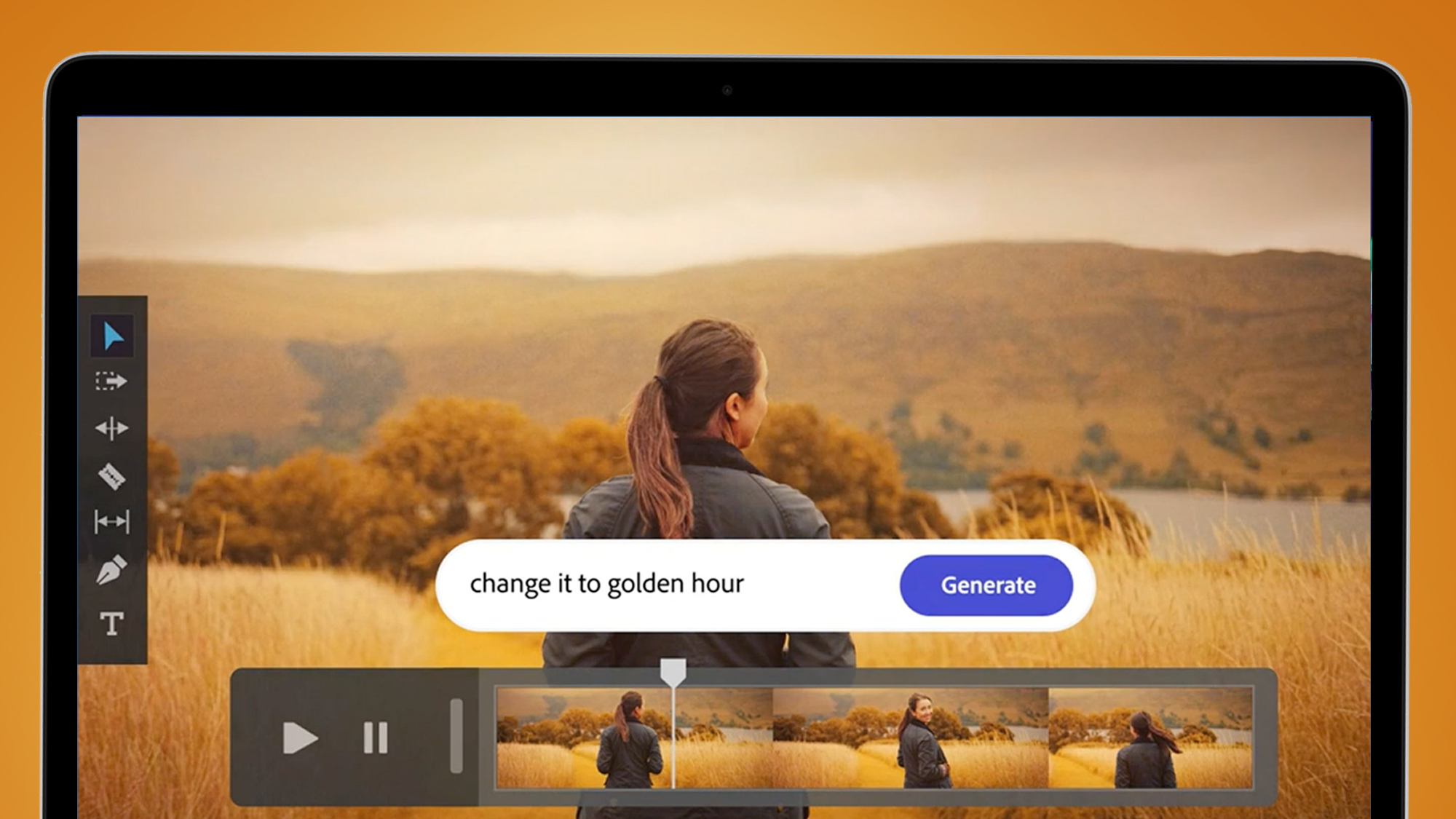

One example shown by Adobe is text-to-color enhancements for videos. Type in the time of day, season or color scheme you want for an existing video, and it'll be able to apply the necessary edits. Even vague prompts like "make this scene feel warm and inviting" will work in Firefly-powered programs.

Not that you'll be limited to automated color grading. Pretty much every aspect of a video's creation, from music, sound effects and text to logos and b-roll, will be editable in new AI-powered text boxes like the one we've seen in ChatGPT.

In the company's 'Meet Adobe Firefly for Video' demo, a prompt for "bright, adventurous, cheerful music" (just type your terms in, and hit 'generate') adds a royalty-free background tune for a video, along with the option to add ocean foam sound effects to match the scene.

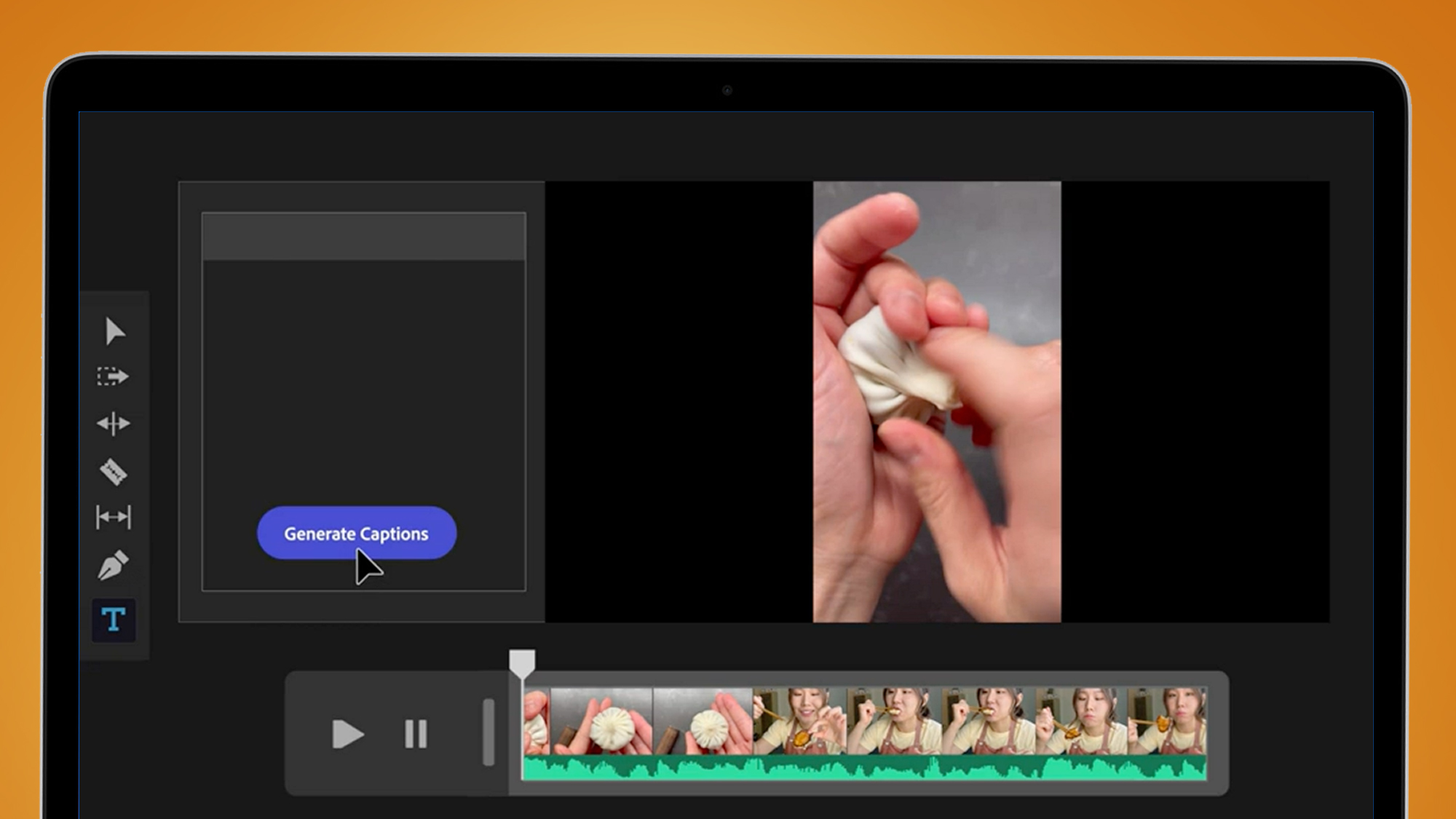

The time-saving potential of these new AI tools is also clear from the 'generate captions' demo, which shows a transcript being analyzed and split into perfectly-timed captions for a short-form social video. Similarly, a 'Find b-roll' button scans through an auto-generated script and drops suitable cutaway clips into a video timeline.

Perhaps the most mind-blowing idea of all, though, is a 'generate storyboards' button, which again scans a written script (albeit one with clearer, human-made signposting like 'wide shot' and 'close up') and sketches out an entire sequence of shots for you (or your video shooter) to follow.

Clearly, these editing concepts are in their early stages, and there's no timeline for their rollout across Adobe's programs. Given that we're also in something of an AI hype bubble, we'll withhold judgement until we see them ship in Adobe apps and can try them in the real world.

But the potential for video creation, particularly for anyone who makes commercial or social media shorts, is huge – and Adobe is in the best place to make it all viable, given that its models have apparently been trained on openly-licensed content and public domain content on which the copyright has expired.

Analysis: the power of prompts

Just like the best AI art generators, Adobe's incoming Firefly tools for video aren't a replacement for genuine creativity or skilled video creators. But they will likely open up basic video-editing skills to a whole new audience, and take the usability of apps like Adobe Premiere Rush to a whole new level.

There's also a strong time-saving element to tools like text-to-color enhancements and automatic b-roll generation – the time it takes to edit a video, including adding music, sound effects and captions, could be cut drastically if the tools work as well as they do in the demos.

Just like ChatGPT, the key skill required is likely to be learning which prompts to use to achieve your desired edit or effect. One of the main benefits of Firefly appears to be its natural language processing and ability to understand vague statements like "make this scene feel warm and inviting". But you'll still need to know how to describe the look you want – and the more specific you are, the better the final result will be.

Still, the big change is that we're moving from a world of video editing apps that are packed with arcane symbols and jargon to ones with simple text boxes that can understand vague prompts – and that's only going to open up video editing software to a wider audience.

Mark is TechRadar's Senior news editor. Having worked in tech journalism for a ludicrous 17 years, Mark is now attempting to break the world record for the number of camera bags hoarded by one person. He was previously Cameras Editor at both TechRadar and Trusted Reviews, Acting editor on Stuff.tv, as well as Features editor and Reviews editor on Stuff magazine. As a freelancer, he's contributed to titles including The Sunday Times, FourFourTwo and Arena. And in a former life, he also won The Daily Telegraph's Young Sportswriter of the Year. But that was before he discovered the strange joys of getting up at 4am for a photo shoot in London's Square Mile.