I finally tried the Meta AI in my Ray-Ban smart glasses thanks to an accidental UK launch – and it's by far the best AI wearable

'Look and Ask' is flawed, but surprisingly useful

Officially, the Meta AI beta for the Ray-Ban Meta smart glasses is only available in the US and Canada, but today I spotted that it had rolled out to my Meta View app here in the UK. So I’ve been giving the feature a test run in and around London Paddington, near our office.

Using the glasses’ in-built camera and an internet connection, I can ask the Meta AI questions as I would any other generative AI – such as ChatGPT – with the added benefit of providing an image for context by beginning a prompt with “Hey Meta, look and …”

Long story short, the AI is – when it works – fairly handy. It wasn’t 100% perfect, struggling at times due to its camera limitations and an overload of information, but I was pleasantly surprised by its capabilities.

Here’s a play-by-play of how my experiment went.

I'm on an AI adventure

Stepping outside our office, I started my Meta AI-powered stroll through the car park and immediately asked my smart specs to do two jobs: identify the trees lining the street and then summarize a long, info-packed sign discussing the area’s parking restrictions.

On the trees task it straight up failed – playing a few searching beeps before returning to silence. Great start. But with the sign, the Meta AI was actually super helpful, succinctly (and accurately) explaining that to park here I needed a permit or I’d risk paying a very hefty fine, saving me a good five minutes I’d have spent deciphering it otherwise.

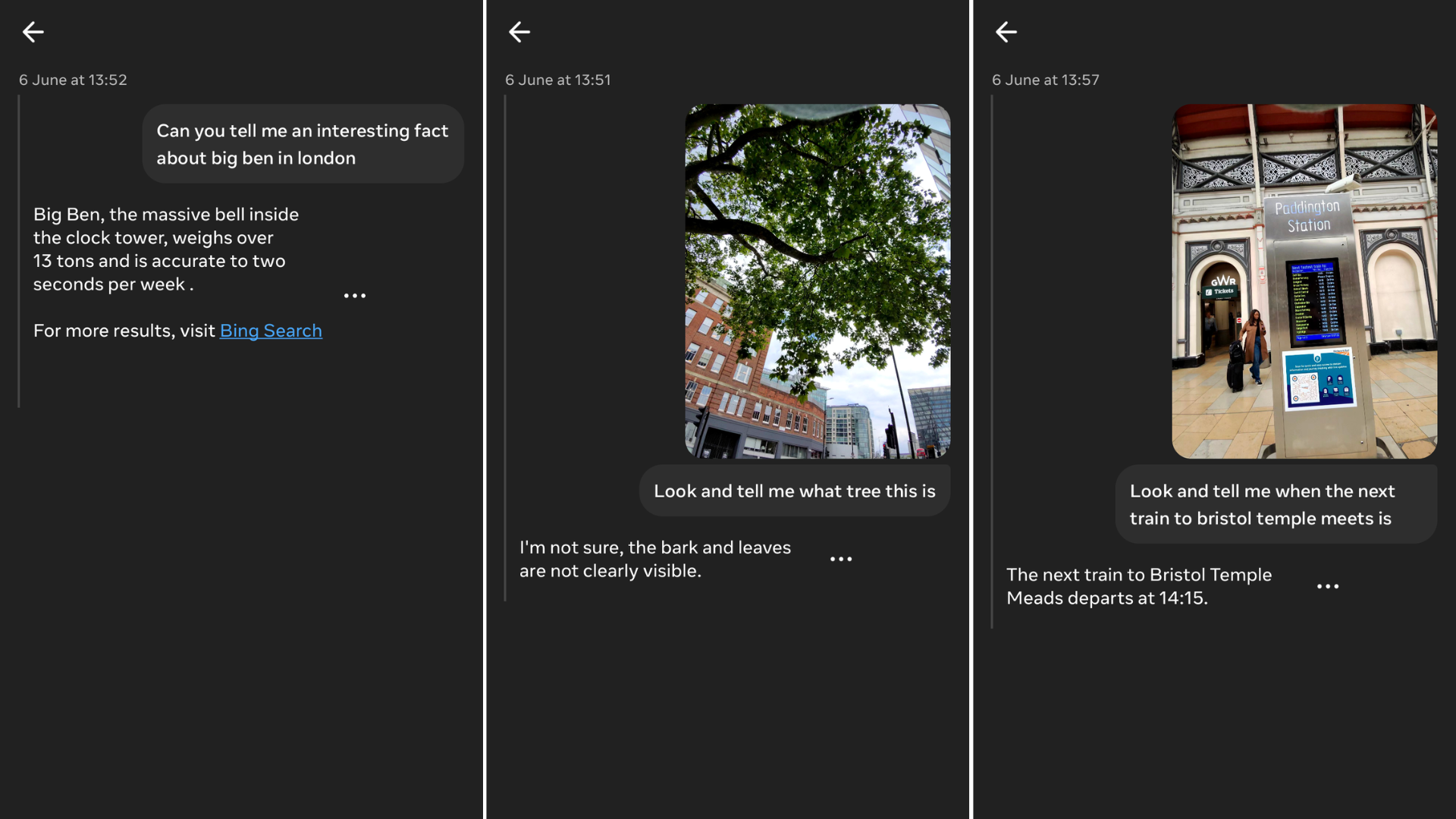

Following this mixed success, I continued walking towards Paddington Station. To pass the time, I asked the specs questions about London like I was a bona fide tourist. They provided some interesting facts about Big Ben – reminding me the name refers to the bell, not the iconic clock tower – but admitted they couldn’t tell me if King Charles III currently resides in Buckingham Palace or if I’d be able to meet him.

Admittedly, this is a tough one to check even as a human. As far as I can tell he’s living in Clarence House, which is near Buckingham Palace, but I can’t find a definitive answer. So I’ll mark this test as void and appreciate that at least the AI told me it didn’t know instead of hallucinating (a technical term used when AI makes things up or lies).

I also tried my initial tree test again with a different plant. This time the glasses said they believed it was a deciduous tree, though they couldn’t tell me precisely what species I was gawking at.

When I arrived at the station I gave the specs a few more Look and Ask tests. They correctly identified the station as Paddington, and in two out of three tests the Meta AI correctly used the departure board to tell me what time the next train to various destinations was leaving. In the third test it was way off: it missed both of the trains going to Penzance and told me a later time for a completely different journey that was going to Bristol.

Before heading back to the office, I popped into the station’s shop to use the feature I’ve been most desperate to try – asking the Meta AI to recommend a dinner based on the ingredients before me. Unfortunately, it seems the abundance of groceries confused the AI and it wasn’t able to provide any suggestions. I’ll have to see if it fares better with my less busy fridge at home.

When it's right, it's scary good

On my return journey, I gave the smart glasses one final test. I asked the Meta AI to help me navigate the complex Tube map outside the entrance to the London Underground, and this time it gave me the most impressive answer of the bunch.

I fired off a few questions asking the glasses to help me locate various Tube stations amongst the sprawling collection, and the AI was able to point me to the correct general area every time. After a handful of requests I finished with “Hey Meta, look and tell me where Wimbledon is on this map.”

The glasses responded by saying they couldn’t see Wimbledon (perhaps because I was standing too close for them to view the whole map) but that it should be somewhere in the southwest area, which it was. It might not seem like a standout answer, but this level of comprehension – being able to accurately piece together an answer from incomplete data – was impressively human-like. It was as if I was talking to a local.

If you have a pair of Ray-Ban Meta smart glasses I’d recommend seeing if you can access the Meta AI. Those of you in the US and Canada can, for sure, but those of you elsewhere might be lucky enough, like me, to have the beta available. The best way to check is to simply say “Hey Meta, look and …” and see what response it gives. You can also check the Meta AI settings in the Meta View app.

A glimpse of the AI wearable future

There are a lot of terrifying realities to our impending AI future – just read this fantastic teardown of Nvidia’s latest press conference by our very own John Loeffler – but my test today highlighted the usefulness of AI wearables following the recent disasters for some other gadgets in the space.

For me, the biggest advantage of the Ray-Ban Meta smart glasses over something like the Humane AI Pin or Rabbit R1 – two wearable AI devices that received scathing reviews from every tech critic – is that they aren’t just AI companions. They’re open-ear speakers, a wearable camera, and, at the very least, a stylish pair of shades.

I’ll be the first to tell you, though, that in all but the design department the Ray-Ban Meta specs need work.

The open-ear audio performance can’t compare to my JBL SoundGear Sense or the Shokz OpenFit Air headphones, and the camera isn’t as crisp or easy to use as my smartphone. But the combination of all of these details makes the Ray-Bans at least a little nifty.

I’m still unconvinced the Ray-Ban Meta smart glasses are something everyone should own. But if you’re desperate to get into AI wearables at this early-adopter stage, they’re far and away the best example I’ve seen.

Hamish is a Senior Staff Writer for TechRadar and you’ll see his name appearing on articles across nearly every topic on the site, from smart home deals to speaker reviews to graphics card news and everything in between. He uses his broad range of knowledge to help explain the latest gadgets and whether they’re a must-buy or a fad fueled by hype, though his specialty is writing about everything going on in the world of virtual reality and augmented reality.