Apple's AI upgrades for your iPhone are reportedly on track for 2024 – here's what to expect

iOS 18 could be a landmark release if the rumors are true


It's shaping up to be another huge year for generative AI – and one of the biggest stories could be Apple's AI upgrades for iOS 18 and the iPhone, which are reportedly still on track for an announcement at WWDC 2024 in June.

We've previously heard that Apple is planning to reveal AI boosts for Siri, Messages, Apple Music, Pages and more during its developer conference this year, and Bloomberg's Mark Gurman has now reported that these announcements are still on track for a big unveiling at WWDC 2024.

The foundation for these upgrades is apparently a large language model (LLM) called Ajax, which Apple has seemingly been testing since early 2023. But what's interesting about these latest rumors is how broadly Apple is apparently planning to apply its capabilities across hardware and software, and not just on the iPhone.

We've already heard that Apple is planning to give Siri a major brain transplant to make it more conversational, like ChatGPT – and that's something it undoubtedly has to do in order to keep pace with AI rivals in 2024. But Gurman also claims that Apple is planning to add features like "auto-summarizing and auto-complete" to core apps like Pages and Keynote.

Arguably the biggest deal, though, is that Apple is apparently "working on a new version of Xcode and other development tools that build in AI for code completion". If developers are able to harness AI to both write code and create new AI features, that could dramatically improve the quality of third-party apps on your iPhone. This follows the news in December that Apple had quietly released a new machine learning framework called MLX for Apple silicon.

The bad news? Gurman suggests that "the totality of Apple's generative AI vision" won't happen until at least 2025. Given how far behind Apple seemingly is with the technology, that certainly seems likely, but we should at least get our first look at its next-gen AI upgrades in June at WWDC 2024.

What AI features could we see on iPhones?

Apple is reportedly spending over $1 billion a year on its big AI project – and while that isn't much compared to the $100 billion it's apparently spent developing the Apple Vision Pro, its mixed-reality headset that's now very close to launch, it is a significant amount for software. So what treats might it bring to our iPhones?


The biggest is likely to be that long-rumored, next-gen Siri assistant. If you've tried using ChatGPT's free voice function on your iPhone, you'll know that it's a far more conversational voice assistant than Siri, albeit one that's still prone to hallucinations.

Plug those kinds of skills into iOS 18 and we should get much-improved voice control of our iPhones, which should work particularly well if you have AirPods. In other words, not just staccato, two-sentence commands like cooking timers, but a genuinely smart assistant that can handle multiple requests simultaneously, like smart home commands, calendar summaries and more.

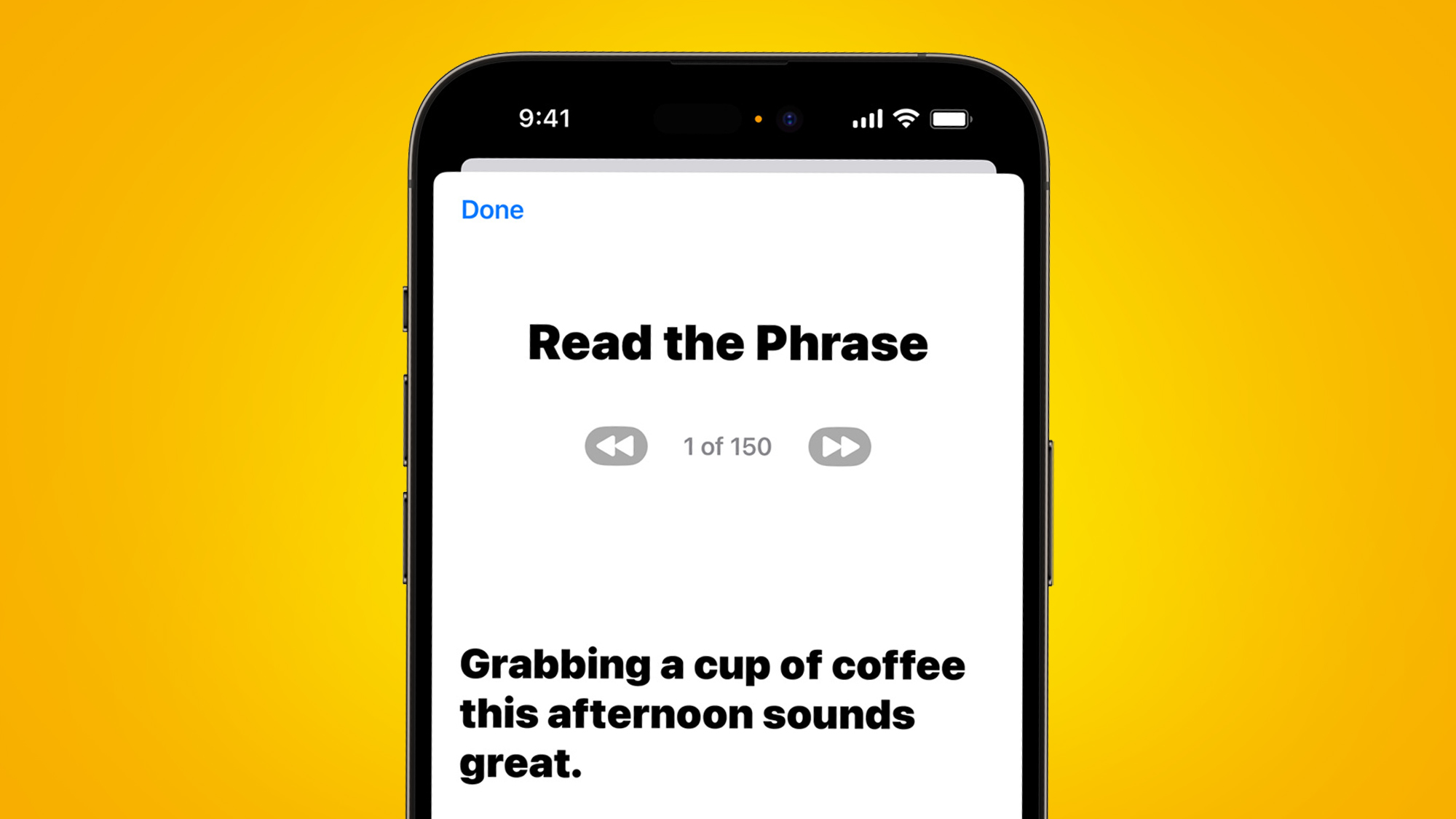

Elsewhere, we can expect to see AI appear in Messages to help answer questions and auto-complete our sentences. Apple has been using machine learning to improve the accuracy of the iOS keyboard for a few years now, so this would be a natural development of that work.

According to Gurman, Apple also plans to give Apple Music a major boost with AI features, for example by using it to "better automate playlist creation". This would see Apple Music begin to catch up to Spotify, which has already been pushing out similar features, such as its AI DJ.

A few big questions remain about Apple's AI announcements for WWDC 2024. Firstly, will Apple even refer to the new iOS 18 features as 'AI-powered'? Previously, it's avoided the term, sticking to the lesser-used (but often more accurate) 'machine learning'.

Also, will its AI features only work on-device, or will some be bolstered by cloud processing? The Google Pixel 8 Pro's new Video Boost with Night Sight feature relies on the extra power of the cloud, but Apple's focus on privacy may see it stick to local processing. If so, will the new AI features be restricted to newer iPhones? And which models?

We should find out the answers to all of these questions and more in around five months' time at WWDC 2024.


Mark is TechRadar's Senior news editor. Having worked in tech journalism for a ludicrous 17 years, Mark is now attempting to break the world record for the number of camera bags hoarded by one person. He was previously Cameras Editor at both TechRadar and Trusted Reviews, Acting editor on Stuff.tv, as well as Features editor and Reviews editor on Stuff magazine. As a freelancer, he's contributed to titles including The Sunday Times, FourFourTwo and Arena. And in a former life, he also won The Daily Telegraph's Young Sportswriter of the Year. But that was before he discovered the strange joys of getting up at 4am for a photo shoot in London's Square Mile.