Google Photos: The pros and cons of Google's new photo service

It's a love/hate thing

This leads to one of the big potential benefits of Google Photos: Google's machine learning and neural net engines applied to automatically tag and group images. Aside from the unlimited storage, this was the one thing that I looked forward to most after Google Photos' introduction. But after using it for two weeks, my Google Photos experience has been decidedly mixed.

The default Google Photos view shows images in reverse chronological order. If you want to search for something specific, or you want to see Google's automatic groupings, you'll need to click in the search bar (in the browser view) or tap on the floating search button on mobile.

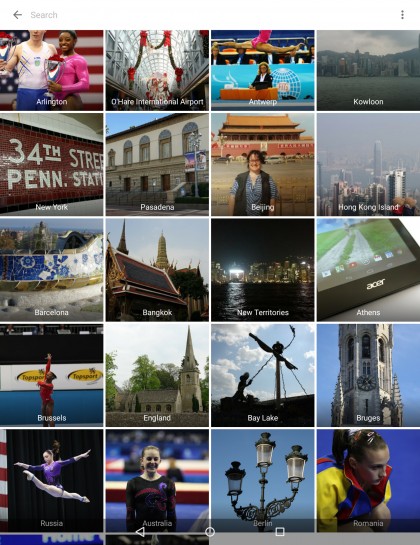

Google groups images for you along three basic lines: people, places and things. Given the sheer volume of images I uploaded, though, I was surprised that Google didn't identify more categories. For example, it found 60 people and generated thumbnails for those clusters. For things, it found only 42.

I've read plenty of accounts of how easily Google can match progressions of a child, or find a man's face in the background of a picture. But my data set, and my experience, differ from that: Of the 31,000+ images in Google Photos, I'd guess easily half were of gymnasts. A Holy Grail for any photographer is for the software to find and group all of the images of a particular person, regardless of the event, the group or how many other people are in the shot. This is where I expected Google Photos to excel, but instead it stumbled on my large and varied data set. In fact, the accurate groupings were so few and far between they felt like happy accidents more than the intended result.

Often the people in the images under a thumbnail were not the person shown in the thumbnail itself. While there were often shared characteristics - for example, blonde, pony-tailed young women in leotards - they were in fact different people.

That I saw certain physical similarities in images clustered as a single person was pure coincidence, based on what Google tells me. According to Dave Lieb, product lead at Google, the face grouping only uses attributes of the faces, not any details of the hair style or clothing. That said, I looked at the image clusters that perplexed me, and in many instances the faces that were mistakenly grouped together looked nothing alike. Another problem, and a more worrisome one: The algorithm found some images, but nowhere close to all of the images uploaded of the same athlete. In one case, the algorithm found images of the same young woman and gave her two different thumbnails - with no duplication of the images between the two. And the much-talked-about age-progression facial recognition? In one instance where I uploaded images of an athlete from both junior and senior competitions, the algorithm didn't pick up on that.

Google Photos' search, retrieval and tagging try to mimic how humans perceive photos. But the service lacks the more random finesse that humans add to the equation. Once images are uploaded into the Google cloud, the folder structure is flattened out and disappears. In addition to identifying images based on the content, Photos also uses geotags, timestamps, existing metadata that the service can read (some of my images had IPTC captions), and data from the folder an image was filed in (but, if there are nested folders, it doesn't capture the info at the top level).

Google says Photos will learn from your efforts to manually weed out false positives. However, doing so is a chore, and for now it can only be done on the smaller mobile screens - which makes it even more difficult across large volumes of images. I appreciate that Photos gets us as far as it does in finding people. It's frankly better than any other free solution today. But the lack of consistent identification is a concern, particularly when coupled with groupings that just aren't finding all relevant images.

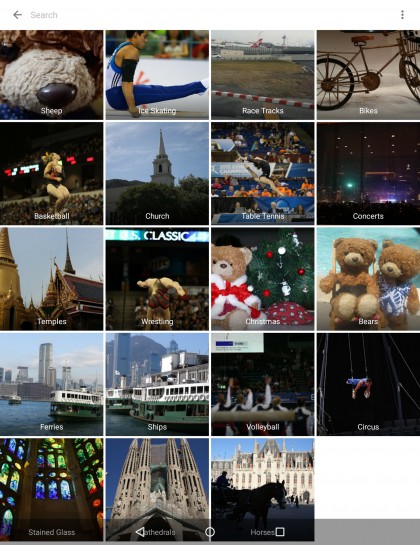

I had similar experiences with the images classified under Places and Things. If an image lacked a geotag, Google Photos was inconsistent in recognizing where images were from, and what they represented. Gymnasts were identified correctly as "Gymnastics." And I could almost understand the images that ended up classified as "Dancing" and "Circus." But the same types of images were also identified as "Basketball," "Wrestling," "Ice Skating," "Table Tennis," and "Volleyball." And there was no way to reclassify those images back to "Gymnastics."

It wasn't just the gymnasts that ended up all over the map. Photos rightly identified a stuffed puppy as "Dog" (along with live dogs), but a teddy bear ended up under "Sheep" and "Bear," and it only appeared under "Bear" five days after the first images were uploaded. Google says the indexing is not instantaneous, and that matches my experience. Lieb notes that sorting begins within 24 hours of backup, and continues on a 24-48 hour basis. This explains why searching by some data points (e.g., the original folder name or the location) didn't work until four or five days had passed.

Another interesting point is how the recognition works, period. The beginnings of the recognition engine lie in what we saw introduced a couple of years ago with Google+. The root here is machine learning technology, and that base technology is similar to what powers Google Image Search, but Lieb says "the clustering and search quality technologies are specifically tuned to personal photo libraries." So, perhaps, that explains why the teddy bear photos were identified as "Bear," while Google Image Search saves "Bears" for the live, breathing variety and identifies the stuffed animal as a "Teddy Bear."

Sometimes, I had luck with typing in more abstract search terms. Typing "river" yielded results that included the Chao Phraya river in Bangkok, and other waterways like Victoria Harbour in Hong Kong. But the only way I could find a river scene from Bourton-on-the-Water was to search on the date of the one photo that Google Photos successfully identified as Bourton-on-the-Water. "Sidewalk" turned up shots that included a sidewalk. "Tablet" turned up photos of tablets ... and phones, too.

Other times, I had no luck at all. Typing "child" yielded images of college-age gymnasts who don't look like children, and of people of all ages, ranging from a woman holding a baby to an elderly relative posed alone. So much for narrowing the search.

Searching by criteria like the camera or device used, or other shooting metadata, might be useful in such cases, but that feature doesn't exist. A competing service, Eyefi Cloud, will be offering this feature for its $49-a-year unlimited storage service (which includes storing the full-resolution originals).
