TechRadar Verdict

Pros

- One of the all-time greats
- Still a solid performer
- Proven and reliable
- 256-bit memory bus
- Tessellation might not take off

Cons

- Lacks DX11 support
- No tessellator or Compute Shader
- AMD's 5850 isn't much pricier
- AMD's 5770 isn't much slower
- Nvidia's GTX 275 is faster

Is this the greatest graphics card ever conceived? In historical terms, it's certainly up there with the very best, including favourites from yesteryear such as Nvidia's astonishing return to form with the 16-pipe GeForce 6800 after the catastrophic GeForce FX series. Then there's the GeForce 256, the graphics card that coined the term GPU and marked the beginning of the modern 3D era.

Stiff competition, then, but the Radeon HD 4800 series really is up there. As it happens, the 4800 was AMD's own return from the brink after the calamitous Radeon HD 2900 and the mediocre 3800. But more than that, it was the thinking man's GPU. Not the fastest you could buy, perhaps. But easily the most efficient performance GPU in terms of both power consumption and return on investment. Yes, Nvidia's GeForce GTX 200 cards were quicker. But nowhere near as much as you'd expect given the added cost and complexity.

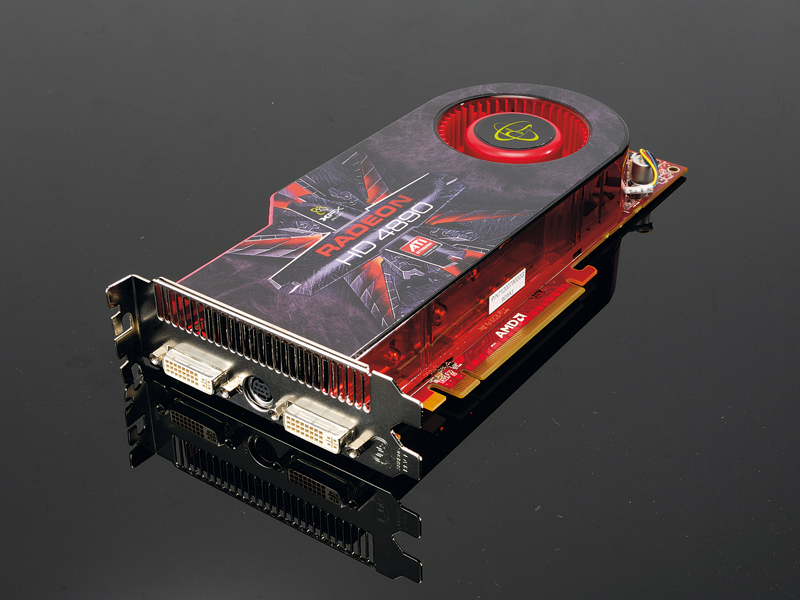

That was then. Does this 4890 board from XFX still make sense today? Features-wise, it's at an obvious disadvantage. This is a DX10 chipset lost in a DX11 world. Firstly, that means it lacks support for hardware tessellation and therefore the massively increased geometric detail we're expecting from upcoming games. At least, that's the theory. Quite how much mileage game developers will get out of the DX11 tessellator remains to be seen.

It's also a non-starter for the new DX11 Compute Shader. Like tessellation, it's not clear how important the Compute Shader will become. Will you really be able to run all kinds of general purpose applications on a GPU in 12 months' time? While we have our doubts, we'd still prefer to hedge our bets with a pukka DX11 card.

Anyway, short of running Crysis at really high resolutions, this card will still handle anything you can currently throw at it. Indeed, it has several of AMD's new DX11 chipsets licked, including the Radeon HD 5770 and 5830-based boards. The only slight snag is that examples of the Radeon HD 5850 are available for just £25 more. For the extra performance, features and future proofing, that's got to be £25 worth paying.

We liked

If it's no-nonsense performance you seek, the XFX 4890 might just be your bag. Thanks to 800 stream shaders, decent clocks and a 256-bit memory bus, it pumps pixels at a rate that belies its status as an old-timer based on an aging graphics architecture.

For a card this powerful, it's also relatively cool running. That bodes well for its longevity. We certainly don't have the same reliability worries about this board as we do about those based on Nvidia's super-hot Fermi GPU.

We disliked

Pixel throughput is all very well. But it's not much use if you lack actual hardware compatibility. That's increasingly going to be the case as more and more games hook into the rendering features in Microsoft's latest DirectX 11 graphics standard.

But even if that weren't the case, the XFX Radeon HD 4890 would be up against it. Price-wise, it's far too close to Asus's £225 EAH5850 Voltage Tweak. That's a card that not only packs DX11 support but is also significantly quicker across the board and comes with some snazzy extras including voltage tweakery. Making matters worse, MSI's Radeon HD 5770 isn't hugely slower. But it is massively less expensive at £125.

Verdict

XFX's take on the Radeon HD 4890 chipset isn't long for this world. That's probably just as well. In most of our benchmarks, it's no more than level pegging in pure performance terms with Asus's Radeon HD 5830. That's a card with DX11 support and therefore much longer legs in terms of future game compatibility.

More to the point, if the 5830 doesn't add up at £195, this 4890 card is a farce for £200. All in all, it's a sad end for a once great graphics chipset. The sooner it disappears off to the great PCI Express slot in the sky, the better.

Technology and cars. Increasingly the twain shall meet. Which is handy, because Jeremy is addicted to both. Long-time tech journalist, former editor of iCar magazine and incumbent car guru for T3 magazine, Jeremy reckons in-car technology is about to go thermonuclear. No, not exploding cars. That would be silly. And dangerous. But rather an explosive period of unprecedented innovation. Enjoy the ride.