Google I/O 2024 – the 7 biggest AI announcements, from Gemini to Android 15

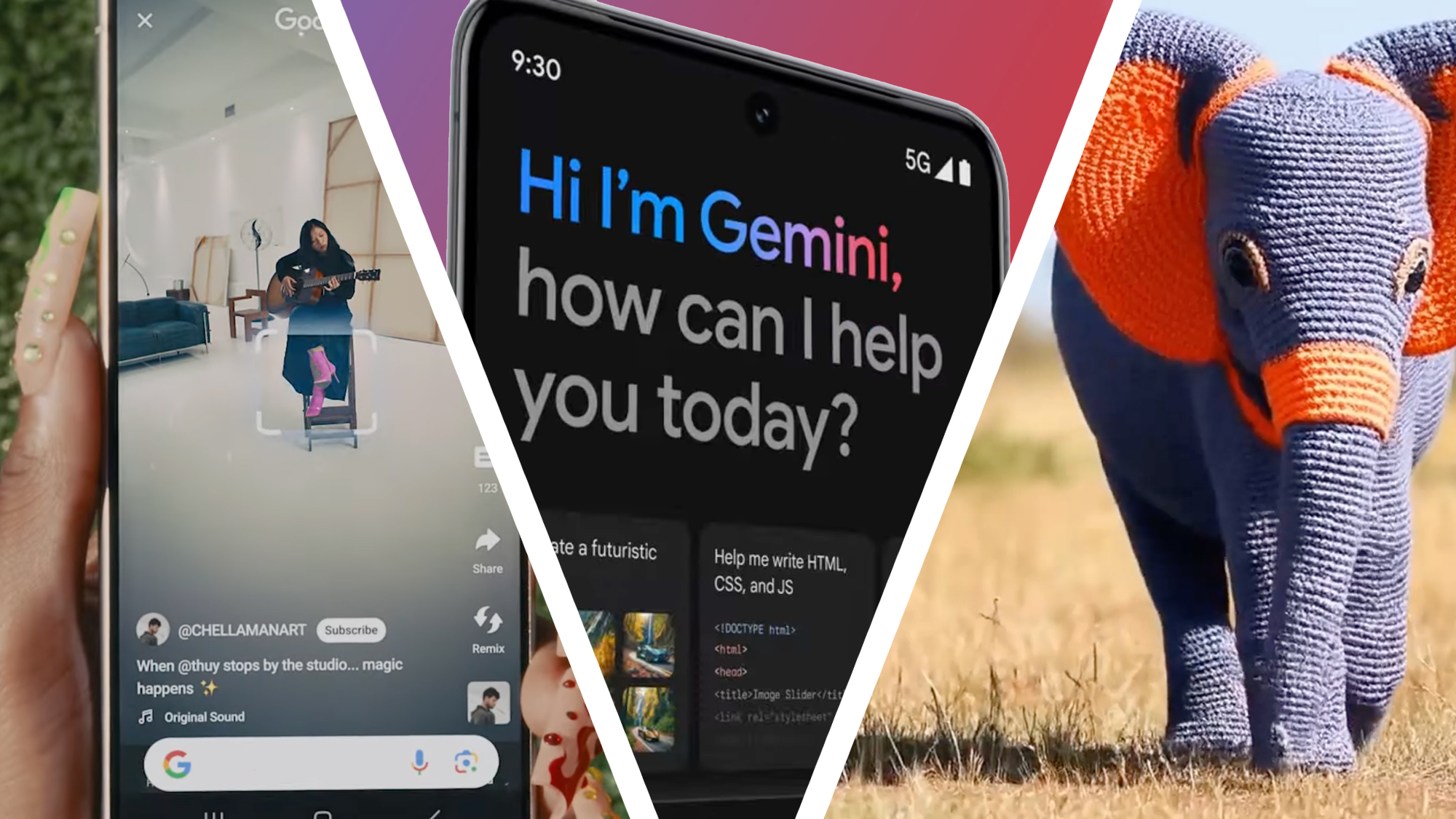

Gemini, Gemini, and more Gemini

The dust has now settled on the Google I/O 2024 keynote, and there's no doubting what the big theme was: Google Gemini and new AI tools completely dominated the announcements, giving us a glimpse of where our digital lives are headed. Right at the top, CEO Sundar Pichai described the event as Google's version of The Eras Tour, specifically the "Gemini Era", and he was right to.

Unlike previous years, the entire keynote was about Gemini and AI; in fact, Google said "AI" a total of 121 times. From unveiling a futuristic AI assistant called "Project Astra" that can run on a phone (and maybe, one day, on smart glasses) to infusing Gemini into nearly every service or product the company offers, artificial intelligence dominated the show.

The two-hour keynote was enough to melt the mind of all but the most ardent LLM enthusiast, so we've broken down the 7 most important things that Google announced during its main I/O 2024 keynote – and included the latest news on when we actually might see these new tools...

1. Google dropped Project Astra – an “AI agent” for everyday life

So it turns out that Google does have an answer to OpenAI’s GPT-4o and Microsoft’s Copilot. Project Astra, dubbed an "AI agent" for everyday life, is essentially Google Lens on steroids. It looks seriously impressive, able to understand, reason about, and respond to live video and audio.

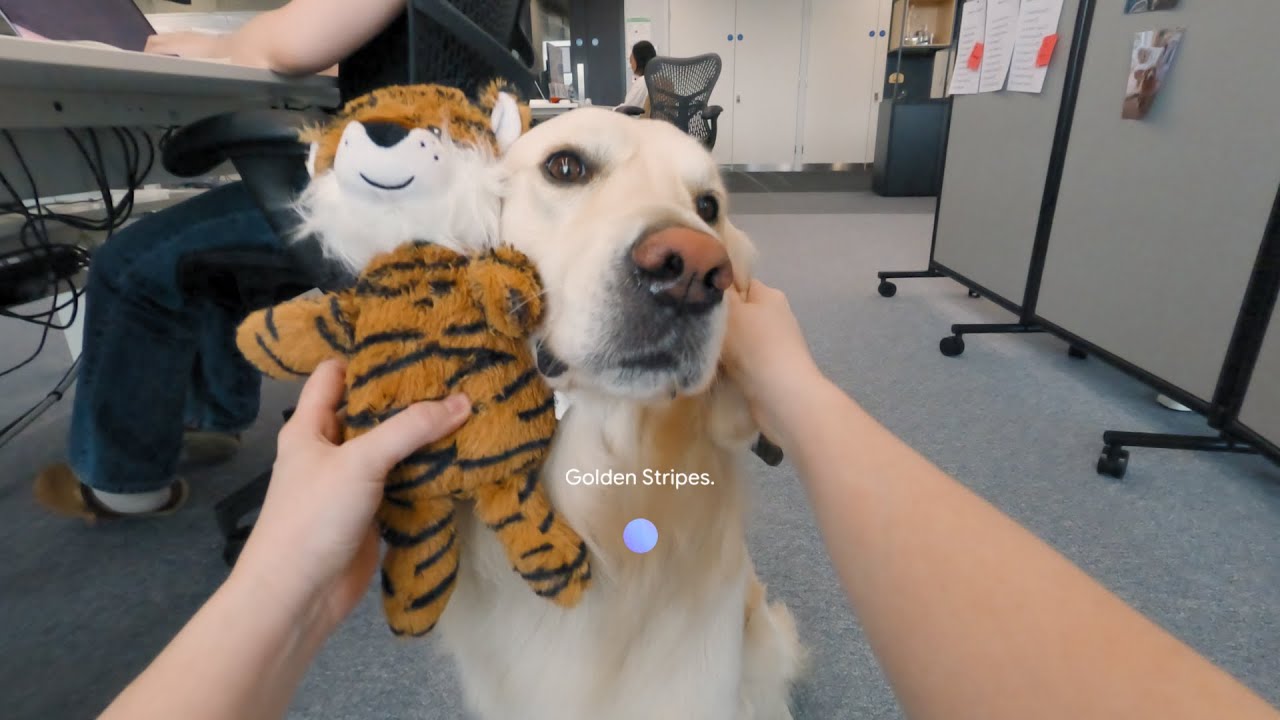

In a recorded demo on a Pixel phone, a user walked around an office, streaming a live feed from the rear camera and asking Astra questions off the cuff. Gemini was viewing and understanding the visuals while also tackling the questions.

It speaks to the multimodal, long-context capabilities behind Gemini, which identifies what it sees and delivers a response in a jiffy. In the demonstration, it knew what a specific part of a speaker was and could even identify a neighborhood in London. It's also generative: it quickly came up with a band name for a cute pup sitting next to a stuffed animal.

It won’t be rolling out immediately, but developers and press like us at TechRadar will get to try it out at I/O 2024. And while Google didn’t clarify, the demo teased Astra running on a pair of glasses, which might mean Google Glass could make a comeback.


Still, even as an I/O demo, it's seriously impressive and potentially very compelling. It could supercharge smartphones and the current assistants we have from Google and even Apple. It also shows off Google's true AI ambition: a tool that can be immensely helpful and no chore at all to use.

- When will it launch? Unknown right now – Google describes it as "our vision for the future of AI assistants"

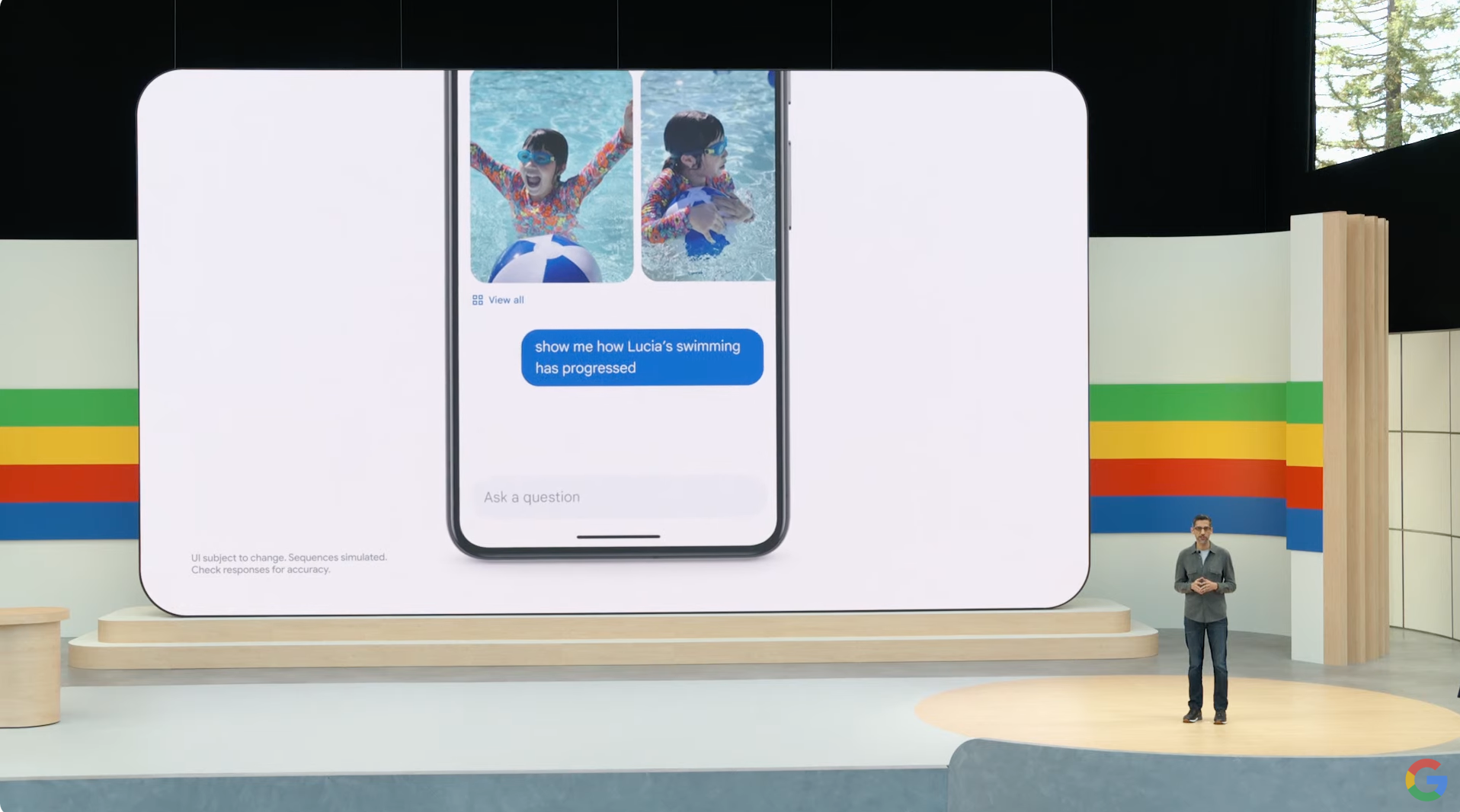

2. Google Photos got a helpful AI boost from Gemini

Ever wanted to quickly find a specific photo you captured at some point in the distant past? Maybe it's a note from a loved one, an early photo of your dog as a puppy, or even your license plate. Well, Google is making that wish a reality with a major update that fuses Google Photos with Gemini. This gives Gemini access to your library, lets it search through it, and makes it easy to get the result you’re looking for.

In an on-stage demo, Sundar Pichai showed that you can ask it for your license plate number, and Photos will deliver an image showing the plate along with the digits and characters that make it up. Similarly, you can ask for photos of when your child learned to swim, and add further specifics to narrow things down. It should make even the most disorganized photo libraries a bit easier to search.

Google has dubbed this feature “Ask Photos” and will start rolling it out as an experimental feature in the coming months. It will almost certainly come in handy, and it may make folks who don't use Google Photos a bit jealous.

- When will it launch? "In the coming months" as an experimental feature, according to Google

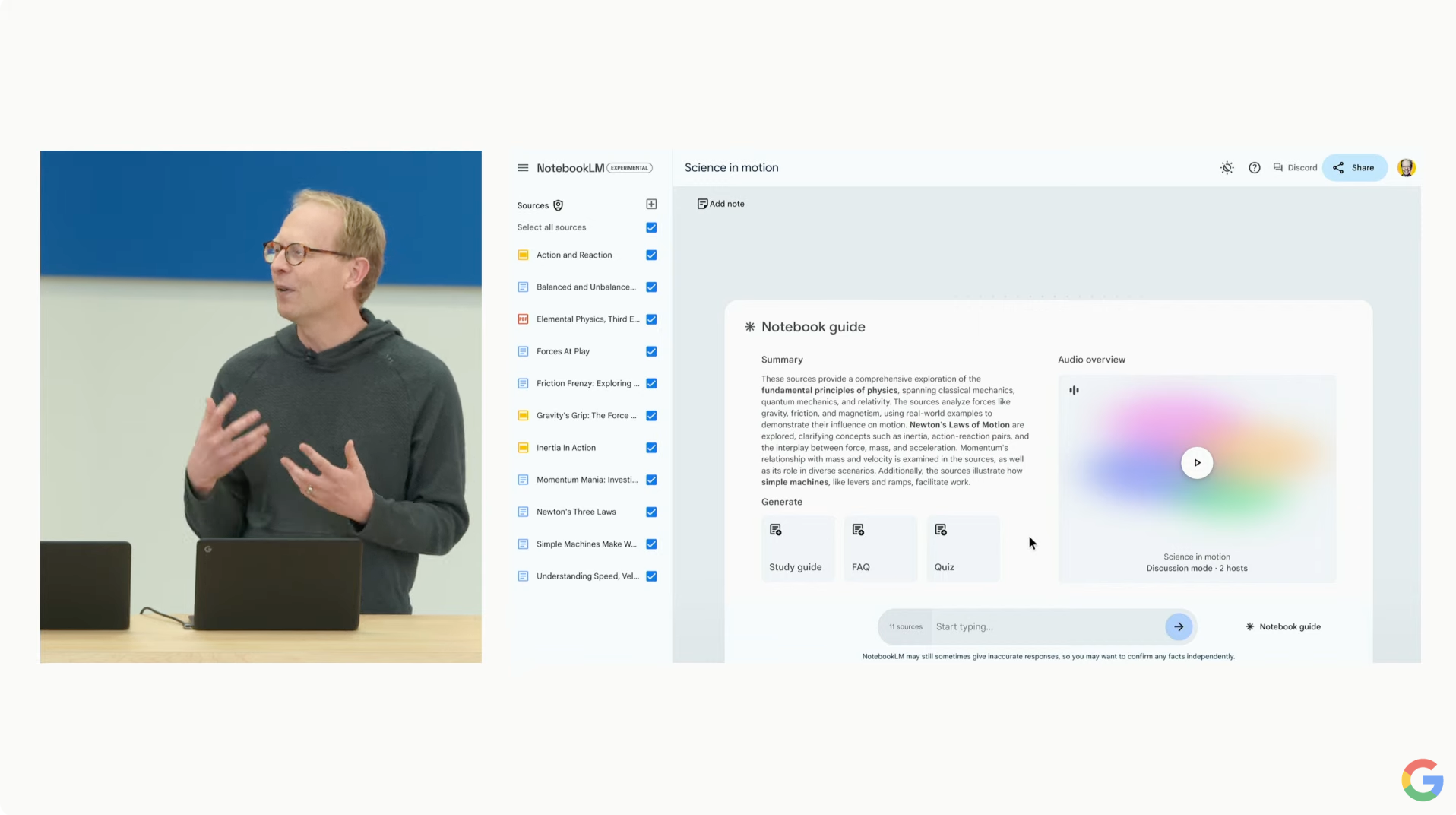

3. Your kid’s homework just got a lot easier thanks to NotebookLM

All parents will know the horror of trying to help kids with homework; if you ever knew about this stuff in the past, there’s no way the knowledge still lurks within your brain 20 years later. But Google may have just made the task a lot easier, thanks to an upgrade to its NotebookLM note-taking app.

NotebookLM now has access to Gemini 1.5 Pro, and based on the demo given at I/O 2024, it’ll now be a better teacher than you’ve ever been. The demo showed Google’s Josh Woodward loading up a notebook filled with notes about a learning topic – in this case, science. With a single button press, he was able to create a detailed learning guide, with further outputs including quizzes and FAQs, all pulled from the source material.

Impressive – but it was about to get a lot better. A new feature – still a prototype for now – was able to output all of the content as audio, essentially creating a podcast-style discussion. What’s more, the audio featured more than one speaker, chatting about the topic naturally in a way that would definitely be more helpful than a frustrated parent attempting to play the role of teacher.

Woodward was even able to interrupt and ask a question, in this case “give us a basketball example”, at which point the AI switched tack and came up with clever, accessible metaphors for the topic. The parents on the TechRadar team are itching to try this one out.

- When will it launch? Unknown right now

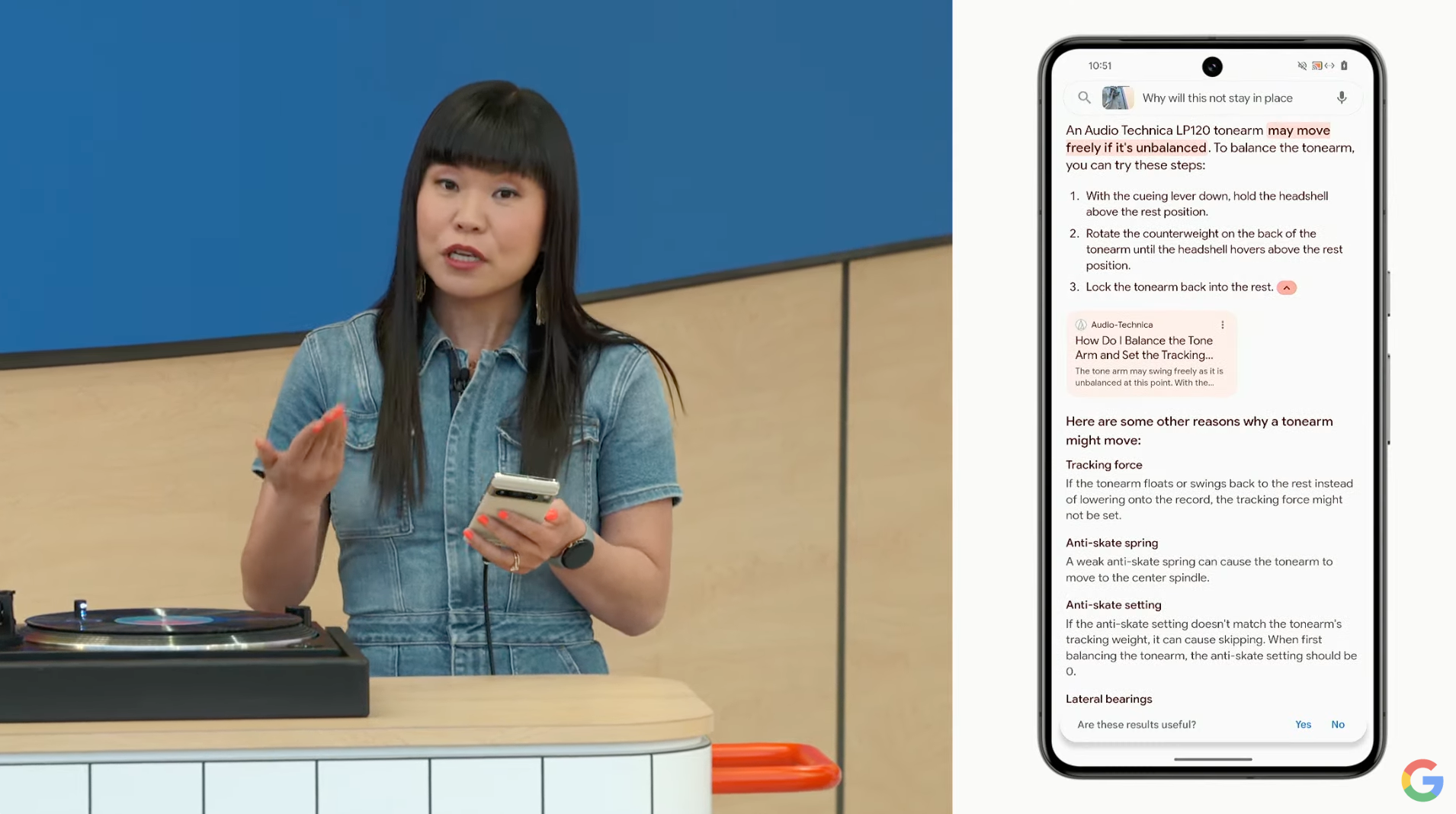

4. You’ll soon be able to search Google with a video

In a strange on-stage demo with a record player, Google showed off a very impressive new search trick: you'll be able to record a video and search with it to get results and, hopefully, an answer.

In this case, a Googler who was wondering how to use a record player hit record to film the unit in question while asking her question, and then sent it off. Google worked its search magic and provided an answer in text, which could be read aloud. It's an entirely new way to search, like Google Lens for video. It's also distinctly different from the forthcoming Project Astra, since the video has to be recorded first and then searched rather than being analyzed in real time.

Still, it’s part of a broader infusion of Gemini and generative AI into Google Search, aimed at keeping you on the results page and making it easier to get answers. Before the video-search demo, Google showed off a new generative experience for recipes and dining, which lets you search for something in natural language and get recipes or even restaurant recommendations right on the results page.

Simply put, Google’s going full throttle with generative AI in Search, both for the results themselves and for the ways you get them.

- When will it launch? Google says that "searching with video will be available soon for Search Labs users in English in the US" and will "expand to more regions over time"

5. Google took on OpenAI’s Sora with its Veo video tool

We’ve been marveling at the creations of OpenAI’s text-to-video tool Sora for the past few months, and now Google is joining the generative video party with its new tool called Veo. Like Sora, Veo can generate minute-long videos in 1080p quality, all from a simple prompt.

That prompt can include cinematic effects, like a request for a time-lapse or aerial shot, and the early samples look impressive. You don’t have to start from scratch either: upload an input video with a command, and Veo can edit the clip to match your request. There’s also the option to add masks and tweak specific parts of a video.

The bad news? Like Sora, Veo isn’t widely available yet. Google says it’ll be available to select creators through VideoFX, one of its experimental Labs features, “over the coming weeks.” It could be a while until we see a wide rollout, but Google has promised to bring the feature to YouTube Shorts and other apps. And that’ll have Adobe shifting uneasily in its AI-generated chair.

- When will it launch? You can now join the Veo waitlist, with Google stating that it'll be "available to select creators in private preview in VideoFX". Google also says that "in the future, we’ll also bring some of Veo’s capabilities to YouTube Shorts" and other products

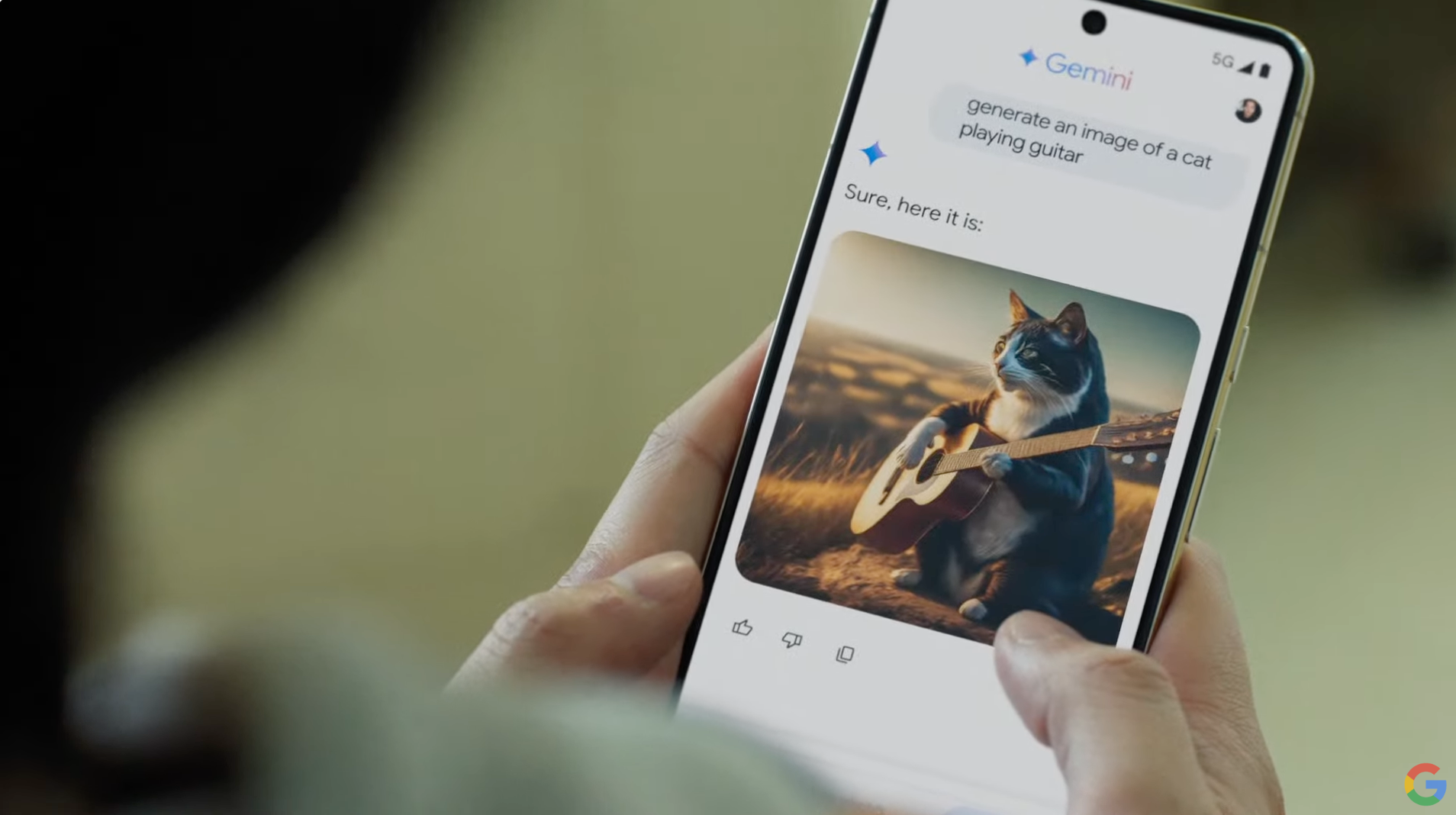

6. Android got a big Gemini infusion

Much like Google's "Circle to Search" feature sits on top of an app, Gemini is now being built into the core of Android so it fits into your flow. As demonstrated, Gemini can now view, read, and understand what is on your phone's screen, letting it anticipate questions about whatever you're looking at.

So it can get the context of a video you're watching, anticipate a summarization request when viewing a lengthy PDF, or be ready for myriad questions about an app you're in. Having a content-aware AI baked into a phone's OS isn't a bad thing by any stretch and could prove super useful.

Alongside Gemini being integrated at the system level, Gemini Nano with Multimodality will launch later this year on Pixel devices. What will it enable? Well, it should speed things up, but the landmark feature, for now, is Gemini listening to calls and alerting you in real time if it detects a likely scam. That's pretty cool and builds upon call screening, a long-standing feature of Pixel phones. Because more of the processing happens on-device rather than being sent off to the cloud, it's poised to be faster.

- When will it launch? Google says that 'Gemini Nano with Multimodality' will be available "on Pixel later this year". Circle to Search improvements and the new bank scams feature for phone calls will also land "later this year"

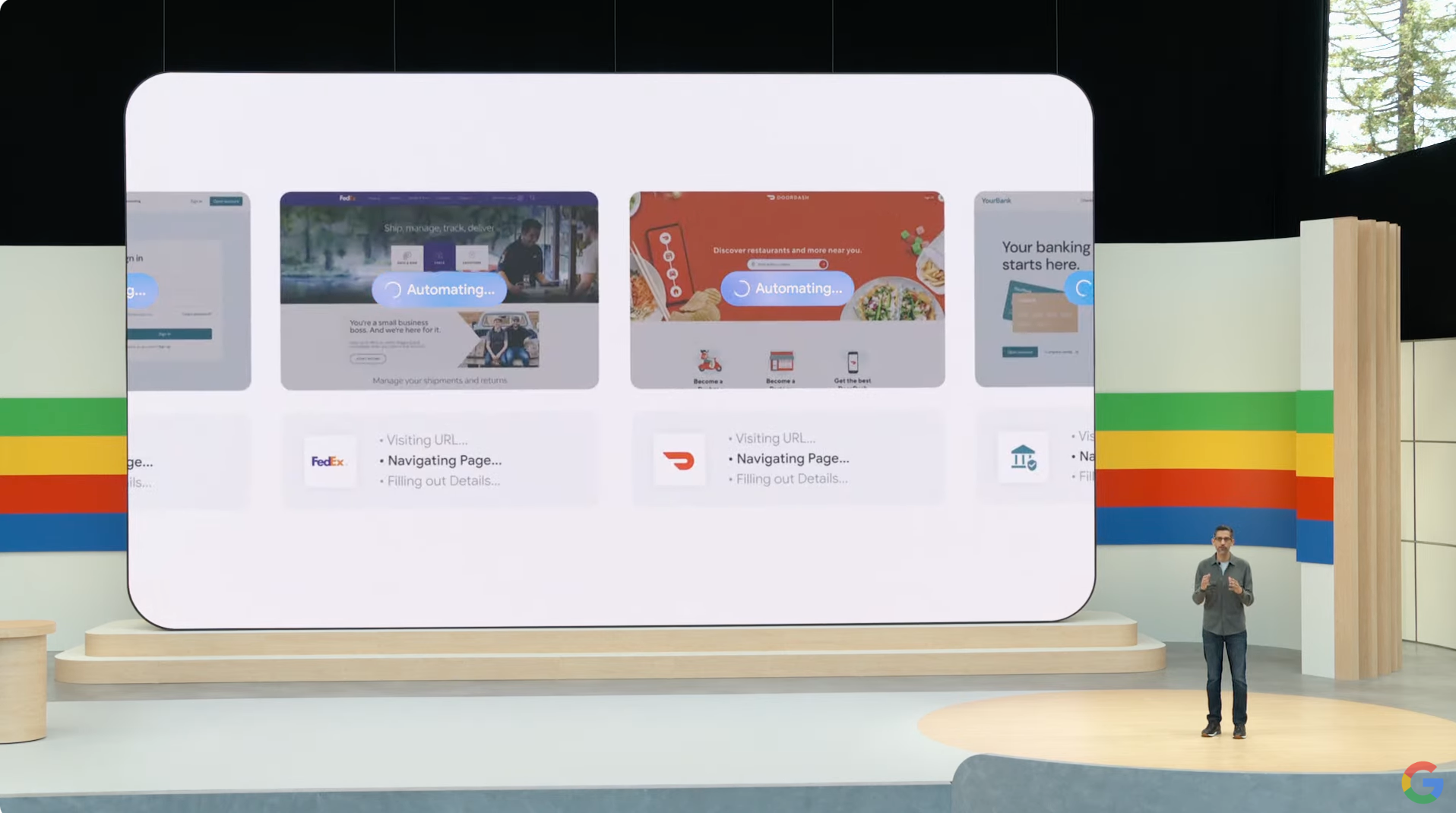

7. Google Workspace will get a lot smarter

Workspace users are getting a treasure trove of Gemini integrations and useful features that could make a big daily impact. In Gmail, a new side panel lets you ask Gemini to summarize all your recent conversations with a colleague, and the result comes back as bullet points highlighting the most important details.

Gemini in Google Meet can give you the highlights of a meeting or what other folks on the call might be asking. You would no longer need to take notes during that call, which could prove helpful if it’s lengthy. Within Google Sheets, Gemini can help make sense of data and process requests like pulling a specific sum or data set.

The virtual teammate “Chip” may be the most futuristic example. It can live in Google Chat and be called up for various tasks or queries. These tools will make their way into Workspace, likely through Labs first, but the remaining question is when they will arrive for regular Gmail and Drive customers. Considering Google's approach of AI for all, and how hard it's pushing AI in Search, it's likely only a matter of time.

- When will it launch? Gemini's side panel in Gmail, Docs, Drive, Slides and Sheets will be upgraded to Gemini 1.5 Pro "starting today" (May 14). For the Gmail app, the 'summarize emails' feature will be available to Workspace Labs users "this month" (May) and to Gemini for Workspace customers and Google One AI Premium subscribers "next month"


Jacob Krol is the US Managing Editor, News for TechRadar. He’s been writing about technology since he was 14 when he started his own tech blog. Since then Jacob has worked for a plethora of publications including CNN Underscored, TheStreet, Parade, Men’s Journal, Mashable, CNET, and CNBC among others.

He specializes in covering companies like Apple, Samsung, and Google and going hands-on with mobile devices, smart home gadgets, TVs, and wearables. In his spare time, you can find Jacob listening to Bruce Springsteen, building a Lego set, or binge-watching the latest from Disney, Marvel, or Star Wars.

- Marc McLaren, Global Editor in Chief

- Mark Wilson, Senior news editor