I'm a die-hard iPhone fan, but switching to Android has shown me what Apple Intelligence is missing

Opinion: A recent shift from iPhone to Android has shown me what AI is supposed to look like in 2025

Say what you want about Apple in 2025, but I truly believe that iOS still represents the very best of mobile software design.

It's intuitive, with a great design language that permeates the OS, and it’s just really fun to use. But if there’s one area that hangs like a lifeless limb from iOS' otherwise muscular frame, it’s Apple Intelligence.

I can’t blame Apple for wanting to go all-in with its own take on artificial intelligence. After all, AI features are becoming key USPs of today's flagship (and even not-so-flagship) devices – from Galaxy AI on the best Samsung phones to Google’s in-house AI systems on the best Pixel phones. To not join in with the current AI revolution is to run the risk of being seen as old-fashioned.

Despite Apple's best intentions, though, Apple Intelligence – in its current form, at least – is a dud.

Of course, it’s tricky to know exactly where Apple Intelligence has gone wrong if you’re accustomed to Apple’s way of doing things. That's why, over the last week, I’ve been using AI on the OnePlus Open to see what I’ve been missing. I wasn’t quite sure what to expect, but now the experience has shown me just how much ground Apple has given up to the competition.

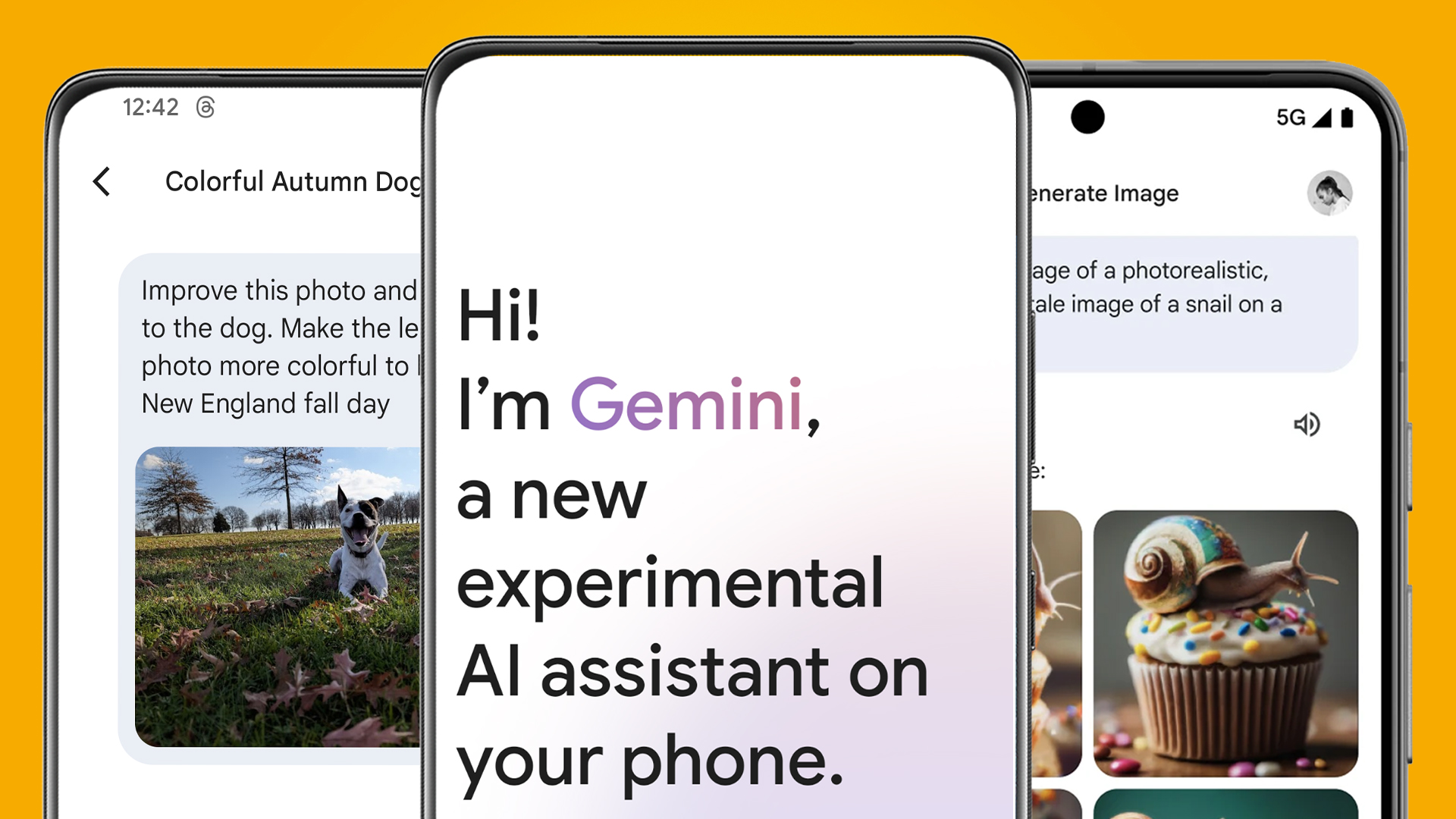

Google Gemini is just on a whole other level

To give Siri some credit, when it comes to setting timers, calling contacts, or setting reminders, it can do the job just fine, and if your requests stay within those confines, then you won’t have an issue. It's when you go beyond those parameters that it starts to fall apart.

Somewhat laughably for a man in his early thirties, I’m now finally making an attempt to get into football after feeling like too much of a social outcast whenever conversations turn to last night’s match, and I’ve been trying to use AI to keep me in the loop with everything that’s going on.

For example, instead of scrolling through a timetable of upcoming fixtures, I decided to simply ask Siri when the next Liverpool match was set for. The assistant responded in kind, but when I asked if it could then add that match as an event in my calendar, it hadn’t the faintest idea what I was talking about.

Moving over to Google Gemini and following through the same set of questions, it did exactly what I asked of it in next to no time.

Dropping the difficulty a tad, I gave Siri a lowball that I thought it would knock out of the park: who is the current Liverpool manager? It couldn't respond without asking if I wanted the results via a Google search or a ChatGPT request. I can understand Apple wanting to give me that option if I’d asked Siri something about theoretical physics, but not for something so basic, and I don’t understand why it's unable to differentiate between the two.

These are the features I want to use AI for: simple requests that make my day just that little bit easier. I do at least have some hope that Apple can catch up at this level. Where the real uphill battle lies is in Apple's fight to compete with Gemini Live.

Living with a true digital assistant

Using Gemini Live for the first time, I felt a kinship with those who must have marveled at the very first consumer-grade computers as they started to recognize all of the possibilities on the horizon.

This feature lets you talk to Gemini in the style of free-flowing conversation – there’s no need to type or press any buttons, just speak what’s on your mind, and Gemini will respond much like a normal person.

If you ask Gemini for a realistic schedule that lets you juggle both your full-time job and your side hustle, then it’ll create one for you. When you want advice on how to talk to a friend who is struggling with their mental health, Gemini can be surprisingly insightful. At one point, it even mentioned that it could pick up on the nuances and tone of my voice to recognize whether I’d said something in anger or jubilation. This feels like the full realization of what having a digital assistant is supposed to be, and Siri (in its current form) doesn’t compare in the slightest.

The disparity is so cavernous here that I do wonder whether Apple should change tack and invest its resources in changes that make sense. For example, OnePlus is one of the few companies that hasn’t changed its entire outlook to focus on AI, but it has included meaningful AI features on the OnePlus 13 (like AI summaries of web pages) that are there when you want them and never thrown in your face.

Thankfully, with the introduction of Call Screening and Live Translation in iOS 26, it seems as though Apple is trying to gain back some ground where functional AI features are concerned, and that’s great.

Beyond that, I think it might be time for Apple to abandon any plans of getting Siri to compete – after what I’ve seen from Google, I’m going to assign the Gemini app to my Action button anyway.

After cutting his teeth covering the film and TV industries, Tom spent almost seven years testing the latest tech over at Trusted Reviews before heading out into the world of freelance writing. From vacuum cleaners to video games, there isn't much that Tom hasn't written about, but being something of a gym fanatic, he tends to harbour an obsession where smartwatches are concerned.
