How MP3 compression works

Ask us to name a universally known file format, and it would probably be a toss-up between MP3 and JPG.

Simply put, if you're a fully paid-up member of the digital multimedia revolution, you will have thousands of these files on your computer's hard drive – music you listen to and photos you look at – all of which have been compressed to cram as much information as possible into the minimum of space.

What is an MP3?

The MP3 is a fairly recent invention in digital music and sound. Before that, there was the compact disc, or CD. The audio on a CD is converted from an analogue source, such as the master tape (although these days, most audio is recorded directly as digital).

The analogue wave can't be recorded digitally as it is, so a digital audio processor is used to sample the analogue audio wave 44,100 times a second. This means, at every tick, the digital audio processor works out the amplitude of the original very complex audio wave.

It records this as a two-byte value, so there are 65,536 possible values for this amplitude: 32,767 values above zero, 32,768 below, and zero itself. It does this sampling for each of the two stereo channels as well.
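To make the sampling step concrete, here is a minimal sketch in Python. It assumes a pure sine tone as a stand-in for the analogue input, and the function name `sample_tone` and the little-endian byte order are illustrative choices rather than anything the CD format discussion above specifies.

```python
import math
import struct

SAMPLE_RATE = 44_100     # samples per second, as described above
MAX_AMPLITUDE = 32_767   # largest positive value a signed two-byte sample can hold

def sample_tone(freq_hz, num_samples):
    """Measure a sine wave at each tick and round it to a signed 16-bit integer."""
    return [
        round(MAX_AMPLITUDE * math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE))
        for i in range(num_samples)
    ]

# 441 samples is one hundredth of a second of a 440Hz tone on one channel.
samples = sample_tone(440.0, 441)

# Pack each sample into two bytes ("h" is a signed 16-bit integer).
raw = struct.pack(f"<{len(samples)}h", *samples)
print(len(samples), len(raw))
```

Each sample occupies exactly two bytes, so the packed data is twice as long as the list of samples.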

For a CD, the values of the amplitudes are stored directly onto the CD as a series of pits that the laser in your optical drive can read and interpret. No compression is done on the data stream.


Since a CD can store up to 74 minutes of music, we can calculate the storage capacity of a CD: 74 minutes × 60 seconds per minute × 44,100 samples per second × two bytes per sample × two channels = 783,216,000 bytes, or about 747MB.

Furthermore, the I/O channel that the CD uses needs to be able to transfer 176kB of data per second to the digital audio processor (the one that reconstructs the analogue audio wave from the digital data and then feeds it through the amplifier to the speakers).
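The capacity and transfer-rate figures can be checked with a few lines of arithmetic. This is only a sketch of the calculation described above; the constant names are illustrative, and the megabyte figure assumes 1MB = 1,048,576 bytes.

```python
MINUTES = 74
SAMPLE_RATE = 44_100     # samples per second
BYTES_PER_SAMPLE = 2
CHANNELS = 2

# Bytes the drive must deliver every second to keep the audio playing.
bytes_per_second = SAMPLE_RATE * BYTES_PER_SAMPLE * CHANNELS

# Total bytes needed for a full 74-minute disc.
total_bytes = MINUTES * 60 * bytes_per_second

print(bytes_per_second)             # 176400
print(total_bytes)                  # 783216000
print(round(total_bytes / 2**20))   # 747
```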

Recording soundwaves

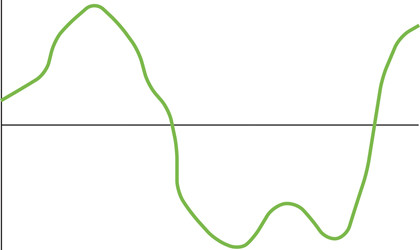

The reason for the sample rate and the amplitude measurement is fairly mundane. Suppose we're sampling the waveform shown in Figure 1.

If we sample at too low a rate, we may miss some peaks and troughs in the original audio and so the resulting waveform may sound completely different and muddy.

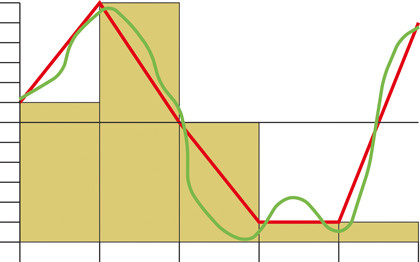

Figure 2 shows this scenario, where the resulting waveform in red looks quite different from the original. We therefore need to sample much more often. Given that the human ear (in general) can only hear tones up to about 20kHz in frequency, we should sample at a rate of at least twice that, or 40kHz, in order to properly capture the highs and lows of the audio wave at that frequency. With a fudge factor added just in case, the rate settled on was 44,100Hz.
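The cost of sampling too slowly can be shown numerically. The sketch below uses a deliberately low, hypothetical sample rate of 8,000Hz (not the CD rate) and a helper named `sample_cosine` invented for this illustration. A 7,000Hz tone is above half the sample rate, and its samples turn out to be indistinguishable from those of a 1,000Hz tone, so the original pitch cannot be recovered.

```python
import math

def sample_cosine(freq_hz, sample_rate_hz, num_samples):
    """Return the value of a cosine wave of freq_hz at each sampling tick."""
    return [
        math.cos(2 * math.pi * freq_hz * n / sample_rate_hz)
        for n in range(num_samples)
    ]

SAMPLE_RATE = 8_000   # hypothetical low rate, chosen to make the effect obvious

high = sample_cosine(7_000, SAMPLE_RATE, 16)   # above half the sample rate
low = sample_cosine(1_000, SAMPLE_RATE, 16)    # well below half the sample rate

# Compare the two sets of samples, allowing for floating-point rounding.
indistinguishable = all(
    math.isclose(h, l, abs_tol=1e-9) for h, l in zip(high, low)
)
print(indistinguishable)   # True
```

Sampling at 44,100Hz keeps everything up to about 20kHz safely below half the sample rate, which is why this confusion does not arise for audible tones on a CD.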

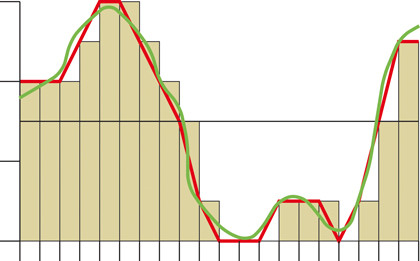

Figure 3 shows a different problem: the number of possible values for the amplitude is fairly small. From the original measured amplitude, the processor must choose the closest value it can record. Here we've got a fairly high sample rate, but the measurements of the amplitude are pretty coarse.

Again, the resulting waveform looks different from the original. The difference is perhaps a little more subtle, but it could still alter the sound pretty badly: highs might be higher than the original, for example, making the result more shrill, and subtle nuances in the music are lost.

Here, a different criterion comes into play: making the sample values fit into a whole number of bytes to help make the output DAC's job easier. One byte would be far too small for this (with only 256 different values for the amplitude), so the original designers decided on two bytes per sample.
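A rough way to see why one byte is too coarse is to measure how far a rounded sample can land from the true amplitude. The following sketch assumes amplitudes scaled to the range -1.0 to 1.0 and a sine wave as the test signal; `quantize` and `worst_error` are names made up for this example.

```python
import math

def quantize(x, num_bytes):
    """Round x (between -1.0 and 1.0) to the nearest level a signed sample can hold."""
    max_level = 2 ** (8 * num_bytes - 1) - 1   # 127 for one byte, 32,767 for two
    return round(x * max_level) / max_level

def worst_error(num_bytes, points=1000):
    """Largest gap between a sine wave and its quantized copy over one cycle."""
    worst = 0.0
    for i in range(points):
        x = math.sin(2 * math.pi * i / points)
        worst = max(worst, abs(x - quantize(x, num_bytes)))
    return worst

print(worst_error(1))   # error with one byte per sample
print(worst_error(2))   # error with two bytes per sample
```

With one byte the rounding error can approach 1/254 of full scale, while with two bytes it stays below 1/65,534, which is a far closer match to the original wave.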