5 ways AI is transforming how our cameras work

AI is making life easier for photographers and filmmakers

As TechRadar’s Cameras Editor, I’ve written about AI more than any other subject this year. AI-generated images are fooling judges and winning photography contests, AI is magically improving photo quality in the best photo editors, such as Topaz Photo AI, and AI image generation has arrived in the industry-leading photo editor Adobe Photoshop in the form of the Generative Fill tool.

AI is now a buzzword for the best cameras, too. It powers new features and performance improvements even in serious full-frame cameras like the new Sony ZV-E1. In our in-depth Sony ZV-E1 review, video producer Pete Sheath and I touch on some of the camera’s AI-powered features, which include Auto Tracking (not to be confused with subject tracking AF).

The ZV-E1 is the clearest nod towards a future where AI will increasingly be involved in how our cameras operate. So how is AI enhancing camera performance right now? Let’s take a look.

1. Subject detection autofocus

In 2023, some big camera brands are labeling subject-detection autofocus as AI, but the technology was around for years, under a different name, before AI started to dominate our news feeds: deep learning, or machine learning, autofocus.

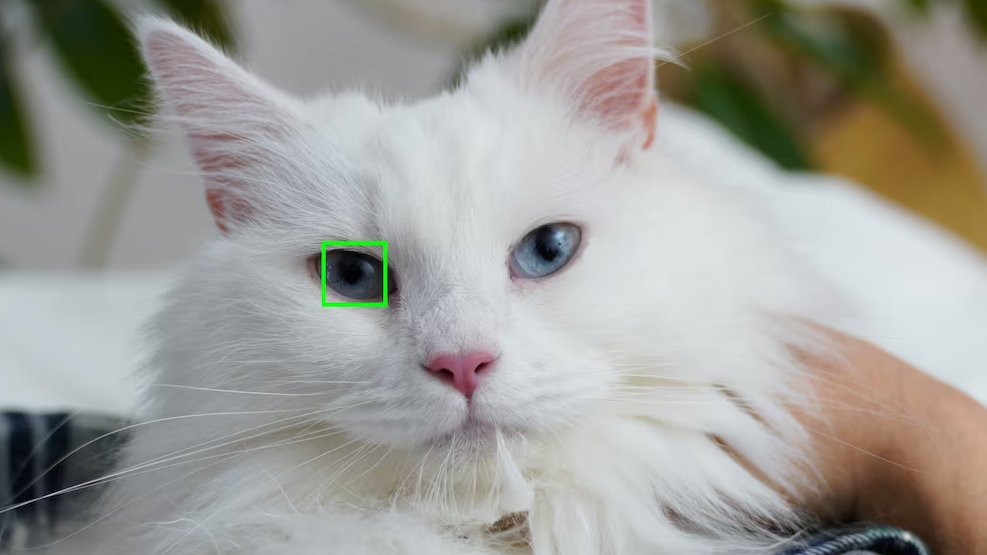

The deep learning that powers subject-detection autofocus is 'trained' using a pool of images, much like the best AI art generators. In the early days that pool was limited to human subjects, to help AF systems better recognize and track people for sharp focus. These systems offer face and eye detection, and even left or right eye detection, so you can pick which eye the camera focuses on.

Each camera generation benefits from an ever-growing pool of images to widen the subjects its deep learning can detect and improve its performance. In 2023, the range of subjects that the latest and greatest cameras can reliably track covers cats, dogs, birds, insects, planes, trains and cars, in addition to humans. The Canon EOS R3 can even recognize a motorcyclist wearing a helmet and reliably track them.
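Once a trained model has detected what's in the frame, the camera still has to decide where to put focus. The sketch below is a hypothetical illustration of that last step only, not any manufacturer's logic: the `Detection` record, the `PRIORITY` order and the `pick_focus_target` function are all assumptions. It prefers the most specific confidently detected target (an eye over a face, a face over a body) and honors a left/right eye preference, as described above.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Detection:
    """Hypothetical detector output; real cameras don't expose anything like this."""
    kind: str          # e.g. "eye_left", "eye_right", "face", "helmet", "body"
    confidence: float  # 0.0 to 1.0 score from the (frozen) detection model


# Assumed priority order: the most specific target found with confidence wins.
PRIORITY = ["eye", "face", "helmet", "body"]


def pick_focus_target(detections: List[Detection],
                      preferred_eye: str = "left",
                      min_confidence: float = 0.5) -> Optional[Detection]:
    usable = [d for d in detections if d.confidence >= min_confidence]
    for level in PRIORITY:
        if level == "eye":
            eyes = [d for d in usable if d.kind.startswith("eye_")]
            # Honor the left/right choice, but fall back to the other eye.
            pool = [d for d in eyes if d.kind == f"eye_{preferred_eye}"] or eyes
        else:
            pool = [d for d in usable if d.kind == level]
        if pool:
            return max(pool, key=lambda d: d.confidence)
    return None  # nothing recognized: fall back to conventional AF area modes
```

Raising `min_confidence` in this sketch makes the camera fall back from an uncertain eye to a more certain face, which is one plausible way to trade precision for reliability.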

Autofocus performance is so good in 2023 that as a photographer it can feel like you’re cheating.


What’s important to understand is that the knowledge behind deep learning (or ‘AI’) autofocus is hardwired when the camera ships and can’t be modified, even through a firmware update: the autofocus doesn't keep learning. If you take a picture of a gorilla today, it isn’t added to your camera’s training data, so the next time you photograph a gorilla your camera still won't recognize it as one.

Almost all of today’s cameras run subject detection on fixed hardware rather than updatable software, so the range of subjects your camera’s autofocus covers won't expand; if you want more, you’ll have to look elsewhere or wait for the next model. One camera that hopes to buck this trend is the software-based Alice Camera, which has yet to launch.

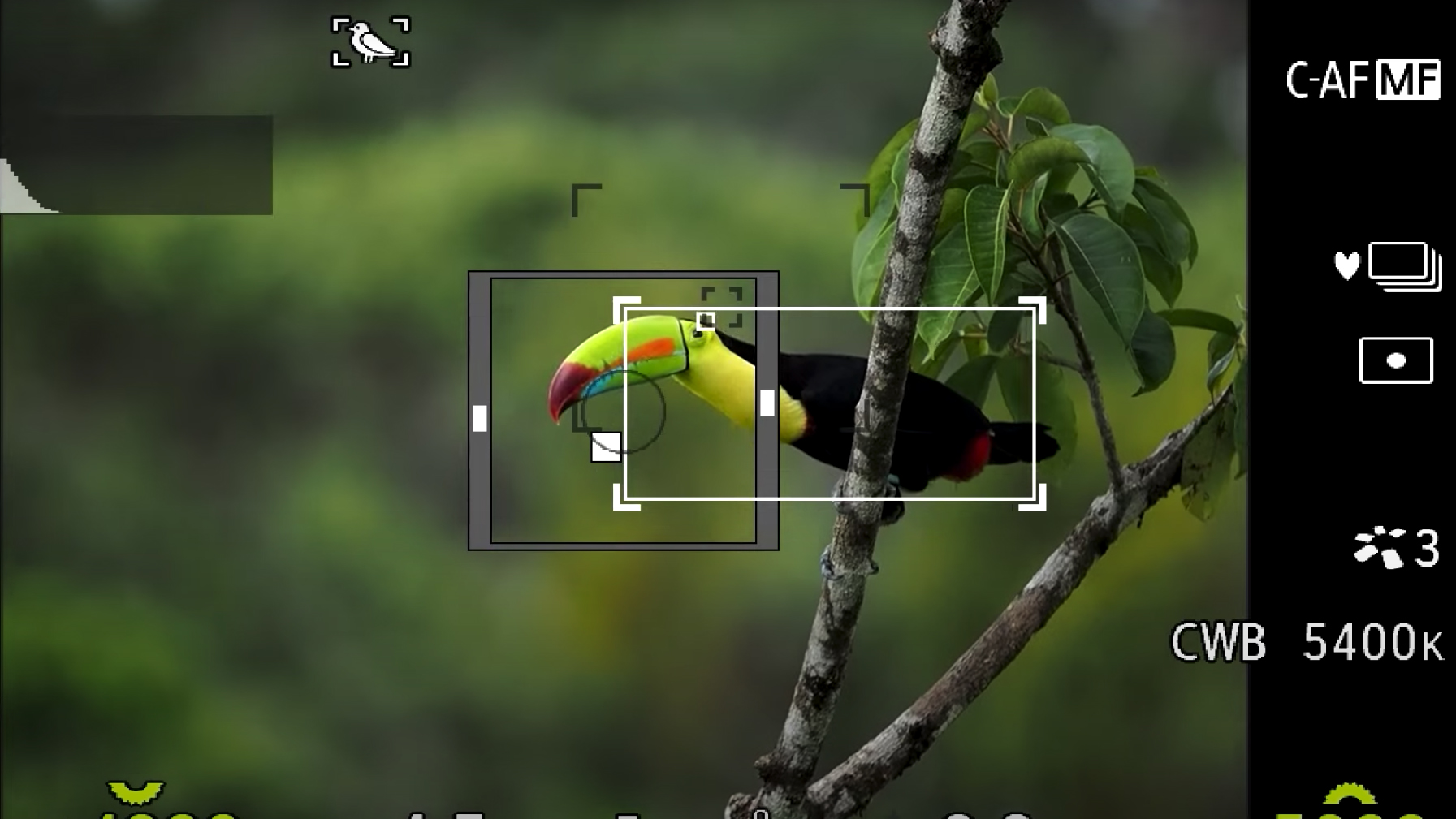

2. Auto Tracking with the Sony ZV-E1

The Sony ZV-E1 has a neat AI-powered Auto Tracking feature that offers three different-sized crops into the full image area: small, medium and large. The camera then convincingly follows a tracked subject as they move about within the full image frame. What you get as a result is the perception of a human-operated camera following a subject, even though the camera is static.
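Under the hood, this kind of feature amounts to a digital pan: a crop window slides around the full frame to keep the subject near its center. Sony hasn't published how Auto Tracking works, so what follows is a minimal sketch under stated assumptions: subject centers are assumed to come from a detector, and the `follow_subject` name, the `scale` crop fraction and the `smoothing` factor are hypothetical. Smoothing is what makes the result read as an operator easing the camera rather than snapping to each detection.

```python
from typing import List, Tuple


def follow_subject(subject_centres: List[Tuple[float, float]],
                   frame_w: float, frame_h: float,
                   scale: float = 0.5, smoothing: float = 0.2):
    """Return one (left, top, width, height) crop per frame that pans after the subject."""
    crop_w, crop_h = frame_w * scale, frame_h * scale
    cx, cy = subject_centres[0]  # start centred on the first detection
    crops = []
    for sx, sy in subject_centres:
        # Exponential smoothing: move only part of the way toward the subject each
        # frame, so the virtual camera eases into a pan instead of snapping.
        cx += smoothing * (sx - cx)
        cy += smoothing * (sy - cy)
        # Clamp so the crop window never leaves the full sensor frame.
        left = min(max(cx - crop_w / 2, 0.0), frame_w - crop_w)
        top = min(max(cy - crop_h / 2, 0.0), frame_h - crop_h)
        crops.append((left, top, crop_w, crop_h))
    return crops
```

A smaller `smoothing` value gives slower, more cinematic pans but lags further behind a fast subject, which mirrors the trade-off with fast-moving subjects noted in our field test.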

Our field test revealed that the effect feels genuine, although it is fallible. Because it relies on subject recognition, it proved less reliable in low light and with fast-moving subjects, but outdoors it was virtually flawless.

This tracking effect can be created in post production without the AI-powered mode, but the ZV-E1 makes the whole process much simpler and much quicker, and streamlines the edit for small crews. What’s more, with an Atomos monitor attached, you can dual-record the full-area image and the cropped tracking frame simultaneously, effectively giving you an A cam and B cam from your lone, static ZV-E1.

3. Video aperture-racking

Another AI-powered Sony ZV-E1 feature is what's best described as aperture-racking, which I’ve found pretty reliable. It works by subject recognition, and automatically switches between a wide aperture with shallow depth of field that makes a single subject stand out, and a small aperture with extensive depth of field to keep multiple subjects in sharp focus.
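Sony doesn't document how the ZV-E1 picks its aperture, but the underlying optics are standard. The following is a hypothetical sketch (the `rack_aperture` function, the f-stop list and the 0.03mm full-frame circle of confusion are all assumptions) that uses the usual thin-lens approximation for the hyperfocal distance, $H \approx f^2 / (N c)$. For a single subject it opens up fully; for several, it solves $1/d_{near} = 1/s + 1/H$ and $1/d_{far} = 1/s - 1/H$, which puts the focus distance $s$ at the harmonic mean of the nearest and farthest subjects and gives $H = 2 d_{near} d_{far} / (d_{far} - d_{near})$, then picks the widest standard stop that covers everyone.

```python
# Nominal full-stop f-numbers (assumed list; real lenses also offer third stops).
F_STOPS = [1.4, 2.0, 2.8, 4.0, 5.6, 8.0, 11.0, 16.0, 22.0]


def rack_aperture(subject_distances_mm, focal_mm, widest=1.4, narrowest=22.0,
                  coc_mm=0.03):
    """Return (f_number, focus_distance_mm) keeping every subject within depth of field."""
    near, far = min(subject_distances_mm), max(subject_distances_mm)
    if len(subject_distances_mm) == 1 or near == far:
        # One subject: shallowest depth of field to make it stand out.
        return widest, near
    # Thin-lens approximation: focus at the harmonic mean of near and far subjects.
    focus = 2 / (1 / near + 1 / far)
    hyperfocal = 2 * near * far / (far - near)
    needed = focal_mm ** 2 / (hyperfocal * coc_mm)  # N = f^2 / (H * c)
    for stop in F_STOPS:
        if widest <= stop <= narrowest and stop >= needed:
            return stop, focus
    return narrowest, focus  # can't cover everyone: stop down as far as allowed
```

For example, with a 50mm lens and two people at 2m and 3m, this sketch focuses at 2.4m and needs about f/6.9, so it chooses f/8; two people standing close together at 2m and 2.1m can stay at f/1.4.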

Aperture racking is the quickest technique for scenes where the number of people in the shot changes, ensuring the best balance between shallow depth of field and critical focus for every subject. Having it as an automatic, AI-powered in-camera mode takes another task off the camera operator's hands. All in all, a one-person crew can achieve a lot with the ZV-E1.

4. Computational photography

Another set of in-camera AI tools that makes life easier for creators goes under the name computational modes. The OM System OM-1’s computational modes include focus stacking, which combines multiple images shot at different focus distances into one image for greater depth of field – a technique widely used for macro photography.

Again, you could take these pictures individually, shifting the focus distance incrementally between each one, and then combine them in editing software, but AI expedites the process, so you get a stacked image in-camera at the press of a button. Computational focus stacking isn’t perfect (I’ve regularly noticed a halo effect with brightly lit, high-contrast subjects), but it does make the process much easier.
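OM System doesn't publish its merge algorithm, so here is a textbook version of focus stacking as a minimal sketch: for each pixel, keep the value from whichever frame is locally sharpest, measured by the absolute Laplacian (local contrast). It assumes the frames are already aligned, the same size, and greyscale lists of lists; the function names are hypothetical, and pure Python is used only for readability.

```python
from typing import List

Image = List[List[float]]


def laplacian_energy(img: Image, r: int, c: int) -> float:
    """Absolute 4-neighbour Laplacian: high where the frame is locally sharp."""
    h, w = len(img), len(img[0])
    centre = img[r][c]
    total = 0.0
    for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        rr = min(max(r + dr, 0), h - 1)  # replicate edge pixels at the borders
        cc = min(max(c + dc, 0), w - 1)
        total += img[rr][cc] - centre
    return abs(total)


def focus_stack(frames: List[Image]) -> Image:
    """Merge aligned frames by taking each pixel from its sharpest frame."""
    h, w = len(frames[0]), len(frames[0][0])
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            best = max(frames, key=lambda f: laplacian_energy(f, r, c))
            out[r][c] = best[r][c]
    return out
```

Naive per-pixel selection like this can flip between frames right at high-contrast edges, which is one way halos can appear; more careful implementations smooth the selection map or blend frames instead of switching abruptly.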

The same camera also offers Live ND, which is a bit like Motion Mode on the latest Google Pixel handsets: it replicates the long-exposure effect popular in landscape photography by blurring moving subjects or elements, such as water. High Res Shot, High Dynamic Range… all of these computational modes let modern cameras do more than was possible just a few years ago.
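The general principle behind this kind of long-exposure simulation can be sketched by averaging a burst of frames; this is an assumption about the technique in general, not OM System's or Google's actual pipeline. Averaging equally exposed frames keeps overall brightness the same while anything that moves is smeared across its path, and static areas stay sharp. Assuming the frames are aligned (say, on a tripod) and shot back to back with no gaps, averaging N of them approximates a shutter N times longer, the same as an ND filter of log2(N) stops. The function names are hypothetical.

```python
import math
from typing import List

Image = List[List[float]]


def simulate_long_exposure(frames: List[Image]) -> Image:
    """Average aligned, equally exposed frames pixel by pixel."""
    n = len(frames)
    h, w = len(frames[0]), len(frames[0][0])
    return [[sum(f[r][c] for f in frames) / n for c in range(w)] for r in range(h)]


def nd_equivalent_stops(n_frames: int) -> float:
    """N gapless frames stretch the effective shutter time N-fold: log2(N) stops."""
    return math.log2(n_frames)
```

Averaging 16 gapless frames in this sketch is therefore comparable to shooting through a 4-stop ND filter.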

5. Improved image processing

This isn't strictly an in-camera AI tool, but earlier this year Canon unveiled neural network technology for its cloud-based image.canon app. It uses deep-learning image processing to address image defects such as noise, false color/moiré and lens softness, going well beyond standard in-camera image processing.

Where standard in-camera image processing applies an edit such as noise reduction on a pixel-by-pixel level, AI image processing analyzes the bigger picture, detecting subjects and applying different enhancements to different areas of the same image to maximize detail. Canon describes the noise-reduction function of its new tech like this: “In photographic terms, it equates to reducing the ISO setting by about two values without affecting the exposure.”
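Canon hasn't published how its neural network processing works, so the snippet here is only a hypothetical illustration of the contrast described above: a pixel-by-pixel filter uses the same strength everywhere, whereas region-aware processing chooses a strength per area. The region labels are assumed to come from a subject detector, a simple box blur stands in for a real denoiser, and all names are made up for this example.

```python
from typing import Dict, List


def box_blur_pixel(img: List[List[float]], r: int, c: int, radius: int) -> float:
    """Mean of the (2*radius+1)^2 neighbourhood, clipped at the image borders."""
    h, w = len(img), len(img[0])
    vals = [img[rr][cc]
            for rr in range(max(r - radius, 0), min(r + radius + 1, h))
            for cc in range(max(c - radius, 0), min(c + radius + 1, w))]
    return sum(vals) / len(vals)


def region_aware_denoise(img: List[List[float]], labels: List[List[str]],
                         radius_for_label: Dict[str, int]) -> List[List[float]]:
    """Smooth each pixel with a strength chosen by the region it belongs to."""
    h, w = len(img), len(img[0])
    return [[box_blur_pixel(img, r, c, radius_for_label[labels[r][c]])
             for c in range(w)] for r in range(h)]
```

With, say, `{"subject": 0, "sky": 2}`, a subject's fine texture is left untouched while a flat sky gets strong smoothing; a real system would use far better filters, but the per-region decision is the point.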

It’s a little like how the Topaz Photo AI editor can magically improve image quality, except that Canon's version links directly to Canon cameras via Canon’s own apps. Whether this level of correction can be built directly into a Canon camera and its processor remains to be seen.

What’s next for in-camera AI?

We can look to the latest generation of smartphones for the possible ways that AI might continue improving how cameras operate. Some improvements will pertain to the kind of images that our cameras are capable of producing, while others will be at the operational level, making life easier for photographers and filmmakers.

It would be neat to have the smartphone portrait-mode effect available for cheaper, slower camera lenses that don't quite offer the shallow depth of field you want, such as an 18-55mm F3.5-5.6 kit lens. And what's stopping AI subject detection from being used to power real-time prompts that tell you how to compose a scene better, based on what it sees in the shot? Whatever comes next, the AI imaging revolution shows no signs of slowing down.
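As a speculative illustration of such a composition prompt, here is a sketch built on one classic heuristic, the rule of thirds. It assumes the subject's position is already known from AF subject detection, in normalized coordinates with (0, 0) at the top-left; the `thirds_hint` function, the tolerance, and the choice of heuristic are all hypothetical, not a feature any camera currently ships.

```python
def thirds_hint(subject_x: float, subject_y: float, tolerance: float = 0.05) -> str:
    """Suggest how to re-frame so the subject sits near a rule-of-thirds point."""
    points = [(x, y) for x in (1 / 3, 2 / 3) for y in (1 / 3, 2 / 3)]
    tx, ty = min(points, key=lambda p: (p[0] - subject_x) ** 2 + (p[1] - subject_y) ** 2)
    dx, dy = tx - subject_x, ty - subject_y
    if abs(dx) <= tolerance and abs(dy) <= tolerance:
        return "hold"
    hints = []
    # Panning left shifts the scene right in the frame; tilting up shifts it down.
    if dx > tolerance:
        hints.append("pan left")
    elif dx < -tolerance:
        hints.append("pan right")
    if dy > tolerance:
        hints.append("tilt up")
    elif dy < -tolerance:
        hints.append("tilt down")
    return ", ".join(hints)
```

A subject near the left edge at (0.1, 0.3) yields "pan left" here, nudging it toward the left-hand thirds line.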

Tim is the Cameras Editor at TechRadar. He has more than 15 years of experience in the photo and video industry, most of them in tech journalism. Through his time as Deputy Technical Editor at Amateur Photographer, as a freelancer, and subsequently as an editor at TechRadar, Tim has built deep technical knowledge and practical experience with cameras, educating others through news, reviews and features. He’s also worked in video production for Studio 44, with clients including Canon, and volunteers his spare time as a consultant for a non-profit, diverse stories team based in Nairobi. Tim is curious, a keen creative, an avid footballer and runner, and a moderate flat white drinker who has lived in Kenya and believes we have much to enjoy and learn from each other.