The OM System OM-1 shows computational tricks are the future of mirrorless cameras

Opinion: Computational trickery isn't just for smartphones

I recently tested the OM System OM-1. It's a serious bit of kit for serious photographers, with a rugged DSLR-style build, an impressive stacked sensor, and excellent lenses. It's also a flagship camera that will set you back $2,200 / £2,000 / AU$3,300 body-only.

Yet something altogether different snuck into the headlines about this new camera – its computational modes. Surely computational photography is for the smaller sensors of supposedly inferior smartphones? Not so.

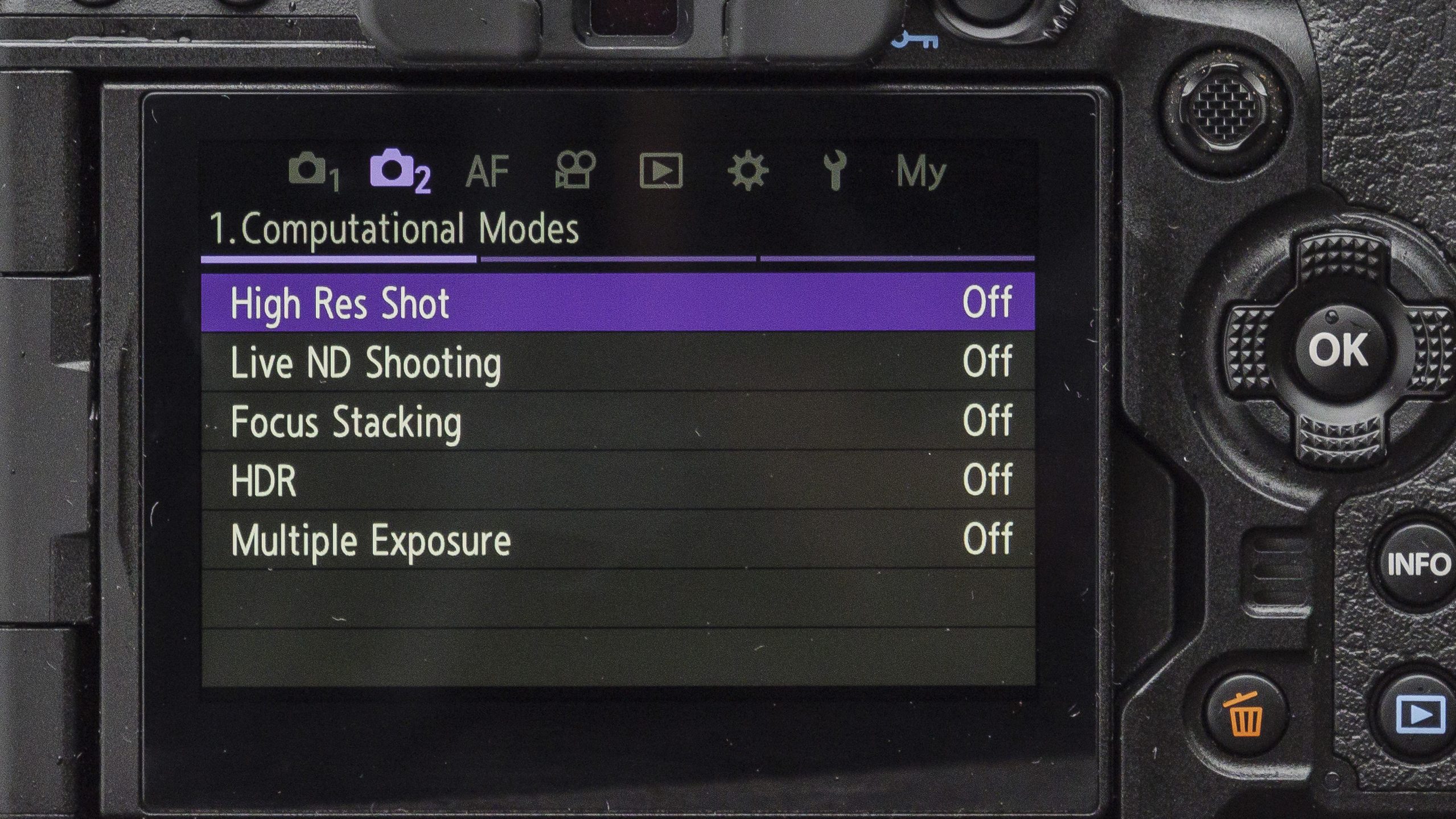

Not only do 'Computational Modes' now command a dedicated space within the OM-1's menu, the handling and output of these modes are improving all the time. It got me thinking – is this a nod to the future for Micro Four Thirds, or for mirrorless cameras in general?

So join my thought train as I unpack what computational photography is, how it's applied today, and what we might expect to come in 'proper' cameras.

What's computational photography again?

We all know about Portrait Mode, which has long been the poster child for smartphone computational photography. Without it, my Google Pixel 4a would be incapable of shooting its flattering portraits with blurry backgrounds.

It works by using edge detection, and sometimes depth-mapping, to distinguish a subject from its background and then computationally apply a uniform blur to those surroundings to make your subject pop. While fallible, the Portrait Mode effect is magical and brings big-camera power to our pockets.
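To make that idea concrete, here is a minimal sketch of the "mask the subject, blur the rest" step in plain Python. It assumes the subject mask already exists (in a real phone that would come from edge detection or a depth map), and the names `box_blur` and `fake_portrait` are purely illustrative rather than anything Google or OM System actually ships.

```python
from typing import List

Image = List[List[float]]  # grayscale rows, values 0.0-1.0
Mask = List[List[bool]]    # True where the pixel belongs to the subject


def box_blur(image: Image, radius: int) -> Image:
    """Uniform blur: each pixel becomes the mean of its neighbourhood."""
    height, width = len(image), len(image[0])
    blurred = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            total, count = 0.0, 0
            # Clamp the averaging window at the image borders.
            for ny in range(max(0, y - radius), min(height, y + radius + 1)):
                for nx in range(max(0, x - radius), min(width, x + radius + 1)):
                    total += image[ny][nx]
                    count += 1
            blurred[y][x] = total / count
    return blurred


def fake_portrait(image: Image, subject_mask: Mask, radius: int = 1) -> Image:
    """Keep subject pixels sharp and use the blurred copy everywhere else."""
    blurred = box_blur(image, radius)
    return [
        [image[y][x] if subject_mask[y][x] else blurred[y][x]
         for x in range(len(image[0]))]
        for y in range(len(image))
    ]


# A 3x3 scene: one subject pixel in the centre, a busy background around it.
scene = [
    [0.0, 1.0, 0.0],
    [1.0, 0.9, 1.0],
    [0.0, 1.0, 0.0],
]
mask = [
    [False, False, False],
    [False, True, False],
    [False, False, False],
]
portrait = fake_portrait(scene, mask)
# The centre pixel keeps its original value; the background is smoothed out.
```

The sketch also hints at why the effect is fallible: everything hinges on the mask, so a stray hair or a pair of glasses that ends up on the wrong side of it gets blurred along with the background.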

If I upgraded from my Pixel 4a to one of Google's current crop, I'd get Motion Mode too, which adds motion blur to an image, much like the effect you get from an ND (neutral density) filter in long-exposure landscapes. You can work things the other way too, by removing unwanted motion blur. Smart stuff that's getting ever smarter.

But while we tend to associate computational photography with smartphones, Olympus (now OM System) was in fact a pioneer, broadening the application of this tech in its Micro Four Thirds cameras. And the best example of this is its new OM System OM-1.

Computational photography allows a camera to do things that would otherwise be impossible due to factors like sensor size limits. And while the Micro Four Thirds sensor of the OM System OM-1 is much larger than the one you'll find in a smartphone like the Google Pixel 6, it still looks up to the larger-still full-frame.

The OM-1's 20MP resolution pales in comparison to the full-frame Sony A7R IV's 61MP. So to increase its image size, the OM-1 can use its 'High Res Shot' mode to combine multiple images into one for a final output of up to 80MP.

Phones use multiple lenses, software and AI to apply computational photography, whereas cameras like the OM-1 usually make use of multi-shot modes, combining several quick-fire pictures into one. Same theory, but with nuanced methods and purposes.

There's also a distinction in how computational photography is applied. A smartphone automatically builds computational photography like HDR into the handling of its camera (or at least it's one click away), whereas in a camera the computational modes feel somewhat separate from the general operation, like something you have to actively choose.

What's consistent between smartphone and camera tech is that hardware improvements have slowed down and the most exciting developments lie within the computational world and the power behind it. Let's look at some more examples.

Peak Olympus

The OM-1 now has a dedicated menu for 'Computational Modes', pointing to the growing importance of this type of photography in mirrorless cameras.

The list of modes includes High Res Shot, Live ND, HDR, Focus Stacking and Multiple Exposure. While there are no brand-new computational modes brought to the table this time, the handling and performance of these in-camera tricks really have been improved.

Want that motion blur effect? Ditch your ND filters and try Live ND, which can now go up to 6EV (or six stops). Paired with the OM-1's amazing image stabilisation, you won't even need a tripod to keep what's still in the scene nice and sharp.
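For a sense of what six stops means in practice, here is a rough back-of-the-envelope sketch. Each stop halves the light, so 6EV is a 64x reduction. The frame-averaging part is an assumption about how an ND-style effect can be simulated in general, not a description of the OM-1's internals, and the function names are hypothetical.

```python
def nd_factor(stops: float) -> float:
    """Each stop (1EV) halves the light, so N stops cut it by 2**N."""
    return 2.0 ** stops


def equivalent_shutter(base_shutter_s: float, stops: float) -> float:
    """Shutter time needed for the same exposure behind an ND filter."""
    return base_shutter_s * nd_factor(stops)


def average_frames(frames):
    """Blend equally exposed frames by per-pixel mean (assumed approach)."""
    count = len(frames)
    return [sum(pixels) / count for pixels in zip(*frames)]


# 6EV turns a 1/64 s exposure into a full second.
print(nd_factor(6))                   # 64.0
print(equivalent_shutter(1 / 64, 6))  # 1.0

# A one-row scene: a highlight moving left to right across four frames.
moving_highlight = [
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
]
# Averaging smears the highlight evenly along its path, like a long exposure.
print(average_frames(moving_highlight))  # [0.25, 0.25, 0.25, 0.25]
```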

Disappointed that you're not getting detail in the bright and dark parts of your picture? Go for HDR to increase dynamic range up to ±2EV. You'll even get a bump in dynamic range from the High Res Shot mode, which is primarily designed to increase image size and can deliver sharp 50MP images handheld.

What's behind the improvements? Processing power. The OM-1 has a processor that's three times more powerful than the one in the E-M1 III, plus a stacked sensor that doubles sensor readout speed. Computational mode pictures are processed at least twice as quickly.

Personally, I don't mind waiting a short while for that High Res Shot picture to pop up on the camera screen. But what's more important to me is the flexibility at the capture stage, and its impact on the final image. Right now there are still real-world application limits to computational photography.

Computational dreams

Blending multiple 20MP images into one takes time at the capture stage, meaning High Res Shot images are currently not possible when there's movement in your scene, because of the ghosting effect. This could be movement like trees swaying in the wind, or a person walking.
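A toy example shows why movement is such a problem for multi-shot blending. The sketch below merges three frames of a one-row scene and flags the pixels where the frames disagree. It is an illustration under simple assumptions (a plain per-pixel mean and a hypothetical `threshold` value), not OM System's actual merging algorithm.

```python
def blend(frames):
    """Per-pixel mean of the frames, as a stand-in for multi-shot merging."""
    return [sum(pixels) / len(frames) for pixels in zip(*frames)]


def ghost_mask(frames, threshold=0.2):
    """True where the frames disagree by more than `threshold`."""
    return [max(pixels) - min(pixels) > threshold for pixels in zip(*frames)]


# One row of a scene shot three times: static apart from a dark branch (0.1)
# that sways from pixel 1 to pixel 2 against a bright sky (0.9).
shots = [
    [0.5, 0.1, 0.9, 0.5],
    [0.5, 0.1, 0.9, 0.5],
    [0.5, 0.9, 0.1, 0.5],
]
merged = blend(shots)
ghosts = ghost_mask(shots)

print(ghosts)  # [False, True, True, False]
# Pixels 1 and 2 end up as a muddy mix of branch and sky: that's the ghost.
```

The shorter the gap between frames, the less the branch has time to move, which is why faster readout and processing matter so much here.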

Could I expect an even quicker sensor readout and processor to power through multi-shot images at a capture speed that eliminates ghosting? If so, this could render resolution limits a thing of the past.

How about the same theory applied to the OM System's handling of HDR? Could HDR be applied automatically instead (with an opt-out), much like in a smartphone? The OM-1 is as close to a smartphone experience as you get in a DSLR-style camera, but the computational element to it is still optional.

And even though computational photography is applied differently in OM System cameras than it is for smartphones – for example, Focus Stacking increases depth of field for disciplines like macro photography – what about new modes?

Could a new Portrait Mode be applied to slower OM System lenses that are less capable of blurring backgrounds? Or could in-camera editing gain new elements, such as post-capture blur effects, à la Google?

What about a Night Mode section – what would be the method for brighter, cleaner-looking images after the sun's gone down? Could astrophotography modes fall within this? Auto star trails, anyone?

The oh-so-capable OM-1 powers up from its predecessors, while other existing cameras like the Nikon Z9 are quicker still. But hopefully in future-generation OM System cameras we'll see even greater power, applied computationally.

If so, sensor size might well become irrelevant. The whole experience at the handling and capture stage could be transformed. My thought train has several more stops in every direction, and I'll leave it to you which route yours takes.

Tim is the Cameras editor at TechRadar. He has enjoyed more than 15 years in the photo and video industry, with most of those in the world of tech journalism. During his time as Deputy Technical Editor with Amateur Photographer, as a freelancer and subsequently editor at TechRadar, Tim has developed a deeply technical knowledge and practical experience with cameras, educating others through news, reviews and features. He's also worked in video production for Studio 44 with clients including Canon, and volunteers his spare time to consult for a non-profit, diverse stories team based in Nairobi. Tim is curious, a keen creative, avid footballer and runner, and moderate flat white drinker who has lived in Kenya and believes we have much to enjoy and learn from each other.