Microsoft shows AI journalism at its worst with Little Mix debacle

Opinion: Microsoft can't get away with such avoidable errors

With a global pandemic keeping us pretty occupied for the past few months, you may have allowed yourself to forget about that other apocalyptic danger – that is, unchecked artificial intelligence. Unfortunately, an AI program being put to use at Microsoft's MSN.com has brought it back into the spotlight.

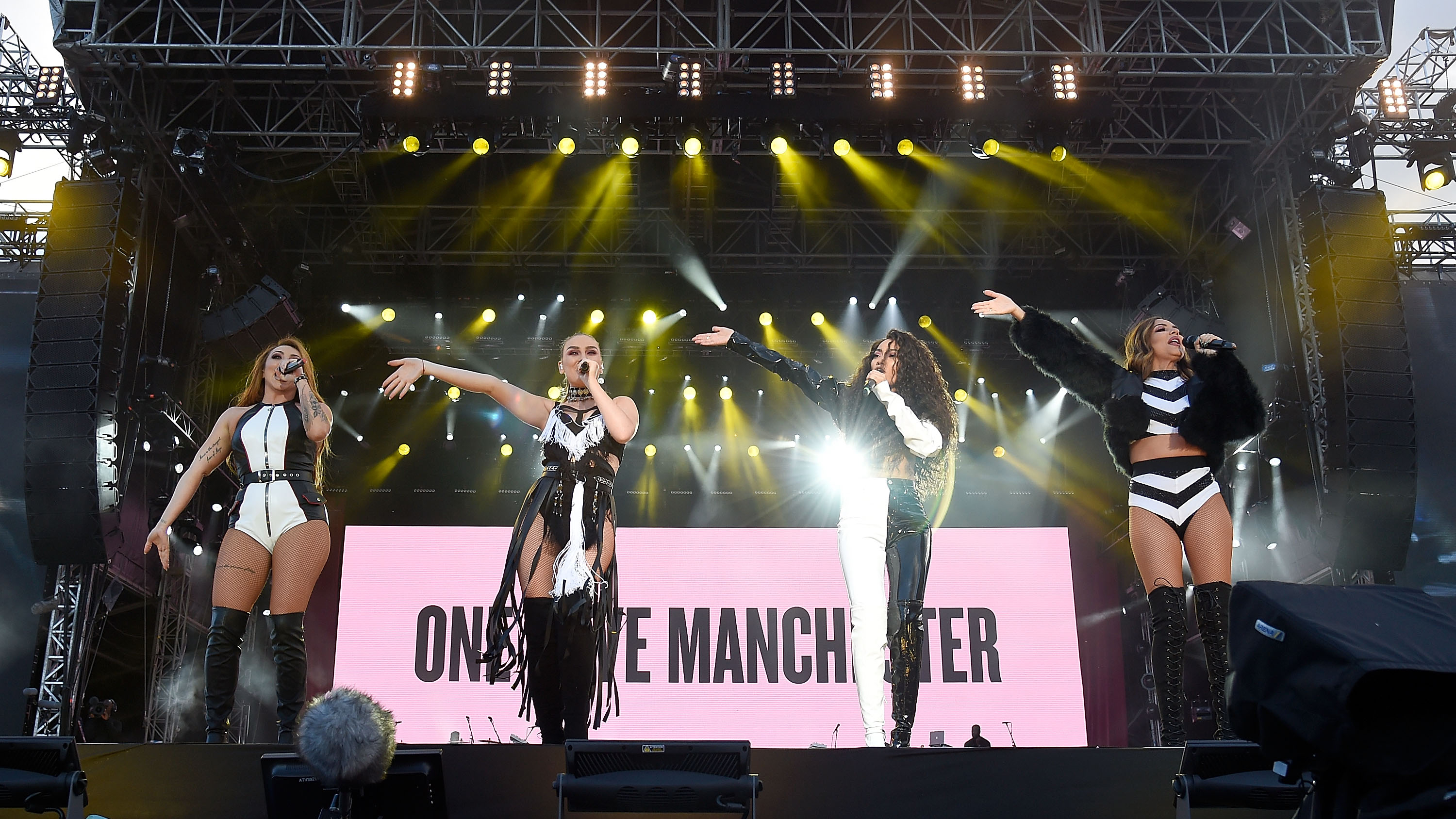

The story centers on an article published on MSN.com about Little Mix star Jade Thirlwall, which incorrectly used a photo of another mixed-race band member, Leigh-Anne Pinnock.

This would be a bad enough mistake for a human editor to make – not just because of its terrible timing amid worldwide protests against racial injustice – but it takes on a whole new flavor when the automated processes behind this article come to light.

MSN.com doesn’t do its own reporting, preferring to repurpose articles from across the web and split resulting ad revenue with the original publisher, whose piece has been able to reach a wider audience. It may not be surprising, then, that Microsoft thought a lot of this process could be automated – not only sacking hundreds of staff during a pandemic, but also implementing an AI editor that automatically published news stories on MSN.com without any human oversight.

Not so intelligent after all

Racial bias in AI is a well-documented issue: programs trained on huge data sets often duplicate the human prejudices in that data, which, under the uneasy guise of algorithmic ‘objectivity’, can easily go overlooked by AI-focused organizations. (For a truly dystopian example, check out this psychotic AI trained with data sets from Reddit.)

But this error shows what can happen when an unfeeling AI is trusted with editorial oversight, something that requires empathy beyond an algorithmic sense of reader interest.

According to Microsoft, the issue wasn't in facial recognition so much as the AI reordering photos from the original article: "In testing a new feature to select an alternate image, rather than defaulting to the first photo, a different image on the page of the original article was paired with the headline of the piece. This made it erroneously appear as though the headline was a caption for the picture."


The most disconcerting thing about this story, though, is the extent of the role that Microsoft felt comfortable giving to an AI – with the ability to automatically publish stories without human curation, meaning no one had the chance to prevent this mistake before it reached MSN.com’s readership.

We’ve also learned that, while human staff – a term we can’t believe we’re having to use – were quick to delete the article, the AI still had the ability to overrule them and publish the same story again. In fact, that’s basically what happened – with the AI auto-sharing news articles concerning its own errors, viewing them as relevant to MSN.com readers.

When contacted, a Microsoft rep replied that, “As soon as we became aware of this issue, we immediately took action to resolve it and have replaced the incorrect image.” We've yet to hear anything about these AI processes changing, though.

While AI software may seem like a handy replacement for newsroom roles, we’ve seen that AI applications aren’t ready to take editorial responsibility away from human hands – and attempting to rush to an AI-driven future is only going to hurt the reputation of both Microsoft and online reporting further.

Via The Guardian

Henry is a freelance technology journalist, and former News & Features Editor for TechRadar, where he specialized in home entertainment gadgets such as TVs, projectors, soundbars, and smart speakers. Other bylines include Edge, T3, iMore, GamesRadar, NBC News, Healthline, and The Times.