The future of PC graphics

We talk next generation graphics cores with AMD

What's next for graphics? Why, Graphics Core Next, of course. Thanks, AMD, for that nicely palindromic way to start off a feature.

And also for talking about the successor to the current generation of Radeon graphics cards, which is due sometime next year.

The unveiling of GCN took place at June's Fusion Developer Summit. It's the first complete architectural overhaul of GPU technology AMD has risked since the launch of Vista.

That also, incidentally, makes it the first totally new graphics card design for AMD that isn't based on work started by ATI before it was purchased.

Vista, and specifically DirectX 10, called for graphics cards to support a fully programmable shader pipeline.

That meant doing away with traditional bits of circuitry that dealt with specific elements of graphics processing – like pixel shaders and vertex shaders – and replacing them with something more flexible that could do it all: the unified shader (see "Why are shaders unified?", next page).

Schism

During the birth of DX10 class graphics, there was something of a schism between Nvidia and AMD.

To simplify: the former opted for an interpretation of unified shader theory in its G80 GeForce chips that was quite flexible. Place a few hundred very simple processors in a large array, and send them one calculation (or, in some circumstances, two) apiece to work on until all the work is done.

It's a method that creates a bit of a nightmare for the set-up engine, but it's very flexible and, for well-written code that takes advantage of the way processors are bunched together on the board, dynamite.

In designing the G80 and its successors, Nvidia had its eye on applications beyond graphics. Developers could create GPGPU applications for GeForce cards written in C and more recently C++.

AMD/ATI, meanwhile, focused on the traditional requirements for a graphics card. Its unified shaders worked by combining operations into 'Very long instruction words' (VLIW) and sending them off to be processed in batches.

The basic unit in an early Nvidia DX10 card was a single 'scalar' processor, arranged in batches of 16 for parallel processing.

Inside an AMD one, it was a four-way 'vector' processor with a fifth unit for special functions. Hence one name for the Radeon architecture: VLIW5. While the set-up sounds horrendous, it was actually designed to be more efficient.

The important point being that a pixel colour is defined by mixing red, green, blue and alpha (transparency) channels. So the R600 processor – which was the basis of the HD2xxx and HD3xxx series of cards – was designed to be incredibly efficient at working out those four values over and over again.
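To see why that suits pixels, here is a toy Python model of filling a five-slot instruction bundle. It is only a sketch: `pack_bundles`, `utilisation` and the op lists are hypothetical names made up for illustration, every slot is treated as interchangeable (the real fifth slot was reserved for special functions), and it bears no relation to AMD's actual shader compiler.

```python
SLOTS = 5  # four vector lanes plus one special-function slot, as in VLIW5


def pack_bundles(ops):
    """Greedily pack ops into bundles of up to SLOTS independent instructions.

    `ops` is a list of (name, dependencies) pairs in program order. An op may
    only join a bundle once everything it depends on finished in an earlier one.
    """
    done = set()
    pending = list(ops)
    bundles = []
    while pending:
        bundle = []
        for name, deps in pending:
            if len(bundle) < SLOTS and set(deps) <= done:
                bundle.append(name)
        if not bundle:
            raise ValueError("circular or unsatisfiable dependency")
        done.update(bundle)
        pending = [(n, d) for n, d in pending if n not in done]
        bundles.append(bundle)
    return bundles


def utilisation(bundles):
    """Fraction of available slots that actually did work."""
    used = sum(len(b) for b in bundles)
    return used / (len(bundles) * SLOTS)


# A pixel operation: the four channels are independent of one another.
pixel_ops = [("r", []), ("g", []), ("b", []), ("a", [])]

# A dependent chain (hypothetical): each step needs the previous result.
chain_ops = [("s0", []), ("s1", ["s0"]), ("s2", ["s1"]), ("s3", ["s2"])]

pixel_bundles = pack_bundles(pixel_ops)
chain_bundles = pack_bundles(chain_ops)
```

In this model the RGBA work lands in a single bundle with four of the five slots busy, while the dependent chain needs four bundles with one busy slot each. The design pays off when the work looks like pixels.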

Sadly, those early R600 cards weren't great, but with time and tweaking AMD made the design work, and work well.

The HD4xxx, HD5xxx and HD6xxx cards were superlative, putting out better performance and requiring less power than Nvidia peers. Often cheaper too. But despite refinements over the last four years, the current generation of GeForce and Radeon chips are still recognisable as part of the same families as those first G80 and R600.

There have been changes to the memory interface (goodbye power hungry Radeon ring bus) and vast increases to the number of execution cores (1,536 on a single Radeon HD6970 compared to 320 on an HD2900XT), but the major change over time has been separating out the special functions unit from the processor cores.

Graphics Core Next, however, is a completely new design. According to AMD, its existing architecture is no longer the most efficient for the tasks that graphics cards are called to do.

New approach

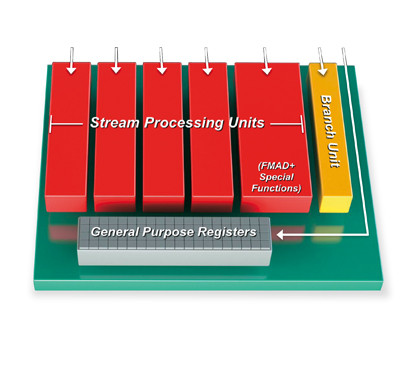

FUTURE GRAPHICS: VLIW5 has four vector processing units: one each for R, G, B and alpha

Proportionally, the number of routines for physics and geometry being run on the graphics card has increased dramatically in a typical piece of game code, calling for a more flexible processor design than one geared up primarily for colouring in pixels.

As a result, the VLIW design is being abandoned in favour of one that can be programmed in C and C++.

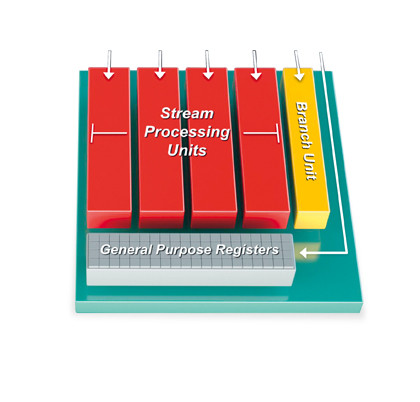

The basic unit of GCN is a 16-wide array of execution units arranged for SIMD (single instruction, multiple data) operations. If all that sounds similar to G80 and its successors, that's because it is.

Cynically, this could be seen as a tacit acknowledgement that Nvidia had it right all along, and there's no doubt that AMD is looking at GPGPU applications for its next generation of chips. But there's more to it than that.

Inside GCN, these SIMD processors are batched together in groups of four to create a 'compute unit' or CU. They are, functionally, still four-way vector units (perfect for RGBA instructions) but are also coupled to a scalar processor for one-off calculations that can't be completed efficiently on the SIMD units.
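The layout described above can be sketched in a few lines of Python. This is a loose illustration, not a description of the hardware: the `Simd` and `ComputeUnit` classes and their methods are invented for the example, and for brevity the toy runs the same operation on all four arrays at once, which is an assumption rather than anything AMD has stated.

```python
from dataclasses import dataclass, field

SIMD_WIDTH = 16    # lanes per SIMD array, as described in the article
SIMDS_PER_CU = 4   # SIMD arrays grouped into one compute unit


@dataclass
class Simd:
    width: int = SIMD_WIDTH

    def execute(self, op, data):
        """Apply one instruction (op) to every lane's data element."""
        if len(data) != self.width:
            raise ValueError(f"expected {self.width} elements, got {len(data)}")
        return [op(x) for x in data]


@dataclass
class ComputeUnit:
    simds: list = field(
        default_factory=lambda: [Simd() for _ in range(SIMDS_PER_CU)]
    )

    @property
    def vector_lanes(self):
        return sum(s.width for s in self.simds)

    def execute_vector(self, op, data):
        """Split the data across the SIMDs; each runs op on its own slice."""
        out = []
        for i, simd in enumerate(self.simds):
            chunk = data[i * simd.width:(i + 1) * simd.width]
            out.extend(simd.execute(op, chunk))
        return out

    def execute_scalar(self, op, value):
        """A one-off calculation, handled by the scalar processor."""
        return op(value)


cu = ComputeUnit()
doubled = cu.execute_vector(lambda x: x * 2, list(range(cu.vector_lanes)))
loop_bound = cu.execute_scalar(lambda n: n + 1, 41)
```

Four arrays of 16 lanes give the toy CU 64 vector lanes in total, with the scalar path kept separate so that a single value doesn't tie up a whole SIMD array.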

Each CU has all the circuitry it needs to be virtually autonomous, too, with an L1 cache, Instruction Fetch Arbitration controller, Branch & MSG unit and so on.

There's more than the CU to GCN, though. The new architecture also supports x86 virtual memory spaces, meaning large datasets – like the megatextures id Software is employing for Rage – can be addressed when they're partially resident outside of the on-board memory.

And while it's not – as other observers have pointed out – an out-of-order processor, it is capable of using its transistors very efficiently by working on multiple threads simultaneously and switching between them if one is paused and waiting for a set of values to be returned. In other words, it's an enormously versatile chip.
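That switching trick is easy to model. The Python sketch below simulates an in-order core that issues one step per cycle and hops to another thread whenever the current one is waiting on memory. The `run` function, the four-cycle `MEM_LATENCY` and the little `program` are all hypothetical numbers and names chosen for illustration, not figures from AMD.

```python
MEM_LATENCY = 4  # hypothetical stall, in cycles, after a memory request


def run(threads):
    """Simulate an in-order core that issues at most one step per cycle.

    Each thread is a list of "alu" or "mem" steps. After a "mem" step the
    thread cannot issue again for MEM_LATENCY extra cycles, so the core picks
    the first other thread that is ready. Returns (total_cycles, busy_cycles).
    """
    pc = [0] * len(threads)        # index of the next step for each thread
    ready_at = [0] * len(threads)  # first cycle each thread may issue again
    cycle = busy = 0
    while any(pc[t] < len(threads[t]) for t in range(len(threads))):
        for t in range(len(threads)):
            if pc[t] < len(threads[t]) and ready_at[t] <= cycle:
                step = threads[t][pc[t]]
                pc[t] += 1
                stall = MEM_LATENCY if step == "mem" else 0
                ready_at[t] = cycle + 1 + stall
                busy += 1
                break  # only one issue per cycle
        cycle += 1
    return cycle, busy


program = ["mem", "alu", "mem", "alu"]
one_thread = run([program])
four_threads = run([program] * 4)
```

With these made-up numbers, a lone thread keeps the core busy for 4 cycles out of 12, while four threads keep it busy for 16 out of 20. No instruction is ever reordered within a thread; the gain comes purely from having other work to hand.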

After an early preview of the design, some have noted certain similarities with Intel's defunct Larrabee concepts and also with the Atom and ARM-8 chips, except much more geared up for parallel processing.

INVENTIVE NAMING: GCN will still work with RGBA data, but boasts greater flexibility

"Graphics is still our primary focus," said AMD's Eric Demers during his keynote presentation on GCN, "But we are making significant optimisations for compute... What is compute and what is graphics is blurred."

The big question now is whether or not AMD can make this ambitious chip work. Its first VLIW5 chips were a disappointment, running hotter and slower than expected. So were Nvidia's first generation Fermi-based GPUs.

Will GCN nail it in one? We've got a while to wait to find out. The first chips based on GCN are codenamed Southern Islands and will probably be officially branded as Radeon HD7xxx. They were originally planned for this year, but aren't expected now until 2012.