Stephen Hawking warns of a future where Siri is in control

Now that's scary

It's hard to tell whether Stephen Hawking enjoyed seeing the new film Transcendence, which stars Johnny Depp as a rogue artificial intelligence who causes all sorts of havoc, but it's certain the movie got him thinking.

The well-known theoretical physicist, along with leading scientists Stuart Russell, Max Tegmark and Frank Wilczek, has penned an opinion piece in the UK's The Independent warning that the AI "arms race" may have dire consequences.

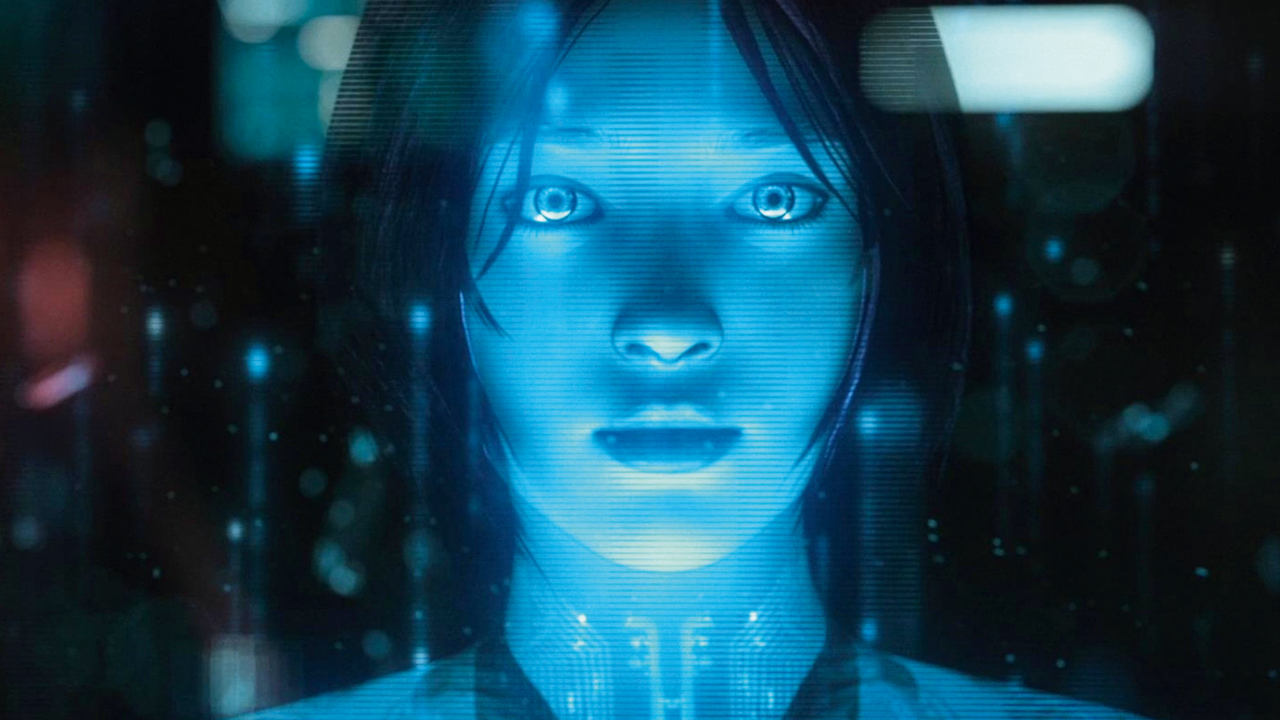

The group cites advancements like self-driving cars, the Jeopardy-winning computer Watson, and even Apple's Siri, Google Now and Microsoft's Cortana as examples of incredible technology that may not have been fully thought through.

"The potential benefits are huge," they write. "Success in creating AI would be the biggest event in human history."

Can you feel that 'but' coming?

"Unfortunately, it might also be the last," they continue. Uh-oh.

While it's physically possible to create computers more powerful than the human brain (and it's likely to happen in "the coming decades"), it may not be advisable, they argue.

Where we run into trouble is when AI gets so intelligent it starts continually improving its own design. It's a scenario that's been playing out in science fiction for decades, but Hawking and Co. warn it could really happen.

"One can imagine such technology outsmarting financial markets, out-inventing human researchers, out-manipulating human leaders and developing weapons we cannot even understand," the scientists write. "Whereas the short-term impact of AI depends on who controls it, the long-term impact depends on whether it can be controlled at all."

Hope remains

Mercifully, they reason that humanity can "learn to avoid the risks."

Hawking names four organizations that are researching such things - the Cambridge Centre for the Study of Existential Risk, the Future of Humanity Institute, the Machine Intelligence Research Institute, and the Future Life Institute - but also asks a provocative question:

"If a superior alien civilization sent us a message saying, 'We'll arrive in a few decades,' would we just reply, 'OK, call us when you get here - we'll leave the lights on?' Probably not - but this is more or less what is happening with AI."

Michael Rougeau is a former freelance news writer for TechRadar. He studied at Goldsmiths, University of London, and Northeastern University, and has bylines at Kotaku, 1UP, G4, Complex Magazine, Digital Trends, GamesRadar, GameSpot, IFC, Animal New York, @Gamer, Inside the Magic, Comic Book Resources, Zap2It, TabTimes, GameZone, Cheat Code Central, Gameshark, Gameranx, The Industry, Debonair Mag, Kombo, and others.

Michael also spent time as the Games Editor for Playboy.com, and was the managing editor at GameSpot before becoming an Animal Care Manager for Wags and Walks.