From reading the advertising copy, you could be forgiven for thinking that SSDs have no downsides. Much faster than traditional spinning hard drives, far more resilient to being dropped, ultra quiet, it seems the only negative anyone can point to is the price.

In reality, there are a couple of issues you should be aware of when using SSDs, and they explain why the operating system you use is suddenly very important.

When you use a PC day in, day out, you get used to how the machine as a whole runs with your particular mix of applications. Some of these applications could be compute-bound - that is, the bottleneck in using the app is determined purely by how quickly the CPU is able to run.

A great example of this is converting video from one format to another - say, from DVD to MP4 to play on your iPod Touch. No matter what you do, the speed of the conversion is entirely down to the CPU; the disk subsystems are well able to cope with reading and writing the data.

Some applications are different - they're I/O-bound. Your perception of the application's speed is governed by how quickly the disk subsystems read and write. An example of this is an application you don't really think of as such: booting up your PC.

When you boot your PC, the boot manager has to load various disparate drivers and applications into memory from your boot disk and set them all running. Modern OSes use hundreds of these programs, drivers and services. Applications that try to help you speed up your boot times often just concentrate on minimising what gets loaded during the boot process as a whole.

Disk delays

Standard disk drives don't do brilliantly at I/O-bound applications. The reasons are essentially two-fold.

The first is that the head has to be positioned over the right track on the correct platter. This is the seek time. The second is that, once positioned, the head has to wait for the right sector to rotate round underneath it (the rotational delay) before it can read the data requested.

Another delay might be caused by the disk having to be spun up, because many systems - especially laptops running on batteries - stop the platters rotating after a period of no activity to conserve energy.

The first delay was significant in the early days of disk drives when it was in the order of half a second or so, but the seek time has been continually refined so that it's now around 10ms for standard desktop or mobile drives.

The second delay is inversely proportional to the rotational speed of the platters: the faster they spin, the shorter the wait for the right sector. Over the years, the speed of drives has slowly increased, with standard mobile drives running at 5,400rpm and desktop drives at 7,200rpm - although high-end laptops (except, significantly, Apple's) tend to have 7,200rpm drives as standard these days. You can buy 10,000rpm drives for desktops, and some recommend using them for your boot drives.

For comparison, the drives found in iPod Classics are 4,200rpm drives (other current iPods just use Flash memory).
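To put rough numbers on those two delays, here's a minimal Python sketch. It assumes the usual rule of thumb that, on average, the head waits half a rotation for the sector it wants, and it borrows the 10ms seek figure quoted above. The function names are ours, purely for illustration.

```python
def avg_rotational_latency_ms(rpm: float) -> float:
    """Average wait for a sector, assumed to be half of one full rotation (ms)."""
    ms_per_rotation = 60_000.0 / rpm  # 60,000 ms in a minute
    return ms_per_rotation / 2.0


def avg_access_time_ms(rpm: float, seek_ms: float = 10.0) -> float:
    """Seek time plus average rotational latency (ms).

    The 10 ms default is the typical seek time mentioned in the article,
    not a measurement of any particular drive.
    """
    return seek_ms + avg_rotational_latency_ms(rpm)


if __name__ == "__main__":
    # Spindle speeds mentioned in the text: iPod Classic, mobile, desktop, high-end.
    for rpm in (4200, 5400, 7200, 10000):
        latency = avg_rotational_latency_ms(rpm)
        access = avg_access_time_ms(rpm)
        print(f"{rpm:>6} rpm: rotational latency {latency:.2f} ms, access about {access:.2f} ms")
```

Even at 10,000rpm the seek time dominates in this simple model, which is why spinning the platters faster only helps so much.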

Another factor in the overall speed of disk drives is how quickly you can read data from the platter and get it into RAM. Here, alongside the move from the older PATA interfaces to SATA, the rate is proportional to the density of data on the platter, and that density is slowly but surely increasing every year.

Nevertheless, the speed of hard drive technology is bound by physics and mechanics. Yes, more data is being stuffed into smaller spaces, but the overall speed of the drive is still governed by the speed of the spinning platter.

In order to get more speed, manufacturers moved to different technology: flash memory.

The birth of the SSD

It had to happen at some point after flash memory became reliable and cheap enough: stuff as many flash memory chips as possible in a hard drive enclosure, add a controller and you remove the mechanical performance issues in one fell swoop. Enter the modern SSD.

The flash memory in an SSD is known as NAND-flash. There are two types: SLC (single-level cell) and MLC (multi-level cell).

In SLC, each cell of memory stores one bit, and in MLC, it usually stores two bits. MLC is generally cheaper to manufacture than SLC (each cell holds double the information, so the same number of cells will hold more data), and that's what we usually find in retail SSDs.

The value of the cell is obtained by testing the cell with some voltage. The SLC cell will respond to a certain voltage: at one level, the cell is assumed to hold 0; at another level the cell is assumed to hold 1. With MLC, the cell will respond to one of four different levels of voltage, which we can denote as 00, 01, 10, and 11.

Notice what this means: with MLC memory, reading a cell means telling four voltage levels apart rather than two, so there are more tests to make (up to three threshold comparisons instead of one), which takes longer. Nevertheless, we're talking orders of magnitude faster reads than the fastest disk drives can manage.
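The idea of reading a cell by testing it against voltages can be sketched in a few lines of Python. This is a toy model, not how any real controller works: the reference voltages and the order in which levels map to bit patterns are assumptions made up for illustration. In this model an SLC read needs one comparison and an MLC read needs three.

```python
# Hypothetical reference voltages (volts); real devices use their own values.
SLC_THRESHOLDS = (2.0,)           # one boundary separates two levels
MLC_THRESHOLDS = (1.0, 2.0, 3.0)  # three boundaries separate four levels


def read_level(voltage: float, thresholds: tuple) -> int:
    """Return the level index by testing the cell against each reference in turn."""
    level = 0
    for reference in thresholds:
        if voltage >= reference:
            level += 1
    return level


def read_slc(voltage: float) -> str:
    """Decode an SLC cell to '0' or '1' (level-to-bit mapping is assumed)."""
    return format(read_level(voltage, SLC_THRESHOLDS), "01b")


def read_mlc(voltage: float) -> str:
    """Decode an MLC cell to '00', '01', '10' or '11' (mapping is assumed)."""
    return format(read_level(voltage, MLC_THRESHOLDS), "02b")


if __name__ == "__main__":
    for volts in (0.5, 1.5, 2.5, 3.5):
        print(f"{volts} V -> SLC {read_slc(volts)}, MLC {read_mlc(volts)}")
```

The extra comparisons are the price MLC pays for packing two bits into each cell.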

Except it's not that simple. The issue is that setting a cell to hold a value involves two different voltages too. There's the programming voltage, which essentially sets the cell to 0, and there's the higher erasure voltage, which sets the cell to 1.

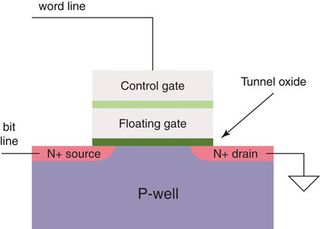

The programming and erasure voltages are higher than the read test voltages because they need to force electrons to tunnel over an oxide substrate between two gates.

FIGURE 1: Idealised structure of a flash cell. The floating gate stores the charge denoting 'on' or 'off '

Imagine the following scenario, which illustrates how these gates work: you have two jars of water connected by a pipe. The pipe has a tap and one jar is lower than the other. Fill the top jar. When the top jar is full, the system is assumed to store a 1. If you now open the tap (this doesn't take much work at all), the water drains down to the bottom jar (in SLC terms, this is programming the system). The system is now assumed to store a 0.

Now consider what you must do to set the system back to 1: you have to force the water back uphill and close off the tap, which is a lot of work (this is erasing the system in SLC terms).

In essence, when talking about NAND cells, programming is easy and erasing is hard.
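The jar analogy translates into a small Python sketch of a single SLC cell. The cost figures are invented units chosen only to show that erasing is the expensive step; they are not measurements, and the class and method names are hypothetical.

```python
class SlcCell:
    """Toy model of one SLC cell: erased means 1, programmed means 0."""

    PROGRAM_COST = 1   # hypothetical effort units: opening the tap
    ERASE_COST = 10    # hypothetical effort units: pumping the water back uphill

    def __init__(self) -> None:
        self.bit = 1      # a fresh cell starts erased (top jar full)
        self.effort = 0   # running total of effort spent on this cell

    def program(self) -> None:
        """Set the cell to 0 (cheap)."""
        self.bit = 0
        self.effort += self.PROGRAM_COST

    def erase(self) -> None:
        """Set the cell back to 1 (expensive)."""
        self.bit = 1
        self.effort += self.ERASE_COST

    def write(self, value: int) -> None:
        """Store 0 or 1, paying for a program or an erase only if the bit changes."""
        if value not in (0, 1):
            raise ValueError("an SLC cell stores a single bit")
        if value == self.bit:
            return
        if value == 0:
            self.program()
        else:
            self.erase()


if __name__ == "__main__":
    cell = SlcCell()
    cell.write(0)   # programming: cheap
    cell.write(1)   # erasing: expensive
    print(cell.bit, cell.effort)
```

Going from 1 to 0 costs little in this model, while the trip back to 1 accounts for nearly all the effort.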
