Can machines imitate how humans think?

Back in the late '40s, Alan Turing, fresh from his success as part of the team that cracked the Enigma machine, turned his thoughts to machine intelligence. In particular, he considered the question: 'Can machines think?'

In 1950, he published a paper called 'Computing Machinery and Intelligence' in the journal Mind that summarised his conclusions. This paper became famous for a simple test he'd devised: the Turing Test.

The problem he found in particular was the word 'think' in his original question. What is thinking and how do we recognise it? Can we construct an empirical test that conclusively proves that thinking is going on?

He was of the opinion that the term was too ambiguous and came at the problem from another angle by considering a party game called the Imitation Game.

Parlour games

In the Imitation Game, a man and a woman go into separate rooms. The guests don't know who is in each room. The guests send the subjects written questions and receive answers back (in Turing's day these were typewritten so that no clues could be found by trying to analyse the handwriting; today we can imagine email or Twitter as the medium).

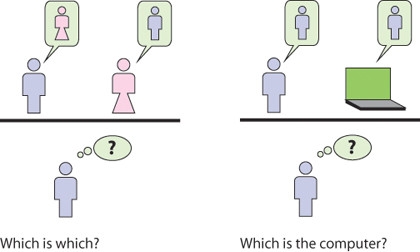

From the series of questions and answers from each subject, the guests try to work out which is the man and which the woman. The subjects try to muddy the waters by pretending to be each other. The first part of the picture above shows this game.

Turing wondered what would happen if we replaced the man or the woman with some machine intelligence. Would the guests tell the machine from the human more accurately than they would the man from the woman? Turing's insight was to rephrase 'Can machines think?' into a more general 'Can machines imitate how humans think?' This is the original Turing Test, and is shown in the second part of the picture.

Over the years, this Turing Test has changed into the simpler test we know today: can we determine whether the entity at the end of a communications link is a computer program or a human, just by asking questions and receiving answers? It's still a variant of the Imitation Game, but now it's much simpler - possibly too simple.

The first program to try to pass the Turing Test was ELIZA, which pretended to be an empathic, non-directive psychotherapist and was written in the period 1964-1966. ELIZA essentially parsed natural language into keywords and then, through pattern matching, used those keywords as input to a script. The script (and the database of keywords and the subject domain) was fairly small, but nevertheless ELIZA managed to fool some people who used it.

The reason for using psychotherapy as the domain is that it lends itself to responding to statements with questions that repeat the statement ("I like the colour blue." "Why do you like the colour blue?") without immediately alerting the human that the therapist on the other end has no applicable real-world knowledge or understanding.
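To make the idea concrete, here is a minimal Python sketch of the keyword-and-script approach described above. It is not ELIZA's actual code or script: the patterns, the pronoun table and the names `SCRIPT`, `REFLECTIONS`, `reflect` and `respond` are all invented for illustration.

```python
import re

# Hypothetical pronoun swaps so a statement can be turned back on the speaker.
REFLECTIONS = {"i": "you", "my": "your", "me": "you", "am": "are"}

# Hypothetical script: (pattern, response template) pairs, tried in order.
SCRIPT = [
    (re.compile(r"\bi like (.+)", re.IGNORECASE), "Why do you like {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (.+)", re.IGNORECASE), "Tell me more about your {0}."),
]

# Used when no pattern matches, to keep the conversation going.
FALLBACK = "Please go on."


def reflect(fragment):
    """Swap first-person words for second-person ones, word by word."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(statement):
    """Return the scripted reply for the first pattern that matches."""
    cleaned = statement.strip().rstrip(".!?")
    for pattern, template in SCRIPT:
        match = pattern.search(cleaned)
        if match:
            return template.format(reflect(match.group(1)))
    return FALLBACK


print(respond("I like the colour blue."))  # Why do you like the colour blue?
```

Nothing in the sketch knows what a colour is. It only recognises the words "I like" and hands the rest of the sentence back as a question, which is why a therapist persona hides the lack of understanding so well.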

ELIZA was so successful that its techniques were used to improve the interaction in various computer games, especially early ones where the interface was through typed commands.

Chatterbots

Despite its simple nature, ELIZA formed the basis of a grand procession of programs designed and written to try to pass the Turing Test. The next was PARRY (written in 1972 and designed to simulate a paranoid schizophrenic), and together they spawned a whole series of increasingly sophisticated conversational programs called chatterbots.

These programs use the same techniques as ELIZA to parse language and to identify keywords that can then be used to further the conversation, either in conjunction with an internal database of facts and information, or with the ability to use those keywords in scripted responses. These responses give the human counterpart the illusion that the conversation is moving forward meaningfully.
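The two routes described above, a lookup in a fact database or a fall-back to a scripted response, can be sketched in a few lines of Python. This is an illustrative toy rather than the design of any particular chatterbot: `FACTS`, `SCRIPTED`, `extract_keywords` and `reply` are assumed names, and the stored facts are simply taken from this article.

```python
import re

# Hypothetical fact database: keyword -> stored fact.
FACTS = {
    "turing": "Alan Turing published 'Computing Machinery and Intelligence' in 1950.",
    "eliza": "ELIZA was written in the period 1964-1966.",
    "parry": "PARRY was written in 1972.",
}

# Hypothetical scripted replies, used when no fact applies.
SCRIPTED = {
    "hello": "Hello! What would you like to talk about?",
    "bye": "Goodbye. It was nice talking to you.",
}

# Generic prompt that keeps the conversation moving when nothing matches.
DEFAULT_REPLY = "That's interesting. Can you tell me more?"


def extract_keywords(message):
    """Lowercase the message and split it into alphabetic words."""
    return re.findall(r"[a-z]+", message.lower())


def reply(message):
    """Prefer a stored fact, then a scripted line, then a generic prompt."""
    keywords = extract_keywords(message)
    for word in keywords:
        if word in FACTS:
            return FACTS[word]
    for word in keywords:
        if word in SCRIPTED:
            return SCRIPTED[word]
    return DEFAULT_REPLY


print(reply("When was ELIZA written?"))
print(reply("I enjoy cycling"))
```

The generic default reply is what sustains the illusion. When the program recognises nothing at all, it still produces something that sounds like a turn in a conversation.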

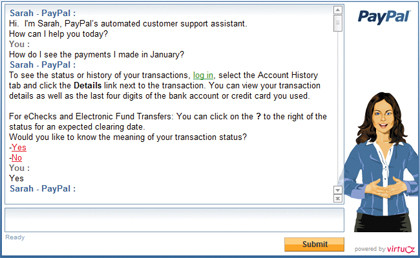

Consequently, provided the subject domain is restricted enough, chatterbots can and do serve as initial points of contact for support. For example, PayPal currently has an automated online 'customer service rep' it calls Sarah. Using the chat program is quite uncanny.

As you can see above, the answer to my question ("How do I see the payments I made in January") appeared instantly, and the natural language processing is extremely efficient. Notice that the word 'payment' is recognised and the more accurate 'transaction' is used in the reply.
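How Sarah works internally isn't documented here, but one plausible way to get the 'payment' to 'transaction' behaviour is to map the user's words onto the vocabulary the help text uses before looking up an answer. The Python sketch below shows that idea only. The synonym table and the `canonicalise` function are assumptions, not PayPal's implementation.

```python
# Hypothetical synonym table: the user's word -> the term the help text uses.
CANONICAL_TERMS = {
    "payment": "transaction",
    "payments": "transactions",
    "purchase": "transaction",
    "purchases": "transactions",
}


def canonicalise(question):
    """Lowercase the question and replace known synonyms with canonical terms."""
    words = question.lower().rstrip("?.!").split()
    return [CANONICAL_TERMS.get(word, word) for word in words]


print(canonicalise("How do I see the payments I made in January"))
```

After this step, a keyword lookup only has to know about 'transactions', however the customer phrased the question.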

Such automated online assistants are available 24/7, and are helping to reduce the load on normal call centres. Aetna, a health insurer in the US, estimates that 'Ann', the automated assistant for its website, has reduced calls to the tech support help desk by 29 per cent.

Of course, there are downsides to chatterbots as well. It's fairly easy to write chatterbots to spam or advertise in chat rooms while pretending to be human participants. Worse still are those that attempt to cajole their human counterparts into revealing personal information, like account numbers.