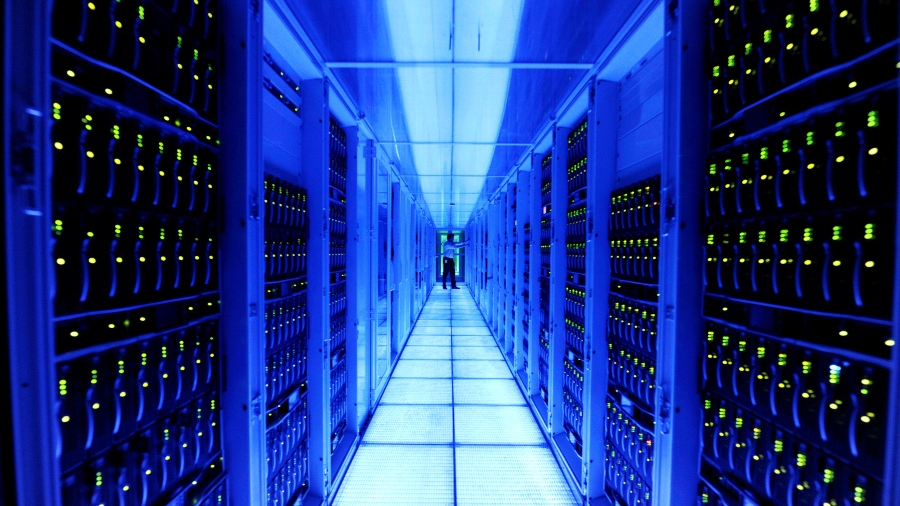

8 Data storage trends for 2018

Heightened regulations and increased cyber threats are making it more difficult for businesses to securely store data.

Software-defined storage (SDS) has quickly been adopted in the IT infrastructure of the modern data center because of its versatility: it delivers faster, more flexible storage provisioning, lowers costs by running on off-the-shelf hardware, and provides automated, optimized storage management.

According to International Data Corporation (IDC), the SDS market is projected to grow at a compound annual growth rate (CAGR) of 13.5 percent from 2017 to 2021. SDS will gain prominence in 2018 as data growth continues to explode, the complexity and cost of storing and managing data increase, and regulatory compliance becomes a higher priority.
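To put the 13.5 percent figure in perspective, compounding it year over year shows the cumulative growth it implies. This is a minimal sketch that assumes four annual compounding periods between 2017 and 2021 and normalizes the 2017 market size to 1.0, since the article does not state the base value.

```python
def compound_growth(rate: float, years: int) -> float:
    """Return the growth multiple after compounding `rate` annually for `years` years."""
    return (1 + rate) ** years


# 13.5 percent CAGR, assumed to compound over four periods (2017 -> 2021).
multiple = compound_growth(0.135, 2021 - 2017)
print(f"2021 market is about {multiple:.2f}x its 2017 size")
# → 2021 market is about 1.66x its 2017 size
```

In other words, a 13.5 percent CAGR sustained over four years would grow the market by roughly two thirds.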

As 2018 kicks off, with prevalent cyber threats and intense regulatory scrutiny compounding the complexity and cost of storing and managing data, here are eight key trends in data storage and management to keep an eye on in the coming year.

Further consolidation of functions

IT department managers will increasingly look to consolidate functions within single solutions, eliminating extra licensing costs as well as complex, time-consuming and error-prone integrations.

Although storage and backup functions have been converging for some time, expect to see more security features integrated into the same solution as well.

This kind of consolidation within a single solution provides greater visibility across a broader ecosystem than juggling many tools through a laborious, manager-of-managers approach.

Greater simplicity in product design

Ease-of-use features in data storage will continue to win out over complexity, as non-technical users are increasingly expected to serve their own data needs.


In the consumer market, people are growing used to high-tech products such as Amazon's Alexa and Google Home virtual assistants, which are expressly designed to be simple for the average person to use.

Enterprise IT is heading in much the same direction, with out-of-the-box solutions that are straightforward and intuitive, reducing the burden on IT administrators and enabling greater user self-service.

The move to greater ease of use also enables organizations to hire more IT generalists who can respond to a more dynamic and diverse set of tasks and requests.

Increased use of encryption

Adoption of encryption for data at rest will more than double in 2018 as organizations look to increase security and future-proof their storage against stringent new regulations and customer requirements.

Adoption will accelerate even faster for encryption solutions that are easy to manage and don't hurt user productivity.

Better data management

As new regulations take effect, such as NIST SP 800-171 for U.S. defense contractors and the European Union's General Data Protection Regulation (GDPR) for organizations that process the personal data of people in the EU, organizations will need a better way to understand precisely where they store regulated data and who is accessing it.

New regulations like these will push data owners to be much better stewards of their data: protecting and reporting on it appropriately, eliminating stale data and potentially creating value from data that might otherwise have been inadvertently lost or stranded.

Greater reliance on commercial software

In 2018, expect to see organizations return to commercial, off-the-shelf applications and solutions, moving away from open source efforts supported in-house.

Over the last decade, many organizations tried to replace commercially licensed software with open source software, but leaders are now realizing this is not a sustainable model because of the substantial challenges of hiring and retaining technical expertise, growing software complexity and higher security risks from vulnerabilities.

Except for the largest one percent of enterprises with the biggest IT budgets, organizations will increasingly review the costs of supporting open source software and conclude that it carries a higher total cost of ownership, with less predictable costs and challenges, than commercially licensed solutions.

Greater leveraging of workflow-enabling technologies

More organizations are starting to appreciate the value of an efficient IT infrastructure, and data storage in particular, in accelerating and improving business operations and increasing top-line revenue.

Adopting technologies that require no client-side changes or changes to user workflows can improve employee productivity, reduce security threat vectors and increase organizational resiliency.

By using technologies that have security and data protection built in and enabled by default, organizations gain better assurance that their data is protected. And by giving employees the best, easiest-to-use solution, organizations remove the incentive for shadow IT.

Less enthusiasm for hyper-convergence

Over the past five years, hyper-converged systems have attracted a lot of attention, but expect the excitement to start waning in 2018.

Organizations are now recognizing that hyper-converged solutions are great for small implementations and certain linearly scaling workloads such as virtual desktop infrastructure (VDI), but they can't scale economically to workloads with massive datasets because they don't scale resources such as compute, RAM and storage independently of one another.

As machine learning on graphics processing units (GPUs) and field-programmable gate arrays (FPGAs) becomes more commonplace, this scalability problem will be further exacerbated.
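To see why coupled scaling gets expensive for data-heavy workloads, the sketch below sizes a cluster in which every added node brings a fixed bundle of cores, RAM and storage. The node specification and workload figures are hypothetical, chosen only to illustrate the arithmetic; real hyper-converged products offer a range of node configurations.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeSpec:
    """Resources in one hyper-converged node (hypothetical figures)."""
    cores: int
    ram_gb: int
    storage_tb: int


def nodes_needed(node: NodeSpec, cores: int, ram_gb: int, storage_tb: int) -> int:
    """Nodes required when all resources grow together, one node at a time.

    The cluster must keep growing until the most demanding resource is
    satisfied, so the answer is the maximum of the per-resource counts.
    """
    return max(
        math.ceil(cores / node.cores),
        math.ceil(ram_gb / node.ram_gb),
        math.ceil(storage_tb / node.storage_tb),
    )


node = NodeSpec(cores=32, ram_gb=512, storage_tb=20)
# Hypothetical data-heavy workload: modest compute and RAM, but 1 PB of data.
n = nodes_needed(node, cores=64, ram_gb=1024, storage_tb=1000)
print(n, "nodes,", n * node.cores, "cores bought for a 64-core need")
# → 50 nodes, 1600 cores bought for a 64-core need
```

Storage drives the node count here, so almost all of the purchased compute sits idle; an architecture that scales storage separately would avoid that overhead.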

More machine learning

2017 was a big year for machine learning and artificial intelligence, and machine learning running in the data center has reached consumers through driverless cars, Amazon's Alexa and online shopping.

Data storage and data management are critical to machine learning because a model learns from the data it processes. The training data generated by a driverless car can exceed 100 terabytes per day, which creates challenges for high-speed data movement and data management.

Storage and cloud providers will look for more efficient ways to move and store data to support model training and IoT devices that use machine learning. In 2018, we will continue to see machine learning deployed to help corporate IT with analytics and security, especially in user and entity behavior analytics (UEBA), as a way of detecting and stopping insider threats and preventing data loss.
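A quick conversion shows why 100 terabytes per day strains data movement. This sketch assumes decimal terabytes (10^12 bytes) and data arriving evenly over 24 hours; real collection is bursty, so peak rates would be higher than these averages.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def sustained_rate(bytes_per_day: float) -> tuple[float, float]:
    """Convert a daily data volume to an average rate in GB/s and Gbit/s (decimal units)."""
    bytes_per_sec = bytes_per_day / SECONDS_PER_DAY
    return bytes_per_sec / 1e9, bytes_per_sec * 8 / 1e9


gb_s, gbit_s = sustained_rate(100e12)  # 100 TB per day, decimal units assumed
print(f"{gb_s:.2f} GB/s, {gbit_s:.2f} Gbit/s")
# → 1.16 GB/s, 9.26 Gbit/s
```

That is close to saturating a 10 Gbit/s link around the clock for a single vehicle's data, before any replication or backup traffic.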

- Jonathan Halstuch is the COO and co-founder of RackTop Systems


Jonathan Halstuch is a U.S. intelligence community veteran and the chief operating officer and co-founder of RackTop Systems, a provider of high-performance software-defined storage with embedded advanced security, encryption and compliance. He is a great leader and is passionate about technology.