The technology behind CERN: the hunt for the Higgs boson

How software is helping the Large Hadron Collider

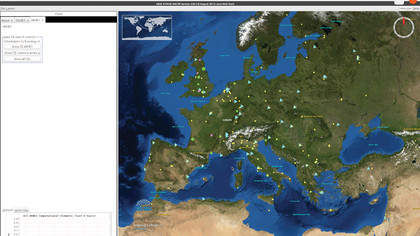

Beyond the mechanics of batch control for the thousands of jobs submitted to the WLCG (Worldwide LHC Computing Grid) each day, doing the science on experimental data in high-energy physics (HEP) also calls for an open-source approach. Everyone collaborating on an experiment shares responsibility for making sure the software keeps getting better at spotting significant events amid the noise of a billion particles.

It's not impossible to be a physicist without understanding C++ or how to optimise code through the compiler, but the subject is taught as part of undergraduate degrees at Sussex and other HEP institutions.

"Our community has been open source since before it was really called open source," says Bird. "We use commercial software where we need to and where we can, but all of our own data analysis programs are written in-house, because there's nothing that exists to do that job."

The size and complexity of ATLAS and CMS mean that hundreds of software professionals are employed to create and maintain code, but because many sites collaborate on different parts of each experiment, version-control tools such as SVN (Apache Subversion) are vital for managing the many contributions to each algorithm.

"You wouldn't be able to do it on a different operating system," Dr Salvatore says. "It's becoming easier to work with tools like SVN from within the operating system, and this is definitely one of the things you exploit, that you can go as close as possible to the operating system; while with proprietary systems the OS is hidden by several layers."

Managing the input of thousands of HEP academics is a challenge that calls for more than mere version control, though. "There's a bit of sociology, too," says Calafiura. "With over 3,000 collaborators, there's quite a bit of formality to decide who is a member of ATLAS, and who gets to sign the Higgs paper. So there's a system of credits. Traditionally, this work is either in the detector or contributing to the software. If someone has a good algorithm for tracking a particle's path through the detector, they spend a couple of years perfecting that algorithm and contribute it to the common software repository, and it becomes part of the official instructions.

"Up until 2010, the majority of contributions to the software came from physicists, except for the core area where professionals work. Then everything changed the moment real data started flowing in. In the last couple of years, physicists are using tools prepared by a much smaller community. I've been involved with High Energy Physics for most of my life, and every time an experiment starts producing data the vast majority of the community loses interest in the technical aspects."


Often, research starts with physicists working from a theoretical basis to calculate what an event in which a Higgs boson appears would look like. Once a scientist has come up with a probable scenario, they'll attempt to simulate it using Monte Carlo methods: grid-enabled software that uses repeated random sampling to predict what will happen when protons smash together. The simulated results are then compared against a database of real results to find those that merit further investigation.
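To make the Monte Carlo idea concrete, here is a deliberately tiny toy, not the experiments' actual simulation chain (which uses dedicated event generators and full detector simulation). It assumes an invented falling background spectrum plus a Gaussian "signal" bump near 125 GeV, draws random events from each hypothesis, and compares their histograms with a set of pseudo-data using a simple chi-square. Every shape, number and function name here is a hypothetical illustration.

```python
import random

# All shapes and numbers below are illustrative assumptions, not ATLAS/CMS values.
HIGGS_MASS_GEV = 125.0   # assumed peak position
PEAK_WIDTH_GEV = 2.0     # assumed detector resolution
BKG_SLOPE_GEV = 30.0     # assumed decay length of the exponential background


def simulate_masses(n_events, signal_fraction, seed=None):
    """Toy Monte Carlo: draw one invariant mass (GeV) per simulated event."""
    rng = random.Random(seed)
    masses = []
    for _ in range(n_events):
        if rng.random() < signal_fraction:
            # Signal: Gaussian peak around the assumed Higgs mass.
            masses.append(rng.gauss(HIGGS_MASS_GEV, PEAK_WIDTH_GEV))
        else:
            # Background: smoothly falling spectrum starting at 100 GeV.
            masses.append(100.0 + rng.expovariate(1.0 / BKG_SLOPE_GEV))
    return masses


def histogram(values, lo=100.0, hi=160.0, n_bins=30):
    """Count values into equal-width bins over [lo, hi); others are dropped."""
    counts = [0] * n_bins
    width = (hi - lo) / n_bins
    for v in values:
        if lo <= v < hi:
            counts[min(int((v - lo) / width), n_bins - 1)] += 1
    return counts


def chi_square(simulated, observed):
    """Scale the simulation to the observed total, then sum
    (observed - expected)^2 / expected over bins with non-zero expectation."""
    scale = sum(observed) / sum(simulated)
    total = 0.0
    for s, o in zip(simulated, observed):
        expected = s * scale
        if expected > 0:
            total += (o - expected) ** 2 / expected
    return total


if __name__ == "__main__":
    # Pseudo-data stands in for real recorded events (assumption).
    observed = histogram(simulate_masses(5_000, 0.05, seed=1))
    background_only = histogram(simulate_masses(50_000, 0.0, seed=2))
    with_signal = histogram(simulate_masses(50_000, 0.05, seed=3))
    print("background-only chi2:", round(chi_square(background_only, observed), 1))
    print("signal+background chi2:", round(chi_square(with_signal, observed), 1))
```

With these toy settings the signal-plus-background hypothesis should fit the pseudo-data noticeably better (a smaller chi-square) than background alone. Simulating many more events than are "observed" keeps the simulation's own statistical noise small.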

The event that is suspected to show a Higgs particle comes from a dataset of around one quadrillion collisions. There are groups of scientists and software developers attached to each part of the 'core' computing resource, who govern the behaviour of the LHC and the basic pruning of data.

The future

With the LHC due to go offline at the end of the year for an upgrade, there have been many conversations within the upper echelons of the computing project about where the WLCG goes next to keep up with the growing requirements of high-energy experiments.

"When we started the whole exercise," says Bird, "we were worried about networking, and whether or not we would get sufficient bandwidth, and whether it would be reliable enough. And all of that has been fantastic and exceeded expectations. So the question today is 'how can we use the network better?' We're looking at more intelligent ways of using the network, caching and doing data transfer when the job needs it rather than trying to anticipate where it will be needed."
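Bird's contrast between anticipating where data will be needed and transferring it when a job asks for it can be sketched as a cache that fetches on a miss. This is a minimal illustration under stated assumptions: the `OnDemandCache` class and the `fetch` callable are hypothetical stand-ins for a real transfer service, no WLCG API is implied, and least-recently-used (LRU) eviction is simply one common cache policy chosen for the example.

```python
from collections import OrderedDict
from typing import Callable


class OnDemandCache:
    """Fetch remote files only when a job asks for them, keeping an LRU cache.

    `fetch` is a hypothetical stand-in for a real wide-area data transfer.
    """

    def __init__(self, fetch: Callable[[str], bytes], capacity: int):
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self._fetch = fetch
        self._capacity = capacity
        self._store: "OrderedDict[str, bytes]" = OrderedDict()
        self.transfers = 0  # how many times we actually went to the network

    def get(self, name: str) -> bytes:
        if name in self._store:
            self._store.move_to_end(name)  # cache hit: mark as most recently used
            return self._store[name]
        data = self._fetch(name)  # cache miss: transfer only now, when needed
        self.transfers += 1
        self._store[name] = data
        if len(self._store) > self._capacity:
            self._store.popitem(last=False)  # evict the least recently used file
        return data


if __name__ == "__main__":
    cache = OnDemandCache(lambda name: f"contents of {name}".encode(), capacity=2)
    for f in ["a.root", "b.root", "a.root", "c.root", "b.root"]:
        cache.get(f)
    print(cache.transfers)  # 4: only the repeat of a.root was served locally
```

A pre-placement strategy would instead copy every file a job might touch to the site in advance; the on-demand version only moves what is actually read, at the cost of a delay on each miss.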

Inevitably, the subject of cloud computing crops up. Something like Amazon EC2 is impractical as a day-to-day resource because of the high cost of transferring data in and out of Amazon's server farms. It might, however, be useful for provisioning extra computing in the run-up to major conferences, when demand always spikes as researchers work to finish papers and verify results.

As most grid clusters aim for 90% utilisation under normal circumstances, there aren't many spare cycles to call on at these times. "There's definitely a problem with using commercial clouds, which would be more expensive than we can do it ourselves," says Bird.

"On the other hand, you have technologies like OpenStack, which are extremely interesting. We're deploying an OpenStack pilot cluster now to allow us to offer different kinds of services, and it gives us a different way to connect data centres together - this is a way off, but we do try to keep up with technology and make sure we're not stuck in a backwater doing something no-one else is doing."

Similarly, GPGPU is a relatively hot topic among ATLAS designers. "Some academics, in Glasgow for example, are looking at porting their code to GPGPU," says GridPP's Gronbach, "but the question is whether the type of analysis they do is well suited to that type of processing. Most of our code is what's known as 'embarrassingly parallel': we can chop up the data and events into chunks and send each one to a different CPU, and it doesn't have to know what's going on with other jobs. But it's not a parallel job in itself; it doesn't map well to GPU-type processing."
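The "embarrassingly parallel" pattern Gronbach describes can be sketched in a few lines: split the events into chunks, analyse each chunk in a separate process with no communication between them, and combine the partial results at the end. This is an illustrative sketch only; the energy cut, the function names and the use of Python's `concurrent.futures.ProcessPoolExecutor` are assumptions for the example, not how grid jobs are actually dispatched across sites.

```python
import random
from concurrent.futures import ProcessPoolExecutor


def split_into_chunks(events, n_chunks):
    """Divide the event list into roughly equal, independent chunks."""
    if n_chunks < 1:
        raise ValueError("n_chunks must be at least 1")
    if not events:
        return []
    size = -(-len(events) // n_chunks)  # ceiling division
    return [events[i:i + size] for i in range(0, len(events), size)]


def analyse_chunk(chunk):
    """Count events passing a hypothetical energy cut (> 50, arbitrary units).

    Each chunk is analysed with no knowledge of any other chunk.
    """
    return sum(1 for energy in chunk if energy > 50.0)


def analyse_in_parallel(events, n_workers=4):
    """Send each chunk to its own worker process, then sum the partial counts."""
    chunks = split_into_chunks(events, n_workers)
    with ProcessPoolExecutor(max_workers=n_workers) as pool:
        return sum(pool.map(analyse_chunk, chunks))


if __name__ == "__main__":
    rng = random.Random(0)
    events = [rng.uniform(0.0, 100.0) for _ in range(100_000)]
    parallel = analyse_in_parallel(events)
    serial = analyse_chunk(events)
    print(parallel == serial)  # True: the only "merge" step is a sum
```

Because no worker needs another worker's results, the job scales by adding CPUs; what it lacks is the fine-grained, lock-step arithmetic within a single task that GPUs are built to accelerate.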

There is also, says Bird, a lot of interest in Intel's forthcoming Larrabee/Xeon Phi hardware. So while there is much debate about how the world's largest distributed computing grid will develop, what is certain is that CERN's work will keep becoming more demanding every year.

Confirming the existence of the Higgs boson and its purpose is neither complete nor the only work of the Large Hadron Collider. This is just the first major announcement of - hopefully - many to come.

So, next time you look up at the stars and ponder how it all began, remember that recent improvements to our levels of certainty about life, the universe and everything are courtesy of thousands of scientists and one unlikely penguin.

Image caption: The Semi-conductor tracker barrels