The BBC recently streamed 24-hour live HD Olympic events simultaneously from its sports website, alongside its normal digital broadcasts over the air.

Although I'm sure, like me, you have a vague appreciation of what streaming is - after all, watching movies and TV shows over the internet is all part and parcel of the 2012 always-on society - the truth is even more peculiar than you might expect. In a way, it's amazing it works at all.

The earliest reference to what we might recognise as 'streaming media' was a patent awarded to George O Squier in 1922 for the efficient transmission of information by signals over wires. At the time, broadcast radio was just starting up, and required expensive and somewhat temperamental equipment to transmit and receive.

Squier recognised the need to simplify broadcasting, and created a company called Wired Radio that used this invention to pipe background music to shops and businesses. Later he decided to ape the Kodak brand name by renaming the company Muzak. This was the first successful attempt to multicast media (that is, transmit one signal over a cable to several receivers simultaneously).

Digital streaming

That was pretty much it for broadcast (radio and TV) and multicast (Muzak) until the age of computers, especially personal computers. It wasn't until the late 1980s or early 1990s that computers had hardware and software capable of playing audio and displaying video.

The main obstacles that remained were the need for a CPU powerful enough to render video, a data bus wide enough to carry video data to the video adaptor and monitor, and enough network bandwidth (this was an age when the best access to networks was through a 28.8Kbps modem).

In fact, for a while the only option available was to download the media as a file from some remote server and play it once the file was fully downloaded.

Consider the problem: a PC usually had an XGA monitor with a resolution of 640 x 480 pixels at 16 bits per pixel. Video, though, was 320 x 240 pixels. At a video refresh rate of 24 frames per second, the data bus on the PC had to process 320 x 240 x 2 (bytes per pixel) x 24 bytes per second, which works out at about 3.5MB per second.

Several things had to come together before streaming media could happen. First of all, the video itself had to be compressed to reduce the footprint of the media file on disk. At 3.5MB per second, a one-minute video would take up more than 200MB on the hard drive - an amount of space that frankly was not readily available on most PCs of the time.
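To make the arithmetic concrete, here is a minimal Python sketch that reproduces the figures quoted above. The frame size, colour depth and frame rate come from the text; the download estimate is an illustration only, and assumes a 28.8Kbps modem running at its full nominal rate with no protocol overhead, which real connections never achieved.

```python
# Raw (uncompressed) video data rate, using the figures quoted in the text.
WIDTH, HEIGHT = 320, 240      # video frame size in pixels
BYTES_PER_PIXEL = 2           # 16 bits per pixel
FPS = 24                      # frames per second

bytes_per_second = WIDTH * HEIGHT * BYTES_PER_PIXEL * FPS
bytes_per_minute = bytes_per_second * 60

MIB = 1024 * 1024             # the "MB" in the text, counted in powers of two
print(f"{bytes_per_second:,} bytes/s = {bytes_per_second / MIB:.2f} MiB/s")
print(f"{bytes_per_minute / MIB:.0f} MiB per minute of video")

# Assumption: a 28.8 kbit/s modem at its nominal rate, with no overhead.
MODEM_BITS_PER_SECOND = 28_800
seconds_to_download = bytes_per_minute * 8 / MODEM_BITS_PER_SECOND
print(f"{seconds_to_download / 3600:.1f} hours to download one minute")
```

The raw rate works out at 3,686,400 bytes per second (about 3.5MB), a minute of video at roughly 211MB, and, under the modem assumption, about 17 hours of downloading for that single minute. That is why compression had to come first.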

The CPU had to be able to decompress the video data in real time and render frames at the correct frame rate. The data bus of the PC had to be able to handle transferring that amount of data to the video sub-system, and the latter had to be able to refresh the monitor at the correct frame rate. By the mid-1990s, the requisite stars had aligned.

Multicasting

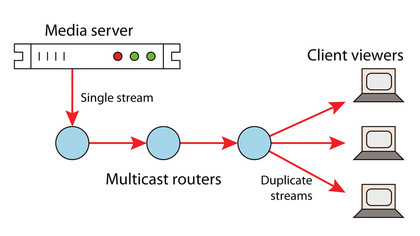

FIGURE 1: A multicast network distributes media with little bandwidth loss

In 1992, an experimental network was born: the Mbone. This was a virtual network superimposed on the normal internet whose main purpose was multicasting.

Multicast in this scenario is a technology that allows data to be streamed efficiently from one server to several receivers simultaneously. An example of a situation that benefits from multicast is an internet radio station. Such a station will present a stream of music data that users can subscribe to, but all users will hear the same stream.

From the internet radio station's viewpoint, all it needs is a single low-bandwidth connection to the multicast backbone, and the rest of the transmission and eventual duplication of the data stream is done by the nodes in the internet. Increasing the number of listeners wouldn't impact the internet radio station too much at all.

The contrasting technology is known as unicast, and this is what we use when we watch a YouTube video or a movie online: one server sending a data stream over the internet to a single receiver, namely our PC.

To continue our example, an internet radio station wouldn't benefit from unicast since it would have to transmit a data stream to every listener. Increasing the number of listeners would require increasing the station's server and network capacities.
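The difference in scaling can be sketched in a few lines of Python. This is an illustrative model rather than a measurement: the function name `station_uplink_kbps` and the 128Kbps stream rate are assumptions made for the example, and the model ignores protocol overhead.

```python
def station_uplink_kbps(listeners: int, stream_kbps: int, multicast: bool) -> int:
    """Upstream bandwidth the station itself must provide, in kbit/s."""
    if listeners == 0:
        return 0
    # Multicast: one copy leaves the station; routers duplicate it downstream.
    # Unicast: the station sends a separate copy to every listener.
    return stream_kbps if multicast else stream_kbps * listeners


STREAM_KBPS = 128  # assumed bit rate of the audio stream

for listeners in (1, 100, 10_000):
    uni = station_uplink_kbps(listeners, STREAM_KBPS, multicast=False)
    multi = station_uplink_kbps(listeners, STREAM_KBPS, multicast=True)
    print(f"{listeners:>6} listeners: unicast {uni:>9,} kbit/s, "
          f"multicast {multi} kbit/s")
```

In this model the unicast figure grows in step with the audience, while the multicast figure stays at the rate of a single stream however many listeners subscribe.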

Multicasting has several problems. First of all, it requires special routers as nodes on the network to pass the single data stream on. The sender (or the network) has to build up a tree of these special routers and program them so that only a single data stream is passed between any two of them. Obviously, only multicast routers can be linked in this tree.

This is generally known as tunnelling - the special routers tunnel the multicast data stream between them over the normal internet. Then, each receiver must be able to identify its nearest multicast router so that it can receive a unicast of the data stream from that router. The router acts as a duplicator of data – see Figure 1 above.
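The tree-and-duplicator idea can be modelled with a toy Python sketch. It is not how real multicast routers are implemented; the `Router` class, the `count_copies` function and the topology are all hypothetical, chosen only to show that exactly one copy of the stream crosses each router-to-router tunnel while each router serves its own nearby receivers.

```python
from dataclasses import dataclass, field


@dataclass
class Router:
    name: str
    receivers: int = 0                      # receivers attached directly
    children: list["Router"] = field(default_factory=list)


def count_copies(router: Router) -> tuple[int, int]:
    """Return (tunnel_copies, local_copies) for the tree rooted at `router`.

    tunnel_copies: streams sent router-to-router (one per tunnel).
    local_copies: unicast streams sent from a router to its own receivers.
    """
    tunnel, local = 0, router.receivers
    for child in router.children:
        child_tunnel, child_local = count_copies(child)
        tunnel += 1 + child_tunnel   # exactly one copy crosses each tunnel
        local += child_local
    return tunnel, local


# Hypothetical topology: the source's router feeds two regional routers.
root = Router("source", children=[
    Router("europe", receivers=3, children=[Router("uk", receivers=5)]),
    Router("us", receivers=4),
])

tunnel, local = count_copies(root)
print(f"tunnel copies: {tunnel}, receivers served: {local}")
```

In this example 12 receivers are served with only three copies of the stream travelling between routers, and just two of those leave the source. With pure unicast the source would have to send all 12 copies itself.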

The other main issue was touched on in our example of an internet radio station: multicast raises the question of who pays for it, especially where ISPs' costs are concerned. With a multicast internet radio station, the station's local ISP only passes through a single data stream, regardless of how many listeners there are. The data duplication is done by routers that are geographically far from the transmitter, so the burden of carrying the extra copies falls on other networks.

Although Mbone was successful as a research project - it was even used to multicast a Rolling Stones concert at the Cotton Bowl in Dallas - it never really caught on publicly. These days it's mostly used for video conferencing.