Ultimate AMD PC: what to buy and how to build it

Are AMD CPUs really the weak link we've been led to believe?

The time has come to give AMD another chance. Chuck out all your assumptions. Forget everything you've learned or read about its products.

In short, make today ground zero for your relationship with the world's alternative CPU supplier, and the only company that can flog you the complete end-to-end package of CPU, motherboard chipset and performance graphics.

We're not talking about turning AMD into a charity case, and this isn't about making big allowances, though anything that keeps AMD afloat and ensures there's more than one player in the processor market is extremely welcome.

It's about focusing on what really matters when it comes to PC performance and value for money. It's about not being distracted by often arbitrary benchmark results. It's about building a PC based on what it will actually do, not how it compares to something else.

The first assumption we want to challenge is that AMD's processors are the weak link. There's no question that Intel's CPUs are faster, but does that matter? For several years, Intel has been sandbagging its mainstream CPUs, so it clearly thinks there's only so much CPU performance you need.

A big part of this process is to revisit AMD CPUs in the context that matters to us: games. The thing about games is that you need a minimum level of performance, but don't benefit much from large multiples of that. In other words, dropping below a certain frame rate is very bad, but whether you have twice or 10 times that rate is fairly inconsequential. If you've got a bit of a margin, that's fine.
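To make the "good enough" idea concrete, here is a minimal sketch in Python. The 60fps target, the 10 per cent margin and both function names are illustrative assumptions, not figures from our testing. It simply models a benefit that climbs until you hit the target frame rate and then flattens out.

```python
def perceived_benefit(fps: float, target_fps: float = 60.0) -> float:
    """Return a 0..1 score that rises up to the target frame rate, then flattens.

    target_fps is a hypothetical threshold chosen for illustration only.
    """
    if fps <= 0:
        return 0.0
    # Anything above the target adds nothing further to the score.
    return min(fps / target_fps, 1.0)


def is_good_enough(min_fps: float, target_fps: float = 60.0, margin: float = 1.1) -> bool:
    """Check whether the minimum frame rate clears the target with a bit of margin.

    margin is an assumed safety factor (10 per cent here), not a measured value.
    """
    return min_fps >= target_fps * margin


if __name__ == "__main__":
    for fps in (30, 70, 120, 600):
        print(fps, perceived_benefit(fps), is_good_enough(fps))
```

Under these assumptions, a system that holds 70fps and one that holds 600fps score the same, while one that dips to 30fps scores half as well. That is the whole argument in miniature.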

Moreover, as soon as you shift the emphasis to what's good enough rather than what wins in benchmark fisticuffs, you can take a much more balanced view of configuring your system. Suddenly, the merits of going end-to-end with AMD and pairing good-enough CPUs with excellent GPUs might make sense. It's a bold experiment.

Maybe it won't work and AMD's CPUs are the weak link after all. But with Intel locking down features and locking out joy of ownership, it's got to be worth a try.

Since we're asking you to reboot your relationship with AMD, it's only fair if we come clean too. We've probably been too keen to beat the Intel drum when it comes to PC performance. That's not because Intel doesn't make the fastest CPUs. By any sane and objective measurement, Intel's chips are the quickest, and the most powerful you can buy for a PC. We haven't been wrong about them in that sense.

Put another way, the problem isn't that Intel doesn't make great products. It does, but almost everything about the way it markets and sells the things stinks. What's more, simple and objective measurements of raw CPU performance are only one part of the story here. Intel itself has tacitly conceded that CPU performance has become a non-issue.

That's why its mainstream processor models have topped out at four cores for the last four generations. There's only so much CPU performance most people need, and a modern quad-core chip does the job just fine.

That's why Intel's six-core PC processors are really server chips that in turn require a silly, overly complex server platform. Okay, it's not hard to think of a situation where sheer CPU grunt makes all the difference. If your PC's chief workload is crunching high-quality video encodes, you want to throw as much money at the CPU as humanly possible. But be honest - does that really resemble how you usually use it?

A more realistic scenario involves a pretty mixed bag of applications and software, and if you're anything like us, quite a lot of games. Suddenly, the whole question of how you should specify your PC becomes more nuanced. How should you balance CPU and GPU in your overall budget? Are you gaining or losing from an overall platform perspective?

Things get even more complex when you throw Nvidia graphics into the mix. In fact, the latest Kepler generation of Nvidia graphics chips is a great example of how certain assumptions can distort the market.

But we'll come to that in a moment. First, let's revisit the pros and cons of AMD's current CPUs and have a little think about the reasons why they've fallen so far out of favour.

Much of it comes down to expectations that work on many levels. For starters, the computing industry has us trained into a state of perfect - you could say Pavlovian - expectation. Either Intel or AMD rings the bell of a new generation of chips, and we all drool in anticipation of mighty performance gains. Anything else is seen as a rank failure.

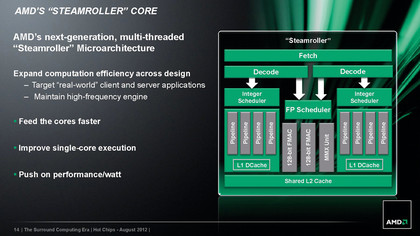

We don't just want a new chip to be better overall - we're offended if it seems to fall short in any area, and we're offended if it's not the fastest thing out there. That's why AMD's FX processors based on the Bulldozer architecture received a frosty reception at launch. Overall, Bulldozer is a step forward over AMD's Phenom II CPUs, but it's still a long way off Intel's finest.

Its greatest sin was to be slower in some areas - most notably single thread performance - than its Phenom II progenitor. In the context of PC technology any sort of step backward is awfully hard to stomach, but a new processor that was slower at crunching a single thread of code? Unforgivable.

This month, we want to comprehensively reassess that assumption and reboot the thinking process, because what matters isn't performance relative to Intel. What actually matters is whether AMD's chips are good enough. If they are good enough, we can then begin to look at all the other factors, like pricing and platform comparisons.

Consumer-friendly

Without doubt, there are plenty of reasons to like AMD chips. Reasons like AMD's support for existing customers by maintaining socket compatibility for as long as humanly possible. It just gives you more options when it comes to upgrading either your CPU or motherboard.

The way Intel does things - particularly with the transition from LGA1156 to LGA1155 - sometimes seems like change for change's sake; a cynical ruse to force you to buy a new motherboard and CPU together.

Don't forget, third-party chipsets no longer exist, so any Intel motherboard you buy means money trickling into Intel's coffers. Likewise, it's nice to have full ownership and control of the product you just paid handsomely for.

Increasingly, Intel is locking out access to features and functionality depending on price. Thus, some dual- and quad-core processors have HyperThreading switched off, despite it being there in the hardware, and all but Intel's K Series and Extreme Edition chips have mostly locked or entirely locked CPU multipliers, putting the kybosh on overclocking. Meanwhile, all AMD FX processors are fully unlocked, and there aren't any features hidden away for marketing reasons.

On the graphics side, of course, it's Nvidia that's providing the uncomfortable comparisons. AMD has generally been a lot more competitive when it comes to pumping pixels than it has crunching raw code, but there's still been the odd dropped ball, such as the Radeon HD 2000 and, to a lesser extent, 3000 series.

The association with AMD's ailing CPUs hasn't helped the branding effort either. That only looks worse when the competition seems to have the edge. When Nvidia's GeForce GTX 680 (the first card from the new Kepler generation) appeared, it seemed like there was something unnatural going on. Here was a relatively compact graphics chip with a transistor count much lower than we were expecting from a new flagship GPU at the time, and yet it had the measure of AMD's larger, much more complex Radeon HD 7970 in the benchmarks.

As it happens, the situation mirrored the comparison between AMD's Bulldozer chips and the Intel Core family of processors. In both cases, AMD's technology looked bloated and inefficient next to the competition. Perhaps that's why Nvidia's explanation for the performance of Kepler cards seemed so plausible.

In any case, it combined with that well-established shortfall on the CPU side to give the impression of AMD as an also-ran. Given the choice, you'd pick Nvidia.

The explanation for the efficiency of the Kepler generation seems plausible enough, too. This time around, Nvidia has focused on maximising graphics performance to the detriment of general purpose workloads running on its GPUs, otherwise known as GPGPU, so a whole hunk of transistors can be chucked out without hurting games performance. That's the theory.

The problem is, graphics performance is an awful lot more nuanced than CPU grunt. Broadly speaking, you can get a good idea of CPU performance with just a few tests, and it's unlikely you'll uncover anything nasty and surprising down the road.

For the most part, that's because CPUs are general purpose items. Yes, there is some separation of workloads when it comes to integer versus floating point performance, and some architectures can get caught out by things like cache performance and memory bandwidth, which will only show up in certain applications. But on the whole CPUs are as CPUs do.

Not so for graphics. The key difference is that GPUs are still effectively fixed function processors. Programmability has increased enormously compared to chips of a decade ago. But you'll still find things like texture units, rasterisers and render output units in modern GPUs.

What's more, even the most highly programmable units - the stream processors - aren't general purpose to anything like the same extent as a CPU core. The upshot of all this is massively more scope for inconsistent performance, at least in comparative terms.

So one GPU might be a monster at calculating pixel shaders at a certain precision level, but be really pants when it comes to tessellation. We don't want to give away too much at this stage. But let's just say that there are areas where Nvidia's decision to hack out a lot of hardware from the Kepler generation suddenly makes itself apparent where it matters: in games.

Locked out

The final major piece of the puzzle is the underlying platform - the motherboard and its chipset, in other words. It's both good and bad news that third-party chipsets essentially no longer exist.

You'll need an AMD chipset to go with your AMD CPU, and the same applies to Intel hardware. Less choice is less confusing, but on the Intel side of the equation it adds to the sense of a lack of competition.

Here again, Intel's awful marketing rears its hideous head. The result is chipsets with features arbitrarily locked out. For instance, Intel only allows overclocking with certain chipsets. Combine that with the limited number of overclockable CPU models and you find yourself completely backed into a corner when it comes to building an Intel rig, with very little choice over models and prices.

Intel's track record for supporting existing customers is awful, too. It recently announced that it had finally cracked the problem of support for the all-important TRIM command for SSDs used in RAID arrays, and there was much rejoicing. Then we learned the software update would only be made available for the latest 7 Series chipsets. We're pretty sure that there's nothing in the hardware to prevent the update being made available for, say, 6 Series chipsets. It's just Intel's way.

We're not convinced Intel is really pushing the envelope when it comes to chipset features, either. Okay, Intel deserves credit for pushing through PCI Express 3.0 on the 7 Series chipsets, but why did it take so long to include USB 3.0 natively?

And what the rubbery duck is going on with Intel's SATA 6Gbps support? Here in the cold, harsh light of 2012, you still only get two out of six SATA ports with 6Gbps support on most 7 Series chipsets (and on the exceptions, you only get one). And this in an environment in which SATA 6Gbps, much less the slower 3Gbps standard, is already holding back performance with the fastest current SSDs.
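For a rough sense of what those port speeds mean, here is a back-of-the-envelope sketch in Python. It relies on the fact that SATA uses 8b/10b encoding, so every byte of data costs 10 bits on the wire. The function name is our own, and the results are theoretical ceilings before protocol overhead, not measured drive speeds.

```python
def sata_payload_mb_per_s(line_rate_gbps: float) -> float:
    """Approximate usable SATA bandwidth in MB/s for a given line rate.

    Assumes 8b/10b encoding (10 line bits per data byte) and ignores
    any further protocol overhead, so real-world figures will be lower.
    """
    bits_per_second = line_rate_gbps * 1e9
    bytes_per_second = bits_per_second / 10  # 10 bits on the wire per byte of data
    return bytes_per_second / 1e6


if __name__ == "__main__":
    for rate in (3.0, 6.0):
        ceiling = sata_payload_mb_per_s(rate)
        print(f"SATA {rate:g}Gbps: roughly {ceiling:.0f} MB/s at best")
```

On that basis, a 3Gbps port tops out at about 300MB/s and a 6Gbps port at about 600MB/s, so any drive capable of more than that is limited by the interface rather than by its flash.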

Meanwhile, AMD has been supporting 6Gbps SATA across the board with many chipsets for the past two generations. Admittedly, AMD has yet to include USB 3.0 on its mainstream desktop PC chipsets. For now, only its chipsets for the A Series Fusion processors get native USB 3.0 treatment.

Then there's the end-to-end argument. Much to Intel's chagrin, only AMD can offer a full solution for your PC: a decent CPU, a decent motherboard chipset and decent graphics. Intel still can't do the graphics. That's why AMD has been bigging up the overall platform proposition of late, claiming there's a tangible benefit to running AMD CPUs and GPUs together.

Much of the reason for that strategy is the simple fact of the relatively weak links that are its CPUs, but it doesn't mean there's no truth to it.

Ultimately, all of this adds up and all of it matters. If, for instance, Intel didn't lock down overclocking to a small handful of PC processors. If it didn't lock down overclocking to certain chipsets. If it supported its existing customers more fully. If it was pushing the envelope of mainstream PC performance and features instead of sandbagging. If it sold its wares based on merit rather than marketing nonsense. If all of that was true, we wouldn't be here, begging you to reconsider AMD.

As we've said before, Intel has great products. But Intel does lock down its products, sneer at existing customers and sandbag on an increasingly epic scale. Just as important is the question of whether AMD's CPUs are good enough rather than how they compare to Intel products. If they are, suddenly there's a very strong case for choosing them.

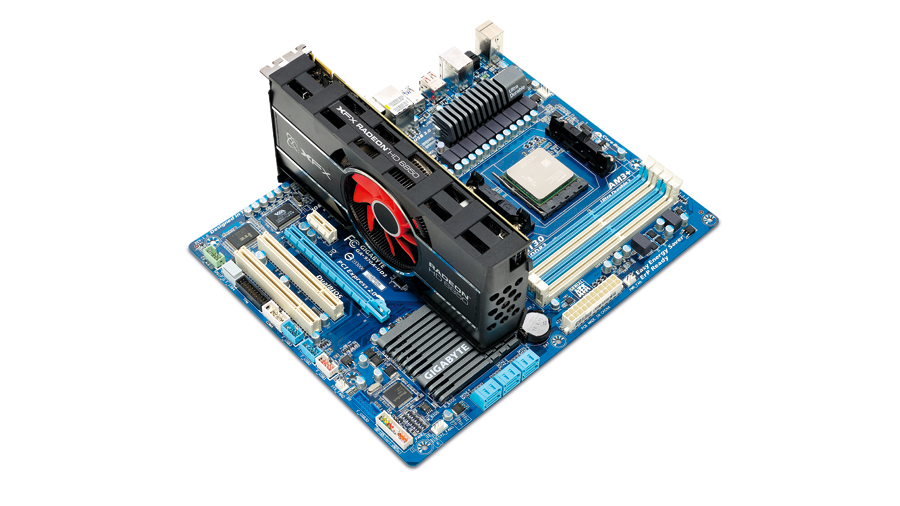

Admittedly, we're not dealing with any new products here. We've taken four-core, six-core and eight-core versions of AMD's FX chip, and matched them with AMD motherboards and graphics. All are well-known quantities, but that's the point - maybe it's our attitudes and not AMD's products that are really in need of a refresh. Let's find out.

Technology and cars. Increasingly the twain shall meet. Which is handy, because Jeremy is addicted to both. Long-time tech journalist, former editor of iCar magazine and incumbent car guru for T3 magazine, Jeremy reckons in-car technology is about to go thermonuclear. No, not exploding cars. That would be silly. And dangerous. But rather an explosive period of unprecedented innovation. Enjoy the ride.