This hardware enthusiast wanted to build the world's worst graphics card with a 128KB ROM, but couldn't get it below VGA resolution

The entire build was ugly and essentially pointless

- Crude GPU design showed random glitches whenever the system attempted memory writes

- iNapGPU struggled with environmental noise from something as simple as a nearby USB cable

- A 12MHz counter overclocked to 20MHz caused constant instability

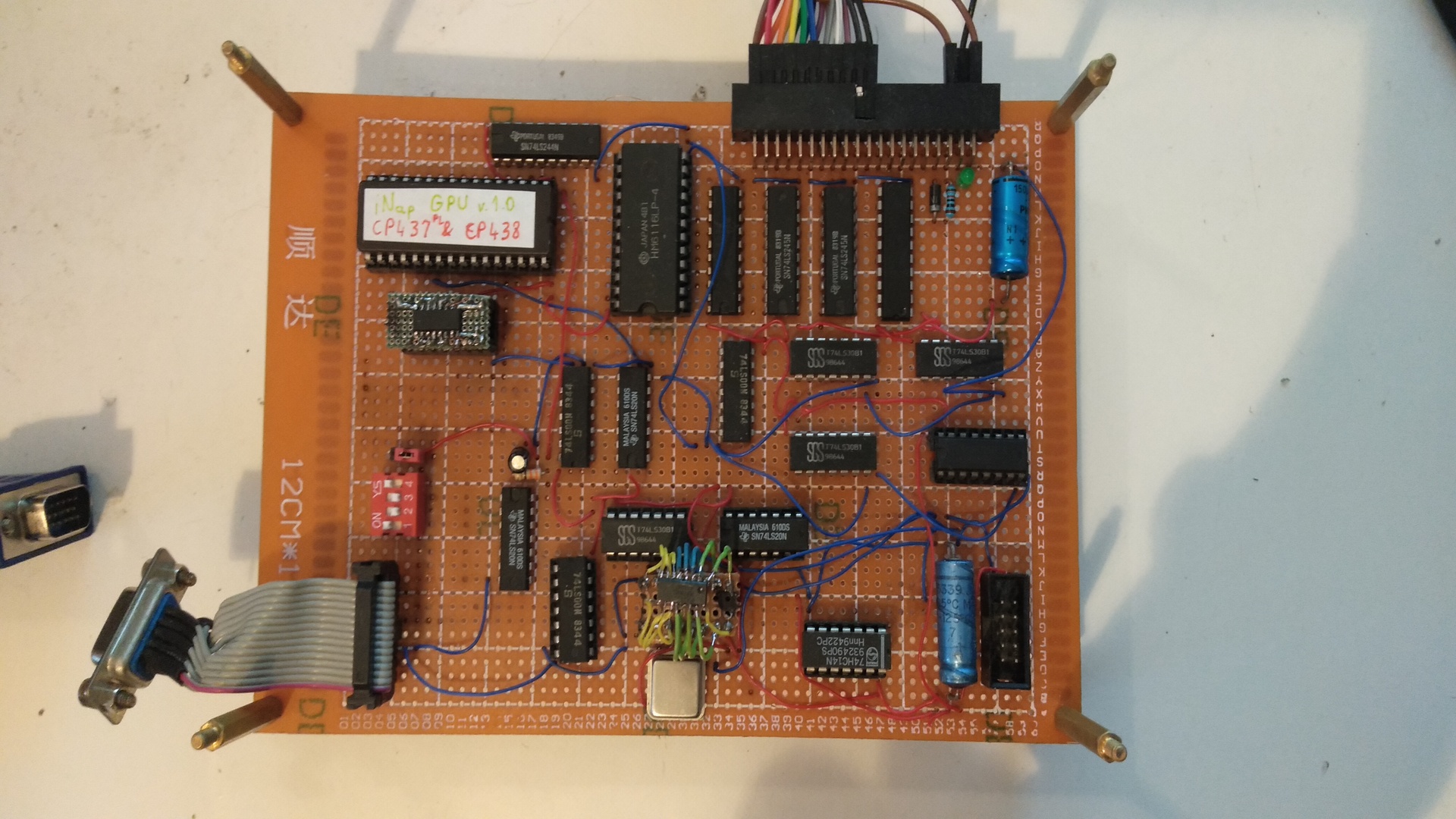

An obscure project on GitHub shows how a hardware hobbyist tried to construct what he called the “second world’s worst video card,” a text-mode graphics card using only TTL gates.

Working under the handle Leoneq, he released the “iNapGPU” repository to document his experiment.

His goal was to outdo Ben Eater’s “world’s worst video card” by making something even less practical.

A minimal design that still exceeded true VGA limits

Despite deliberately using crude methods, he could not reduce the output below a basic VGA resolution.

The project specifications list VGA output at 800 x 600 (technically SVGA) at 60Hz, with an accessible resolution of 400 x 300 in monochrome.
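
The 400 x 300 figure follows from ordinary 800 x 600 timing arithmetic. The sketch below assumes the standard VESA 800 x 600 @ 60Hz timing (40MHz pixel clock, 1056 x 628 total including blanking) and assumes each stored row is drawn on two scanlines; the article does not give the project's exact porch values. Halving the clock to 20MHz halves the number of pixels that fit in each visible line.

```python
# Standard VESA 800x600 @ 60 Hz timing. These values come from the VESA mode
# table (an assumption here), not from the iNapGPU repository.
H_VISIBLE, H_FRONT, H_SYNC, H_BACK = 800, 40, 128, 88   # pixel periods per line
V_VISIBLE, V_FRONT, V_SYNC, V_BACK = 600, 1, 4, 23      # lines per frame
NOMINAL_PIXEL_CLOCK_HZ = 40_000_000

H_TOTAL = H_VISIBLE + H_FRONT + H_SYNC + H_BACK  # 1056 pixel periods per line
V_TOTAL = V_VISIBLE + V_FRONT + V_SYNC + V_BACK  # 628 lines per frame


def refresh_rate_hz(pixel_clock_hz: int) -> float:
    """Frame rate produced when this clock drives the full 1056 x 628 timing."""
    return pixel_clock_hz / (H_TOTAL * V_TOTAL)


def accessible_resolution(clock_hz: int, line_repeat: int) -> tuple[int, int]:
    """Addressable pixels when a slower clock stretches each pixel horizontally
    and each stored row is repeated over `line_repeat` scanlines."""
    width = H_VISIBLE * clock_hz // NOMINAL_PIXEL_CLOCK_HZ
    height = V_VISIBLE // line_repeat
    return width, height


if __name__ == "__main__":
    print(f"{H_TOTAL} x {V_TOTAL} total, {refresh_rate_hz(NOMINAL_PIXEL_CLOCK_HZ):.2f} Hz")
    print(accessible_resolution(20_000_000, 2))  # 20 MHz clock, each row drawn twice
```

Running it prints a 1056 x 628 total at about 60.32Hz and (400, 300) for the 20MHz case. The monitor still sees normal 800 x 600 line and frame rates; only the number of distinct pixels per line drops.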

The hardware was built from 21 integrated circuits, including counters, NAND gates, and an EPROM working with a small SRAM.

By treating a 1-Mbit EPROM as a 1-bit memory, Leoneq could load up to four character sets of 255 characters each.
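
The capacity figures are easy to check. The sketch below is a hypothetical address layout, not the one in the iNapGPU repository: it assumes the 1-Mbit part is a byte-wide 128K x 8 chip whose data bits are selected one at a time, which gives 2^20 one-bit addresses. Two address bits pick one of four sets and eight pick a character slot (256 slots, enough for the 255 characters cited), leaving ten bits for pixels inside each glyph.

```python
# Hypothetical bit-level layout for a 1-Mbit character ROM read one bit at a time.
# Field order and widths are illustrative assumptions, not iNapGPU's wiring.
EPROM_BITS = 1024 * 1024                     # 1 Mbit = 1,048,576 bits = 128 KB
ADDRESS_BITS = EPROM_BITS.bit_length() - 1   # 20 address bits for single-bit reads

SET_BITS = 2                                 # one of four character sets
CHAR_BITS = 8                                # one of 256 character slots
PIXEL_BITS = ADDRESS_BITS - SET_BITS - CHAR_BITS  # 10 bits left per glyph


def _check(name: str, value: int, width: int) -> None:
    """Reject values that do not fit in a field of `width` bits."""
    if not 0 <= value < 1 << width:
        raise ValueError(f"{name}={value} does not fit in {width} bits")


def glyph_bit_address(char_set: int, char_code: int, pixel: int) -> int:
    """Pack set, character and pixel index into one bit address."""
    _check("char_set", char_set, SET_BITS)
    _check("char_code", char_code, CHAR_BITS)
    _check("pixel", pixel, PIXEL_BITS)
    return (char_set << (CHAR_BITS + PIXEL_BITS)) | (char_code << PIXEL_BITS) | pixel


def byte_and_data_line(bit_address: int) -> tuple[int, int]:
    """Split a bit address for a byte-wide (x8) EPROM: the upper bits go to the
    chip's address pins, the low 3 bits choose which of D0..D7 to use
    (for example through an 8-to-1 multiplexer, an assumed arrangement)."""
    return bit_address >> 3, bit_address & 0b111


if __name__ == "__main__":
    last = glyph_bit_address(3, 255, (1 << PIXEL_BITS) - 1)
    print(ADDRESS_BITS, PIXEL_BITS, last, byte_and_data_line(last))
```

With this split each set occupies 2^18 bits (32KB) and each character slot 1,024 bits; a real design could use fewer bits per glyph and leave part of the chip unused.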

However, using tri-state buffers and a basic counter arrangement led to visual artifacts and poor stability.

Even with low-capacity memory and no microcontroller, the design still could not be pushed below VGA resolution.

Leoneq admitted that the assembly process was awkward, relying on 0.12mm wire on a protoboard rather than a printed circuit board.

He described the result as terrible and warned others to “use fpga instead” to avoid similar frustrations.

The HSYNC timer was driven by a 12-bit counter rated for only 12MHz at 15V, yet he pushed it to 20MHz to double Ben Eater’s pixel clock.

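Instead of comparing full counter values, he checked only the counter outputs that should read “1” at each target count. Because any later count with those same bits set also matches, the shortcut introduced repeated signals, although without breaking the display.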
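
The “ones only” shortcut can be illustrated in a few lines. This is a hedged sketch that assumes the horizontal counter runs from 0 to 527 per line (standard 800 x 600 line timing with every count halved for a 20MHz clock); the actual compare points in iNapGPU may differ. A full comparator checks every bit, ones and zeros; the shortcut gates only the bits that are 1 in the target, so it also fires at later counts that happen to have those bits set.

```python
from collections.abc import Callable

# Assumed horizontal compare points for a 20 MHz clock: VESA 800x600 line
# timing with every count halved (illustrative, not taken from the repository).
H_VISIBLE, H_FRONT, H_SYNC, H_BACK = 400, 20, 64, 44
H_TOTAL = H_VISIBLE + H_FRONT + H_SYNC + H_BACK   # 528 counts per line
SYNC_START = H_VISIBLE + H_FRONT                  # 420
SYNC_END = SYNC_START + H_SYNC                    # 484


def full_compare(count: int, target: int) -> bool:
    """Equality comparator: needs every counter bit, ones and zeros."""
    return count == target


def ones_only_compare(count: int, target: int) -> bool:
    """Gate only the counter outputs that are 1 in `target` (e.g. one NAND
    per compare point); any count containing those bits also matches."""
    return count & target == target


def matches(compare: Callable[[int, int], bool], target: int) -> list[int]:
    """Counts within one line at which a comparator fires."""
    return [c for c in range(H_TOTAL) if compare(c, target)]


if __name__ == "__main__":
    print("full:", matches(full_compare, SYNC_START))
    print("ones only:", matches(ones_only_compare, SYNC_START))
```

Counting up from zero, the first match is always the target itself, since any number containing all of the target's 1 bits is at least as large as the target. The later matches are the repeated signals; whether they disturb the picture depends on what each comparator output drives.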

The unconventional approach kept the card functional, but it also revealed timing errors and unstable output.

This was never a viable graphics card: the image glitched whenever the card wrote to memory, because it could not read and write at the same time.

Also, environmental noise, even from a nearby USB cable, distorted the display.

In addition, the characters lacked clarity due to ROM power and read-time limitations, while unexplained lines appeared in the background.

Leoneq openly labeled the image as ugly and described the entire effort as a “huge waste of time.”

Although the project demonstrates that a crude collection of TTL gates can generate a usable VGA signal, it also shows why modern designers prefer programmable logic such as FPGAs.

Leoneq’s repository provides conversion tools and test code for the Arduino Mega, but the effort reads more like a technical joke than a practical product.

Efosa has been writing about technology for over 7 years, initially driven by curiosity but now fueled by a strong passion for the field. He holds both a Master's and a PhD in sciences, which provided him with a solid foundation in analytical thinking. Efosa developed a keen interest in technology policy, specifically exploring the intersection of privacy, security, and politics. His research delves into how technological advancements influence regulatory frameworks and societal norms, particularly concerning data protection and cybersecurity. Upon joining TechRadar Pro, in addition to privacy and technology policy, he is also focused on B2B security products. Efosa can be contacted at this email: udinmwenefosa@gmail.com
