How Google produces relevant search results

Search engines can seem like magic. You type what you're looking for into a text box, and – ping! – it appears mere milliseconds later. Of course, we all know that Google relies on more than a magic wand to produce relevant search results; but what exactly does it use instead?

Well, any search engine has three distinct phases: spidering the web to find and read web pages, indexing the pages found, and responding to user queries to produce a set of ranked results.

In this article we're not going to talk about spidering at all, but you can imagine the spider as a special program that reads a web page, analyses the source HTML and then follows the links it finds. The pages are then stored and further analysed.

Indexing is an interesting algorithm. The purpose of indexing the web pages found through spidering is to produce fast and accurate search results. If it's not fast, the user will go elsewhere; if it's not accurate, then ditto.

This is where Google out-did its competitors at the start: it was faster (all the way from loading the page to the production of the results) and it was more accurate (due to its use of the PageRank algorithm). Once the search engine has indexed a huge number of pages, it can start to respond to search queries.

The index must be built in such a way that the search engine can speedily produce a list of web pages that satisfy the query, and rank them so that the most relevant results are shown first. So the index is of paramount importance, but the ranking algorithm is equally significant.

After all, before Google and PageRank, there were other search engines like AltaVista that knew how to index. It was Google's ranking that changed everything.

The standard type of data structure for text indexes is known as an inverted file. Suppose we have a set of text documents. The first stage is to analyse the content by parsing the text in order to identify the individual words (or tokens) in the text.

Once we've decided what counts as a word, writing a parser is pretty simple. We can use something like a state machine (or automaton) or regular expressions (which, after all, were designed for this kind of text parsing task). But what are we actually looking for?

The simple, intuitive answer is 'the bits in between the white space'. But that simple answer reveals a difference between how we humans scan text and how the computer does. For example, take another look at the first sentence in this paragraph. Implementing this definition as a program (and using square brackets to delimit the tokens here), we would see words like [simple,], ['the] and [space'.].
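To see the problem for ourselves, here is a minimal sketch that applies the 'bits in between the white space' definition to that first sentence. It leans on Python's built-in `str.split()`, which is just one convenient way to split on white space.

```python
# The first sentence of the paragraph above, quoted verbatim.
sentence = "The simple, intuitive answer is 'the bits in between the white space'."

# With no arguments, split() breaks the string on runs of white space only,
# so any punctuation stays glued to the neighbouring word.
tokens = sentence.split()

for token in tokens:
    print(f"[{token}]")
```

The commas, quotation marks and full stop all end up inside the tokens, which is exactly what we don't want in an index.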

Since that's a little too basic, we might refine the definition to 'words are the bits in between the white space and punctuation'. But again, we fall into a trap: for the first sentence in this paragraph, this definition would now produce the words [that] and [s] for the word 'that's'.
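The refined definition is easy to try out with a regular expression. This is only a sketch: the pattern below treats any run of letters or digits as a word, which is my own stand-in for 'everything that isn't white space or punctuation'.

```python
import re

# The opening words of the paragraph above.
sentence = "Since that's a little too basic, we might refine the definition."

# Treat every run of letters or digits as a word; white space and all
# punctuation (including the apostrophe) act as separators.
tokens = re.findall(r"[A-Za-z0-9]+", sentence)

print(tokens)
```

The comma after 'basic' is now handled properly, but the apostrophe has chopped 'that's' into two separate tokens.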

So probably the best answer – at least for the purposes of indexing English text – is to write a parser that, when it sees a punctuation character, checks the character after the punctuation mark.

If it's a letter, then it's most likely to be a contraction like 'that's' or 'it's', and the punctuation should be included in the word. Even better, these days documents will contain things like email addresses and URLs. These can be viewed as 'words' in their own right, and this word-parser algorithm would cope with most of them too.
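One way to sketch this look-ahead rule is as a tiny hand-written scanner. The function name `tokenize` and the decision to count digits as well as letters are assumptions made for the example, not part of any particular search engine.

```python
def tokenize(text):
    """Split text into words, keeping a punctuation mark only when it sits
    inside a word, that is, when the next character is a letter or digit."""
    tokens = []
    current = []  # characters of the word being built
    for i, ch in enumerate(text):
        next_is_word_char = i + 1 < len(text) and text[i + 1].isalnum()
        if ch.isalnum():
            current.append(ch)
        elif not ch.isspace() and current and next_is_word_char:
            # Punctuation in the middle of a word (that's, bob@example.com).
            current.append(ch)
        elif current:
            # White space, or punctuation at the edge of a word: end the word.
            tokens.append("".join(current))
            current = []
    if current:
        tokens.append("".join(current))
    return tokens


print(tokenize("Since that's a little too basic, mail bob@example.com."))
```

Contractions and email addresses survive intact with this rule. A full URL is only partly covered, though: the '://' after the scheme is punctuation followed by more punctuation, so a real parser would probably need an extra rule for it.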

Of course, for other languages, parsing words could require other algorithms depending on issues like the character set being used (ideographic text, for example, would be interesting) or the grammar rules for the language.

In parsing the text, it's likely that we'll find duplicate words. For a simple index, such as the one we're using here, all we need to know is whether a particular document includes a given word. We're not particularly interested in whether the document uses that word once, twice or a thousand times. After all, when we get to displaying the list of search results, the user will just click on a given link and go to that particular URL to browse the document.

In our parsing exercise, then, we should ignore duplicates and aim for a list of words used. The next step is to throw out the words that have no searchable meaning because every document will use them. Examples of these stop words (as they're called) are 'the', 'a', 'is', 'I', 'in' and so on (and those last three words too!).
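Both steps, dropping duplicates and throwing out stop words, map neatly onto set operations. In this sketch the `STOP_WORDS` set is a tiny illustrative list rather than a real one, and lower-casing the words before comparing them is an extra assumption of mine.

```python
# A deliberately tiny, illustrative stop word list.
STOP_WORDS = {"the", "a", "is", "i", "in", "and", "so", "on"}


def unique_terms(tokens):
    """Return the distinct, lower-cased words that are not stop words."""
    # Building a set removes the duplicates; the set difference then
    # removes every stop word in one go.
    return {token.lower() for token in tokens} - STOP_WORDS


print(sorted(unique_terms(["The", "cat", "is", "in", "the", "hat", "Cat"])))
```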

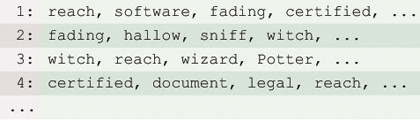

Every application of a text indexing algorithm will use its own set of stop words, and these lists will be built up and maintained at runtime from the content being seen. After all that is done, we'll have a table of document names with a list of words for each document, as shown in Figure 1.

Figure 1: A forward index lists all of the words that are found in a particular document
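A table like this can be sketched as a dictionary keyed by document number. The three documents, the stop word list and the simplified word pattern below are all made up for the example.

```python
import re

# Illustrative stop word list and documents; none of this is real data.
STOP_WORDS = {"the", "a", "is", "i", "in", "and", "so", "on"}

documents = {
    1: "The cat is in the hat",
    2: "A dog and a cat",
    3: "The hat is on the dog",
}


def terms(text):
    """Parse text into a set of distinct words, minus the stop words."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return set(words) - STOP_WORDS


# Forward index: document number -> words found in that document.
forward_index = {doc_id: terms(text) for doc_id, text in documents.items()}

for doc_id, words in forward_index.items():
    print(doc_id, sorted(words))
```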

Here I've identified the documents by number, and in practice we'd have another table that cross-references these numbers to the actual URL for each document. The next step is to invert this table; after all, for a search we're going to be given a word, and we have to identify the documents that contain this word.

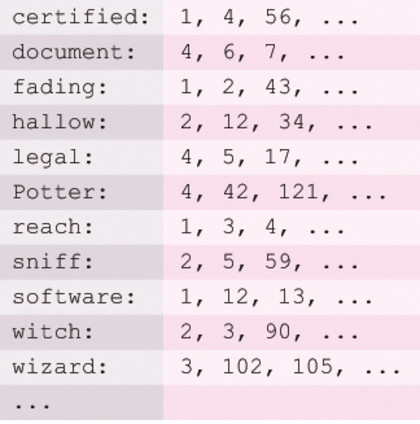

The word should be the key, and at the moment the table is keyed by document number. What we end up with is a table as shown in Figure 2. The table is keyed by word, and the data for each record in the table is a list of document numbers. This structure is known as an inverted file. Starting from a table of words per document, we end up with a table of documents per word.

Figure 2: This inverted index is a list that shows which documents contain a certain word
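The inversion itself takes only a few lines. This sketch starts from a small made-up forward index; the `invert` and `search` names are hypothetical, and answering a multi-word query by intersecting the document lists is an assumption of mine that goes a little beyond the single-word lookup described above.

```python
from collections import defaultdict

# A small made-up forward index: document number -> words in the document.
forward_index = {
    1: {"cat", "hat"},
    2: {"cat", "dog"},
    3: {"dog", "hat"},
}


def invert(forward_index):
    """Turn a documents-to-words table into a words-to-documents table."""
    inverted = defaultdict(set)
    for doc_id, words in forward_index.items():
        for word in words:
            inverted[word].add(doc_id)
    return dict(inverted)


def search(inverted_index, query_words):
    """Return the documents that contain every word in the query."""
    postings = [inverted_index.get(word, set()) for word in query_words]
    return set.intersection(*postings) if postings else set()


inverted_index = invert(forward_index)
print(sorted(inverted_index["cat"]))
print(sorted(search(inverted_index, ["cat", "hat"])))
```

Looking up a word is now a single dictionary access, which is what makes answering a query fast. Ranking the matching documents is a separate step that this sketch doesn't attempt.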