Forget the space race to Mars - the real technological arms race is taking place in super-cooled bunkers across the globe as countries compete to take the crown of fastest supercomputer on Earth.

Just like the scramble to get to the moon in the '60s, the pride of nations is at stake and the rewards in terms of fringe technology benefits have the potential to be huge.

Supercomputers allow us to discover new drugs, predict the weather, fight wars for us and make us money on the stock market. However, these are just the more common applications; there really is no data-analysing task that can't be solved much faster when the brute power of these computing machines is unleashed.

Being the owner of the most supercomputing power really does give you an edge over the rest of the world, so it's no wonder people pay attention to who is leading the field. So who is pushing the supercomputing boundaries, what technologies are they employing and is this technology race more politics than science? We investigate...

Supercomputers are defined as the fastest computers at any given time, and they are big business. They provide the power to dramatically reduce research time, to accurately simulate and model real life systems, and to help design products perfectly suited for their environment.

Nations that invest in supercomputers can quickly reap the benefits in terms of technological advances, not to mention the kudos of being at the very forefront of technologies that are already changing the world we live in.

There is a definite pride in being the best in the supercomputing sphere - a pride America had enjoyed since 2004. It was therefore big news stateside when China's Tianhe-1A, based at the National Supercomputer Centre in Tianjin, took the supercomputing crown from America's Jaguar system at Oak Ridge National Laboratory in November 2010.

For years the US had enjoyed its status as the leader in the field, and despite losing the top spot, it could take some solace from the fact that the Tianhe-1A was built from US technology - specifically Nvidia GPUs.

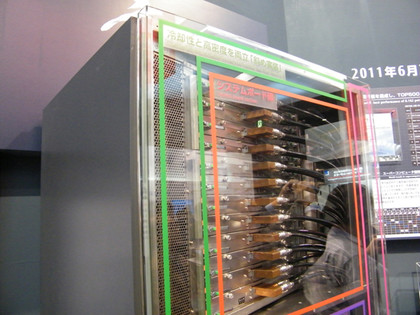

That all changed when Japan knocked the US down to third place in June 2011 with its K Computer, built from Fujitsu's SPARC64 VIIIfx processors. It had been seven years since Japan last held the title of fastest supercomputer on Earth (in 2004 with NEC's Earth Simulator), and when the K Computer took pole position, it did so in style.

It harnessed more processing power than the next five supercomputers combined despite the fact it wasn't even yet completed. It was commissioned by the Japanese Ministry of Education, Culture, Sports, Science and Technology with the aim of breaking the 10 petaflop barrier (the K in its name refers to the Japanese word 'kei', which means 10 quadrillion).

In June 2011 it comprised just 672 computer racks of its planned 800, but with 68,544 CPUs operational it still scored a LINPACK benchmark of 8.162 petaflops (quadrillion floating-point operations per second). By November 2011 it had reached its goal: with 864 server racks and 88,000 CPUs, it achieved a LINPACK benchmark of 10.51 petaflops (93 per cent of its theoretical peak speed of 11.28 petaflops).
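The percentages above are simple ratios, and a short Python sketch makes the arithmetic explicit. The function names are illustrative only, and the inputs are just the figures quoted in this article:

```python
def linpack_efficiency(measured_pflops, peak_pflops):
    """Fraction of theoretical peak actually achieved on the benchmark."""
    return measured_pflops / peak_pflops


def gigaflops_per_cpu(measured_pflops, cpu_count):
    """Average benchmark throughput per CPU, in gigaflops (1 petaflop = 1e6 gigaflops)."""
    return measured_pflops * 1e6 / cpu_count


# November 2011: 10.51 petaflops measured against an 11.28 petaflop peak.
november_efficiency = linpack_efficiency(10.51, 11.28)
print(f"Efficiency: {november_efficiency:.0%}")

# June 2011: 8.162 petaflops spread across 68,544 CPUs.
june_per_cpu = gigaflops_per_cpu(8.162, 68_544)
print(f"Per CPU: {june_per_cpu:.1f} gigaflops")
```

On these numbers, each CPU in the June configuration was contributing roughly 119 gigaflops to the benchmark result.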

A supercomputing power shift

Suddenly the east's dependence on western technology looked shaky, and America's dominance was under threat. Despite still owning the majority of the supercomputers on the top 500 list (255 in June 2011, to be exact), America's claim to being the supercomputer power was further questioned in October 2011 with the announcement of China's new supercomputer, the Sunway BlueLight MPP.

This wasn't because it was the most powerful - it's thought to be about the 15th fastest machine in the world - but because it was built entirely from Chinese-made processors, making it the first Chinese supercomputer that doesn't use Intel or AMD chips.

The arrival of the Sunway realises China's dream to sever its reliance on US technology. This isn't just rhetoric, but a stated ambition of the Chinese government.

What's more, the Sunway's small size and relative energy efficiency compared to other supercomputers in the top 20 have surprised top computer scientists, and some commenters believe this is a sign that China is about to leapfrog its competitors with the next iterations of its own technology.

China's Sunway BlueLight MPP is built from ShenWei SW-3 1600 chips (16-core, 64-bit MIPS-compatible (RISC) CPUs) that are thought to be a few generations behind Intel's current chips, but with the large funding base provided by the Chinese government, it's unlikely that new versions of these chips are far away.

ShenWei CPUs are believed to be based on China's Loongson/Godson architecture, which China has been working on since 2001. Some people believe this family of chips was reverse engineered from the US-made DEC Alpha CPU.

This may be true, but the nice thing about reverse engineering products is that it allows you to apply hindsight to your design - to redesign it and remove all those hangovers and unnecessary artefacts from previous iterations - hence their better energy efficiency and smaller size.

With the US seemingly losing headway in the supercomputing world, its government has been left asking how you determine the best supercomputer in the world. These supercomputer world rankings in terms of power are all very well, but a lot of people, including President Obama, have pointed out that it isn't what your supercomputer can do, but what you do with it.

They argue that supercomputing isn't just about raw power, but how that power is used, how much electricity it consumes, and how fast it really is at bringing about real world solutions to problems.

Who dares to judge?

So who judges which supercomputer takes the crown, and what criteria do they use? Most people are happy to be guided by the Top 500 List of Supercomputers.

The Top 500 project was started in 1993, and ever since then has produced a list of the most powerful computing devices twice a year to "provide a reliable basis for tracking and detecting trends in high-performance computing".

Assembled by the University of Mannheim in Germany, the Lawrence Berkeley National Laboratory in California and the University of Tennessee in Knoxville, it uses the LINPACK benchmark to decide which machine has the best performance.

Introduced by Jack Dongarra, the LINPACK benchmark tests the performance of a system by giving it a dense system of linear equations to solve and it is measured in floating point operations per second (flop/s) - as in, how many calculations per second it can perform. The higher the flop/s, the better the computer.
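To give a feel for what such a test involves, here is a minimal Python sketch in the spirit of LINPACK. It is not the real benchmark code: it solves a random dense system by Gaussian elimination with partial pivoting, times the solve, and divides a conventional operation count of roughly 2/3 n^3 + 2 n^2 by the elapsed time. The function names, the matrix size and the random test matrix are all assumptions made for illustration.

```python
import random
import time


def solve_dense(a, b):
    """Solve a*x = b by Gaussian elimination with partial pivoting.

    Works on copies, so the caller's matrix and vector are left untouched.
    """
    n = len(b)
    a = [row[:] for row in a]
    b = b[:]
    for k in range(n):
        # Partial pivoting: bring the largest entry in column k onto the diagonal.
        p = max(range(k, n), key=lambda i: abs(a[i][k]))
        a[k], a[p] = a[p], a[k]
        b[k], b[p] = b[p], b[k]
        for i in range(k + 1, n):
            m = a[i][k] / a[k][k]
            for j in range(k, n):
                a[i][j] -= m * a[k][j]
            b[i] -= m * b[k]
    # Back substitution on the resulting upper-triangular system.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(a[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (b[i] - s) / a[i][i]
    return x


def linpack_style_flops(n, seed=0):
    """Time one dense solve of size n; return (flop/s estimate, max residual)."""
    rng = random.Random(seed)
    a = [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(n)]
    b = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    start = time.perf_counter()
    x = solve_dense(a, b)
    elapsed = max(time.perf_counter() - start, 1e-9)
    # Assumed operation count for a dense n-by-n solve (not measured).
    operations = (2.0 / 3.0) * n**3 + 2.0 * n**2
    # Check the answer against the original system so a fast wrong result is visible.
    residual = max(
        abs(sum(a[i][j] * x[j] for j in range(n)) - b[i]) for i in range(n)
    )
    return operations / elapsed, residual


rate, residual = linpack_style_flops(100)
print(f"{rate / 1e6:.1f} megaflop/s, residual {residual:.2e}")
```

Pure Python will report a tiny fraction of what the same hardware manages with tuned numerical libraries, which hints at why compiler and software choices matter so much to a benchmark score.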

It is widely agreed that this isn't the best way to measure power. Not only does it measure only one aspect of the system, but two machines with the same processor can get different results: the performance recorded by the benchmark can be affected by such factors as the load on the system, the accuracy of the clock, compiler options, version of the compiler, size of cache, bandwidth from memory, amount of memory, and so on.

However, this is true of most benchmarks. The Top 500's focus on just one measure led President Obama's Council of Advisors on Science and Technology to argue that the US's position in supercomputing shouldn't be decided by this single metric.

In a report in December 2010 it stated: "While it would be imprudent to allow ourselves to fall significantly behind our peers with respect to scientific performance benchmarks that have demonstrable practical significance, a single-minded focus on maintaining clear superiority in terms of flops count is probably not in our national interest."

A new benchmark?

Dongarra agreed with the White House report, and is keen to start using another benchmark he originally designed for DARPA, called the High Performance Computing Challenge (HPCC). HPCC completes seven performance tests to measure multiple aspects of a system. As yet, it has not been adopted by the Top 500.

Another benchmark, the Graph 500 benchmark, has also been introduced by an international team led by Sandia National Laboratories. Its creators describe it as a complement to the one used by the Top 500 organisation, one that helps to rank performance on data-intensive applications.

However, because it produces just one number based on a single aspect of supercomputing, the Graph 500 may be open to the same criticisms the LINPACK benchmark is facing. The Top 500 project recognises the limitations of the single algorithm it uses, and does its best to ensure that people can't take advantage of them.

The website carries a disclaimer that the Top 500 list only looks at general purpose machines - ones that can handle a variety of scientific problems. So, for example, any system specifically designed to solve the LINPACK benchmark problem or have as its major purpose the goal of a high Top 500 ranking will be disqualified.

Despite these concerns, the Top 500 is generally accepted as a reliable guide to which supercomputer leads the world. But why are so many countries striving towards the crown of best supercomputer?

We spoke to the LINPACK and HPCC benchmark creator Jack Dongarra, who is currently a professor at the University of Tennessee's Department of Electrical Engineering and Computer Science and part of a group from the University of Tennessee and Oak Ridge National Laboratory, and asked him why supercomputers are so important and what they can offer the nations who possess them.

"Supercomputing capability benefits a broad range of industries, including energy, pharmaceutical, aircraft, automobile, entertainment and others," he explained. "More powerful computing capability will allow these diverse industries to more quickly engineer superior new products that could improve a nation's competitiveness. In addition, there are considerable flow-down benefits that will result from meeting the hardware and software high performance computing challenges. These would include enhancements to smaller computer systems and many types of consumer electronics, from smartphones to cameras."

Real world benefits

Image credit: NCCS.gov

Dongarra went on to explain the real world benefits of supercomputing in product design:

"Supercomputers enable simulation - that is, the numerical computations to understand and predict the behaviour of scientifically or technologically important systems - and therefore accelerate the pace of innovation. Simulation has already allowed Cummins to build better diesel engines faster and less expensively, Goodyear to design safer tires much more quickly, Boeing to build more fuel-efficient aircraft, and Procter & Gamble to create better materials for home products. Simulation also accelerates the progress of technologies from laboratory to application. Better computers allow better simulations and more confident predictions. The best machines today are 10,000 times faster than those of 15 years ago, and the techniques of simulation for science and national security have been improved."

Another person who believes in the need for supercomputing is Kevin Wohlever, director of supercomputing operations at Ohio Supercomputer Centre. He's been involved with supercomputing for almost 30 years, working for vendors like Cray Research, Inc. We asked him whether the China versus US supercomputer race is a matter of national pride, or if there's something more important at play.

"It is both a matter of pride and something deeper. If you look back 10-15 years, the same questions were being asked with the Japanese versus US supercomputer race. There is obvious national pride. There is vendor pride for the company that designs and builds the fastest computer. There are marketing benefits from being the company or country that has and provides the fastest computer in the world.

"There are also significant benefits from the proper applications running on top end computers," he adds. "Modelling the financial markets and making trades just that split second faster than someone else can make a lot of money for someone. There are also implications for everything from weather modelling, designing cars, planes, boats and trains, to finding new drugs, modelling chemical reactions and such. The right tools in the right hands can make a big difference in the level of security anyone can feel, whether that happens to be in their job or their life."

The third leg of science

Gerry McCartney, Vice President for Information Technology at Purdue University and CIO Oesterle Professor of Information Technology, also agrees that there needs to be more emphasis on developing supercomputers, and not just to be able to say you are the best:

"I would say - and I'm not alone - that high-performance computing, computational modelling and simulation is now the 'third leg' of science and engineering, alongside the traditional legs of theory and experimentation. So having inferior computing is exactly like having inferior labs or other research tools. It has a direct relation to the amount, quality and speed of developments, both basic science and technological developments, which advance knowledge, and science and technological developments that drive economic growth. There's a lot more at stake than bragging rights."

With this in mind we asked McCartney, who oversees the biggest campus supercomputer in the world, how important he felt supercomputing is in the political sphere today. "Probably not important enough," he told us. "In the US, as well as the UK, it is facing the same problem as science, medical and other types of research generally. The economy is down, budgets are tight nationally and, in the US, at the state level. We're looking for ways to spend less, not places to spend more. Certainly, we should find ways to leverage efficiencies and spend money wisely. But we need to think long term in doing so. Developments today, like atomic-scale silicon wires, may not pay off big for a decade. But they will never pay off if you don't have the tools to begin."

However, despite these monetary constraints, the US is not standing still. IBM and Cray are already developing 20 petaflop machines, and despite President Obama's Council of Advisors on Science and Technology stating in December 2010 that, "Engaging in a [supercomputing] 'arms race' could be very costly, and could divert resources away from basic research," America's 2012 budget has earmarked $126 million for the development of exascale supercomputing, the next major milestone in supercomputer research.

This compares with the mere $24 million set out specifically for supercomputing in the previous budget. However, it's positively dwarfed by Japan's plans to invest up to $1.3 billion in developing its own exascale computer by 2020.

Exascale computing

Exascale computing is a big dream in the supercomputing world - producing a machine capable of an exaflop (1,000 petaflops), roughly 100 times the processing power of the fastest supercomputer currently operational. Most pundits are predicting the breakthrough by the end of the decade, but there are various real world constraints that need to be overcome first.

Technically there is nothing stopping someone assembling the hardware to create an exascale computer today. The problems are how much power it would consume, its mammoth size, and the heat it would produce. Even today's high-performance computers would melt in minutes if you took away the cooling fans used in their ice-cold bunkers, due to the heat produced by their operation.

It seems China's new home-grown Sunway BlueLight MPP computer is leading the way in energy efficiency. According to its released specifications its power requirement is one megawatt. In comparison, its predecessor, the Tianhe, built using US technology, consumes four megawatts when running, and America's Jaguar around seven megawatts.

That's a considerable saving in both power and running costs for a machine that's about 74 per cent as fast as the Jaguar, especially when you consider that each megawatt translates to about a million dollars a year in electricity costs alone. Running Japan's K computer at its June peak of 8.162 petaflops took 9.89 megawatts. That's roughly $10 million a year.
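The running-cost comparison is easy to reproduce. The Python sketch below applies the rule of thumb quoted above (about a million dollars per megawatt per year) to the power figures given in this article; the function names are illustrative, and the dollar rate is the article's approximation rather than a measured tariff.

```python
# Rule of thumb from the text: roughly $1 million per megawatt per year.
DOLLARS_PER_MEGAWATT_YEAR = 1_000_000


def annual_power_cost(megawatts):
    """Approximate yearly electricity bill in dollars for a given power draw."""
    return megawatts * DOLLARS_PER_MEGAWATT_YEAR


def megaflops_per_watt(petaflops, megawatts):
    """Energy efficiency: benchmark megaflops delivered per watt consumed."""
    flops_per_watt = (petaflops * 1e15) / (megawatts * 1e6)
    return flops_per_watt / 1e6


# Power draw in megawatts, as quoted in the article.
power_draw = {
    "Sunway BlueLight MPP": 1.0,
    "Tianhe-1A": 4.0,
    "Jaguar": 7.0,
    "K computer (June 2011)": 9.89,
}

for name, megawatts in power_draw.items():
    print(f"{name}: about ${annual_power_cost(megawatts):,.0f} a year")

# The K computer's June 2011 run: 8.162 petaflops at 9.89 megawatts.
k_efficiency = megaflops_per_watt(8.162, 9.89)
print(f"K computer: about {k_efficiency:.0f} megaflops per watt")
```

Dividing performance by power, rather than looking at either figure alone, is what lets machines of very different sizes be compared on efficiency.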

The leap to exascale may seem a big one to make by 2020, but it will almost definitely be needed to wade through the amount of data that will be stored by then. Eight years ago there were only about five exabytes of data online. In 2009, that much data was going through the internet in a month.

Currently, the estimates stand at about 21 exabytes a month. That's not to mention all the sources of data stored elsewhere, all ripe for analysis - loyalty cards in supermarkets, CCTV footage, credit card data, information from weather tracking satellites, tidal flows, river drainage and star mapping, to name but a few - nowadays, data is being generated and stored everywhere, producing endless seas of statistics to wade through.

Supercomputers can, and do, turn these swathes of data into useful information, analysing trends, comparing data sets, finessing product design, producing hyper-realistic simulations of systems. Exascale supercomputing will allow us to analyse our world as never before.

Looking at current research, it seems there may be many paths to reach the exascale dream, and some of them are being explored already. To find better ways to achieve better data speeds or more energy efficient supercomputers, research centres are starting to build their supercomputers out of more unusual components.

For example, the San Diego Supercomputer Centre (SDSC) has built a supercomputer that uses Flash storage (specifically 1,024 Intel 710 series drives), allowing it to access data up to 10 times faster than with traditional spinning hard drives. At its unveiling in January, Michael Norman, the director of the SDSC, said: "It has 300 trillion bytes of flash memory, making it the largest thumb drive in the world."

It's an impressively powerful thumb drive though, ranking 48th on the Top500. In case you're wondering, the computer is called Gordon. Yes that's right, Flash Gordon.

Alternative supercomputers

If you can build a supercomputer from solid-state drives, why not from mobile phone chips? By using low-powered Tegra CPUs, typically found powering mobile phones and tablets, the Barcelona Supercomputer Centre in Spain hopes to reduce the amount of electricity needed to run its new supercomputer.

There's a deal with Nvidia in the offing, and it hopes to produce a high-performance computer by 2014 that runs with a 4-10 fold improvement in energy efficiency over today's most energy-efficient supercomputers.

Or how about building a cloud-based virtual supercomputer from the servers you have lying around idle - servers that could be a supercomputer today, and something else tomorrow? A supercomputer for hire, if you will.

Amazon did just that in the autumn of 2011, building a virtual supercomputer out of its Elastic Compute Cloud, the worldwide network of data centres it uses to provide cloud computing power to other businesses across the world. Amazon's global infrastructure is so vast that it could run a virtual supercomputer - one fast enough to be placed as the 42nd most powerful supercomputer in the world - without affecting any of the businesses using its data centres for their own means.

Nowadays you don't need to physically build a supercomputer, you just need to hire enough virtual servers to get the compute power you need to run your project, and for a fraction of the price it would cost to build your own.

This form of distributed supercomputing is something the Chinese could easily reproduce, and if they were to connect all their different supercomputing complexes together and then run them as a virtual supercomputer, they could easily outpace anything the US could produce by a factor of 100.

The future of supercomputing

However, these are just ways of employing technologies that already exist in a commercial form. The truly exciting research is based on technologies only just being tested in the lab.

It's in the development sphere that various technology buzzwords and phrases are bandied about, all of which could lay the foundation for the next generation of supercomputing. These include optical information processing, atomic-scale silicon wires and quantum computing.

Optical processing

Purdue University alone is working on two of these technologies. The first concerns optical information processing - where light instead of electricity is used to transmit signals, much like in a fibre optic cable. However, although fibre optic cables can transmit a lot of data, the need to translate the data back into electronic signals at either end of the cable slows down the data transmission considerably (and opens the data up to cyberattack).

The optical processing dream is for the information carried by the light to be transmitted directly into the computer, and Purdue thinks they may have found a way to do just that. A team based there has succeeded in making a 'passive optical diode' that transmits optical signals in only one direction.

As associate professor of electrical and computer engineering Minghao Qi explains: "This one-way transmission is the most fundamental part of a logic circuit, so our diodes open the door to optical information processing."

Made from two tiny silicon rings measuring 10 microns in diameter (about one-tenth the width of a human hair), they are small enough to fit millions of them on a computer chip. As well as speeding up and securing fibre optic data transmission, these optical diodes could speed up supercomputers if they were used to connect the processors inside the computer.

As Graduate Research Assistant Leo Varghese points out, "The major factor limiting supercomputers today is the speed and bandwidth of communication between the individual superchips in the system. Our optical diode may be a component in optical interconnect systems that could eliminate such a bottleneck."

Another breakthrough made by Purdue University, in conjunction with the University of New South Wales and Melbourne University, is the smallest wire ever developed in silicon. By inserting a string of phosphorus atoms in a silicon crystal, the research teams created a silicon wire one atom high and four atoms wide, with the same current-carrying capability as copper wires. This could pave the way to the creation of nanoscale computational devices and make quantum computing a reality.

With size being such an issue in the world of supercomputing, this research shows what could be the ultimate limit of downscaling using silicon, leading to the smallest possible silicon-based supercomputers.

Going too fast?

With all these new technologies being employed, are we already chasing computing power for the sake of just being the best rather than thinking of practical applications?

We asked Purdue University's Gerry McCartney if supercomputing is going too fast for people to keep up with. "You have to have people who know how to build, operate and maintain these systems and, more importantly, how to work with researchers to make it as easy as possible for them to get their research done using these tools," he answered.

"There's no surplus of qualified people. They're in great demand. As a university supercomputing centre, we help teach students by involving them as student employees with important roles. These students end up with multiple job choices in the industry. But our motives aren't entirely altruistic. We're able to attract some of them to our expert professional staff after they graduate."

We asked Kevin Wohlever of the Ohio Supercomputer Centre if there are problems with training people to know how to use these emerging computing technologies. He said it's more a problem with educating companies on what's available.

"I think that supercomputing is going too fast for some industries and companies to keep up with. The rate of change in technology overall is very fast. Very good and smart people are able to look at the new technology and take advantage of it to solve today's problems. I think that the issue is that acceptance and use of the new technology by the companies and people higher up in the organisations, or that have a nice profit stream from current technology, suppresses the use or widespread adoption of new technology. We do need to get some better standards in place to adopt new technology faster. I think we need to show that this is an exciting field to get into. It may not have the financial benefits of some technology or social technology companies, but there is still exciting work to be done."

This excitement is clear throughout the supercomputing industry, and whatever the politics, it's clear that supercomputers are more and more necessary in our world of high-speed calculation. If the western world were to lose its dominance of supercomputing, it would most likely fall behind in many other areas of science.

However, this seems an unlikely occurrence - the race is on, and it's likely to be never-ending. When the prize is just to be the fastest computer in the world, there's always another contender just around the corner.

The true benefits of this continued race will be seen by the consumers, as these supercomputing leviathans pave the way for the computer technologies that we'll find in our homes, cameras, tablets and phones a mere few years later, not to mention the scientific breakthroughs that faster computing speeds will make possible.

It's unlikely that any government will fail to recognise the importance of supercomputing, and even if that is just due to political reasons of pride and competition between nations, technology will still be the winner.