Google's most powerful supercomputer ever has a combined memory of 1.77PB - apparently a new world record for shared memory multi-CPU setups

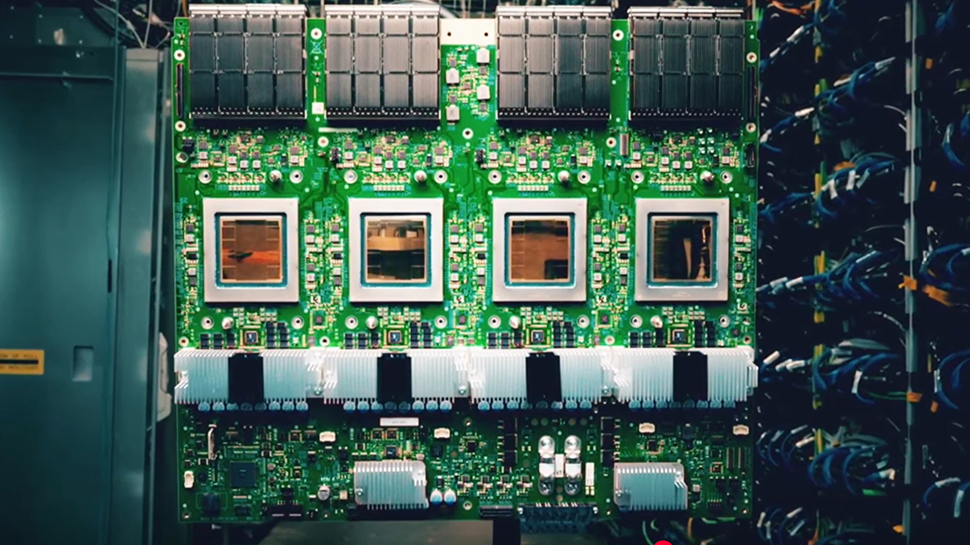

Ironwood TPU is already deployed in Google Cloud data centers

- Google’s Ironwood TPU scales to 9,216 chips with a record 1.77PB of shared memory

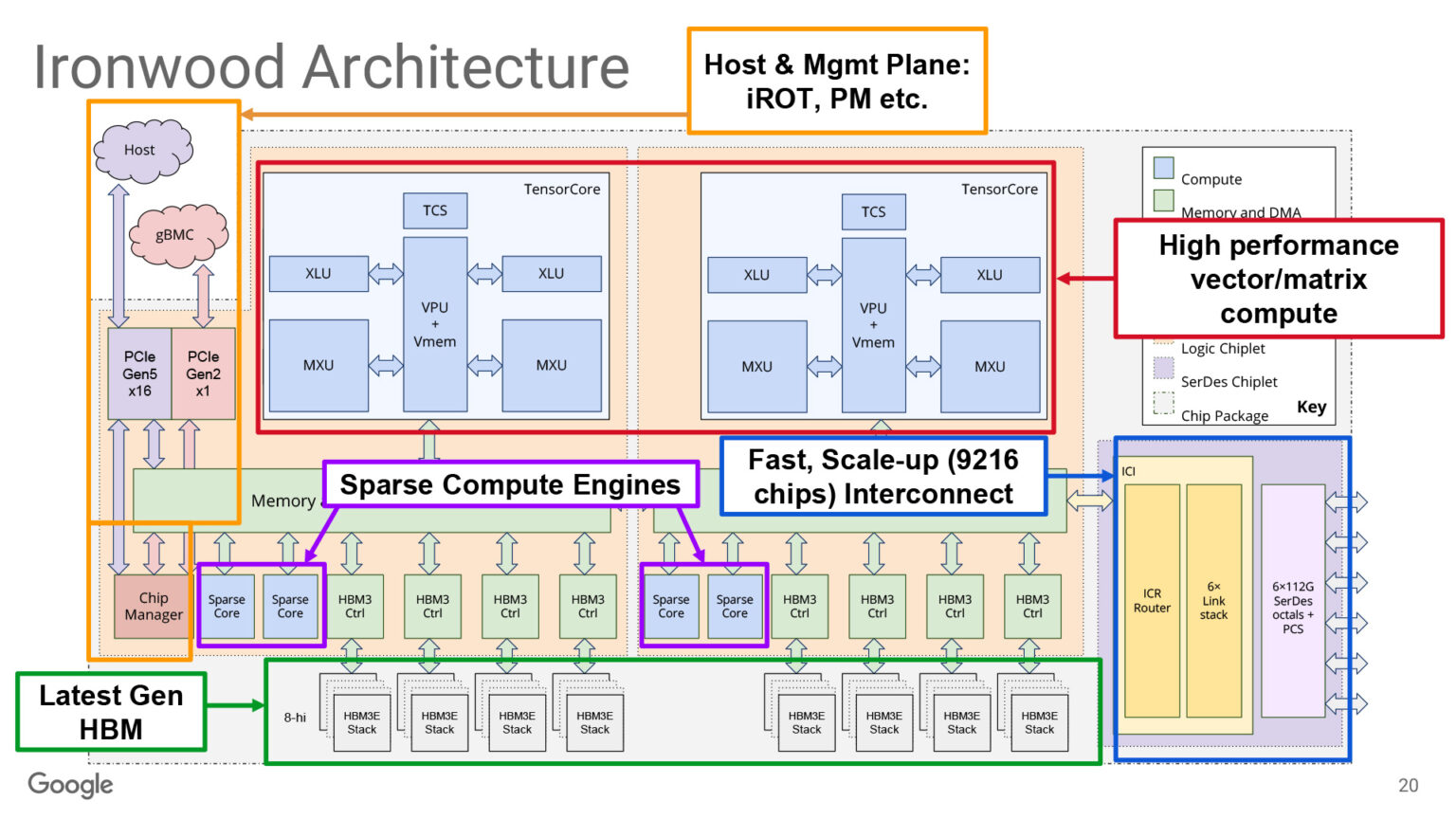

- Dual-die architecture delivers 4,614 TFLOPS of FP8 compute and 192GB of HBM3e per chip

- Enhanced reliability, cooling, and AI-assisted design features enable efficient inference workloads at scale

Google closed out the machine learning sessions at the recent Hot Chips 2025 event with a detailed look at its newest tensor processing unit, Ironwood.

The chip, which was first revealed at Google Cloud Next 25 in April 2025, is the company’s first TPU designed primarily for large-scale inference rather than training, and it is the seventh generation of Google’s TPU hardware.

Each Ironwood chip integrates two compute dies delivering 4,614 TFLOPS of FP8 performance, while eight stacks of HBM3e provide 192GB of memory per chip with 7.3TB/s of bandwidth.

1.77PB of HBM

Google has built in 1.2TB/s of I/O bandwidth, allowing a system to scale to 9,216 chips per pod without glue logic. At that size, a pod reaches a whopping 42.5 exaflops of peak FP8 performance.
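The 42.5 exaflops figure is simply the per-chip peak multiplied by the number of chips in a full pod. The short Python check below reproduces it from the article’s own numbers; it is a peak figure, and the helper name `pod_exaflops` is just for illustration.

```python
# Figures quoted in the article
PER_CHIP_FP8_TFLOPS = 4_614   # peak FP8 throughput per Ironwood chip
CHIPS_PER_POD = 9_216         # maximum chips in one pod

def pod_exaflops(per_chip_tflops: float, chips: int) -> float:
    """Peak pod throughput in exaFLOPS (1 EFLOPS = 1,000,000 TFLOPS)."""
    return per_chip_tflops * chips / 1_000_000

total = pod_exaflops(PER_CHIP_FP8_TFLOPS, CHIPS_PER_POD)
print(f"{total:.1f} EFLOPS FP8")  # 42.5 EFLOPS FP8
```

Sustained throughput on real inference workloads will be lower than this theoretical peak.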

Memory capacity also scales impressively. Across a pod, Ironwood offers 1.77PB of directly addressable HBM. That sets a new record for shared-memory supercomputers and is made possible by optical circuit switches linking the racks together.
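The pod-wide memory total follows the same arithmetic: 192GB per chip across 9,216 chips. The sketch below also derives the capacity of each of the eight HBM3e stacks. It treats 1PB as 1,000,000GB, which is the convention that reproduces the quoted 1.77PB; that unit choice is an assumption, and `pod_hbm_pb` is a hypothetical helper name.

```python
HBM_PER_CHIP_GB = 192     # per-chip HBM3e capacity quoted in the article
HBM_STACKS_PER_CHIP = 8   # eight HBM3e stacks per chip
CHIPS_PER_POD = 9_216     # maximum chips in one pod

def pod_hbm_pb(per_chip_gb: float, chips: int) -> float:
    """Total pod HBM in petabytes, using decimal units (1 PB = 1,000,000 GB)."""
    return per_chip_gb * chips / 1_000_000

per_stack_gb = HBM_PER_CHIP_GB / HBM_STACKS_PER_CHIP
print(f"{per_stack_gb:.0f} GB per HBM3e stack")                        # 24 GB per HBM3e stack
print(f"{pod_hbm_pb(HBM_PER_CHIP_GB, CHIPS_PER_POD):.2f} PB per pod")  # 1.77 PB per pod
```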

The system can reconfigure around failed nodes and restore workloads from checkpoints.
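Google did not describe the failover mechanism in detail. Purely as a generic illustration of checkpoint/restart, the toy Python simulation below saves progress at a fixed interval and, when a node fails, swaps in a spare (standing in for optical-switch reconfiguration) and rolls back to the last checkpoint. The `Job` class, `run_with_failover` function, node names, and checkpoint interval are all hypothetical and are not Google’s implementation.

```python
from dataclasses import dataclass

@dataclass
class Job:
    total_steps: int       # work units the job must complete
    checkpoint_every: int  # hypothetical checkpoint interval, in steps
    step: int = 0          # current progress
    saved_step: int = 0    # progress captured by the last checkpoint

def run_with_failover(job: Job, nodes: list[str], spares: list[str],
                      failures: dict[int, str]) -> int:
    """Toy checkpoint/restart loop (hypothetical, not Google's mechanism).

    failures maps a step number to the node that fails when that step is reached.
    Returns the number of steps actually executed, including redone work.
    """
    executed = 0
    pending = dict(failures)
    while job.step < job.total_steps:
        failed = pending.pop(job.step, None)
        if failed is not None:
            # Swap the failed node for a spare, then roll back to the last checkpoint
            nodes[nodes.index(failed)] = spares.pop()
            job.step = job.saved_step
            continue
        job.step += 1
        executed += 1
        if job.step % job.checkpoint_every == 0:
            job.saved_step = job.step  # take a checkpoint

    return executed

job = Job(total_steps=100, checkpoint_every=10)
slice_nodes = ["tpu0", "tpu1", "tpu2", "tpu3"]
steps = run_with_failover(job, slice_nodes, spares=["spare0"], failures={25: "tpu2"})
print(steps)        # 105: 25 steps, roll back to step 20, then 80 more
print(slice_nodes)  # ['tpu0', 'tpu1', 'spare0', 'tpu3']
```

The trade-off the simulation exposes is the checkpoint interval: checkpointing more often means less redone work after a failure, at the cost of more time spent saving state.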

The chip integrates multiple features aimed at stability and resilience. These include an on-chip root of trust, built-in self-test functions, and measures to mitigate silent data corruption.


Logic repair functions are included to improve manufacturing yield. The emphasis on RAS (reliability, availability, and serviceability) runs throughout the architecture.

Cooling is handled by cold plates supported by Google’s third-generation liquid cooling infrastructure.

Google claims a twofold improvement in performance per watt over Trillium, and dynamic voltage and frequency scaling (DVFS) further improves efficiency across varied workloads.
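Why voltage and frequency scaling saves power follows from a general rule of CMOS design rather than any Ironwood-specific figure: dynamic power grows with the square of the supply voltage and linearly with clock frequency, where α is the switching activity factor and C the switched capacitance. Because a lower clock usually permits a lower voltage, a hypothetical 10% cut in both would reduce dynamic power by roughly 27%:

```latex
\begin{aligned}
P_{\text{dyn}} &\approx \alpha \, C \, V^{2} f \\
\frac{P'_{\text{dyn}}}{P_{\text{dyn}}} &= \left(\frac{0.9\,V}{V}\right)^{2} \cdot \frac{0.9\,f}{f} = 0.9^{3} = 0.729
\end{aligned}
```

Google has not published Ironwood’s voltage or frequency ranges; the 10% figures are purely illustrative.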

Google also applied AI techniques to the design of Ironwood itself, using them to help optimize the ALU circuits and the floor plan.

A fourth-generation SparseCore has been added to accelerate embeddings and collective operations, supporting workloads such as recommendation engines.
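Embedding lookups are a good example of the sparse, memory-bound work SparseCore targets: a recommendation model maps each sparse feature ID to a row of a large table and pools the rows it gathers. The pure-Python sketch below shows that gather-and-sum ("embedding bag") pattern on a tiny hypothetical table; it illustrates the workload only and says nothing about how SparseCore itself is programmed.

```python
from typing import Sequence

def embedding_bag_sum(table: Sequence[Sequence[float]], ids: Sequence[int]) -> list[float]:
    """Gather the rows named by `ids` and sum them element-wise."""
    dim = len(table[0])
    pooled = [0.0] * dim
    for i in ids:
        row = table[i]  # irregular, data-dependent memory access
        for d in range(dim):
            pooled[d] += row[d]
    return pooled

# Hypothetical 4-row table of 3-dimensional embeddings
table = [
    [0.1, 0.2, 0.3],
    [1.0, 0.0, -1.0],
    [0.5, 0.5, 0.5],
    [2.0, 1.0, 0.0],
]
user_history = [1, 3, 3]  # hypothetical sparse feature IDs, e.g. items a user clicked
print(embedding_bag_sum(table, user_history))  # [5.0, 2.0, -1.0]
```

Real recommendation tables hold millions of rows, so the cost is dominated by scattered memory reads rather than arithmetic, which is why dedicated hardware for this pattern pays off.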

Deployment is already underway at hyperscale in Google Cloud data centers, although the TPU remains an internal platform not available directly to customers.

Commenting on the session at Hot Chips 2025, ServeTheHome’s Ryan Smith said, “This was an awesome presentation. Google saw the need to create high-end AI compute many generations ago. Now the company is innovating at every level from the chips, to the interconnects, and to the physical infrastructure. Even as the last Hot Chips 2025 presentation this had the audience glued to the stage at what Google was showing.”


Wayne Williams is a freelancer writing news for TechRadar Pro. He has been writing about computers, technology, and the web for 30 years. In that time he wrote for most of the UK’s PC magazines, and launched, edited and published a number of them too.
