What is Google Duplex? The world's most lifelike chatbot explained

The Google Assistant AI won’t enslave us, but it might help us fix our hair

Update: Google Duplex has finally started rolling out, but so far only to a small number of Pixel users in select cities.

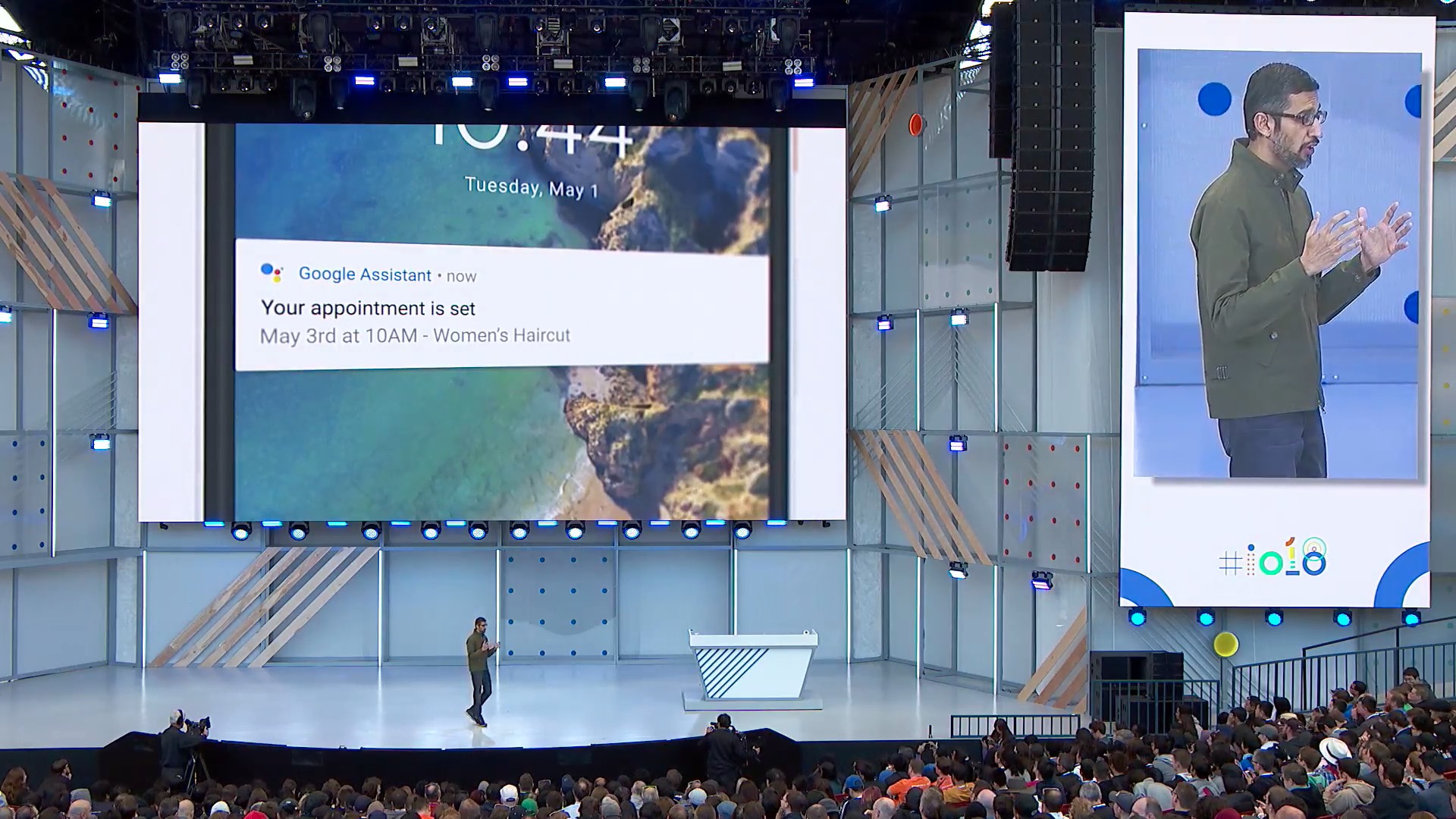

Google announced all kinds of goodies at this year’s Google I/O, and one of the most interesting was Google Duplex.

It’s an artificial intelligence agent that can make phone calls for you – and we don’t mean dialing the number. We mean it has actual conversations with real-life people.

If you haven’t already seen the demo, we recommend watching it, because it's amazing: skip to 1 hour 55 minutes into the video of Google's keynote.

Now, we know that keynote videos are the Instagram photos of the tech world: what you see is highly polished, carefully selected and often vastly different to what you can expect in reality... but Duplex could be really useful.

What is it?

Google Duplex isn’t designed to replace humans altogether. It’s designed to carry out very specific tasks in what Google calls "closed domains". So for example you wouldn’t ask Google Duplex to call your mum, but you might ask it to book a table at a restaurant.

What can it do?

Initially Google Duplex will focus on three kinds of task: making restaurant reservations, scheduling hair appointments and finding out businesses’ holiday opening hours.


Why does it sound like a human?

Partly because Google reckons it’s the most efficient way to get the information, especially if there are variables and interruptions, and partly because if you got a phone call from the Terminator you’d probably hang up.

How does it work?

The full details are over at the Google AI Blog, but here’s the executive summary: Google Duplex enables you to get information that isn’t on the internet.

Google Duplex is the missing link between the Google Assistant and any business, because it enables the Assistant to get information that isn’t available digitally. For example, you might want to know a business’s holiday opening hours but the business hasn’t listed them on its website, or you might want to know if a shop has a particular item in stock when it doesn’t publish stock availability online.

So Duplex does what you’d do. It phones up and asks for the information it needs.

From a tech perspective, Google Duplex uses a recurrent neural network (RNN) built using TensorFlow Extended (TFX). What RNNs like the one powering Duplex can do is process sequential, contextual information, which makes them well suited to tasks such as language modelling and speech recognition.
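To make “sequential, contextual information” a little more concrete, here’s a toy sketch of the basic recurrence in a simple (Elman-style) RNN, written in plain Python. It’s a generic textbook cell with made-up scalar weights (`w_h`, `w_x` and `b` are illustrative assumptions), not Duplex’s actual network, which is far larger and more complex. The point is that the hidden state carries context forward, so the same input can produce a different result depending on what came before it.

```python
import math

def rnn_step(h_prev, x, w_h=0.5, w_x=1.0, b=0.0):
    """One step of a toy scalar RNN: h_t = tanh(w_h * h_{t-1} + w_x * x_t + b).
    The weights are illustrative assumptions, not learned values."""
    return math.tanh(w_h * h_prev + w_x * x + b)

def run_rnn(inputs, h0=0.0):
    """Feed a sequence through the cell one element at a time,
    returning the hidden state after each step."""
    states = []
    h = h0
    for x in inputs:
        h = rnn_step(h, x)  # the new state depends on the old state AND the new input
        states.append(h)
    return states

# The last input is 0.0 in both sequences, but the final states differ
# because the earlier input (the "context") differs.
with_positive_context = run_rnn([1.0, 0.0])
with_negative_context = run_rnn([-1.0, 0.0])
print(with_positive_context[-1], with_negative_context[-1])
```

A real speech or language model works on vectors rather than single numbers and learns its weights from data, but the same idea of updating a running state step by step is what lets an RNN keep track of where a conversation has got to.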

When you make a request, the Google Assistant will hand it over to Google Duplex to carry out; if it’s within Duplex’s abilities it’ll get on with it. If it isn’t, it will either tell the Assistant it can’t do it or refer the job to a human operator.
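Google hasn’t published how the Assistant decides what to hand over, so the snippet below is only a hypothetical sketch of the three outcomes just described: Duplex takes tasks inside its closed domain, and anything else is either declined back to the Assistant or passed to a human operator. The task names, the `Outcome` enum and the `can_escalate` flag are all invented for illustration.

```python
from enum import Enum

# Hypothetical task names, based on the three launch tasks described in this article.
SUPPORTED_TASKS = {"restaurant_reservation", "hair_appointment", "holiday_hours"}

class Outcome(Enum):
    HANDLED_BY_DUPLEX = "duplex"  # Duplex gets on with the job
    DECLINED = "declined"         # Duplex tells the Assistant it can't do it
    HUMAN_OPERATOR = "human"      # the job is referred to a human operator

def route_request(task, can_escalate):
    """Hypothetical routing rule: accept tasks in the closed domain,
    otherwise escalate to a human if possible, else decline."""
    if task in SUPPORTED_TASKS:
        return Outcome.HANDLED_BY_DUPLEX
    if can_escalate:
        return Outcome.HUMAN_OPERATOR
    return Outcome.DECLINED

print(route_request("restaurant_reservation", can_escalate=False))  # Outcome.HANDLED_BY_DUPLEX
print(route_request("call_mum", can_escalate=False))                # Outcome.DECLINED
```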

What’s so clever about it?

Duplex talks like a normal person, and that makes it a natural – and natural-sounding – extension to the OK Google functionality we already know. Let’s stick with our restaurant example.

With Duplex, you could say “OK Google, find me a table for Friday night” and the Google app would then call restaurants on your behalf. Not only that, but it would have conversations – so if you wanted a table for around 7:30 but there wasn’t one, it could ask what times were available and decide whether those times fit your criteria. If not, the Google app would call another restaurant. Similarly, if you wanted to arrange a meeting with Sarah, the Google app could call Sarah (or Sarah’s AI) to talk through the available time slots and agree which one would be best.
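The restaurant scenario is essentially a search with a fallback: ask about a preferred time, accept a nearby alternative if one is close enough, and otherwise move on to the next restaurant. Here’s a hypothetical sketch of that decision logic, with each phone conversation replaced by a list of available times. The restaurant names, the 30-minute tolerance and the function names are assumptions for illustration, not a description of how Duplex is actually implemented.

```python
from datetime import time

def minutes(t):
    """Convert a time of day to minutes since midnight."""
    return t.hour * 60 + t.minute

def pick_slot(available, preferred, tolerance_min=30):
    """Return the available time closest to `preferred` within the tolerance, or None."""
    gap = lambda t: abs(minutes(t) - minutes(preferred))
    candidates = [t for t in available if gap(t) <= tolerance_min]
    return min(candidates, key=gap) if candidates else None

def find_table(restaurants, preferred, tolerance_min=30):
    """Try each restaurant in turn (standing in for one phone call each)
    until one offers a suitable time; return (name, time) or None."""
    for name, available in restaurants.items():
        slot = pick_slot(available, preferred, tolerance_min)
        if slot is not None:
            return name, slot
    return None

# Hypothetical availability that each "call" would reveal.
restaurants = {
    "Trattoria A": [time(18, 0), time(21, 30)],  # nothing near 7:30, so move on
    "Bistro B": [time(19, 0), time(20, 15)],     # 7:00 is within 30 minutes
}
print(find_table(restaurants, time(19, 30)))  # returns ('Bistro B', time(19, 0))
```

The tolerance is the “fits your criteria” part of the example above; a real assistant would weigh far more than a single number, such as party size, location or your calendar.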

The key here is that this is all happening in the background. You tell Google to do something and it goes and does it, only reporting back after the task is complete.

Isn’t it creepy?

It is a bit, and it’s prompted some discussions online already: should AIs tell us that they’re AIs when they phone us up?

Google’s answer is yes: the company has since confirmed that Google Duplex will identify itself at the start of a call, so while it might sound human, you won't be in any doubt that you're talking to a machine.

Who’s it for?

The benefits for people with hearing difficulties are obvious, but it can also overcome language barriers: you might not know the local language, but Google Assistant does – so it can converse in a language you don’t speak.

And it can be asynchronous, so you can make the request and then go offline while Google Duplex gets on with the job: it will report back when you’re online again. That’s useful in areas of patchy connectivity, or if you’re just really, really busy.

What’s it capable of?

That’s a very good question. Right now, our personal digital assistants are more about the digital than the assistance: you can ask them to turn up the lights or tune the radio, but they can’t book your car in for a service, make a dental appointment or handle any of the many other bits of tedious admin we spend so much time on. Imagine the time we’d save if we didn’t have to spend hours on the phone to change the tiniest detail in a form, answer a simple customer service question or find out why our broadband is on the blink again.

Or, you know, it could go bad and refuse to open the pod bay doors.

2001 jokes aside, we can easily imagine Duplex-style agents tricking us into answering the kind of robocalls we currently hang up on. And we can also imagine scams using this kind of technology to try to get your online banking details.

Duplex – and artificial intelligence generally – is a good example of how technology is morally neutral, neither good nor bad. As Microsoft also showed us this week, there’s enormous potential to do good.

But if it’s possible to misuse this tech, someone will find a way to do it.

When can I get Google Duplex?

Google has started rolling Duplex out to a small number of Pixel owners in "select cities." The company hasn't confirmed which cities, but it's likely to be New York, Atlanta, Phoenix, and San Francisco, as those cities are where Google Duplex was previously trialed.

For now, Google Duplex only works for restaurant reservations, but expect the other features and a wider rollout to follow eventually.

Contributor

Writer, broadcaster, musician and kitchen gadget obsessive Carrie Marshall has been writing about tech since 1998, contributing sage advice and odd opinions to all kinds of magazines and websites as well as writing more than twenty books. Her latest, a love letter to music titled Small Town Joy, is on sale now. She is the singer in spectacularly obscure Glaswegian rock band Unquiet Mind.