HPE is building a rapid AI supercomputer powered by the world's largest chip

New AI supercomputer will be built around a wafer-sized processor with 850,000 cores

Hewlett Packard Enterprise (HPE) has announced it is building a powerful new AI supercomputer in collaboration with Cerebras Systems, maker of the world’s largest chip.

The new system will be made up of a combination of HPE Superdome Flex servers and Cerebras CS-2 accelerators, which are powered by the monstrous Wafer-Scale Engine 2 (WSE-2) processor.

The as-yet-unnamed supercomputer is expected to go live later this summer at the Leibniz Supercomputing Center (LRZ) in Bavaria, giving researchers a new resource to accelerate projects on topics ranging from medical imaging to aerospace engineering.

New AI supercomputer

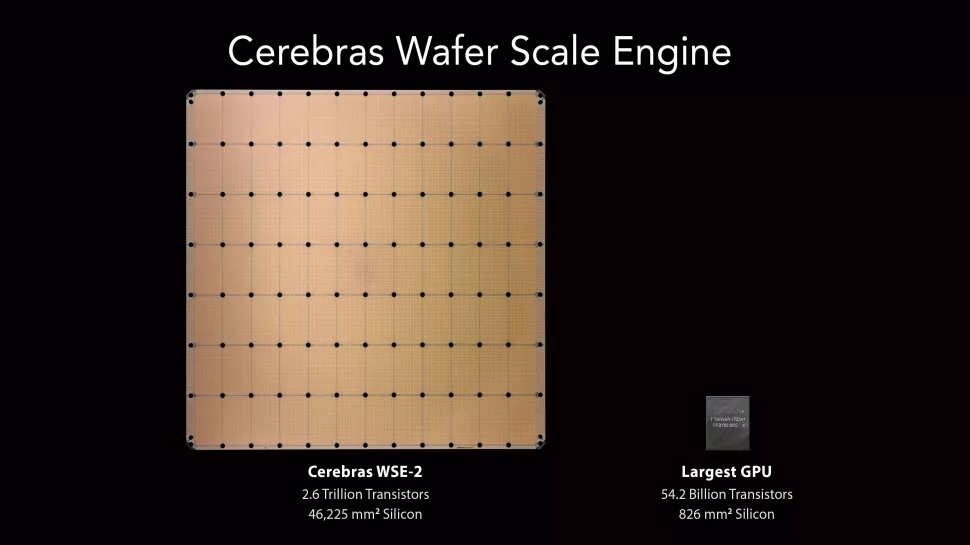

Unveiled by Cerebras in April last year, the WSE-2 is designed expressly to accelerate AI training and inference workloads. The chip houses a staggering 2.6 trillion transistors and 850,000 AI cores spread across 46,225 mm² of silicon, reportedly delivering the AI performance of hundreds of GPUs.

The wafer-sized chip also boasts 40GB of on-chip memory and 20PB/s of memory bandwidth, which allows for all parameters of large-scale AI models to be held on-chip at the same time, speeding up computation.
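For a rough sense of scale, the quoted figures can be turned into a back-of-the-envelope calculation. The sketch below is illustrative only: it assumes decimal units (1GB = 10^9 bytes), counts model weights alone (no activations, gradients or optimizer state), and the 2-byte and 4-byte parameter sizes are assumptions rather than figures from Cerebras or HPE. The function names are hypothetical.

```python
# Back-of-the-envelope arithmetic using the figures quoted in the article.
# Assumptions (not from the article): decimal units, weights only.
ON_CHIP_MEMORY_BYTES = 40 * 10**9          # 40GB of on-chip memory
MEMORY_BANDWIDTH_BYTES_PER_S = 20 * 10**15  # 20PB/s of memory bandwidth
AI_CORES = 850_000                          # number of AI cores


def max_parameters(bytes_per_parameter: int) -> int:
    """Upper bound on parameters that fit on-chip at a given precision."""
    return ON_CHIP_MEMORY_BYTES // bytes_per_parameter


def memory_per_core_bytes() -> float:
    """Average on-chip memory per core if it were divided evenly."""
    return ON_CHIP_MEMORY_BYTES / AI_CORES


def full_sweep_seconds() -> float:
    """Time to read the entire on-chip memory once at the quoted bandwidth."""
    return ON_CHIP_MEMORY_BYTES / MEMORY_BANDWIDTH_BYTES_PER_S


if __name__ == "__main__":
    print(f"16-bit parameters: {max_parameters(2):,}")
    print(f"32-bit parameters: {max_parameters(4):,}")
    print(f"Memory per core: {memory_per_core_bytes() / 1000:.1f} KB")
    print(f"Full memory sweep: {full_sweep_seconds() * 1e6:.1f} microseconds")
```

Under these assumptions, the ceiling is around 20 billion 16-bit parameters, and the whole memory could in principle be read in about two microseconds. Real workloads would fit fewer parameters, since training also needs room for activations and optimizer state.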

The launch of the new system in Germany will mark the first time the WSE-2 chip has been deployed inside a European supercomputer.

"We founded Cerebras to revolutionize compute," explained Andrew Feldman, CEO and Co-Founder of Cerebras Systems. "We’re proud to partner with LRZ and HPE to give Bavaria’s researchers access to blazing fast AI, enabling them to try new hypotheses, train large language models and ultimately advance scientific discovery."

The arrival of the new system has also been welcomed by researchers at the LRZ, who say the machine will significantly increase the speed at which they can run important AI and general-purpose HPC workloads.

“Currently, we observe that AI compute demand is doubling every three to four months with our users,” said Prof. Dr. Dieter Kranzlmüller, Director of the LRZ.

“With the high integration of processors, memory and on-board networks on a single chip, Cerebras enables high performance and speed. This promises significantly more efficiency in data processing and thus faster breakthrough of scientific findings.”

Joel Khalili is the News and Features Editor at TechRadar Pro, covering cybersecurity, data privacy, cloud, AI, blockchain, internet infrastructure, 5G, data storage and computing. He's responsible for curating our news content, as well as commissioning and producing features on the technologies that are transforming the way the world does business.