Sandy Bridge and AMD Fusion: hybrid chips explained

The CPU/GPU hybrid chip has come of age

There are three big advantages to hybrids. The first is that they're self-evidently very cost effective to manufacture compared to separate chips for the CPU and graphics and all the circuitry between.

CORE I3: The first Sandy Bridge CPUs have an Intel HD 2000 performing the graphical grunt - or lack thereof

For similar reasons, they also require less power - there are fewer components and interconnects that need a steady flow of electrons, and even on the chip die itself Sandy Bridge and Fusion allow more sharing of resources than last year's Pine Trail and Arrandale forebears, which put graphics and CPU on two separate cores in the same package.

Finally, slapping everything on one piece of silicon improves the performance of the whole by an extra order of magnitude. Latency between the GPU and CPU is reduced - a factor very important for video editing - and both AMD and Intel are extending their CPU-based abilities for automatic overclocking to the GPU part of the die too.

It goes without saying that these advantages will only be apparent if you actually use the GPU part of the chip. But you can have the best of both worlds: switchable graphics such as Nvidia's Optimus chipsets are well established in notebooks already, including the MacBook Pro. These flick between integrated and discrete chips depending on whether or not you're gaming, in order to save battery life or increase framerates.

That's not really a technology that will find much footing on the desktop, where the power saving would be rather minimal for the effort involved, but that's not to say there are no opportunities there. A 'silent running' mode using the on-die GPU would be invaluable for media centres and all-in-one PCs, for example.

More importantly for us, once every PC has integrated graphics there's every likelihood that some GPU-friendly routines that are common in games - such as physics - could be moved onto there by default. It'd be better than the currently messy approach towards implementation of hardware acceleration for non-graphics gaming threads.

Can't do it all

That scenario, however, depends on an element of standardisation that may yet be lacking. Sandy Bridge contains an HD 3000 graphics core which, by all accounts, is Intel's best video processor to date.

HD 3000 is very good at encoding and decoding HD video, plus it supports DirectX 10.1 and OpenGL 2.0. That means it can use the GPU part of the core to accelerate programs such as Photoshop, Firefox 4 and some video transcoders too.

The problem is that, as has become almost traditional, Intel is a full generation behind everyone else when it comes to graphics. It's still living in a Vista world, while we're all on Windows 7. Don't even ask about Linux, by the way, as there's no support for Sandy Bridge graphics at all there yet (although both Intel and Linus Torvalds have promised it's coming).

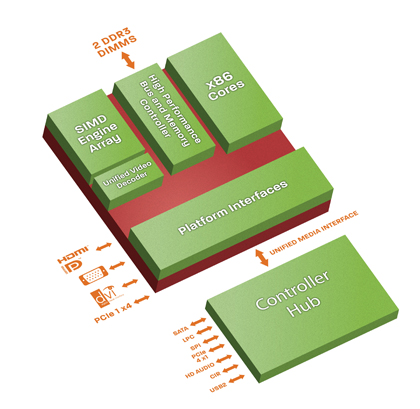

Fusion, by comparison, has a well-supported DirectX 11-class graphics chip based on its current Radeon HD6000 architecture. That means it supports the general purpose APIs OpenCL and DirectX Compute. Sandy Bridge doesn't - which may delay take-up of these technologies for the time being.

THE TIMES ARE A CHANGING: This is arguably the biggest change to PC architecture in two decades

Conversely, Sandy Bridge does feature the new Advanced Vector Extensions (AVX) to the SSE part of the x86 instruction set. These are designed to speed up applications such as video processing by batching together groups of data, and won't be in AMD chips until Bulldozer arrives later this year.

You'll need Windows 7 SP1 to actually make use of AVX, mind you. But by the time applications that use the new instructions appear, most of us will be running that OS revision or later.

Gaming performance

All this is academic if you're just interested in playing games. A quick look at the benchmarks will show you that while the HD 3000 is better than its predecessors for gaming, it's still not much fun unless you're prepared to sacrifice a lot of image quality.

By comparison, the first desktop Fusion chips - codenamed Llano and based on the current K10 architecture - will have more in the way of graphics firepower. But an 80 pipeline Radeon core is still only half the power of AMD's most basic discrete card, the HD6450. We wouldn't recommend one of those, either.

Feedback from games developers we've spoken to has been mixed so far. On the whole, they have broadly welcomed hybrids, and some have gone as far as saying that Sandy Bridge will meet minimum graphics specifications for a number of quite demanding games. Shogun 2 and Operation Flashpoint: Red River are good examples of this.

Ultimately, though, developers are interested in hitting these system requirements because it opens up a large market for their games among laptop owners. As far as innovation goes, the cool kids still code at the cutting edge.

What of the future?

You might be tempted to point out that it's early days yet, and CPU-GPU hybrids - for want of a better phrase - are still in their infancy. Give it a couple more years and the discrete graphics business could be dead.

John Carmack himself recently noted that he didn't see any big leaps for the PC's graphical prowess in the near future. That gives combination processors time to catch up, doesn't it?

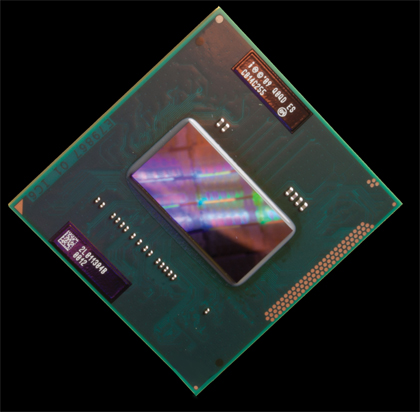

Well, here are some numbers to convince you otherwise. Moore's Law says the number of transistors that can be cost effectively incorporated inside a chip will double every two years. A four-core Sandy Bridge processor with integrated HD 3000 graphics packs around 995 million transistors. According to Intel's oft-quoted founder, that means that we could expect CPUs in 2013 to boast 2,000 million transistors on a single die.

SANDY SILICON: Sandy Bridge is eminently suited to heavy-lifting tasks such as video and photo-editing

Sounds like a lot, until you realise that 2009's Radeon HD5850 already has a transistor count of 2,154 million - which would all have to be squashed onto a CPU die to replicate the performance levels.

By applying Moore's Law, then, it'll be at least 2015 to 2016 before you'll be able to play games at the resolution and quality you were used to 18 months ago. What are the odds of seven-year-old technology being considered more than adequate as an entry level system? Not high, are they?
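The arithmetic behind that estimate can be sketched in a few lines of Python. This is only a rough illustration: the transistor counts are the ones quoted above, while the 2011 base year and the function names are assumptions made for the example, and the model is a plain doubling every two years with no allowance for cost, yields or clock speeds.

```python
import math

# Figures quoted in the article
SANDY_BRIDGE = 995e6   # four-core Sandy Bridge with HD 3000 graphics
HD5850 = 2154e6        # 2009's Radeon HD5850

# Assumptions for this sketch (not from the article)
BASE_YEAR = 2011       # year Sandy Bridge launched
DOUBLING_YEARS = 2.0   # Moore's Law: one doubling every two years


def projected_transistors(year, base_count=SANDY_BRIDGE, base_year=BASE_YEAR):
    """Transistor budget in `year` if the count doubles every DOUBLING_YEARS."""
    return base_count * 2 ** ((year - base_year) / DOUBLING_YEARS)


def year_reaching(target, base_count=SANDY_BRIDGE, base_year=BASE_YEAR):
    """Fractional year in which the projected budget first reaches `target`."""
    return base_year + DOUBLING_YEARS * math.log2(target / base_count)


# Budget two years on: roughly the 2,000 million quoted for 2013
budget_2013 = projected_transistors(2013)

# Year the whole die could match an HD5850's transistor count on its own
gpu_only_year = year_reaching(HD5850)

# Year the die could hold today's CPU *and* an HD5850-sized GPU
cpu_plus_gpu_year = year_reaching(SANDY_BRIDGE + HD5850)

print(f"2013 budget: {budget_2013 / 1e6:.0f} million transistors")
print(f"HD5850-sized die: {gpu_only_year:.1f}")
print(f"CPU plus HD5850-sized GPU: {cpu_plus_gpu_year:.1f}")
```

On these inputs the raw transistor budget for a CPU plus an HD5850-class GPU arrives around 2014, so the 2015 to 2016 figure reads as the pure doubling plus some margin for such a chip to become affordable. Changing `DOUBLING_YEARS` or `BASE_YEAR` shows how sensitive the date is to those assumptions.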

By that reasoning hybrid processors are going to struggle to ever move up into the 'mainstream' performance class. You see, even though graphics engine development has slowed down considerably in recent years, games are still getting more demanding. Even World of Warcraft needs a fairly hefty system to see it presented at its best these days. Just Cause isn't a spectacular looker, but it can strain a respectable system beyond breaking point if you turn all the graphical settings up and the pretty bits on.

We're also finding new ways to challenge our hardware: multi-monitor gaming is now so common it's included in Valve's Steam Survey. That means higher resolutions and more anti-aliasing, neither of which is cheap in processing terms.

And even if Moore's Law were somehow accelerated so that in five years' time integrated GPUs could be cutting edge, a 4,000 million transistor chip is an enormous undertaking that would be expensive to get wrong. Yields would have to be exceptionally good to make it financially viable - it's a much safer bet to produce separate parts for the top performance class.

What's more, high-end graphics chips are incredibly different to CPUs in terms of manufacture and operating environment. They're almost mutually exclusive, in fact.

A Core i7, for example, consumes less power and runs much colder than a GeForce GTX570. Could you get the same graphics power without the 100°C-plus heat? Unlikely.

A CPU's tolerance for error is also much lower - if a single pixel is corrupted on screen because the GPU is overheating, no-one notices, but if a CPU repeatedly misses a read/write operation you have a billion dollar recall to take care of.

The GPU is dead

It would be lovely to be proved wrong, though. If a scenario arises where we can get the same sort of performance from a single chip - with all the overclocking features - that we currently enjoy from the traditional mix of discrete GPU and dedicated CPU, then we'd be first in the queue to buy one.

Right now, though, both Nvidia and AMD reported growth in their graphics divisions during 2010, despite the appearance of Intel's Arrandale hybrids early in the year. Perhaps this is the more likely outcome: it's easy to envision a world in, say, a decade or so, when the market for PC 'separates' is similar to the hi-fi one.

Because after a century of miniaturisation and integration there, valve amps are still the apotheosis of style and performance. In other words, a CPU/GPU hybrid chip may be good enough one day, but it won't be the best. And that is what we will always demand.