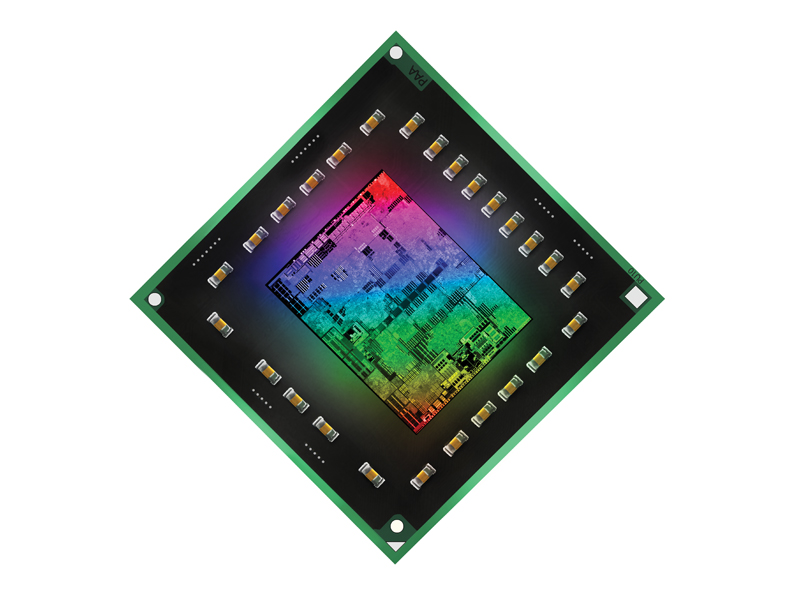

Sandy Bridge and AMD Fusion: hybrid chips explained

The CPU/GPU hybrid chip has come of age

We recently reviewed one of AMD's latest Fusion processors as part of the Asus E35M1-M Pro motherboard. Like Intel's recent Sandy Bridge chips, they put a programmable graphics engine on the same piece of silicon as the CPU. It's a minor modern miracle in metal.

Like the first wave of Intel's CPU/GPU hybrids, these initial Fusion chips from AMD cleverly and cautiously avoid underwhelming us by targeting netbooks, where performance expectations are pretty low. For Intel, it was bolting a rudimentary graphics core onto an Atom to create Pinetrail.

AMD's new Bobcat CPU architecture is more forward-looking, but by going up against Pinetrail and low-end notebooks, it perhaps looks better than it is.

AMD will follow the trail that has been forged here, though. Over the last 12 months, Intel has moved up the value chain with its CPU-die graphics. Arrandale brought the technology to Core i3 and Core i5 chips, and while the launch of Sandy Bridge at CES may have been spoiled by the swift recall of compatible motherboards, it was still briefly triumphant.

Faster and cheaper than the outgoing Nehalem architecture, Sandy Bridge is eminently suited to heavy-lifting tasks that can harness the parallel pipelines of GPUs, such as video and photo editing. The implications for mobile workstations alone are a little mind-boggling, and more than one commentator believes it augurs the death of the discrete graphics card.

The single-silicon future isn't quite upon us, however. Without wanting to put a timescale on things, the chances are PC Format readers will still be buying add-in GPUs for a while yet, for one reason and one reason only: integrated graphics of any sort are, and always have been, rubbish for gaming.

Fusion and Sandy Bridge may still have that new chip smell about them, but they've been in the public eye for a long time. Fusion was formally announced to the world in 2006, around the same time that AMD purchased graphics firm ATI. Sandy Bridge appeared on Intel's roadmaps a year or so later.

Familiarity should not breed contempt, though. It's hard not to be excited by what is arguably the biggest change to PC architecture for 20-plus years. This new approach to chip design is such a radical rethink of how a computer should be built that AMD has even come up with a new name for it: goodbye CPU, hello Accelerated Processing Unit (APU).

While we wish AMD the best of luck trying to get that new moniker to bed in, it is worth digging around on the company's website for the Fusion launch documents. The marketing has been done very well: the white paper PDF does an excellent job of explaining why putting graphics onto the processor die makes sense, and you can find it in a single click from the front page.

By comparison, Intel's site is more enigmatic and downplays the role of on-die graphics. The assumption seems to be that those who care already know, and that most consumers are more interested in the number of cores a chip has.

The next big thing?

That's fair enough: computers are commodities now and a simple 'Intel Inside' has always been the company's best way of building its brand. It also works as a neat way of avoiding hubris.

In the world of PC tech, it's hard to work out what the next big thing will be and what is going to blow.

BATTLE LINES ARE DRAWN: Nvidia has some challenging times ahead

The CPU/GPU hybrid seems like a no-brainer, but a lot has changed in graphics since 3dfx first introduced the masses to the 3D video co-processor. It's been 15 years since Voodoo boards began to transform the sprite-based world of PC gaming, and with almost every new generation there's been some new feature that's promised to change the world.

Some of these breakthroughs have been welcome, and have rightly gone on to achieve industry-standard status. Routines for anti-aliasing, texture filtering and hardware transform and lighting were early candidates for standard adoption, and we now take it for granted that a new GPU will have programmable processors, HD video acceleration and unified shaders.

Other innovations, though, haven't found their way into the canon. At various times we've been promised by graphics vendors that the future lies in technologies that never picked up strong hardware support, such as voxels, tiling, ray tracing and variously outmoded ways of doing shadows.

Then there are those fancy sounding features that seem to have been around forever but still aren't widely used, such as tessellation, ring buses, GPU physics and even multicard graphics.

On a minor, related note, hands up if you've ever used the digital vibrance slider in your control panel. Thought not.

All of which is not to say that Fusion and Sandy Bridge won't catch on. According to the roadmaps, by the end of this year it's going to be pretty hard to buy a CPU that doesn't have a graphics processor built in.

First-generation Core architecture chips are rapidly vanishing from Intel stockists, while AMD is expected to introduce a mainstream desktop combination chip, codenamed Llano, this summer, with a high-performance pairing based on the new Bulldozer CPU following sometime in 2012.

If you buy a new PC by next spring, it'll almost certainly have a hybrid processor at its heart.