All being well, IBM plans to enter its Watson computer into the US gameshow Jeopardy! in 2010. In order to win, the machine will not only have to understand the questions, but dig out the correct answers and speak them intelligibly.

After all the broken promises from the over-optimistic visionaries of the '50s and '60s, are we finally moving towards a real-life HAL? If we are, why has it taken so long?

We caught up with some of the leading minds in artificial intelligence to find out. We've also looked at some of the most amazing milestones in the field, including a robot scientist that can make discoveries on its own.

CLEVER WATSON: IBM is so confident in Watson's abilities that the machine will be entered into the US game show Jeopardy!

The roots of AI

It's been 41 years since Stanley Kubrick directed 2001: A Space Odyssey, but even in 2009 the super-intelligent HAL still looks like the stuff of sci-fi.

Despite masses of research into artificial intelligence, we still haven't developed a computer clever enough for a human to have a conversation with. Where did it all go wrong?

"I think it's much harder than people originally expected," says Dr David Ferrucci, leader of the IBM Watson project team.

The Watson project isn't a million miles from the fictional HAL project: it can listen to human questions, and even respond with answers. Even so, it's taken us a long time to get here. People have been speculating about 'thinking machines' for millennia.

The Greek god Hephaestus is said to have built two golden robots to help him move because of his lameness, and the monster in Mary Shelley's Frankenstein popularised the idea of creating a being capable of thought back in the nineteenth century.

Once computers arrived, the idea of artificial intelligence was bolstered by early advances in the field.

The mathematician Alan Turing started writing a computer chess program as far back as 1948 – even though he didn't have a computer powerful enough to run it. In 1950, Turing wrote 'Computing Machinery and Intelligence' for the journal Mind, in which he outlined the necessary criteria for a machine to be judged as genuinely intelligent.

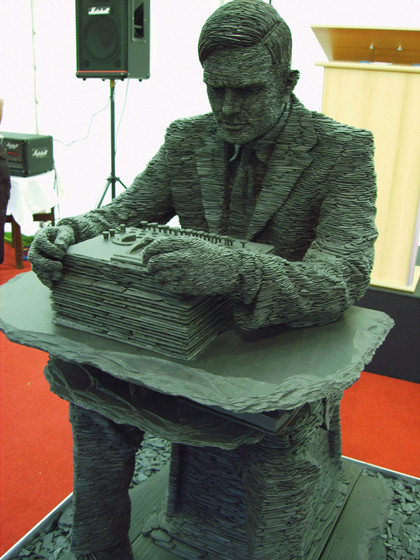

THE TURING TEST: Alan Turing (seen in this statue at Bletchley Park) brought us the Turing Test, still the holy grail of AI researchers

As you may well know, this became known as the Turing Test, and it holds that a machine can be judged intelligent if it can convincingly fool a human examiner into thinking that it is human.

The Turing Test has since become the basis for some of the AI community's challenges and prizes, including the annual Loebner Prize, in which the judges quiz a computer and a human being via another computer and work out which is which. The most convincing AI system wins the prize.

Turing also gave his name to the annual Turing Award, which Professor Ross King, who heads the Department of Computer Science at Aberystwyth University, described as the computing equivalent of the Nobel Prize.

Turing aside, there were also plenty of other advances in the 1950s. Professor King cites the Logic Theorist program as one of the earliest milestones. Developed between 1955 and 1956 by JC Shaw, Allen Newell and Herbert Simon, Logic Theorist introduced the idea of solving logic problems with a computer via a virtual reasoning system that explored a search tree of possible steps.

Not only that, but it also brought us a 'heuristics' system to discard branches of the tree that were unlikely to lead to a satisfactory solution.
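To make the idea of discarding unpromising branches concrete, here is a toy sketch in Python. It is not how Logic Theorist itself worked: the puzzle (reach a goal number from 1 using 'add one' or 'double'), the function names and the rule of thumb (ignore any number larger than the goal) are all assumptions chosen purely for illustration.

```python
from collections import deque


def search(start, goal, expand, promising):
    """Breadth-first search over a tree of states.

    Children that fail the `promising` test are never added to the queue,
    so the whole branch beneath them is skipped.
    Returns (path, expanded), where `expanded` counts the states explored.
    """
    queue = deque([[start]])
    expanded = 0
    while queue:
        path = queue.popleft()
        state = path[-1]
        if state == goal:
            return path, expanded
        expanded += 1
        for child in expand(state):
            if promising(child):
                queue.append(path + [child])
    return None, expanded


def expand(n):
    """Hypothetical move set for the toy puzzle: add one, or double."""
    return [n + 1, n * 2]


GOAL = 10

# With a rule of thumb: overshooting the goal can never help in this puzzle.
pruned_path, pruned_cost = search(1, GOAL, expand, lambda n: n <= GOAL)

# Without it: every branch is kept, however hopeless.
full_path, full_cost = search(1, GOAL, expand, lambda n: True)

print("route found:", pruned_path)
print("states explored with pruning:", pruned_cost)
print("states explored without pruning:", full_cost)
```

Both searches find the same route, but the pruned one explores no more states than the exhaustive one. On a problem this small the saving is slight; the point is that a cheap rule of thumb lets the program skip whole regions of the tree without examining them.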

Logic Theorist was demonstrated in 1956 at the Dartmouth Summer Research Project on Artificial Intelligence, a conference organised by computer scientist John McCarthy, whose proposal for the event introduced the term 'artificial intelligence'.

The proposal for the conference bravely stated the working conjecture that 'every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it'.

The AI revolution had kicked off with a bang, and these impressive early breakthroughs led many to believe that fully fledged thinking machines would arrive by the turn of the millennium. In 1967, Herman Kahn and Anthony J Wiener's predictive tome The Year 2000 stated that "by the year 2000, computers are likely to match, simulate or surpass some of man's most 'human-like' intellectual abilities."

Meanwhile, Marvin Minsky, one of the organisers of the Dartmouth AI conference and winner of the Turing Award in 1969, suggested in 1967 that "within a generation ... the problem of creating 'artificial intelligence' will substantially be solved".

You can see why people were so optimistic, considering how much had been achieved already. But why are we still so far from these predictions?