The post-PC age. The term may have been coined by Steve Jobs and caused Steve Ballmer some difficult moments in interviews, but we're not worried about corporate point-scoring - we're excited about the possibilities beyond the desktop.

Once, personal computing simply meant having access to a machine you could call your own, and you could do so without needing to spend a fortune. Today, it has become much more personal.

That might sound like boardroom rhetoric, but if you consider how computing has changed since Windows ruled all, it's undeniable. Today, people don't just rely on a PC linked to a socket in the wall for internet access. Instead we depend on gadgets like smartphones, tablets and laptops.

These portable devices allow us to access our data wherever and whenever we want. That's the modern essence of personal computing - your data, your way, anywhere, any time. We're going to explore the technologies that the world's biggest tech firms are cooking up to make personal computing even more immediate and powerful.

We're not interested in the pie-in-the-sky musings of futurologists and professional guessers, though. We've commissioned our team of crack writers to dig around and uncover technologies and products that are nearing completion. We'll look at the next chips Intel hopes will push computing to newer heights while frugally sipping battery power. We'll explore the graphics technologies that Nvidia believes will let your smartphone play games with dazzle and finesse previously reserved for desktop PCs.

Finally, we'll investigate new storage technologies, cutting-edge screens and other innovations in mobile technology that will soon be arriving in your hand.

The internet of things

Website: www.theinternetofthings.eu

What is it?

The internet used to be just about connecting computers to share information, but it's grown into something much bigger. In 2008 there were more devices (not just computers and phones) connected to the internet than there were people on Earth. The internet of things is about the staggering potential of putting absolutely everything online.

Why is it important?

Imagine linking everything to the internet, from pacemakers to roads. Now consider what would be possible if those objects could all broadcast data too. Suddenly we would have a much greater understanding of the world around us, and a wealth of new data that could be used to improve our lives. Entire cities would be networked, with older infrastructure being upgraded and incorporated to work alongside the new.

How does it work?

Under IPv4, the world was running out of the IP addresses necessary for devices to connect to the internet. Some countries had even exhausted their supply. IPv6 – the next generation internet protocol – will enable 340 trillion, trillion, trillion devices to connect to the internet. By one widely quoted estimate, that's around 100 addresses for every atom on the surface of the Earth.

IPv6 makes the internet of things possible, and was a result of technologists striving towards that goal. Cisco estimates that by 2020 there will be 50 billion devices online, all broadcasting and receiving data.
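The address counts quoted above follow from the width of an address: IPv4 addresses are 32 bits long and IPv6 addresses are 128 bits long. A quick sketch of the arithmetic in Python (the variable names are ours, and it counts addresses rather than real-world devices):

```python
# Address-space size follows from the address width in bits.
IPV4_BITS = 32
IPV6_BITS = 128

ipv4_addresses = 2 ** IPV4_BITS
ipv6_addresses = 2 ** IPV6_BITS

# "Trillion, trillion, trillion" is 10^12 * 10^12 * 10^12 = 10^36.
trillion_cubed = 10 ** 36

print(f"IPv4: {ipv4_addresses:,} addresses")
print(f"IPv6: about {ipv6_addresses / trillion_cubed:.0f} trillion trillion trillion addresses")
```

The first line shows why IPv4 ran dry: roughly 4.3 billion addresses is fewer than one per person on the planet.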

NTV processors

Website: www.intel.com

What is it?

A serious x86 processor capable of running at up to 10 times the efficiency of today's CPUs, with power consumption as low as 2mW and the ability to scale from 280mV when running at 3MHz, all the way up to 1.2V at 1GHz.

Why is it important?

Improved energy efficiency is vital for the growth of the computing continuum, not just in terms of HPC servers, but also in terms of the devices in your pocket.

How does it work?

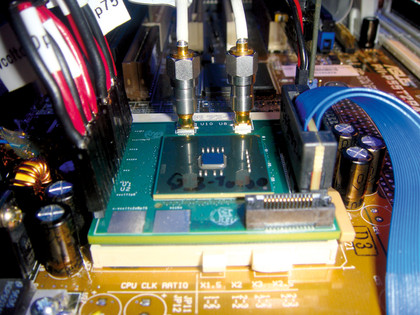

Back in September 2011, Intel showed off a prototype x86 CPU so low-powered that it could be powered by a solar cell the size of a postage stamp. This was its first introduction of the Claremont platform and its vision for near-threshold voltage (NTV) processors.

The threshold voltage is the voltage required for a given transistor to switch into an on state. Current CPU designs operate at several times the threshold voltage in order to make gains in performance. If you run a processor at close to the threshold voltage, you make significant gains in efficiency - Intel is claiming 5-10 times.

Another by-product of operating this close to the threshold voltage is that the processor can operate at a wide dynamic range of operating frequencies.
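To get a feel for why lowering the voltage matters so much, here is a rough sketch using the textbook approximation that a chip's switching power scales with voltage squared times frequency. This is our simplification, not Intel's model: it uses the two operating points quoted above, the function names are hypothetical, and it ignores leakage current and other real-world losses, so treat the output as an illustration of the trend rather than a prediction.

```python
def switching_energy_ratio(v_high: float, v_low: float) -> float:
    """Energy per switching event at v_high relative to v_low, assuming E ~ C * V^2."""
    return (v_high / v_low) ** 2


def switching_power_ratio(v_high: float, f_high: float, v_low: float, f_low: float) -> float:
    """Dynamic power at (v_high, f_high) relative to (v_low, f_low), assuming P ~ C * V^2 * f."""
    return switching_energy_ratio(v_high, v_low) * (f_high / f_low)


# Operating points quoted for the prototype: 1.2V at 1GHz and 280mV at 3MHz.
V_FAST, F_FAST = 1.2, 1_000e6
V_SLOW, F_SLOW = 0.28, 3e6

energy_ratio = switching_energy_ratio(V_FAST, V_SLOW)
power_ratio = switching_power_ratio(V_FAST, F_FAST, V_SLOW, F_SLOW)

print(f"Switching energy per cycle: about {energy_ratio:.0f}x lower at 280mV")
print(f"Switching power: about {power_ratio:.0f}x lower at 280mV and 3MHz")
```

Because voltage is squared in this approximation, even a modest drop in voltage cuts the energy spent on each clock cycle sharply, which is the effect NTV designs are chasing.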

Nvidia GeForce GRID

Website: www.nvidia.com

What is it?

GeForce GRID is the name given to a new GPU capable of powering game streaming servers with twice the power efficiency of the current crop. It allows the servers to move from one game each to around eight streams per server.

Why is it important?

Again, such energy efficiency is going to be vital for a growing market like game streaming, which relies on such high performance and power-hungry servers. It will also mean the user needs only a device with an H.264 decoder (a modern tablet, for example) in order to play full PC games.

How does it work?

Cloud gaming services have been around for a while, but due to weak network infrastructure, and twice the latency and lag of a home console experience, they have yet to take off.

One of the main improvements that has come from the Nvidia GeForce GRID has been the slashing of latency between the user and the back end. One of the ways it has done this is by shifting the capture and encoding part of the stream onto the GPU itself. That means that once the card has finished displaying the frame, it's ready to be fired across the network without having to go anywhere near the CPU.

The latency you experience with a home console is around 160ms, with the first generation of cloud gaming services being almost double that. Nvidia says that with Gaikai and GeForce GRID, latency will come down to almost 150ms. The home console is in trouble.

Conflict-free microprocessor

Website: http://supplier.intel.com

What is it?

There are four materials classed as 'conflict minerals' in today's microprocessors. One of these, tantalum, is processed from coltan. This is mainly found in the Democratic Republic of Congo, where profits from smuggling finance the military regime.

Why is it important?

Profits from the sale of these materials have been linked to human rights atrocities in the eastern region of the DRC. Intel hopes that removing this money will help stop the violence.

How does it work?

When the issue first arose, Intel released a statement that it was "unable to verify the origin of the minerals which are used in our products." It then started mapping and tracing the supply chain for all the four main conflict minerals: tantalum, tungsten, gold and tin.

Flash forward to 2012 and Intel claims to have the supply line mapped to 90 per cent, and is working to ensure that in 2012 it will be able to demonstrate that its microprocessors are verified as conflict-free for tantalum. The goal is that, by the end of 2013, it will be able to say for sure that it is manufacturing the world's first microprocessor validated as entirely conflict-free for all four metals.

System on a chip

Website: www.amd.com, www.intel.com, www.nvidia.com

What is it?

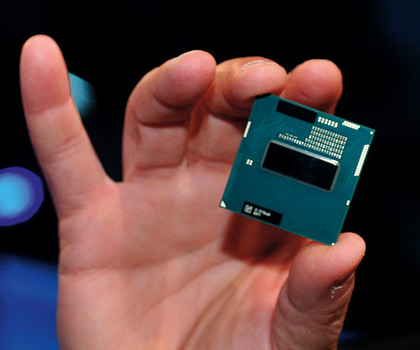

The holy grail for chip manufacturers and designers has long been a full computer system shrunk down to fit on a single chip. We have been getting closer and closer, and will soon be closer still.

Why is it important?

It's all about energy consumption and efficiency. Instead of having myriad chunks of fixed-function silicon doing different things and only then talking to each other, having a single chip capable of doing everything is far more efficient.

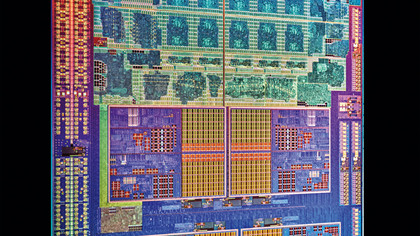

How does it work?

These chips are a fusion of a traditional CPU and GPU, putting both bits of silicon in the same processor die. This is far more efficient than using discrete GPU and CPU silicon, though as yet the performance isn't up there with its separated brethren.

AMD will be the first to combine its latest GPU and CPU technology in a real performance APU (its name for these fused chips), with its upcoming Kaveri platform due in 2013.

Intel has taken a slightly different approach, essentially making its top processors close to system-on-a-chip parts with the Ivy Bridge CPUs. These have an improved DX11 GPU on-die along with the fastest consumer CPU parts around. The upcoming Haswell parts will include an even greater focus on graphics performance.

Nvidia isn't to be left out either, with its forthcoming Project Denver. Nvidia CEO Jen-Hsun Huang promised that it will be a serious gamer's chip with a full Nvidia GPU as part of the package. It's likely to be ARM-based, not x86, but could be another compelling SoC option going forward.

Memristor

Website: www.memristor.org

What is it?

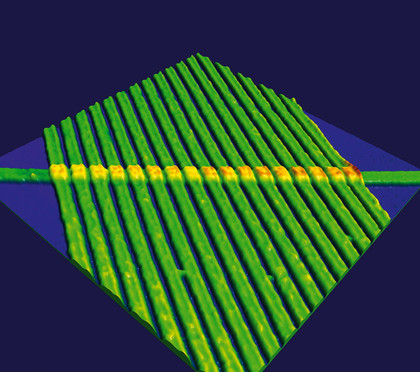

A memristor is a 'memory resistor' - a resistor that 'remembers' the previously held resistance.

Why is it important?

The memristor was theorised in 1971 by Leon Chua, but it wasn't until 2008 that it was actually produced by HP Labs. The race is now on to make memristors in an affordable way, as they could potentially replace NAND flash memory.

How does it work?

The theory behind the memristor is straightforward enough - when current flows through it one way, the resistance increases. Flow current through it the other way and it decreases. When no current is flowing, it retains the last resistance that it had.
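That behaviour is easy to mimic in a few lines of Python. The toy model below is our own illustration, not HP's device physics: the class name, the resistance limits and the `ohms_per_ma_second` sensitivity are all made-up values chosen to keep the numbers readable.

```python
class ToyMemristor:
    """Toy memristor: resistance drifts with the current pushed through it and is kept when idle."""

    def __init__(self, resistance=1000.0, r_min=100.0, r_max=16000.0, ohms_per_ma_second=1000.0):
        self.resistance = resistance                  # current state, in ohms
        self.r_min = r_min                            # hypothetical lower limit
        self.r_max = r_max                            # hypothetical upper limit
        self.ohms_per_ma_second = ohms_per_ma_second  # hypothetical sensitivity

    def apply_current(self, current_ma: float, seconds: float) -> float:
        """Positive current raises the resistance, negative lowers it, zero leaves it alone."""
        change = self.ohms_per_ma_second * current_ma * seconds
        self.resistance = min(self.r_max, max(self.r_min, self.resistance + change))
        return self.resistance


cell = ToyMemristor()
print(cell.apply_current(1.0, 1.0))    # current one way: resistance rises
print(cell.apply_current(0.0, 10.0))   # no current: the last resistance is retained
print(cell.apply_current(-0.5, 1.0))   # current the other way: resistance falls
```

Reading the stored value back is then a matter of measuring the resistance, which is what makes the device attractive as memory that needs no power to hold its state.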

As memristors don't need a charge to hold their state, they are ideal for non-volatile operations and are a potential replacement for existing NAND flash. This is made more tempting by the fact that memristors don't need much current to operate, and can be run at far faster speeds than existing NAND flash.

There is a problem though - they're expensive to manufacture. Recent research by UCL happened upon a way of making silicon-oxide memristors using existing manufacturing technologies, which should be significantly cheaper than the approaches used by the big labs.

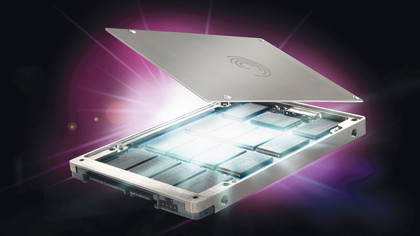

Memory Modem

What is it?

Memory Modem technology takes many of the ideas used in the world of communications and uses them to improve the reliability and throughput of NAND flash memory, as used in SSDs.

Why is it important?

SSD prices have fallen significantly in recent months. This has been driven by an abundance of manufacturers and improved manufacturing for the NAND flash used in SSDs, but without new technologies, these price reductions will plateau.

How does it work?

The announcement that Seagate is collaborating with DensBits to produce cheaper solid state drives for the desktop and enterprise market will hopefully shift the industry as a whole to produce even more affordable SSDs, which should mean they become truly ubiquitous. While we've seen pricing of SSDs tumble this year, this freefall isn't expected to continue due to the inherent costs of manufacturing NAND chips.

Technologies are needed that maximise existing NAND chips, and this is exactly what the agreement between DensBits and Seagate should enable. DensBits Memory Modem tech will find its way into several of Seagate's storage technologies, including three bits/cell (TLC) consumer devices and two bits/cell (MLC) enterprise offerings.

Memory Modem technology enables cells to contain more bits, and lets drives use smaller process nodes. In other words, the SSDs can use cheaper NAND flash, but operate at competitive speeds.
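To see why packing more bits into a cell calls for cleverer signal processing, consider how many distinct charge levels a cell has to hold. The sketch below is generic flash arithmetic rather than anything specific to DensBits: the function names are ours, and the spacing is expressed as a fraction of a normalised voltage window, which is an assumption made purely for illustration.

```python
def levels_per_cell(bits_per_cell: int) -> int:
    """A cell storing n bits must distinguish 2^n charge levels."""
    return 2 ** bits_per_cell


def relative_level_spacing(bits_per_cell: int) -> float:
    """Gap between neighbouring levels as a fraction of a fixed (normalised) voltage window."""
    return 1.0 / (levels_per_cell(bits_per_cell) - 1)


for name, bits in [("MLC", 2), ("TLC", 3)]:
    levels = levels_per_cell(bits)
    spacing = relative_level_spacing(bits)
    print(f"{name}: {bits} bits/cell, {levels} levels, spacing {spacing:.0%} of the window")
```

Going from two bits to three doubles the number of levels and more than halves the gap between them, so reads become far easier to get wrong. Borrowing error-handling ideas from communications is one way to claw that margin back.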

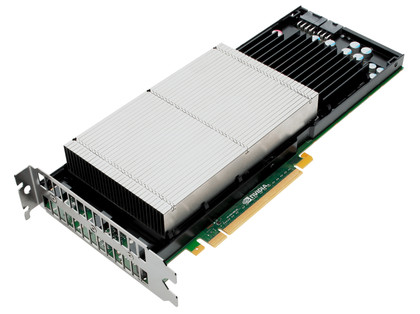

GPU supercomputer

Website: www.nvidia.com

What is it?

Nvidia's latest release might look like just another graphics card, but the intended application of Tesla K20 is far removed from powering the latest games. This is a graphics processor unit designed to help Nvidia reclaim the mantle of the world's most powerful computer.

Why is it important?

Nvidia's GPUs are already used by Oak Ridge National Laboratory in Tennessee, but this upgrade should see the supercomputer leapfrog into first place as the fastest in the world.

How does it work?

In order to claim the number one spot in the supercomputer hall of fame (www.top500.org), Nvidia needed to redesign its GPUs significantly. Not only did it need to produce a more powerful GPU, it needed to make a more efficient one at the same time.

It has done this with its latest GPU, the Tesla K20 (codename GK110) - slowing the operating frequency, but also cramming more CUDA cores into each chip. 19,000 of these new GPUs will be used to upgrade the existing Titan supercomputer, which is housed in the US Department of Energy's biggest science laboratory.

Nvidia claims that this upgrade will equate to an eightfold improvement in performance, capable of handling 25,000 trillion floating point operations per second. It should also mean that Titan is twice as fast as the world's second fastest supercomputer, Fujitsu's K Computer in Japan.
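Those headline numbers are easier to grasp broken down. The back-of-the-envelope sketch below uses only the figures quoted above, and makes the simplifying assumption that all of the machine's throughput comes from its GPUs (the CPUs contribute too, so the real per-GPU share will be somewhat lower). The variable names are ours.

```python
TOTAL_FLOPS = 25_000e12   # 25,000 trillion floating point operations per second
GPU_COUNT = 19_000        # Tesla K20 GPUs in the upgrade
CLAIMED_SPEEDUP = 8       # "eightfold improvement"

# Assumption: every operation is credited to a GPU.
flops_per_gpu = TOTAL_FLOPS / GPU_COUNT

# Performance before the upgrade, as implied by the eightfold claim.
implied_previous_flops = TOTAL_FLOPS / CLAIMED_SPEEDUP

print(f"Per GPU: about {flops_per_gpu / 1e12:.1f} trillion operations per second")
print(f"Implied pre-upgrade total: about {implied_previous_flops / 1e12:,.0f} trillion per second")
```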

DDR4

Website: www.jedec.org

What is it?

The replacement for the existing DDR3 memory standard found in our machines.

Why is it important?

As our systems get faster, the amount of data we shift around increases, which puts a load on the system buses and memory architecture. We can't simply stick with DDR3 and hope for the best.

How does it work?

The goal for DDR4 isn't a million miles away from the original aim for DDR3 - to improve performance over the previous technology while reducing power requirements. This latter point is achieved by setting the supply voltage for DDR4 at 1.2V, as opposed to DDR3's 1.5V.

There are DDR3 memory modules available at less than 1.5V, and DDR4 is expected to follow suit, with low voltage memory running at 1.05V likely to appear too.

When it comes to increasing the performance of DDR4, the specification doesn't see a tremendous change in the prefetch buffers, instead relying on an increase in the operating frequency to garner speed improvements.

The first DDR4 modules will have an operating frequency of 1,600MHz, though given that DDR3 already lays claims to such speeds, faster modules should be available too. The upper limit of the specification defines a 3,200MHz frequency, but it may be a while before modules running this fast are released.
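What do those figures mean in practice? The sketch below estimates peak bandwidth and the effect of the lower voltage. It rests on assumptions of ours rather than anything in the specification text quoted here: that the quoted speeds are effective data rates in millions of transfers per second, that each module has a standard 64-bit (8-byte) data bus, and that switching power scales roughly with voltage squared. The function names are hypothetical.

```python
BUS_WIDTH_BYTES = 8  # assumption: a standard 64-bit memory module


def peak_bandwidth_gb_per_s(mega_transfers_per_s: int) -> float:
    """Theoretical peak bandwidth of one module in GB/s (1 GB = 1,000 MB)."""
    return mega_transfers_per_s * BUS_WIDTH_BYTES / 1000


def relative_switching_power(new_voltage: float, old_voltage: float) -> float:
    """Rough power ratio at the same frequency, assuming power ~ V^2."""
    return (new_voltage / old_voltage) ** 2


for rate in (1600, 3200):
    print(f"{rate} MT/s: up to {peak_bandwidth_gb_per_s(rate):.1f} GB/s per module")

ddr4_vs_ddr3 = relative_switching_power(1.2, 1.5)
print(f"1.2V versus 1.5V: about {ddr4_vs_ddr3:.0%} of the switching power")
```

Under these assumptions, the top of the specification doubles the peak bandwidth of the first modules, while the voltage drop alone trims switching power by roughly a third.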

John Carmack's virtual reality

Website: www.idsoftware.com

What is it?

A virtual reality headset that Doom and Quake creator John Carmack pieced together in his spare time. It uses an 'affordable' optics kit, a 6-inch screen and a software layer to drive the display, giving you the impression of old-school virtual reality.

Why is it important?

Despite a promising stab at the mainstream in the late 80s, virtual reality was limited by the hardware of the time. When the likes of graphics supremo John Carmack start getting excited though, it's worth taking a look to see where it could lead.

How does it work?

Virtual reality, if you don't remember it the first time around, works in a similar way to stereoscopic 3D. Each eye sees a slightly different image, which you then interpret as a 3D space. When it comes to VR, instead of polarising glasses, you use a helmet containing two displays. The movement of your head is recorded as an input, and is transferred to the virtual world.
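The two-image trick can be sketched in a few lines. The example below is a generic illustration, not Carmack's software: it takes a tracked head position and the direction the head is turned, then works out where to place a virtual camera for each eye. The function name, the flat two-axis layout (x to the right, z straight ahead) and the 64mm eye separation are all assumptions of ours.

```python
import math


def eye_positions(head_x: float, head_z: float, yaw_degrees: float, eye_separation: float = 0.064):
    """Return ((left_x, left_z), (right_x, right_z)) for a head turned yaw_degrees to the right."""
    yaw = math.radians(yaw_degrees)
    # Unit vector pointing out of the wearer's right ear, seen from above.
    right_x, right_z = math.cos(yaw), -math.sin(yaw)
    half = eye_separation / 2
    left_eye = (head_x - right_x * half, head_z - right_z * half)
    right_eye = (head_x + right_x * half, head_z + right_z * half)
    return left_eye, right_eye


# Head at the origin, looking straight ahead, then turned 90 degrees to the right.
for yaw in (0, 90):
    left, right = eye_positions(0.0, 0.0, yaw)
    print(f"yaw {yaw}: left eye {left}, right eye {right}")
```

A renderer would draw the scene once from each of these positions and send one image to each display, repeating the calculation every frame as the head tracker reports new movement.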

VR resurfaced when John Carmack demonstrated his pet project on the subject behind closed doors at this year's E3, complete with a working headset and game. The headset comes in kit form, but the optics are good enough to make it a compelling experience.

Carmack's id Software is looking to re-release Doom III later this year, and the game will support VR helmets, including the software layer needed to make those optics work when attached to a standard screen.
