Samsung has a crazy idea for AI that might just work: add a processor inside RAM

Samsung’s 512GB CXL-PNM card could help to solve one of the biggest cost and energy sinks in computing

Samsung is framing its latest foray into the realm of processing-in-memory (PIM) and processing-near-memory (PNM) as a means to boost performance and lower the costs of running AI workloads.

The company has dubbed its latest proof-of-concept technology, which it unveiled at Hot Chips 2023, CXL-PNM. It is a 512GB card with up to 1.1TB/s of bandwidth, according to Serve the Home.
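To put those two figures in perspective, here is a rough back-of-envelope sketch of how long it would take to stream the card's full 512GB once at the quoted peak of 1.1TB/s. It assumes decimal units (1TB = 1,000GB) and sustained peak bandwidth, neither of which the source confirms, and the function name is purely illustrative.

```python
# Back-of-envelope: time to read the whole card once at peak bandwidth.
# Assumptions (not from Samsung): decimal units and sustained peak throughput.

def full_sweep_seconds(capacity_gb: float, bandwidth_tb_per_s: float) -> float:
    """Seconds needed to stream capacity_gb once at bandwidth_tb_per_s."""
    bandwidth_gb_per_s = bandwidth_tb_per_s * 1000.0  # 1 TB = 1000 GB (assumed)
    return capacity_gb / bandwidth_gb_per_s

sweep = full_sweep_seconds(512, 1.1)
print(f"{sweep:.3f} s per full pass, about {1 / sweep:.1f} passes per second")
```

Under those assumptions, a full pass over the card's memory takes a little under half a second. Real-world throughput would likely be lower.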

The card would help to solve one of the biggest cost and energy sinks in AI computing: the movement of data between storage and memory locations on computing engines.

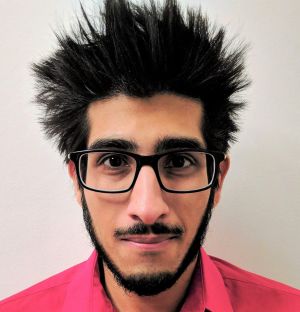

Samsung CXL-PNM

Samsung’s testing shows the card is 2.9 times more energy efficient than a single A-GPU, and a cluster of eight CXL-PNMs is 4.4 times more energy efficient than eight A-GPUs. In addition, an appliance fitted with the card emits 2.8 times less CO2 and delivers 4.3 times better operational and environmental efficiency.

The card relies on Compute Express Link (CXL) technology, an open standard for a high-speed processor-to-device and processor-to-memory interface that paves the way for more efficient use of memory and accelerators with processors.

The firm believes this card can offload workloads onto PIM or PNM modules, an approach it has also explored in its LPDDR-PIM. Doing so will save costs and power consumption, Samsung claims, as well as extend battery life in devices by preventing the over-provisioning of memory for bandwidth.

Samsung’s LPDDR-PIM boosts performance by 4.5 times versus in-DRAM processing and reduces energy usage by using the PIM module. Although its internal bandwidth is just 102.4GB/s, computation stays on the memory module, so there’s no need to transmit data back to the CPU.

Samsung has been exploring technologies like this for some years, although the CXL-PNM is the closest it has come to date to incorporating them into what might soon become a viable product. It also follows the company’s 2022 HBM-PIM prototype.

Samsung applied its HBM-PIM card, made in collaboration with AMD, to large-scale AI applications. The addition of HBM-PIM boosted performance by 2.6%, while increasing energy efficiency by 2.7%, against existing GPU accelerators.

The race to build the next generation of components fit to handle the most demanding AI workloads is well and truly underway. Companies from IBM to d-Matrix are drawing up technologies that aim to oust the best GPUs.

Keumars Afifi-Sabet is the Technology Editor for Live Science. He has written for a variety of publications including ITPro, The Week Digital and ComputerActive. He has worked as a technology journalist for more than five years, having previously held the role of features editor with ITPro. In his previous role, he oversaw the commissioning and publishing of long form in areas including AI, cyber security, cloud computing and digital transformation.