AMD unveils puzzling new MI355X AI GPU as it acknowledges there won't be any AI APU for now

AMD claims 1.2X-1.3X inference lead over Nvidia's B200 and GB200 offerings

- MI355X leads AMD's new MI350 Series with 288GB memory and full liquid-cooled performance

- AMD drops APU integration, focusing on rack-scale GPU flexibility

- FP6 and FP4 data types highlight MI355X’s inference-optimized design choices

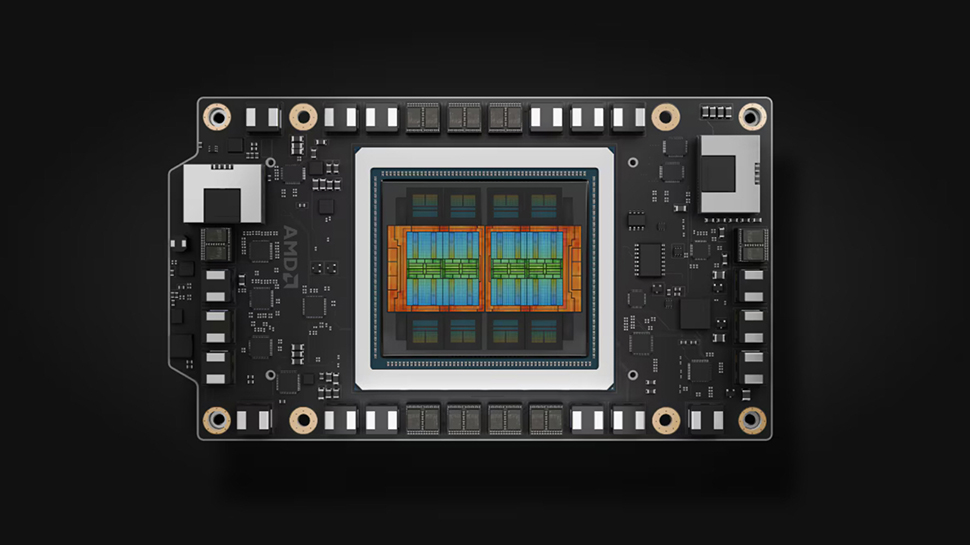

AMD has unveiled its new MI350X and MI355X GPUs for AI workloads at its 2025 Advancing AI event, offering two options built on its latest CDNA 4 architecture.

While both share a common platform, the MI355X stands apart as the higher-performance, liquid-cooled variant designed for demanding, large-scale deployments.

The MI355X supports up to 128 GPUs per rack and delivers high throughput for both training and inference workloads. It features 288GB of HBM3E memory and 8TB/s memory bandwidth.
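For a sense of scale, the per-GPU figures above can be multiplied out to a full rack. The sketch below is back-of-envelope arithmetic only: it assumes a fully populated 128-GPU rack and decimal units, and the summed bandwidth is simply the total of each GPU's local HBM bandwidth, not a shared pool. The function name `rack_totals` is illustrative.

```python
# Figures quoted in the article for a single MI355X.
GPUS_PER_RACK = 128      # "up to 128 GPUs per rack"
HBM_PER_GPU_GB = 288     # HBM3E capacity per GPU
BW_PER_GPU_TBS = 8       # memory bandwidth per GPU, in TB/s


def rack_totals(gpus=GPUS_PER_RACK, hbm_gb=HBM_PER_GPU_GB, bw_tbs=BW_PER_GPU_TBS):
    """Return aggregate HBM capacity and summed local memory bandwidth for one rack."""
    total_gb = gpus * hbm_gb
    return {
        "hbm_gb": total_gb,
        "hbm_tb": total_gb / 1000,       # assumption: decimal units, 1 TB = 1000 GB
        "bandwidth_tbs": gpus * bw_tbs,  # sum of per-GPU bandwidth, not a shared figure
    }


if __name__ == "__main__":
    print(rack_totals())
```

On those assumptions, a full rack would hold 36,864GB (about 36.9TB) of HBM3E.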

GPU-only design

AMD claims the MI355X delivers up to 4 times the AI compute and 35 times the inference performance of its previous generation, thanks to architectural improvements and a move to TSMC’s N3P process.

Inside, the chip includes eight compute dies with 256 active compute units and a total of 185 billion transistors, 21% more than the prior model. The compute dies connect through redesigned I/O tiles, whose number has been cut from four to two, a change AMD says doubles internal bandwidth while lowering power consumption.
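The die figures invite two quick derived numbers: the transistor count the 21% increase implies for the prior model, and how many active compute units each die carries. This is a rough sketch from the article's own numbers; it assumes the 21% is measured against the previous chip's total transistor count and that active compute units are spread evenly across the eight dies. Neither assumption is confirmed by the article, and the function names are illustrative.

```python
TRANSISTORS_BILLION = 185   # MI355X total, per the article
INCREASE = 0.21             # "21% increase over the prior model"
COMPUTE_DIES = 8
ACTIVE_CUS = 256


def implied_previous_transistors(current=TRANSISTORS_BILLION, increase=INCREASE):
    """Transistor count (billions) of the prior model implied by the stated increase."""
    return current / (1 + increase)


def active_cus_per_die(active_cus=ACTIVE_CUS, dies=COMPUTE_DIES):
    """Active compute units per die, assuming an even split across dies."""
    return active_cus // dies


if __name__ == "__main__":
    print(f"Implied prior model: ~{implied_previous_transistors():.0f} billion transistors")
    print(f"Active CUs per die:  {active_cus_per_die()}")
```

That works out to roughly 153 billion transistors for the prior model and 32 active compute units per die.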

The MI355X is a GPU-only design, dropping the CPU-GPU APU approach used in the MI300A. AMD says this decision better supports modular deployment and rack-scale flexibility.

It connects to the host via a PCIe 5.0 x16 interface and communicates with peer GPUs using seven Infinity Fabric links, reaching over 1TB/s in GPU-to-GPU bandwidth.
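To put the two interconnects side by side, the sketch below estimates the theoretical PCIe 5.0 x16 rate from the standard's 32 GT/s per-lane signalling and 128b/130b encoding, and derives a floor for per-link Infinity Fabric bandwidth from the article's "over 1TB/s" across seven links. The article does not say whether that 1TB/s figure is per direction or aggregate, so the per-link number is only a lower bound on whatever AMD is counting; the PCIe figure is per direction and ignores protocol overhead.

```python
PCIE5_GT_PER_LANE = 32        # PCIe 5.0 raw signalling rate per lane, GT/s
PCIE_ENCODING = 128 / 130     # 128b/130b line encoding efficiency
PCIE_LANES = 16

FABRIC_LINKS = 7
FABRIC_TOTAL_GBS = 1000       # article says "over 1TB/s"; treated here as a floor


def pcie5_x16_gbs():
    """Theoretical PCIe 5.0 x16 throughput in GB/s, per direction."""
    return PCIE5_GT_PER_LANE * PCIE_ENCODING * PCIE_LANES / 8


def min_per_link_gbs(total=FABRIC_TOTAL_GBS, links=FABRIC_LINKS):
    """Lower bound on per-link Infinity Fabric bandwidth in GB/s."""
    return total / links


if __name__ == "__main__":
    print(f"PCIe 5.0 x16:         ~{pcie5_x16_gbs():.1f} GB/s per direction")
    print(f"Infinity Fabric link: >{min_per_link_gbs():.1f} GB/s")
```

Under those assumptions the host link tops out near 63GB/s, while each peer link carries at least about 143GB/s.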


Each HBM stack pairs with 32MB of Infinity Cache, and the architecture supports newer, lower-precision formats like FP4 and FP6.

The MI355X runs FP6 operations at FP4 rates, a feature AMD highlights as beneficial for inference-heavy workloads. It also offers 1.6 times the HBM3E memory capacity of Nvidia’s GB200 and B200, although memory bandwidth remains similar. AMD claims a 1.2x to 1.3x inference performance lead over Nvidia’s top products.
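Why the low-precision formats matter for inference comes down to simple arithmetic: fewer bits per weight means more parameters fit in the same 288GB. The sketch below counts weights only and assumes decimal gigabytes; it ignores KV cache, activations and any per-block scaling metadata, so real-world limits will be lower. It also backs out the Nvidia capacity implied by the article's 1.6x claim. Function names are illustrative.

```python
HBM_BYTES = 288 * 10**9     # 288GB per MI355X, decimal bytes (assumption)
FORMAT_BITS = {"FP16": 16, "FP8": 8, "FP6": 6, "FP4": 4}


def max_params(bits, hbm_bytes=HBM_BYTES):
    """Upper bound on parameters that fit in HBM if memory held weights alone."""
    return hbm_bytes * 8 // bits


def implied_competitor_gb(amd_gb=288, ratio=1.6):
    """Memory capacity (GB) implied for the Nvidia parts by the 1.6x claim."""
    return amd_gb / ratio


if __name__ == "__main__":
    for name, bits in FORMAT_BITS.items():
        print(f"{name}: up to {max_params(bits) / 10**9:.0f} billion parameters")
    print(f"Implied Nvidia capacity: ~{implied_competitor_gb():.0f} GB")
```

On this weights-only basis, a single GPU's memory holds up to 144 billion parameters at FP16, 384 billion at FP6 and 576 billion at FP4, and the 1.6x claim implies about 180GB on the Nvidia side.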

The GPU draws up to 1,400W in its liquid-cooled form, delivering higher performance density per rack. AMD says this improves TCO by allowing users to scale compute without expanding physical footprint.
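The density claim has a power cost that is easy to estimate. A minimal sketch, assuming a fully populated 128-GPU rack with every GPU at its 1,400W ceiling, and counting GPUs only (no host CPUs, networking or cooling overhead):

```python
GPU_POWER_W = 1400      # "up to 1,400W" per liquid-cooled MI355X
GPUS_PER_RACK = 128     # "up to 128 GPUs per rack"


def rack_gpu_power_kw(gpus=GPUS_PER_RACK, watts=GPU_POWER_W):
    """Peak GPU-only power draw for one rack, in kilowatts."""
    return gpus * watts / 1000


if __name__ == "__main__":
    print(f"Peak GPU power per rack: {rack_gpu_power_kw():.1f} kW")
```

That comes to about 179kW of GPU power per rack at peak, before anything else in the rack is counted.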

The chip fits into standard OAM modules and is compatible with UBB platform servers, speeding up deployment.

“The world of AI isn’t slowing down - and neither are we,” said Vamsi Boppana, SVP, AI Group. “At AMD, we’re not just keeping pace, we’re setting the bar. Our customers are demanding real, deployable solutions that scale, and that’s exactly what we’re delivering with the AMD Instinct MI350 Series. With cutting-edge performance, massive memory bandwidth, and flexible, open infrastructure, we’re empowering innovators across industries to go faster, scale smarter, and build what’s next.”

AMD plans to launch its Instinct MI400 series in 2026.


Wayne Williams is a freelancer writing news for TechRadar Pro. He has been writing about computers, technology, and the web for 30 years. In that time he wrote for most of the UK’s PC magazines, and launched, edited and published a number of them too.
