How data mining works

Learn about the growing practice of data mining

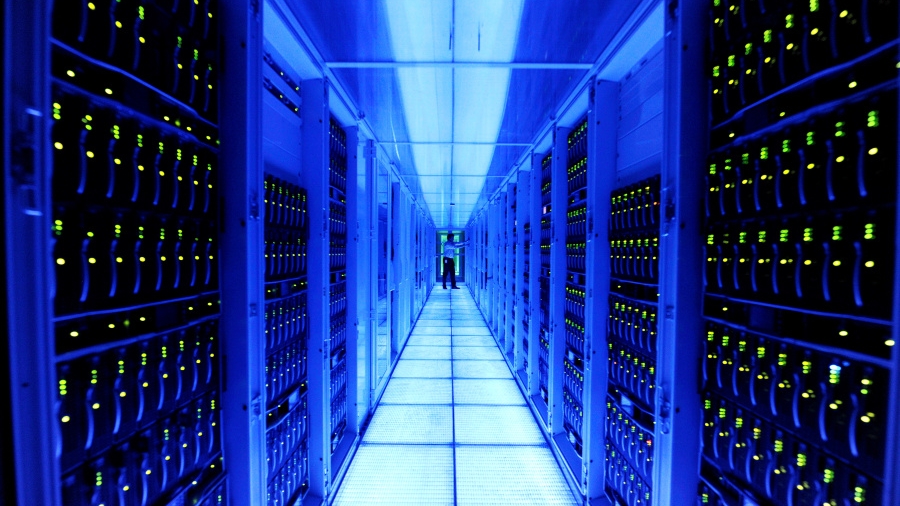

Every time you shop, you leave a trail of it behind. The same goes when you surf the web, put on your fitness tracker or apply for credit at your bank. In fact, if we could touch it, we'd be drowning in it. The data we produce every single day, according to IBM, totals an unfathomable 2.5 quintillion bytes (that's '25' followed by 17 zeros). We're producing it so fast that it's estimated 90% of the data in the world right now was created in just the last two years. This 'Big Data' is a global resource worth billions of dollars and every business and government wants their hands on it – and for good reason.

Data is the digital history of our everyday lives – our choices, our purchases, who we talk to, where we go and what we do. We've previously looked at the Internet of Things (IoT) and how 'pervasive computing' will radically alter the way we live. You can guarantee IoT will lead to an even greater explosion of data generation and capture – thanks to the boom in cloud storage, we're already putting away this stuff as fast as we can go.

But data on its own is pretty useless – it's the information we extract from the data that can do everything from forewarn governments of possible terror threats, to predict what you'll likely buy next time at your local fruit-and-veg. The sheer volume of data available is well beyond human ability alone to decipher and needs computer processing to handle it – that's where the concept of 'data mining' comes in.

Machine learning

Actually, 'data mining' is really the buzzword for a fascinating area of computing called 'machine learning', which itself is an offshoot of Artificial Intelligence (AI). Here, computers use special code functions or 'algorithms' to process the mountains of data and generate or 'learn' information from it.

It's a booming area of pioneering research at the moment, which also incorporates mathematical techniques first discovered more than 250 years ago.

In one regard, data mining has a bit of a shadow cast over it, with growing ethical concerns about privacy and how information mined from data is used. But it's not all 'terror plots and shopping carts' – data mining is heavily used by the sciences for everything from weather prediction to medical research, where it's been used to predict recurrence of breast cancer and find indicators for the onset of diabetes.

Stanford University's Folding@Home disease research project is data mining on a global scale you can get involved in, searching for cures to cancer, Parkinson's disease and Alzheimer's.

Essentially, machine learning is about finding patterns in data, learning 'rules' that allow us to make decisions and predictions, or finding links or 'associations' between factors in situations or applications.

Get the free software

Now you might think machine learning is done in labs with banks of computers, mountains of cloud storage and expensive purpose-built software. You'd be right, but it's also something you can do at home – what's more, a decent amount of machine learning software is available free. Popular examples like 'Hadoop' or 'R' provide powerful frameworks for processing mountains of data, but they can be a little daunting to use, first-time out. And like Holden versus Ford or Android versus iOS, it's a field with passionate supporters of different software.

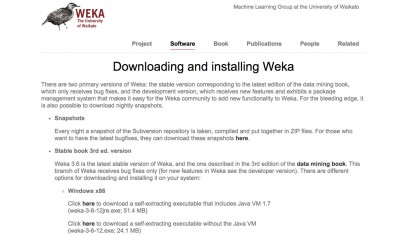

One app commonly used for learning the basics is WEKA, developed by New Zealand's University of Waikato. Like Hadoop, it's built using the Java programming language, so you can run it on any Windows, Linux or Mac OS X computer. It's not perfect, but its graphical user interface (GUI) certainly helps.

How machine learning works

Machine learning starts with what's called a 'dataset' representing a situation you want to learn – think of it as a spreadsheet. You have a series of measures or 'attributes' in columns, while each row represents an example or 'instance' of the thing or 'concept' you want to learn.

For example, if we're looking for indicators of the onset of diabetes, those attributes could include a patient's body mass index (BMI), their blood-glucose levels and other medical factors. Each instance would contain one patient's set of attributes. In this situation, the dataset would also have a result or 'class' attribute, indicating if the patient developed diabetes or not.

If another patient presents for diagnosis and we want to know if they're at risk of diabetes, machine learning can develop the rules to help predict that likelihood, based on dataset learning and that person's measured medical attributes.

What do rules look like?

One of the seriously cool tools we love at TechRadar is IFTTT (If This Then That) - a program that combines social network services to perform linked functions. As the name suggests, it works on the simple 'if-then' programming statement that 'if an event occurs, then go do something'.

Basic rules in machine learning are along the same lines – if an event X occurs, the result is Y. Or it could be a series of events – if X, Y and Z occur, the result is A, or A, B and C.

These rules tell us something about the concept we want to learn. But just as important as what the rules tell us is how accurate they are. Rule accuracy reveals how much confidence we can have in the rules to give us the right result.
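As a rough sketch of the idea, here's an if-then rule written as a Python function, with its accuracy measured against a handful of labelled examples. The events 'X' and 'W', the results 'Y' and 'Z' and the four examples are placeholders invented purely for illustration, not real data.

```python
def rule(instance):
    """If event X occurs, the result is Y; otherwise the result is Z."""
    return "Y" if instance["event"] == "X" else "Z"


# Invented (instance, actual result) pairs, for illustration only.
examples = [
    ({"event": "X"}, "Y"),
    ({"event": "X"}, "Y"),
    ({"event": "X"}, "Z"),  # the rule gets this one wrong
    ({"event": "W"}, "Z"),
]

# Accuracy is simply the share of examples the rule gets right.
correct = sum(rule(instance) == actual for instance, actual in examples)
accuracy = correct / len(examples)
print(f"{correct}/{len(examples)} correct, accuracy {accuracy:.0%}")
```

The same 'correct divided by total' measure is what we'll lean on later when comparing algorithms.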

Some rules are excellent – they get the right answer every time. Others can be hopeless and some fall in between. There are also added complications – what's called 'overfitting', where a set of rules works perfectly on the dataset it was learned from, but performs poorly on any new examples or instances given to it. These are all things that machine learning – and the data scientists using it – must consider.

Basic algorithms

There are dozens of different machine learning functions or 'algorithms', many of them quite complex. But there are two simple examples you can learn quickly called 'ZeroR' and 'OneR'. We'll use the WEKA app to show them, but also calculate them by-hand to see how they work.

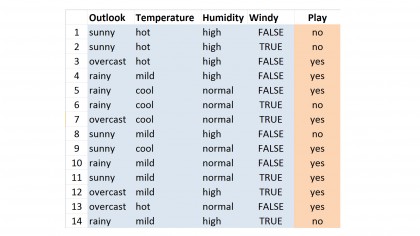

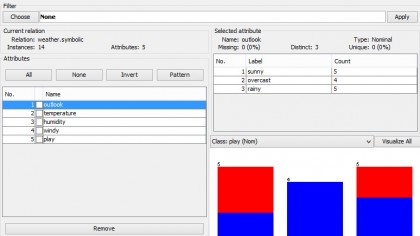

The WEKA package includes a number of example datasets, one being a very small 'weather.nominal' dataset, containing 14 instances of whether golf is played on a particular day, given a series of weather events at the time. There are five measures or 'attributes' – outlook, temperature, humidity, windy and play. This last one is the output or 'class' attribute, which says whether golf was played (yes) on that day or not (no).
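If you'd rather poke at the data outside WEKA, here's one possible way to hold the same dataset in plain Python. The 14 rows below were typed in by hand from the standard 'weather.nominal' file, and the variable names are our own choice, so treat it as a sketch rather than anything WEKA itself provides.

```python
# The 14 instances of the weather dataset, one per line, typed in by hand.
ATTRIBUTES = ["outlook", "temperature", "humidity", "windy", "play"]
CLASS_ATTRIBUTE = "play"  # the output or 'class' attribute we want to learn

RAW = """\
sunny,hot,high,FALSE,no
sunny,hot,high,TRUE,no
overcast,hot,high,FALSE,yes
rainy,mild,high,FALSE,yes
rainy,cool,normal,FALSE,yes
rainy,cool,normal,TRUE,no
overcast,cool,normal,TRUE,yes
sunny,mild,high,FALSE,no
sunny,cool,normal,FALSE,yes
rainy,mild,normal,FALSE,yes
sunny,mild,normal,TRUE,yes
overcast,mild,high,TRUE,yes
overcast,hot,normal,FALSE,yes
rainy,mild,high,TRUE,no
"""

# Each instance becomes a dict mapping attribute name -> value.
instances = [dict(zip(ATTRIBUTES, line.split(","))) for line in RAW.splitlines()]

# The distinct values each attribute takes across the dataset.
values = {name: sorted({row[name] for row in instances}) for name in ATTRIBUTES}

print(len(instances), "instances,", len(ATTRIBUTES), "attributes")
print("First instance:", instances[0])
for name, seen in values.items():
    print(name, "->", seen)
```

Each dict is one row of the 'spreadsheet', and the 'play' key is the class value for that day.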

Zeroing in

ZeroR is the world's simplest data mining algorithm – well, it's a bit rude to call it an 'algorithm' because it's so simple, but it provides the baseline accuracy level that any proper algorithm will hope to build on.

It works like this: check out the weather data in the image above, look at that 'play' class attribute and count up the number of 'yes' and 'no' values. You should find nine 'yes' values and five 'no'. The proportion of 'yes' values is nine out of 14 instances. That means if we get another instance and we want to predict whether golf will be played or not, we can just say 'yes' and be right nine times out of 14 or about 64.3% of the time. In other words, ZeroR simply chooses the most popular class attribute value.
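The by-hand count is easy to reproduce in a few lines of Python. This is only a sketch of the ZeroR idea, not WEKA's own code, and the list of 'play' values is typed in from the 14 rows of the weather dataset.

```python
from collections import Counter

# The 'play' class value for each of the 14 instances, in dataset order.
play = ["no", "no", "yes", "yes", "yes", "no", "yes",
        "no", "yes", "yes", "yes", "yes", "yes", "no"]

counts = Counter(play)                      # tally 'yes' and 'no'
majority, hits = counts.most_common(1)[0]   # most popular class value
accuracy = hits / len(play)

print(f"yes = {counts['yes']}, no = {counts['no']}")
print(f"ZeroR always predicts '{majority}': {hits}/{len(play)} = {accuracy:.4%}")
```

Nine divided by 14 comes out at 64.2857%, which is the baseline any other algorithm has to beat.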

You can test this out in WEKA – make sure you have the Java Runtime Environment (JRE) installed on your PC, then download WEKA, install it and launch the app. Click on the 'Explorer' icon to launch the learning window. WEKA uses a modified CSV (comma-separated values) format called ARFF and you'll find example datasets in the /program files/weka-3-x/data subfolder. In the Explorer window, click on the Open File button and choose the 'weather.nominal' dataset. Next, click on the Classify tab and 'ZeroR' should be already shown in the Classifier textbox next to the Choose button. Click on the radiobutton next to 'Use training set' under 'Test Options' on that left-side control panel and finally, press the Start button.
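To give a feel for what an ARFF file looks like, here's a shortened, hand-typed example in the style of the weather dataset, along with a very small Python reader for it. The relation name and the three sample rows are our assumption of what the shipped file contains, so check them against your own copy, and the parse_nominal_arff function is a made-up helper that only copes with simple nominal attributes.

```python
# A shortened, hand-typed ARFF example: a header describing each attribute,
# then an @data section holding ordinary comma-separated rows.
ARFF_TEXT = """\
@relation weather.symbolic

@attribute outlook {sunny, overcast, rainy}
@attribute temperature {hot, mild, cool}
@attribute humidity {high, normal}
@attribute windy {TRUE, FALSE}
@attribute play {yes, no}

@data
sunny,hot,high,FALSE,no
overcast,hot,high,FALSE,yes
rainy,mild,high,FALSE,yes
"""


def parse_nominal_arff(text):
    """Return (attributes, rows) from ARFF text with nominal attributes only."""
    attributes = {}   # attribute name -> list of allowed values
    rows = []         # one list of values per instance
    in_data = False
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("%"):   # skip blanks and comments
            continue
        if in_data:
            rows.append([value.strip() for value in line.split(",")])
        elif line.lower().startswith("@attribute"):
            _, name, spec = line.split(None, 2)
            attributes[name] = [v.strip() for v in spec.strip("{}").split(",")]
        elif line.lower().startswith("@data"):
            in_data = True
    return attributes, rows


attributes, rows = parse_nominal_arff(ARFF_TEXT)
print(attributes)
print(rows)
```

The header is the 'modified' part of the format. Everything after @data is plain CSV.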

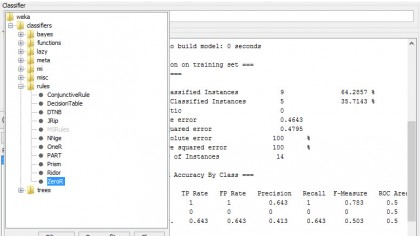

Almost instantly, you'll get the results on the Classifier Output window. Scroll down and you'll see ZeroR defaults to choosing the 'yes' class value and later, 'Correctly Classified Instances' showing '9' and '64.2857%' next to it. Bottom-line, WEKA has just done the same thing we did before – it counted up the 'yes' and 'no' class values and chose the most common.

One rule to rule them all

ZeroR gives us a base-level learning accuracy of about 64.3% in this example, but it'd be nice to do a bit better than that. That's where the OneR algorithm comes in. It's called a 'classification rule learner', in that, given what it learns from a training dataset, it generates rules that allow us to determine or 'classify' the result of a future instance.

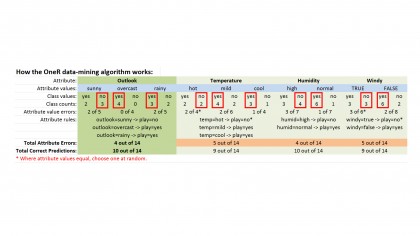

If you look at the OneR table above, you can see how it works – each weather dataset attribute has a small number of possible values. For Outlook, they are 'sunny', 'overcast' and 'rainy'. For Temperature, it's 'hot', 'mild' and 'cool' and so on. We create a separate list for each attribute value and then, for the instances where that value occurs, count the number of 'yes' and 'no' results we get.

For example, going through the 14 instances, you can see five instances where the outlook is sunny, giving us two 'yes' and three 'no' results. Likewise, 'outlook = overcast' gets four 'yes' results and zero 'no' results. We then do likewise for all of the other attributes.
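That tallying step can be sketched in Python as follows. The rows are typed in by hand from the weather dataset, and the nested 'table' structure is just one convenient way to hold the counts, not how WEKA does it internally.

```python
from collections import Counter, defaultdict

ATTRIBUTES = ["outlook", "temperature", "humidity", "windy"]
RAW = """\
sunny,hot,high,FALSE,no
sunny,hot,high,TRUE,no
overcast,hot,high,FALSE,yes
rainy,mild,high,FALSE,yes
rainy,cool,normal,FALSE,yes
rainy,cool,normal,TRUE,no
overcast,cool,normal,TRUE,yes
sunny,mild,high,FALSE,no
sunny,cool,normal,FALSE,yes
rainy,mild,normal,FALSE,yes
sunny,mild,normal,TRUE,yes
overcast,mild,high,TRUE,yes
overcast,hot,normal,FALSE,yes
rainy,mild,high,TRUE,no
"""
rows = [line.split(",") for line in RAW.splitlines()]

# table[attribute][value] is a Counter of the 'yes'/'no' results seen
# in the instances where that attribute takes that value.
table = {name: defaultdict(Counter) for name in ATTRIBUTES}
for *features, play in rows:
    for name, value in zip(ATTRIBUTES, features):
        table[name][value][play] += 1

for name in ATTRIBUTES:
    for value, results in table[name].items():
        print(f"{name} = {value}: yes = {results['yes']}, no = {results['no']}")
```

The first three lines of output cover the Outlook attribute: sunny gives two 'yes' and three 'no', overcast four and zero, rainy three and two.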

Next, we count up the errors – these are the smaller counts for each attribute value, so again, for 'outlook = sunny', the 'yes' count is only two; for 'outlook = overcast', the 'no' count is zero, for 'outlook = rainy', it's two and so on. The red boxes on the table show the most popular class values for each attribute value and it's from these that we make our first set of 'Outlook' rules:

Outlook = sunny -> Play = no

Outlook = overcast -> Play = yes

Outlook = rainy -> Play = yes

What we're doing is taking the most popular class value for each attribute value and assigning it to that attribute-value pair to make a rule, so for this example, outlook being 'sunny' leads to play being 'no' and so on. We repeat this for each of the other three attributes. After that, we add up those 'error' counts for each attribute value, so Outlook is 2 + 0 + 2, totaling 4 out of 14 (4/14). For Temperature, we get 5/14, for Humidity 4/14 and for Windy 5/14.

Now, we choose the attribute with the smallest error count. Since in this example we have two attributes with an error count of 4 out of 14 (Outlook and Humidity), you can choose either - we've gone with the first one, the 'Outlook' attribute ruleset above.

This now becomes our 'OneR' (one-rule) classification rule set. Using this rule on the training dataset, it correctly predicts 10 out of 14 instances or about 71.4%. Remember, ZeroR gave us about 64.3%, so OneR gains us greater accuracy, which is what we want.
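Here's a minimal Python sketch of the whole OneR procedure as we've worked through it by hand: build a rule set per attribute, total the errors and keep the attribute with the fewest. The one_r function is our own illustration rather than WEKA's implementation, and on a tie it keeps the first attribute it saw, which is the choice we made above. Tallying the rows puts 'yes' ahead for rainy days, three against two, which matches the error count of two for that value.

```python
from collections import Counter, defaultdict

ATTRIBUTES = ["outlook", "temperature", "humidity", "windy"]
RAW = """\
sunny,hot,high,FALSE,no
sunny,hot,high,TRUE,no
overcast,hot,high,FALSE,yes
rainy,mild,high,FALSE,yes
rainy,cool,normal,FALSE,yes
rainy,cool,normal,TRUE,no
overcast,cool,normal,TRUE,yes
sunny,mild,high,FALSE,no
sunny,cool,normal,FALSE,yes
rainy,mild,normal,FALSE,yes
sunny,mild,normal,TRUE,yes
overcast,mild,high,TRUE,yes
overcast,hot,normal,FALSE,yes
rainy,mild,high,TRUE,no
"""
rows = [line.split(",") for line in RAW.splitlines()]


def one_r(rows, attributes):
    """Return (attribute, errors, rules) for the attribute with fewest errors."""
    best = None
    for index, attribute in enumerate(attributes):
        # Tally the class values (last column) for each value of this attribute.
        counts = defaultdict(Counter)
        for row in rows:
            counts[row[index]][row[-1]] += 1
        # One rule per attribute value: predict its most popular class value.
        rules = {value: c.most_common(1)[0][0] for value, c in counts.items()}
        # Errors are the instances that fall outside the majority each time.
        errors = sum(sum(c.values()) - max(c.values()) for c in counts.values())
        print(f"{attribute}: {errors}/{len(rows)} errors")
        if best is None or errors < best[1]:   # strict '<' keeps the first on a tie
            best = (attribute, errors, rules)
    return best


attribute, errors, rules = one_r(rows, ATTRIBUTES)
accuracy = (len(rows) - errors) / len(rows)
for value, prediction in rules.items():
    print(f"{attribute} = {value} -> play = {prediction}")
print(f"Training accuracy: {len(rows) - errors}/{len(rows)} = {accuracy:.4%}")
```

Note that this accuracy is measured on the same 14 rows the rule was learned from, so it says nothing yet about how the rule copes with new data.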

Using the new rule

Let's say we're given a new instance – the outlook is rainy, temperature is mild, humidity is high and windy is false. What is 'play' – will golf be played or not? Rainy days in the training data gave three 'yes' results against two 'no', so our OneR rule says if the outlook is rainy, play is 'yes'. That's our answer – for this instance, golf is likely to go ahead, bearing in mind the rule was right about 71.4% of the time on the training data.
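In code, using the rule boils down to a lookup. The sketch below rebuilds the Outlook rule set from the 14 training pairs (typed in by hand) and then applies it to the new instance. The predict function and variable names are our own. Only the outlook value matters to the rule, so the other three attributes are carried along but ignored.

```python
from collections import Counter, defaultdict

# (outlook, play) for each of the 14 training instances, in dataset order.
TRAINING = [
    ("sunny", "no"), ("sunny", "no"), ("overcast", "yes"), ("rainy", "yes"),
    ("rainy", "yes"), ("rainy", "no"), ("overcast", "yes"), ("sunny", "no"),
    ("sunny", "yes"), ("rainy", "yes"), ("sunny", "yes"), ("overcast", "yes"),
    ("overcast", "yes"), ("rainy", "no"),
]

# Learn one rule per outlook value: its most popular class value.
counts = defaultdict(Counter)
for outlook, play in TRAINING:
    counts[outlook][play] += 1
outlook_rules = {value: c.most_common(1)[0][0] for value, c in counts.items()}


def predict(instance):
    """Classify an instance using only its 'outlook' value."""
    return outlook_rules[instance["outlook"]]


new_instance = {"outlook": "rainy", "temperature": "mild",
                "humidity": "high", "windy": "FALSE"}
print(outlook_rules)
print("play =", predict(new_instance))
```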

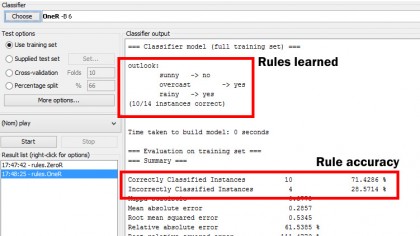

Run the OneR classification test in WEKA by clicking the Choose button and selecting 'OneR' from the 'Rules' list. Press the Start button and you'll see the same list of rules, the number of correctly classified instances at ten and a percentage of 71.4286. That's exactly what we calculated before.

Tip of the iceberg

Sure, we're not going to make millions or save the world by predicting which days golf will be played based on weather events, but if you're a meteorologist determining if current weather conditions could lead to a massive hailstorm, data mining techniques (admittedly more complex than we've seen here) can help with those answers.

Machine learning is a boom area of computer research around the world, aiming to make sense of the 'death by data' overload of information surrounding us. We've barely scratched the surface here, but next time you hit the internet or go shopping, you'll hopefully have a better idea of what happens to the data we generate.