"You really want every person to have their own dedicated GPU": OpenAI becomes Nvidia's biggest cheerleader as its president calls for a 10-billion-GPU bonanza - but no mention of the terawatt-scale electricity it would require

Global resource limits cast doubt on visions of universal GPU allocation

- OpenAI envisions personal GPUs, echoing Bill Gates’ computer-on-every-desk dream

- Ten billion GPUs would overwhelm electricity grids already struggling with demand

- Powering billions of GPUs in data centers would require terawatts of electricity

OpenAI President Greg Brockman has outlined a future where AI tools run constantly, even when their users are asleep.

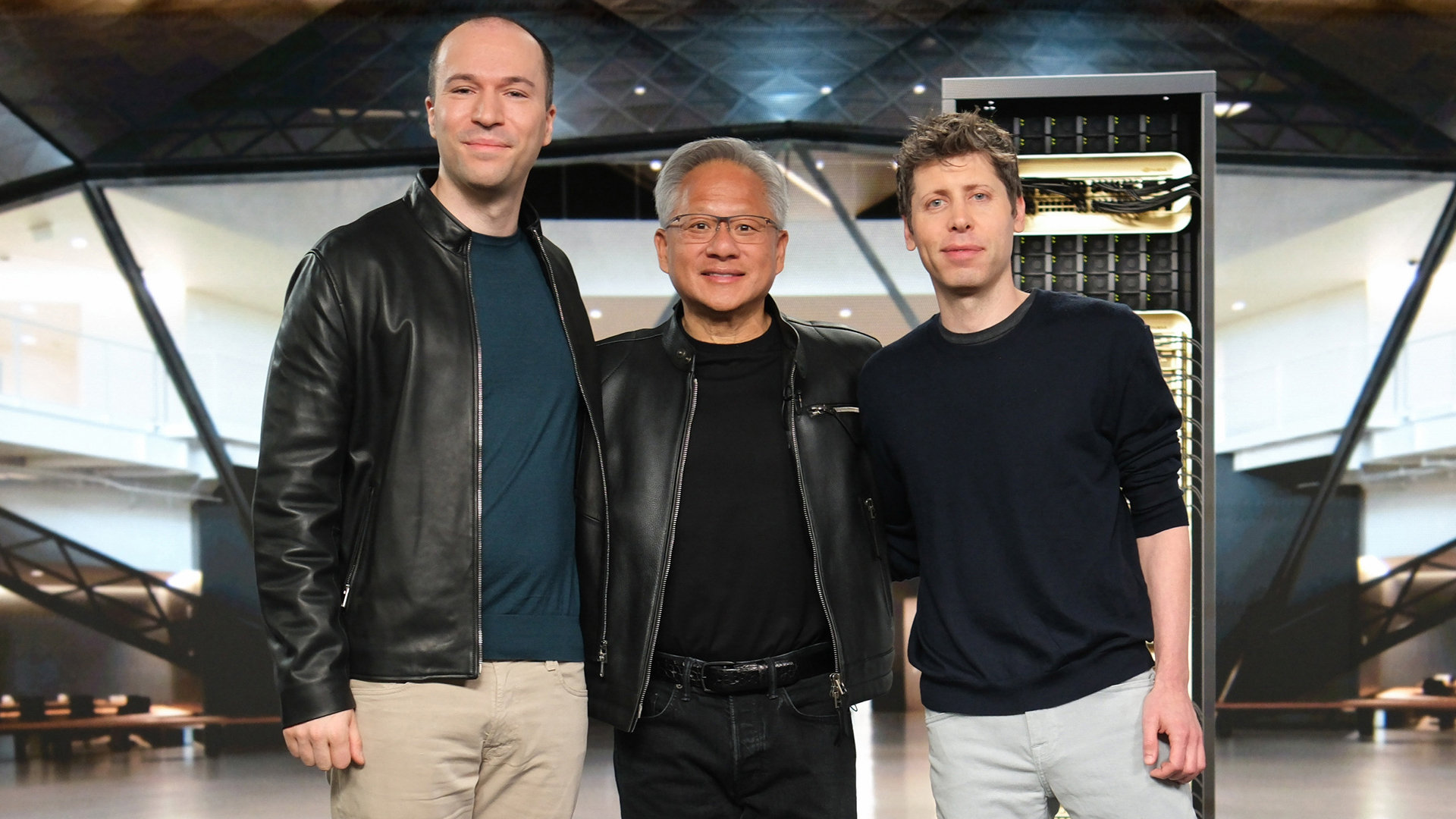

In a CNBC interview alongside Nvidia CEO Jensen Huang and OpenAI CEO Sam Altman, Brockman said the world will eventually require "10 billion GPUs" to sustain this vision.

He framed the demand as part of a broader trajectory where "the economy is powered by compute," suggesting that compute resources could become as central as currency.

Dedicated GPUs for everyone

Brockman went further, arguing, "you really want every person to have their own dedicated GPU."

The idea echoes earlier technological ambitions, such as Bill Gates’ goal, set in Microsoft's early years, of "a computer on every desk and in every home."

Back then, the notion was both celebrated and ridiculed, yet today computing devices are nearly ubiquitous.

Brockman’s framing of a GPU for every individual fits into that lineage, although critics may wonder whether the comparison is premature given global resource constraints.


Nvidia has become the dominant supplier of GPU hardware for large-scale AI models, but even by its standards the numbers being discussed are staggering.

Altman compared the Nvidia-OpenAI partnership to the Apollo program, citing its unprecedented scale.

Yet missing from these projections is any detailed discussion of the energy footprint such infrastructure would demand.
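A rough sense of that footprint takes only simple arithmetic: multiply the GPU count by the power each GPU draws, then by a facility overhead factor for cooling and power delivery. The sketch below is a back-of-envelope estimate, not a figure from OpenAI or Nvidia. It assumes about 700 W per GPU (roughly the peak rating of an Nvidia H100 SXM module) and a power usage effectiveness (PUE) of 1.3, and it assumes every GPU runs continuously at full power. The names `fleet_power_watts` and `annual_energy_twh` are illustrative helpers, not part of any real library.

```python
# Back-of-envelope power estimate for a hypothetical fleet of 10 billion GPUs.
# All inputs below are illustrative assumptions, not figures from OpenAI or Nvidia.

GPU_COUNT = 10_000_000_000   # Brockman's "10 billion GPUs"
WATTS_PER_GPU = 700          # assumed: roughly the peak rating of an H100 SXM module
PUE = 1.3                    # assumed power usage effectiveness (facility overhead)
HOURS_PER_YEAR = 8_760


def fleet_power_watts(gpus: int, watts_per_gpu: float, pue: float) -> float:
    """Continuous facility power draw in watts, if every GPU runs at full power."""
    return gpus * watts_per_gpu * pue


def annual_energy_twh(power_watts: float) -> float:
    """Energy consumed over a year of continuous operation, in terawatt-hours."""
    return power_watts * HOURS_PER_YEAR / 1e12


power = fleet_power_watts(GPU_COUNT, WATTS_PER_GPU, PUE)
print(f"Continuous draw: {power / 1e12:.1f} TW")
print(f"Annual energy: {annual_energy_twh(power):,.0f} TWh")
```

Under these assumptions the fleet would draw about 9.1 TW around the clock, or roughly 80,000 TWh a year. For comparison, global electricity generation has been roughly 30,000 TWh a year recently, so this hypothetical fleet alone would need more than twice the world's current output. Lower per-GPU power or partial utilization would shrink the estimate, but it would remain in the terawatt range.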

Brockman also spoke of looming "compute scarcity," implying that the supply of GPUs could become a global choke point.

Nvidia’s products have already become entangled in sensitive geopolitics, especially trade disputes between the United States and China.

If GPUs become de facto economic units in a compute-driven economy, this scarcity could deepen both market and diplomatic tensions.

Although the rhetoric surrounding always-working AI and agentic systems is grand, the feasibility remains uncertain.

A system that grants every person a dedicated GPU would strain manufacturing capacity, energy production, and distribution channels.

Without clear answers to these challenges, the vision risks sounding less like a roadmap and more like an aspirational pitch.



Efosa has been writing about technology for over 7 years, initially driven by curiosity but now fueled by a strong passion for the field. He holds both a Master's and a PhD in sciences, which provided him with a solid foundation in analytical thinking. Efosa developed a keen interest in technology policy, specifically exploring the intersection of privacy, security, and politics. His research delves into how technological advancements influence regulatory frameworks and societal norms, particularly concerning data protection and cybersecurity. At TechRadar Pro, he covers B2B security products in addition to privacy and technology policy. Efosa can be contacted at this email: udinmwenefosa@gmail.com
