Samsung's biggest rival and Sandisk have come together to standardize High Bandwidth Flash memory - which could mean better AI performance for everyone

Sandisk and SK Hynix push for scalable AI performance through hybrid memory stacks


- Sandisk and SK Hynix propose flash-powered high-bandwidth memory to handle larger AI models

- High Bandwidth Flash could store far more data than DRAM-based HBM for AI workloads

- Energy savings from NAND’s non-volatility could reshape AI data center cooling strategies

Sandisk and SK Hynix have signed an agreement to develop a memory technology that could change how AI accelerators handle data at scale.

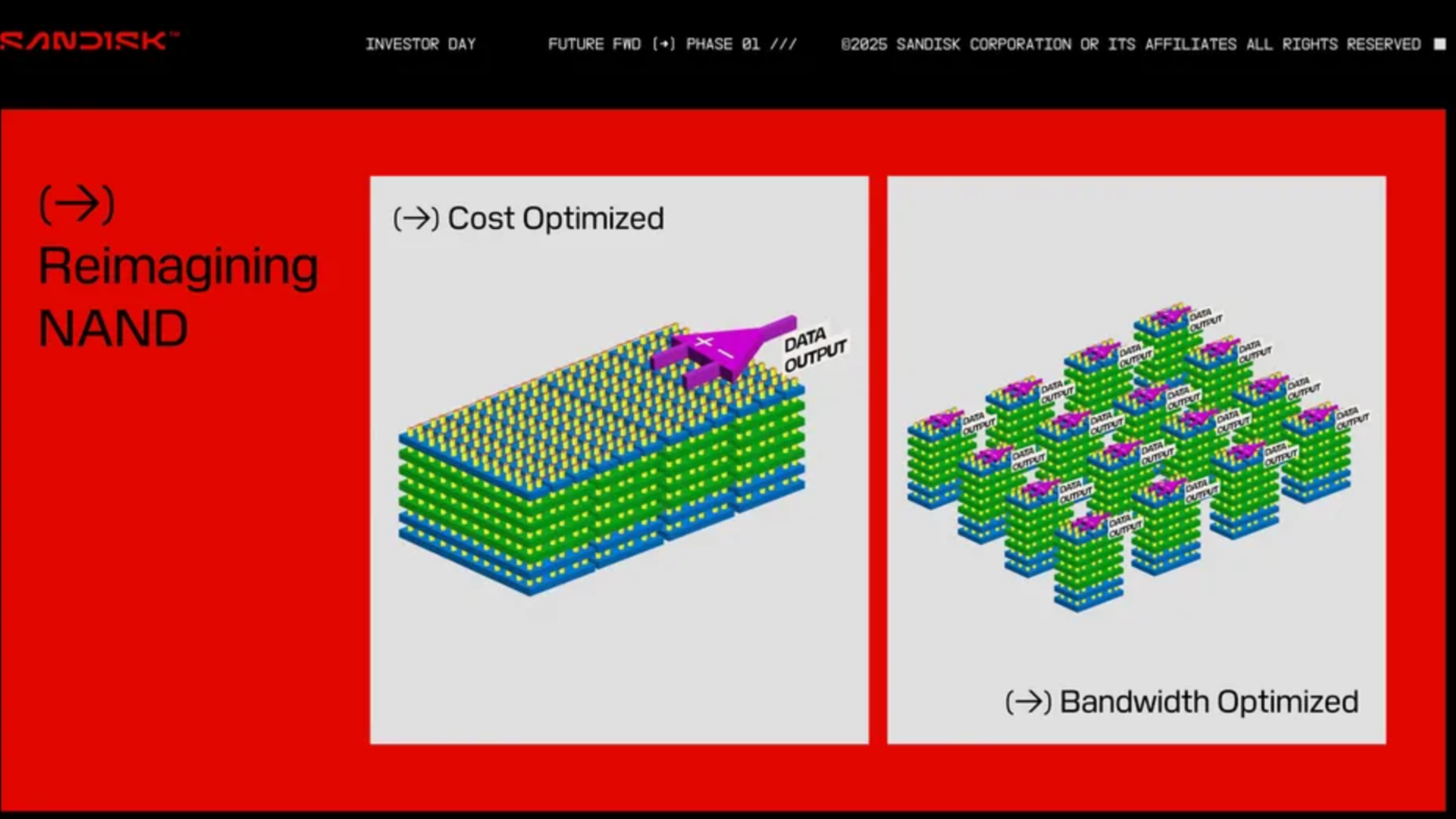

The companies aim to standardize "High Bandwidth Flash" (HBF), a NAND-based alternative to the traditional high-bandwidth memory (HBM) used in AI GPUs.

The concept builds on packaging designs similar to HBM while replacing part of the DRAM stack with flash, trading some latency for vastly increased capacity and non-volatility.

AI memory stacks to handle larger models at lower power demands

This approach is intended to let HBF offer eight to sixteen times the capacity of DRAM-based HBM at a roughly similar cost.

NAND’s ability to retain data without constant power also brings potential energy savings, an increasingly important factor as AI inference expands into environments with strict power and cooling limits.

For hyperscale operators running large models, the change could help address both thermal and budget constraints that are already straining data center operations.

This plan aligns with "LLM in a Flash," a research paper from Apple that outlined how large language models could run more efficiently by using flash storage, such as SSDs, as an additional memory tier, easing pressure on DRAM.


HBF essentially integrates that idea into a single high-bandwidth package, potentially combining the storage scale of a large SSD with the speed profile that AI workloads need.

Sandisk presented its HBF prototype, built with its proprietary BiCS NAND and wafer-bonding techniques, at the Flash Memory Summit 2025.

Sample modules are expected in the second half of 2026, with the first AI hardware using HBF projected for early 2027.

No specific product partnerships have been disclosed, but SK Hynix’s position as a major memory supplier to leading AI chipmakers, including Nvidia, could accelerate adoption once standards are finalized.

This move also comes as other manufacturers explore similar ideas.

Samsung has announced flash-backed AI storage tiers and continues to develop HBM4 DRAM, while companies like Nvidia remain committed to DRAM-heavy designs.

If successful, the Sandisk and SK Hynix collaboration could create heterogeneous memory stacks where DRAM, flash, and other persistent storage types coexist.

Via Tom's Hardware


Efosa has been writing about technology for over 7 years, initially driven by curiosity but now fueled by a strong passion for the field. He holds both a Master's and a PhD in sciences, which provided him with a solid foundation in analytical thinking.
