Samsung and AMD made a revolutionary SSD together - then it was left to wither in the shadows and nobody knows exactly why

Computational storage drives looked attractive on paper, so what happened next?

- Samsung used a Xilinx FPGA (Xilinx is now part of AMD) to power its SmartSSD storage device

- It promised to reduce enterprises' reliance on servers

- Computational storage devices, however, have faded just as generative AI surged

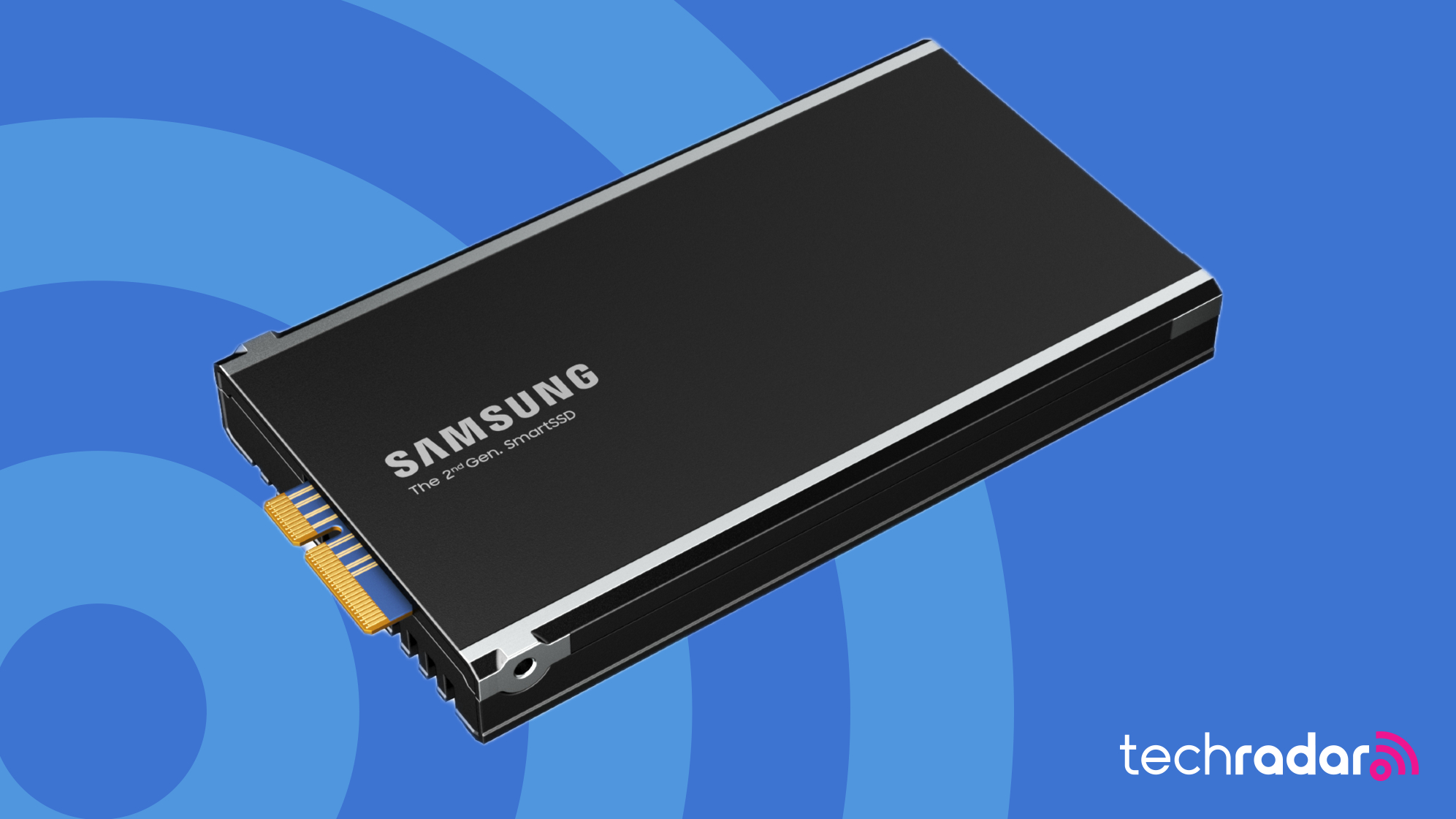

Samsung came up with the concept of a SmartSSD back in 2018, before generative AI took off. This computational storage drive was meant to power serverless computing by bringing compute closer to where data is stored. The SmartSSD had NAND, HBM and RDIMM memory sitting next to an FPGA accelerator inside the SSD itself. That FPGA was built by Xilinx, which AMD agreed to acquire in October 2020 (the deal closed in February 2022).

Fast forward to 2025 and the SmartSSD has all but disappeared from Samsung's portfolio. You can still buy it from Amazon (and other retailers) under the AMD Xilinx brand rather than Samsung's, with the 3.84TB model listed at $517.70.

Being a PCIe Gen3 drive, and a novel but complicated piece of hardware, made it a difficult sell. Then came the double whammy of COVID-19 and AI; the latter, more than anything else, is probably why Samsung gave up on computational storage drives (CSDs).

Generative AI demanded a different kind of computational resource, one that CSDs simply couldn't deliver at the time. And while LLMs do need SSDs, what mattered was storage capacity rather than compute features.

Put simply, CSDs represented an interesting but niche market, one closer to traditional servers. They were nice to have but lacked the explosive growth potential of AI-related hardware. That's why I think Samsung mothballed the SmartSSD after its second generation, despite the company stating in 2022 that "the computational storage market has great potential".

What’s next for CSD?

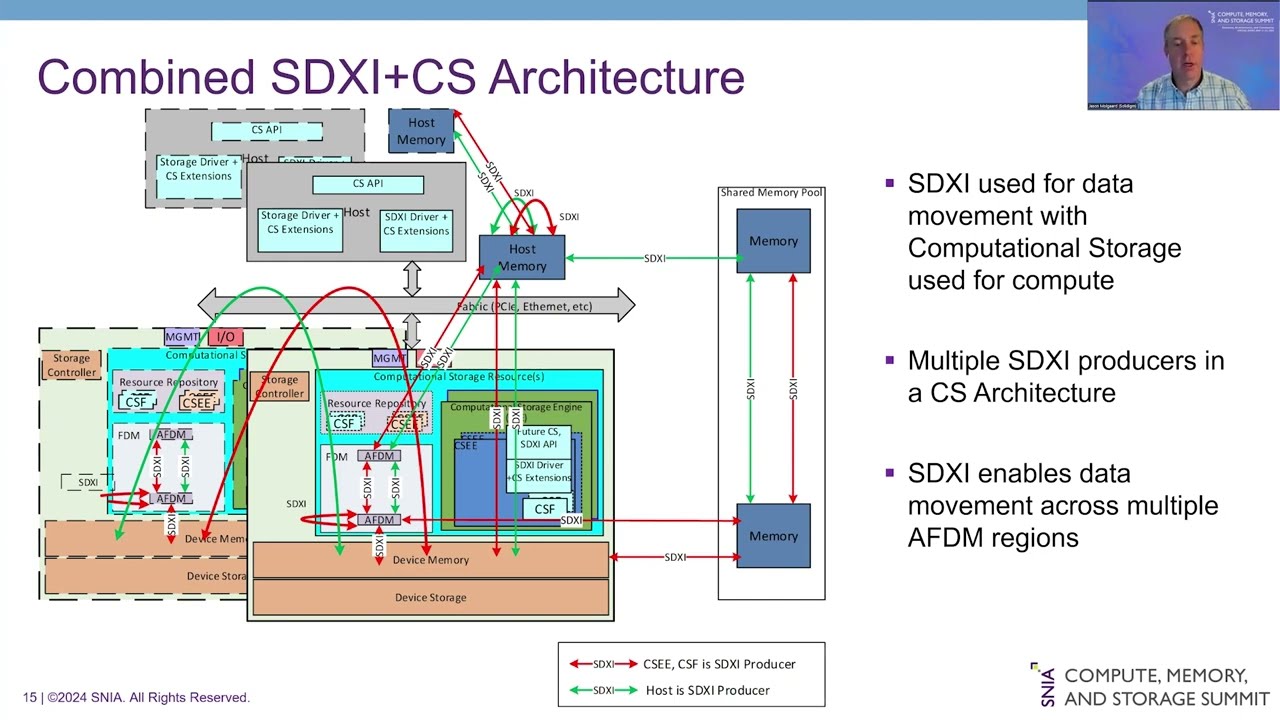

The computational storage page on the website of SNIA (the Storage Networking Industry Association), the group that oversees standardization in this area, shows little progress since a computational storage (CS) API was launched in October 2023. A video released in 2024 by the co-chairs of SNIA's CS technical working group mentions that version 1.1 is under development.

One of its staunchest proponents, ScaleFlux, changed its "about us" page to omit computational storage entirely. Instead, it focuses on delivering products that use CS under the hood. Its CSD5000 enterprise SSD, for example, has a physical capacity of 122.88TB but, according to the small print, a logical capacity of 256TB, assuming a compression ratio of roughly 2:1. That extra capacity comes from compression handled by the drive's onboard compute.
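As a quick sanity check on those figures, the implied compression ratio is just logical capacity divided by physical capacity. The sketch below uses a hypothetical helper (not anything published by ScaleFlux) and shows that 256TB over 122.88TB works out to slightly above 2:1. Actual gains depend on how compressible the stored data is; already-compressed or encrypted data will typically get far less.

```python
def implied_compression_ratio(physical_tb: float, logical_tb: float) -> float:
    """Hypothetical helper: logical (advertised) capacity divided by physical NAND capacity."""
    if physical_tb <= 0:
        raise ValueError("physical capacity must be positive")
    return logical_tb / physical_tb

# CSD5000 figures quoted above: 122.88TB physical, 256TB logical
ratio = implied_compression_ratio(122.88, 256)
print(f"{ratio:.2f}:1")
```

Running it prints `2.08:1`, consistent with the "approximately 2:1" in the small print.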


Given the growing importance of AI inference, it would make sense to run some of it as close as possible to where the data lives, that is, on the SSD itself. With ASICs (application-specific integrated circuits) growing more popular thanks to hyperscalers (Google, Microsoft) and AI companies (OpenAI), the market for inference-friendly enterprise AI SSDs, especially at the edge, could open up sooner rather than later.


Désiré has been musing and writing about technology during a career spanning four decades. He dabbled in website builders and web hosting when DHTML and frames were in vogue and started writing about the impact of technology on society just before the Y2K hysteria at the turn of the last millennium.
