After Sandisk, D-Matrix is proposing an intriguing alternative to the big HBM AI puzzle, claiming 10x better performance and 10x better energy efficiency

Memory wall challenges drive new approaches in AI acceleration design

- D-Matrix shifts focus from AI training to inference hardware innovation

- The Corsair uses LPDDR5 and SRAM to cut HBM reliance

- Pavehawk combines stacked DRAM and logic for lower latency

Sandisk and SK Hynix recently signed an agreement to develop “High Bandwidth Flash,” a NAND-based alternative to HBM designed to bring larger, non-volatile capacity into AI accelerators.

D-Matrix is now positioning itself as a challenger to high-bandwidth memory in the race to accelerate artificial intelligence workloads.

While much of the industry has concentrated on training models using HBM, D-Matrix has chosen to focus on AI inference.

A different approach to the memory wall

Its current design, the D-Matrix Corsair, uses a chiplet-based architecture with 256GB of LPDDR5 and 2GB of SRAM.

Rather than chasing more expensive memory technologies, D-Matrix aims to co-package acceleration engines and DRAM, creating a tighter link between compute and memory.

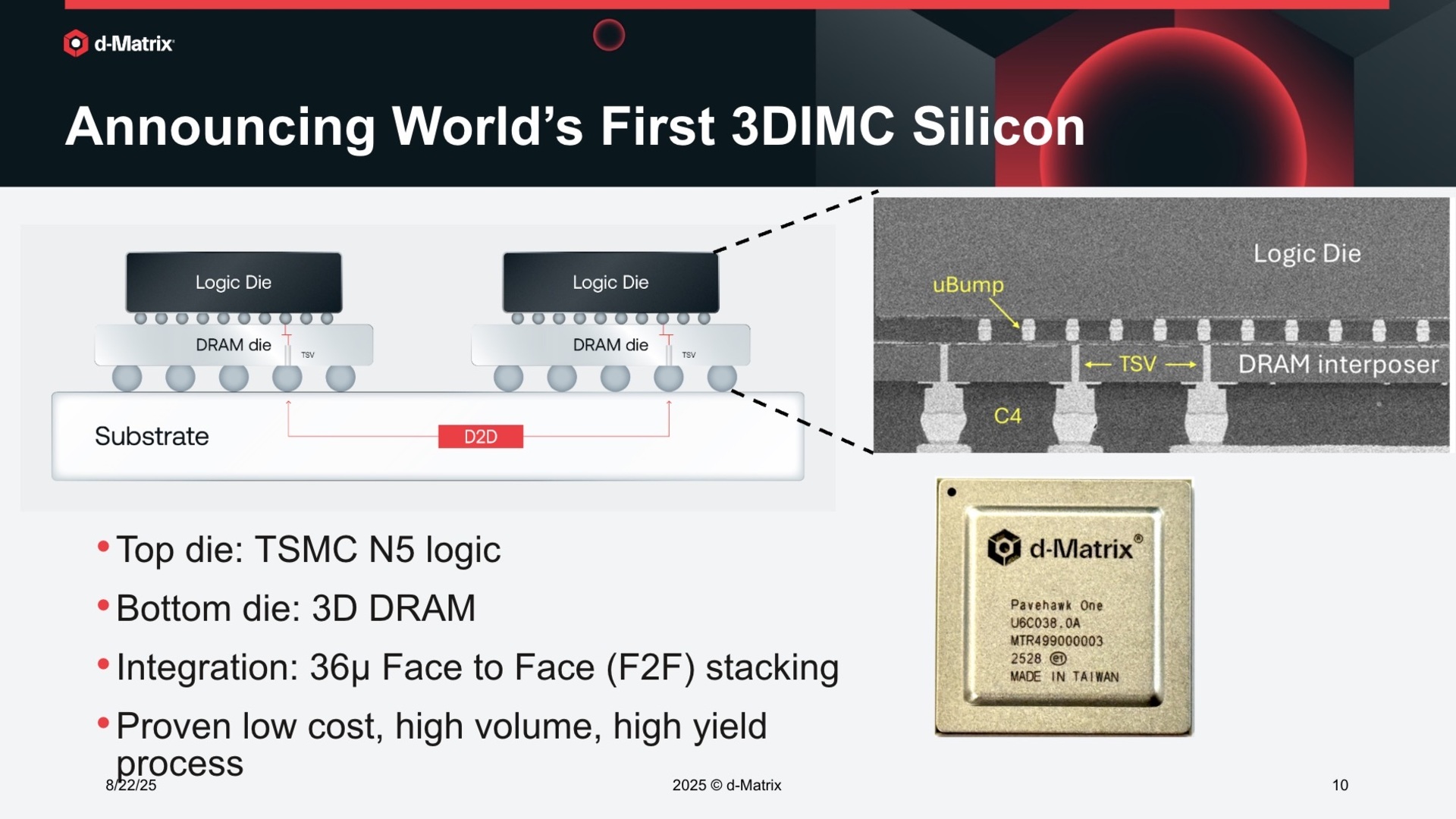

This technology, called D-Matrix Pavehawk, will launch with 3DIMC, which the company expects to rival HBM4 for AI inference, claiming 10x the bandwidth and 10x the energy efficiency per stack.
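Why does per-stack bandwidth matter so much for inference? A common back-of-envelope model, offered here only as an illustration and not as D-Matrix's own analysis, assumes that when a dense LLM generates one token at a time, it must read all of its weights from memory for every token. Memory bandwidth then caps the token rate. The sketch below uses hypothetical numbers (a 70-billion-parameter model at 8 bits per weight and a 2 TB/s baseline), which are placeholders rather than HBM4 or Pavehawk specifications.

```python
# Back-of-envelope sketch: in batch-1 decoding, a dense LLM streams all of its
# weights from memory for every generated token, so bandwidth caps the token rate.
# All numbers are hypothetical placeholders, not D-Matrix, HBM4 or JEDEC figures.


def max_tokens_per_second(bandwidth_bytes_per_s: float, weight_bytes: float) -> float:
    """Upper bound on decode throughput when every token reads all weights once."""
    return bandwidth_bytes_per_s / weight_bytes


# Hypothetical model: 70 billion parameters stored at 1 byte each (8-bit weights).
WEIGHT_BYTES = 70e9

# Hypothetical baseline bandwidth of 2 TB/s, plus the claimed 10x improvement.
baseline_bw = 2e12
improved_bw = 10 * baseline_bw

baseline = max_tokens_per_second(baseline_bw, WEIGHT_BYTES)
improved = max_tokens_per_second(improved_bw, WEIGHT_BYTES)

print(f"baseline bandwidth: {baseline:.1f} tokens/s")
print(f"10x bandwidth:      {improved:.1f} tokens/s")
```

This simple bound ignores batching, KV-cache traffic and compute limits, so real systems will behave differently; it only shows why a 10x bandwidth gain could, in a memory-bound case, translate into a similar gain in token throughput.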

Built on a TSMC N5 logic die and combined with 3D-stacked DRAM, the platform aims to bring compute and memory much closer than in conventional layouts.


D-Matrix suggests that by eliminating some of these data transfer bottlenecks, the design could reduce both latency and power use.

D-Matrix's roadmap suggests it is committed to stacking multiple DRAM dies above the logic silicon to push bandwidth and capacity further.

The company argues that this stacked approach may deliver an order-of-magnitude performance gain while using less energy for data movement.
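The energy argument can be illustrated the same way. The sketch below is a rough illustration under stated assumptions, not a model of D-Matrix hardware: it estimates the energy spent simply moving a model's weights out of DRAM for each generated token, given a per-bit interface cost. The 4 pJ/bit baseline and the 70-billion-parameter model are hypothetical placeholders, and the "improved" case just divides the baseline cost by 10 to mirror the company's claim.

```python
# Sketch: energy spent moving weights from DRAM for each generated token.
# The pJ/bit figures are hypothetical placeholders, not measured values for
# HBM, LPDDR5 or D-Matrix's stacked DRAM.


def movement_energy_joules(bytes_moved: float, picojoules_per_bit: float) -> float:
    """Energy (J) to move `bytes_moved` bytes at a given interface cost per bit."""
    bits = bytes_moved * 8
    return bits * picojoules_per_bit * 1e-12  # convert picojoules to joules


WEIGHT_BYTES = 70e9                              # hypothetical 70B-parameter model at 8 bits
BASELINE_PJ_PER_BIT = 4.0                        # hypothetical baseline interface cost
IMPROVED_PJ_PER_BIT = BASELINE_PJ_PER_BIT / 10   # mirrors the claimed 10x efficiency

baseline_j = movement_energy_joules(WEIGHT_BYTES, BASELINE_PJ_PER_BIT)
improved_j = movement_energy_joules(WEIGHT_BYTES, IMPROVED_PJ_PER_BIT)

print(f"baseline:       {baseline_j:.2f} J per token")
print(f"10x efficiency: {improved_j:.3f} J per token")
```

Because data movement scales with bytes read per token, shortening the path between DRAM and logic attacks the same term that the bandwidth bound depends on, which is why stacking memory directly on compute is pitched as helping both performance and power.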

For an industry grappling with the limits of scaling memory interfaces, the proposal is ambitious but remains unproven.

It is worth noting that memory innovations around inference accelerators are not new.

Other firms have been experimenting with tightly coupled memory and compute solutions, including designs with built-in controllers or links through interconnect standards like CXL.

D-Matrix, however, is attempting to go further by integrating custom silicon to rework the balance between cost, power, and performance.

The backdrop to these developments is the persistent cost and supply challenge surrounding HBM.

Large players such as Nvidia can secure top-tier HBM parts, but smaller companies or data centers often have to settle for lower-speed modules.

This disparity creates an uneven playing field where access to the fastest memory directly shapes competitiveness.

If D-Matrix can indeed deliver on lower-cost and higher-capacity alternatives, it would address one of the central pain points in scaling inference at the data center level.

Despite the claims of "10x better performance" and "10x better energy efficiency," D-Matrix is still at the beginning of what it describes as a multi-year journey.

Many companies have attempted to tackle the so-called memory wall, yet few have reshaped the market in practice.

The rise of AI tools and the growing reliance on LLMs show the importance of scalable inference hardware.

However, it remains to be seen whether Pavehawk and Corsair will mature into widely adopted alternatives or stay experimental.

Via ServeTheHome


Efosa has been writing about technology for over 7 years, initially driven by curiosity but now fueled by a strong passion for the field. He holds both a Master's and a PhD in sciences, which provided him with a solid foundation in analytical thinking.
