Turning data into knowledge: How Huawei is upgrading storage for the AI era

Huawei is redefining data storage to power the AI era with speed, intelligence, and efficiency

AI cannot exist without data, and it cannot improve without significant advances in how data is stored and used for AI scenarios. Traditional data storage systems simply hold raw data, but AI workloads require systems that can turn raw information into knowledge. Huawei is leading this change in what storage is considered to be, helping businesses put their information to use in a new future of data storage.

The emergence of artificial intelligence has created four key areas that storage needs to adapt to: AI Corpus, AI training, AI inference, and agentic AI. Each of these areas has its own technical challenges that require unique and innovative solutions.

AI Corpus: Building Intelligent Data Lakes

The foundation of any AI model is the data that it’s trained on. Most large organizations struggle with fragmented, siloed information. Huawei's AI data lake architecture enables efficient aggregation of massive corpora with high-density storage and multi-modal data fusion management.

Even though most businesses are discarding 97% of their data, the key isn’t just storing more data but making existing data more accessible, discoverable, and usable for training. Huawei’s AI data lake architecture turns isolated islands of data into repositories, enabling companies to aggregate massive amounts of data. The DME Omni-Dataverse technology helps make data more discoverable and facilitates cross-department data sharing.

AI Training: Minimizing Downtime, Maximizing Efficiency

When training large-scale AI models, runs last an average of about 2.6 days before hardware failures, maintenance, or other interruptions force a stop. When this happens, training must restart from a checkpoint. These checkpoints involve massive amounts of data that take a long time to write to and read from storage.

This means that while the storage is doing its job, the GPUs, which are often the most expensive part of the system, are sitting idle waiting for tasks. Huawei’s OceanStor A series storage can reduce the time it takes to save and restart from checkpoints, shortening AI training time and making fuller use of the entire computing system.

A real-world example is iFLYTEK, where Huawei’s OceanStor A series storage reduced checkpoint recovery time to just one minute and GPU utilization rose from 30% to 60%.
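The link between checkpoint time and GPU utilization can be illustrated with a rough model. The sketch below is only an illustration under stated assumptions: the function names and every number in the example run (compute hours, number of saves, minutes per save and per recovery) are hypothetical and are not figures from Huawei or iFLYTEK. It treats utilization as compute time divided by compute time plus the time GPUs spend waiting on checkpoint writes and reloads.

```python
def stall_hours(num_saves, save_minutes, num_restarts, recover_minutes):
    """Total hours GPUs wait on checkpoint writes and checkpoint reloads."""
    return (num_saves * save_minutes + num_restarts * recover_minutes) / 60.0


def gpu_utilization(compute_hours, stalled_hours):
    """Fraction of wall-clock time spent computing rather than waiting."""
    return compute_hours / (compute_hours + stalled_hours)


# Hypothetical run: 100 hours of pure compute, 200 checkpoint saves, 4 restarts.
# All values here are assumptions chosen only to show the shape of the effect.
slow = stall_hours(num_saves=200, save_minutes=10, num_restarts=4, recover_minutes=30)
fast = stall_hours(num_saves=200, save_minutes=1, num_restarts=4, recover_minutes=1)

print(f"slow storage: {gpu_utilization(100, slow):.0%} utilization")
print(f"fast storage: {gpu_utilization(100, fast):.0%} utilization")
```

In this simplified model, the only thing that changes between the two cases is how long storage takes to save and restore checkpoints, yet the share of time the GPUs spend doing useful work moves noticeably.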

AI Inference: The Memory Revolution

Huawei says the industry's focus is now shifting from AI training to AI inference. At Huawei Connect, Dr. Peter Zhou, Vice President of Huawei and President of the Huawei Data Storage Product Line, said in a speech: "The demand for inference has surpassed that for training. In terms of output speed, mainstream large models abroad operate at a speed of 200 tokens per second (with a latency of 5ms), while Chinese large models lag behind by a factor of 10, with inference latency exceeding 1 minute, urgently requiring optimization."

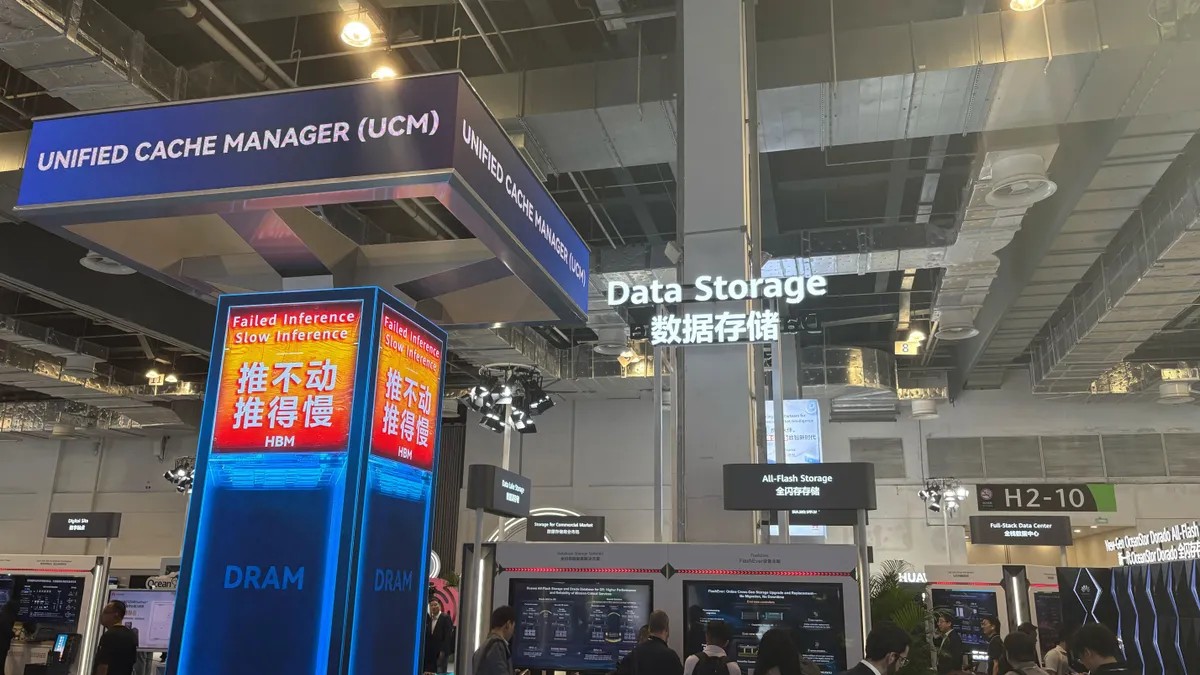

This is where Huawei's Unified Cache Manager (UCM) technology becomes crucial. UCM avoids redundant calculations by referencing historical data, clears redundant KV Cache information to shorten the length of inference text, and predicts inference input, automatically matching optimal results by streamlining sequence length. It also allows breakpoint continuation, which avoids having to restart tasks when prefill/decode resources are scaled.

"During inference, it's better to store tokens in cache. Otherwise, you have to calculate repeatedly," said Yuan Yuan, President of Huawei Distributed Storage Domain. "If you don't have cache in inference storage, every time you ask questions, the system calculates from scratch, wasting computational resources."

Huawei describes UCM as the industry's first comprehensive, full-scenario, and evolvable systematic solution. It creates a "three-layer collaboration of inference framework, computing power, and storage" through a series of scenario-based acceleration algorithms, kits, and open third-party libraries.
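The caching idea Yuan Yuan describes, reusing earlier work instead of calculating from scratch, can be shown with a toy example. This is a minimal sketch and not Huawei's UCM or any real inference engine: the `PrefixCache` class, its methods, and the sample prompts are all hypothetical, and a real KV cache stores attention key/value tensors rather than a set of token prefixes. The sketch only counts how many tokens need fresh processing when two prompts share a common beginning.

```python
class PrefixCache:
    """Toy stand-in for a KV cache: remembers which token prefixes were processed."""

    def __init__(self):
        self._seen = set()   # prefixes (as tuples) whose state is considered cached
        self.computed = 0    # tokens that had to be processed from scratch

    def process(self, tokens):
        """Process a prompt; return how many leading tokens were served from cache."""
        tokens = tuple(tokens)
        # Find the longest prefix of this prompt that is already cached.
        reused = 0
        for end in range(len(tokens), 0, -1):
            if tokens[:end] in self._seen:
                reused = end
                break
        # Only the uncached remainder needs fresh computation.
        for end in range(reused + 1, len(tokens) + 1):
            self._seen.add(tokens[:end])
            self.computed += 1
        return reused


cache = PrefixCache()
system_prompt = ["you", "are", "a", "helpful", "assistant"]
first = cache.process(system_prompt + ["what", "is", "flash", "storage"])
second = cache.process(system_prompt + ["what", "is", "an", "hdd"])
print(first, second, cache.computed)
```

The second prompt shares its first seven tokens with the first, so only its last two tokens are counted as new work. Without the cache, all nine would be processed again.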

This is especially important with the emergence of agentic AI. Using AI to carry out tasks requires significantly more speed than training does. Short-term and long-term memory must be processed, along with domain-specific knowledge, and it is not only hardware that reduces this bottleneck.

The future AI data platform should support multi-level unified caching, which enables multi-modal knowledge bases and memory bases to be used to enhance the intelligence of AI agents. Advances in this technology are critical, as the AI era demands better corpus management and faster training and inference for the agentic AI systems that are becoming increasingly important to businesses.

In the agentic AI era, storage systems must be more than simple data repositories. They need to become a more computational and intelligent part of the system, one that can store and share knowledge, memory, and context.

Despite the vast amount of information already stored, the volume of stored data is still growing at a compound rate and is expected to keep growing. At present, enterprises store less than 3% of their data and share less than 25%. The majority of data is trapped in isolated silos. "We believe we're at a turning point because of AI," explains Yuan Yuan. "Before AI, huge amounts of data were abandoned because there was no good approach to handle it. But AI gives us a good approach to leverage data value."

Why flash storage is important for AI

Traditional storage cannot meet AI's requirements for low latency, high IOPS, and cluster availability. These limitations are causing 97% of data to be lost at source and inference delays of more than one minute.

HDDs have traditionally been used to store less frequently used ‘cold data’. They can deliver roughly 100 megabytes per second, but only when large amounts of data are read sequentially. AI workloads involve different types of tasks that need much faster random access, where SSDs can achieve 15 gigabytes per second. When it comes to IOPS, power consumption, size, and weight, SSDs offer roughly a tenfold advantage over traditional drives. The problem is that SSD speeds used to come at a cost. Now, though, the value of fast data access is increasing, and with Huawei’s storage technology lowering the price point, the price gap between HDDs and SSDs is narrowing.
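To put these throughput figures in perspective, here is a back-of-the-envelope sketch. The two rates are the per-drive figures quoted above (roughly 100 MB/s for an HDD and 15 GB/s for an SSD); the 1,500 GB data size and the `read_seconds` helper are hypothetical, chosen only for illustration. The calculation assumes a single drive reading at its quoted rate with no other overhead, so real systems, which spread data across many drives, will behave differently.

```python
def read_seconds(dataset_gb, throughput_gb_per_s):
    """Ideal time to read a dataset at a constant throughput."""
    return dataset_gb / throughput_gb_per_s


HDD_GB_PER_S = 0.1    # roughly 100 MB/s sequential, as quoted in the article
SSD_GB_PER_S = 15.0   # as quoted in the article
dataset_gb = 1500     # hypothetical data size, an assumption for illustration

hdd = read_seconds(dataset_gb, HDD_GB_PER_S)
ssd = read_seconds(dataset_gb, SSD_GB_PER_S)

print(f"HDD: {hdd / 60:.0f} minutes")
print(f"SSD: {ssd / 60:.1f} minutes")
print(f"Speed-up: {hdd / ssd:.0f}x")
```

Because the HDD figure applies only to sequential reads, the gap would be wider still for the random access patterns that AI workloads tend to produce.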

"GPU utilization rates are roughly 50% during training across many customers," added Yuan Yuan. "This means half the time, expensive GPU cards are idle, waiting for data from storage." This idle time represents a significant waste of compute resources.

Huawei's solution is an all-flash storage strategy that reduces these bottlenecks for businesses. The OceanStor Pacific 9926 has 8x the capacity density of HDD, cutting the cost of ownership by 54% and reducing inference times from minutes to seconds.

Huawei's approach goes beyond simple hardware upgrades. Its OceanStor A series storage systems deliver 500 gigabytes per second of throughput and over 10 million IOPS, and are specifically engineered for AI training workloads. Perhaps more importantly, Huawei says they achieve industry-leading energy efficiency at 0.25 watts per terabyte, roughly half the power consumption of traditional storage systems.

An all-flash approach

In modern storage systems, HDD and SSD storage have lived happily side by side, each playing to its own advantages. HDDs are cheaper for storing large amounts of data and can sit idle without degradation for a long time, but they are slower than SSDs. Companies would therefore store infrequently used cold data on HDDs and keep hot data that needs to be used regularly on SSDs, striking a balance between cost and performance.

"In the AI era, there's a popular concept called 'warm' data—not hot, not cold, but warm," explained Yuan Yuan. "This means when you need data, you need it instantly." Yuan Yuan also added that HDDs are no longer considered the more stable option. “SSD technology had issues with memory cells failing. But after five years, many new technologies have been implemented in SSD chips, controllers, and memory sectors. They're now robust enough to last 5-10 years without problems."

It’s also not an issue for companies to move data from HDDs to SSDs, because the interfaces and access models remain the same. Whether data is accessed as files or through block storage, the protocol between computing and storage doesn't change; only the media changes. It's like swapping one piece of equipment for an SSD.

Bottomless buckets

"Current generation SSDs perform at about 15 GB/s. Next generation will be much faster—potentially triple the performance," explains Yuan Yuan. "We don't see immediate limitations because we can continue scaling in multiple ways."

Huawei believes it is still a long way from reaching any hard limit on SSD performance. With 20-30% of Huawei’s revenue going toward R&D, the company expects to be ready with the next storage solutions if such a limit is ever reached.

"These investigations are necessary because technology moves very fast, especially with AI demands," Yuan Yuan added.

That matters, because predictions point to the amount of storage required only growing as AI becomes more embedded in our daily lives.

Global recognition and growth

Huawei tops Gartner's storage rankings with new storage solutions for the AI era. Its all-flash strategy and its storage solutions, designed to solve the issues of traditional HDD storage for the AI era, earned Huawei its place as a leader in the Magic Quadrant for Enterprise.

Huawei also ranked first in Gartner’s Customer Choice and Critical Capabilities reports, reflecting trust and leadership in services, quality, and features when it comes to storage.

Huawei covered the costs of flights and accommodation for TechRadar Pro as part of a paid campaign.

James is a tech journalist covering interconnectivity and digital infrastructure as the web hosting editor at TechRadar Pro. James stays up to date with the latest web and internet trends by attending data center summits, WordPress conferences, and mingling with software and web developers. At TechRadar Pro, James is responsible for ensuring web hosting pages are as relevant and as helpful to readers as possible and is also looking for the best deals and coupon codes for web hosting.
