How Kaspersky Lab disabled a botnet

The Kelihos botnet is under control. But what now?

This is a post written by Kaspersky Lab Expert Tillmann Werner, which first appeared on Kaspersky's Securelist blog.

Earlier this week, Microsoft released an announcement about the disruption of a dangerous botnet that was responsible for spam messages, theft of sensitive financial information, pump-and-dump stock scams and distributed denial-of-service attacks.

Kaspersky Lab played a critical role in this botnet takedown initiative, leading the way to reverse-engineer the bot malware, crack the communication protocol and develop tools to attack the peer-to-peer infrastructure. We worked closely with Microsoft's Digital Crimes Unit (DCU), sharing the relevant information and providing them with access to our live botnet tracking system.

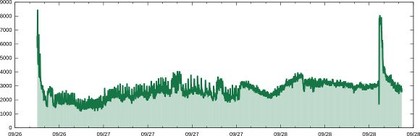

A key part of this effort is the sinkholing of the botnet. It's important to understand that the botnet still exists - but it's being controlled by Kaspersky Lab. In tandem with Microsoft's move to the U.S. court system to disable the domains, we started to sinkhole the botnet. Right now we have 3,000 hosts connecting to our sinkhole every minute. This post describes the inner workings of the botnet and the work we did to prevent it from further operation.

Let's start with some technical background: Kelihos is Microsoft's name for what Kaspersky calls Hlux. Hlux is a peer-to-peer botnet with an architecture similar to the one used for the Waledac botnet.

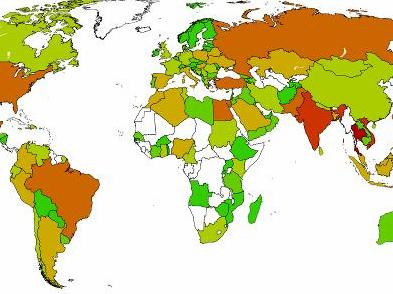

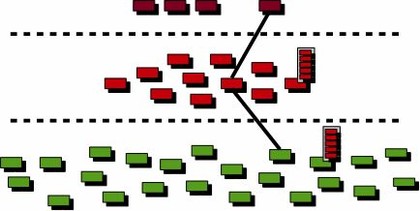

It consists of layers of different kinds of nodes: controllers, routers and workers. Controllers are machines presumably operated by the gang behind the botnet. They distribute commands to the bots and supervise the peer-to-peer network's dynamic structure.

Routers are infected machines with public IP addresses. They run the bot in router mode, host proxy services, participate in a fast-flux collective, and so on.

Sign up for breaking news, reviews, opinion, top tech deals, and more.

Finally, workers are infected machines that do not run in router mode, simply put. They are used for sending out spam, collecting email addresses, sniffing user credentials from the network stream, etc. A sketch of the layered architecture is shown below with a top tier of four controllers and worker nodes displayed in green.

ABOVE: Architecture of the Hlux botnet

Worker nodes

Many computers that can be infected with malware do not have a direct connection to the internet. They are hidden behind gateways, proxies or devices that perform network address translation. Consequently, these machines cannot be accessed from the outside unless special technical measures are taken.

This is a problem for bots that organise infected machines in peer-to-peer networks as that requires hosting services that other computers can connect to. On the other hand, these machines provide a lot of computing power and network bandwidth.

A machine that runs the Hlux bot would check if it can be reached from the outside and if not, put itself in the worker mode of operation. Workers maintain a list of peers (other infected machines with public IP addresses) and request jobs from them. A job contains things like instructions to send out spam or to participate in denial-of-service attacks. It may also tell the bot to download an update and replace itself with the new version.

Router nodes

Routers form some kind of backbone layer in the Hlux botnet. Each router maintains a peer list that contains information about other peers, just like worker nodes. At the same time, each router acts as an HTTP proxy that tunnels incoming connections to one of the Controllers. Routers may also execute jobs, but their main purpose is to provide the proxy layer in front of the controllers.

Controllers

The controller nodes are the top visible layer of the botnet. Controllers host a nginx HTTP server and serve job messages. They do not take part in the peer-to-peer network and thus never show up in the peer lists. There are usually six of them, spread pairwise over different IP ranges in different countries.

Each two IP addresses of a pair share an SSH RSA key, so it is likely that there is really only one box behind each address pair. From time to time some of the controllers are replaced with new ones. Right before the botnet was taken out, the list contained the following entries:

193.105.134.189

193.105.134.190

195.88.191.55

195.88.191.57

89.46.251.158

89.46.251.160

The peer-to-peer network

Every bot keeps up to 500 peer records in a local peer list. This list is stored in the Windows registry under HKEY_CURRENT_USER\Software\Google together with other configuration details. When a bot starts on a freshly infected machine for the first time, it initializes its peer list with some hard-coded addresses contained in the executable.

The latest bot version came with a total of 176 entries. The local peer list is updated with peer information received from other hosts. Whenever a bot connects to a router node, it sends up to 250 entries from its current peer list, and the remote peer send 250 of his entries back. By exchanging peer lists, the addresses of currently active router nodes are propagated throughout the botnet. A peer record stores the information shown in the following example:

m_ip: 41.212.81.2

m_live_time: 22639 seconds

m_last_active_time: 2011-09-08 11:24:26 GMT

m_listening_port: 80

m_client_id: cbd47c00-f240-4c2b-9131-ceea5f4b7f67

The peer-to-peer architecture implemented by Hlux has the advantage of being very resilient against takedown attempts. The dynamic structure allows for fast reactions if irregularities are observed. When a bot wants to request jobs, it never connects directly to a controller, no matter if it is running in worker or router mode. A job request is always sent through another router node. So, even if all controller nodes go off-line, the peer-to-peer layer remains alive and provides a means to announce and propagate a new set of controllers.

The fast-flux service network

The Hlux botnet also serves several fast-flux domains that are announced in the domain name system with a TTL value of 0 in order to prevent caching. A query for one of the domains returns a single IP address that belongs to an infected machine.

The fast-flux domains provide a fall-back channel that can be used by bots to regain access to the botnet if all peers in their local list are unreachable. Each bot version contains an individual hard-coded fall-back domain.

Microsoft unregistered these domains and effectively decommissioned the fall-back channel. Here is the set of DNS names that were active before the takedown - in case you want to keep an eye on your DNS resolver. If you see machines asking for one of them, they are likely infected with Hlux and should be taken care of.

hellohello123.com

magdali.com

restonal.com

editial.com

gratima.com

partric.com

wargalo.com

wormetal.com

bevvyky.com

earplat.com

metapli.com

The botnet further used hundreds of sub-domains of ce.ms and cz.cc that can be registered without a fee. But these were only used to distribute updates and not as a backup link to the botnet.

Counteractions

A bot that can join the peer-to-peer network won't ever resolve any of the fall-back domains - it does not have to. In fact, our botnet monitor has not logged a single attempt to access the backup channel during the seven months it was operated as at least one other peer has always been reachable.

The communication for bootstrapping and receiving commands uses a special custom protocol that implements a structured message format, encryption, compression and serialization. The bot code includes a protocol dispatcher to route incoming messages (bootstrap messages, jobs, SOCKS communication) to the appropriate functions while serving everything on a single port.

We reverse engineered this protocol and created some tools for decoding botnet traffic. Being able to track bootstrapping and job messages for a intentionally infected machine provided a view of what was happening with the botnet, when updates were distributed, what architectural changes were undertaken and also to some extend how many infected machines participate in the botnet.

ABOVE: Hits on the sinkhole per minute

This Monday, we started to propagate a special peer address. Very soon, this address became the most prevalent one in the botnet, resulting in the bots talking to our machine, and to our machine only. Experts call such an action sinkholing - bots communicate with a sinkhole instead of its real controllers.

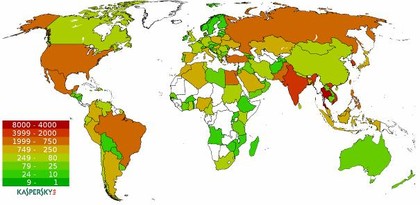

At the same time, we distributed a specially crafted list of job servers to replace the original one with the addresses mentioned before and prevent the bots from requesting commands. From this point on, the botnet could not be commanded anymore. And since we have the bots communicating with our machine now, we can do some data mining and track infections per country, for example. So far, we have counted 49,007 different IP addresses. Kaspersky works with Internet service providers to inform the network owners about the infections.

ABOVE: Sinkholed IP addresses per country

What now?

The main question now is: what is next? We obviously cannot sinkhole Hlux forever. The current measures are a temporary solution, but they do not ultimately solve the problem, because the only real solution would be a cleanup of the infected machines.

We expect that the number of machines hitting our sinkhole will slowly lower over time as computers get cleaned and reinstalled. Microsoft said their Malware Protection Center has added the bot to their Malicious Software Removal Tool. Given the spread of their tool this should have an immediate impact on infection numbers. However, in the last 16 hours we have still observed 22,693 unique IP addresses. We hope that this number is going to be much lower soon.

Interestingly, there is one other theoretical option to ultimately get rid of Hlux: we know how the bot's update process works. We could use this knowledge and issue our own update that removes the infections and terminates itself. However, this would be illegal in most countries and will thus remain theory.