Could a computer algorithm be put on trial?

The algorithmic economy could have morally questionable consequences

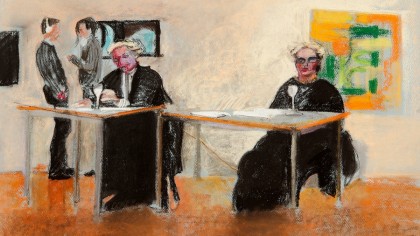

Should we hold algorithms and artificial intelligence accountable for their actions? That's the question asked by a provocative piece of performance art by Goldsmiths, University of London MFA student Helen Knowles, in which a computer algorithm goes on trial for manslaughter.

In a fictional plot, an algorithm called 'Superdebthunterbot' is used by a debt collection agency that's just bought the debts of students across the UK. The algorithm then targets job adverts at the students to ensure there are fewer defaulters, but two of them die after taking part in a risky medical trial advertised to them by the algorithm. Is the algorithm culpable?

There is, of course, one problem with the thesis. Algorithms don't have legal status. "It's possible that a computer algorithm could be put on trial," says Dr Kevin Curran, Technical Expert at the IEEE and reader in computer science at Ulster University, but he raises an excellent question for anyone thinking of getting litigious. "No computer algorithm has opened a bank account yet, so what would you sue?"

Who to sue?

The question of who to sue if something goes wrong with an algorithm seems to have a simple enough answer. "The most pragmatic and reasonable approach is to sue the humans who deployed the algorithm," says Curran, but it's not as straightforward as that.

"Take the instance of an automated driverless car causing a death," says Curran. "Does the lawsuit pursue the dealership, the car manufacturer, or the third-party who developed the algorithm that was deemed to be at fault?" Cue new kinds of lawyers; with super-complex incidents put in front of judges, there's bound to be a growing need for lawyers skilled in the role of automation and its relation to legal accountability.

"Algorithms are essentially sets of rules that computers must follow when processing data – so, in legal terms, they're much like any other software," says Richard Kemp, founder of Kemp IT Law and one of the world's top IT lawyers. "Just as if CAD software is used to design a building that falls down and injures people, the designers of the defective software may be liable, so it's possible that the designers of faulty algorithms may also have to accept legal responsibility."

Technology is rarely just a tool.


Algorithmic angst

Technology isn't neutral. Apps constantly make algorithm-based decisions that affect their users. Facebook's algorithm decides both what news you read and which of your friends' updates you get to see, and in doing so it decides what values its users are exposed to. This isn't unusual. The values of the Silicon Valley elite are often baked into the platforms and products created in that one tiny corner of a diverse world.

For example, some love the part-time, casual working patterns fostered by the likes of Uber, Lyft and Airbnb in the so-called 'sharing', 'gig' or 'peer-to-peer' economy. Some hate it for creating millions of largely worthless, zero-hours jobs. But can anyone deny that Uber is akin to a political movement? Or that Airbnb is contributing to a housing problem in some places? Both algorithm-driven apps are wrapped up in moral and ethical dilemmas, whether the coders and programmers behind the apps like it or not.

Existential threats

However, that's nothing compared to the threat from artificial intelligence (AI). Could AI represent an existential threat to humanity? "A world where big data is constantly whirring away in the background in every part of our lives poses big risks around security, individual liberties and state powers," says Kemp. "All these things in another form are going through the UK parliament at the moment with the Investigatory Powers Bill … AI, big data and algorithms just makes them more pervasive."

Top Image Credit: Helen Knowles & Liza Brett

Jamie is a freelance tech, travel and space journalist based in the UK. He’s been writing regularly for TechRadar since it was launched in 2008 and also writes regularly for Forbes, The Telegraph, the South China Morning Post, Sky & Telescope and the Sky At Night magazine as well as other Future titles T3, Digital Camera World, All About Space and Space.com. He also edits two of his own websites, TravGear.com and WhenIsTheNextEclipse.com, which reflect his obsession with travel gear and solar eclipse travel. He is the author of A Stargazing Program For Beginners (Springer, 2015),