How Microsoft is stamping out Azure failures and improving reliability

Avoiding the major errors that cause outages

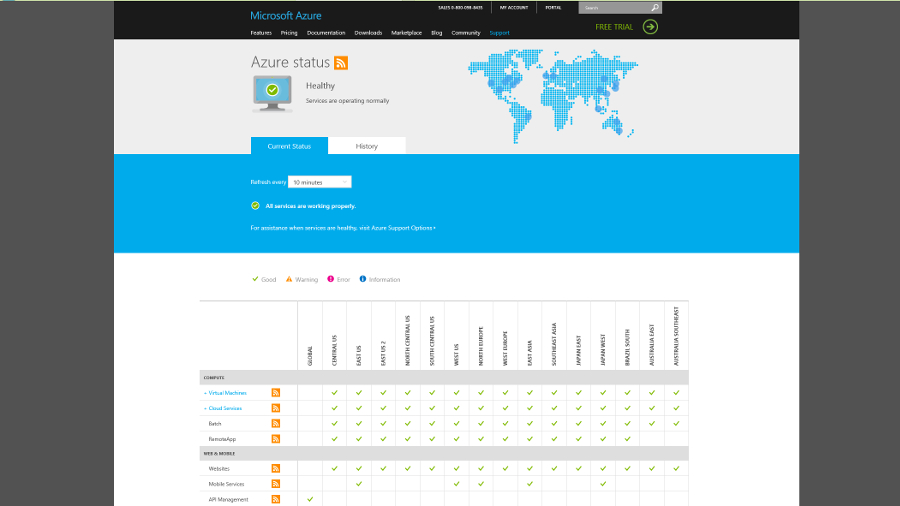

In 2014, Microsoft's Azure cloud service was more popular than ever with businesses – but it also suffered some very visible outages. In the worst, not only did the failure of Azure Storage take out many other Azure services, but details for companies trying to get their systems back were few and far between.

We spoke to Azure CTO Mark Russinovich about what went wrong, what Microsoft can do to make Azure more reliable, and how it plans to keep customers better informed.

Major faux pas

We've known since November that the problem with Azure Storage wasn't just that a performance improvement actually locked up the database. One of the Azure engineers rolled out the update not only for Azure Table storage, where it had been tested, but also for Azure Blob storage, where it hadn't been tested, and that's where the bug was. Because the update had been tested, the engineer also decided to skip the usual process of deploying it to just one region of Azure at a time, and rolled it out to all the regions at once.
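To illustrate the one-region-at-a-time process the engineer skipped, here is a minimal Python sketch. It is not Microsoft's deployment tooling: the function name `staged_rollout` and the `deploy` and `is_healthy` callbacks are hypothetical stand-ins for whatever a real system would use.

```python
from typing import Callable, Iterable, List


def staged_rollout(
    regions: Iterable[str],
    deploy: Callable[[str], None],
    is_healthy: Callable[[str], bool],
) -> List[str]:
    """Deploy to one region at a time and stop at the first unhealthy one.

    Returns the regions that received the update, including the failing
    region if there was one. All names here are illustrative assumptions.
    """
    updated: List[str] = []
    for region in regions:
        deploy(region)
        updated.append(region)
        if not is_healthy(region):
            # Halt so the remaining regions keep running the old build.
            break
    return updated
```

With a rollout like this, a bug that only shows up in one storage type would affect a single region rather than every region at once.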

That caused other problems, because Azure Storage is a low-level piece of the system. "Azure is a platforms platform that's designed in levels; there are lower levels and higher levels and apps on top," Russinovich explains. "There are dependencies all the way down, but we have principles like one component can't depend on another component that depends on it, because that would stop you recovering it.

"We've designed the system so the bottom-most layers are the most resilient because they're the most critical. They're designed so they're easy to diagnose and recover. Storage is one of those layers at the bottom of the system, so many other parts of Azure depend on Azure Storage."
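The no-circular-dependencies principle Russinovich describes can be sketched as a check on a dependency graph. The example below is an assumption-laden illustration, not how Azure models its layers: the component names are made up, and it relies on Python's standard `graphlib` module (Python 3.9 or later).

```python
from graphlib import CycleError, TopologicalSorter
from typing import Dict, List, Set


def recovery_order(depends_on: Dict[str, Set[str]]) -> List[str]:
    """Return components lowest layer first, or raise ValueError on a cycle.

    `depends_on` maps each component to the components it depends on.
    """
    try:
        # static_order() yields every dependency before its dependants.
        return list(TopologicalSorter(depends_on).static_order())
    except CycleError as exc:
        # The second element of args holds the nodes that form the cycle.
        raise ValueError(f"circular dependency: {exc.args[1]}") from exc


# Hypothetical layers: apps sit on compute, compute sits on storage.
layers = {"app": {"compute"}, "compute": {"storage"}, "storage": set()}
order = recovery_order(layers)
```

The resulting order starts with the bottom layer, which is the one that has to be recoverable without help from anything above it.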

Automation means fewer errors

Despite the complexity of Azure, problems are more likely to be caused by human error than by bugs, and the best way to make the service more reliable is to automate more and more, Russinovich explained. "We've found through the history of Azure that the places where we've got a policy that says a human should do something, a human should execute this instruction and command, that that's just creating opportunities for failure. Humans make mistakes of judgement, they make inadvertent mistakes as they try to follow a process. You have to automate to run at scale for resiliency and for the safety of running at scale."

The team developing Azure is responsible for testing their code, both basic code tests and tests of how well it works with the rest of Azure, using a series of test systems. "We try to push tests as far upstream as possible," Russinovich told us, "because it's easier to fix problems. Once it's further along the path, it's harder to fix it – and it's harder to find a developer who understands the problem."

The difficulty of finding a developer who understands the problem showed up in another outage, when performance improvements in SQL Azure hadn't been documented and it took time both to find the problem and to track down a developer who knew about the code.

Once testing is done, building and deploying the code is supposed to be fully automated, based on what's been tested. "When the developer put the code in the deployment system the setting he gave it was enabled for blobs and tables, and that was a violation of policy," he told us. "The development system now takes care of that configuration step, rather than leaving it up to the developer."

That should mean that not only will this particular problem not happen again, but no-one else can make the same sort of error on a different Azure service.
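One way to picture that change is a deployment step that works out which services a change is enabled for from the test results, instead of accepting a setting typed in by a developer. This is a hedged sketch only: the function `enabled_services` and the service names are hypothetical, and the article does not describe how Microsoft's system actually does it.

```python
from typing import FrozenSet, Mapping


def enabled_services(test_results: Mapping[str, bool]) -> FrozenSet[str]:
    """Enable a change only for services whose tests ran and passed.

    Services that were never tested do not appear in `test_results`,
    so they can never end up in the returned set.
    """
    return frozenset(
        service for service, passed in test_results.items() if passed
    )


# Hypothetical scenario: the update was only tested against table storage.
targets = enabled_services({"tables": True})
```

Because the set of targets comes from the test record, an untested service such as blob storage in this example is left out without anyone having to remember the policy.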

Mary (Twitter, Google+, website) started her career at Future Publishing, saw the AOL meltdown first hand the first time around when she ran the AOL UK computing channel, and she's been a freelance tech writer for over a decade. She's used every version of Windows and Office released, and every smartphone too, but she's still looking for the perfect tablet. Yes, she really does have USB earrings.