Startup wants to rival Nvidia and end GPU shortage by going back to basics — betting on CPUs may not seem a great idea but just hear these guys out

With GPUs in really high demand, there might be a way to configure CPUs to be a reliable stopgap solution to getting your AI dream off the ground

An industry landscape in which component supply and demand were in lockstep feels like a lifetime ago. The global chip shortage, which took hold as the world slipped into lockdowns at the height of COVID-19, had a serious impact on the supply of goods in all kinds of sectors, from entertainment to automotive.

But as this tide started to recede, another was rising in the form of AI. Generative AI is all the rage and various industries are desperate to get in on the action, but running large language models (LLMs) requires a monumental amount of computing power. That power typically comes from GPUs, and Nvidia is the current industry leader. ChatGPT, for example, was powered by Nvidia A100 chips when it launched, and Nvidia has since doubled down with the H100 and H200 as part of a roadmap of annual releases.

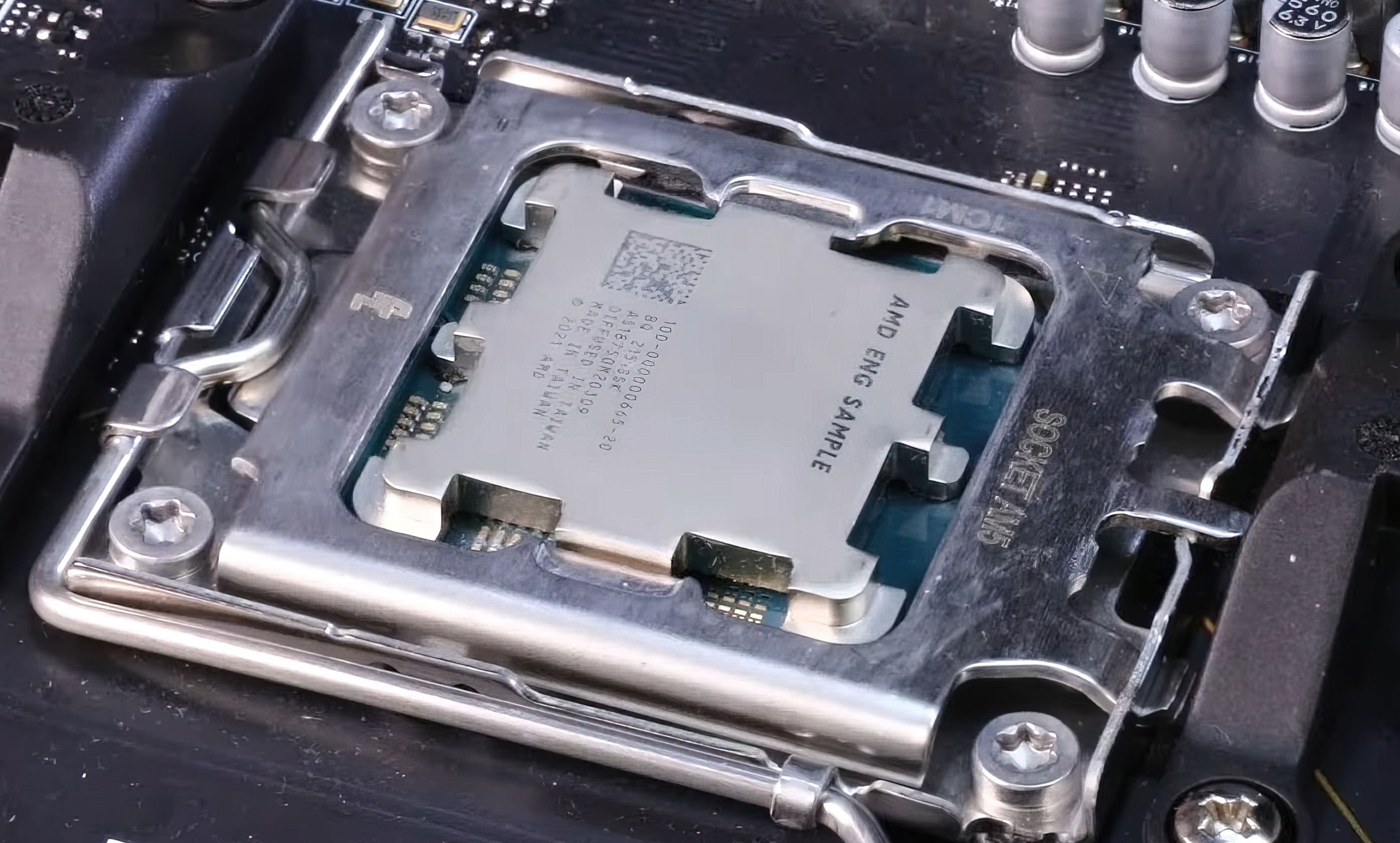

Unprecedented demand has led to a GPU shortage, however, meaning businesses clamoring for the hardware to run AI workloads are stuck in a void, waiting for supply to become available. But what if those businesses were to consider using the best CPUs instead? They're more than just a viable alternative, according to Alex Babin, CEO of Zero Systems. In conversation with TechRadar Pro, he outlines exactly what your business needs to look out for when selecting CPUs for AI training and inference, and why they might make a reasonable stopgap until costly, in-demand GPUs become a little more accessible again.

Alex, you posit that the CPU can substitute for the GPU in AI training and inference. Can you expand on your thinking and explain how you came to this conclusion?

Depending on business needs and application requirements, CPUs can perform inference on certain LLMs using libraries such as llama.cpp, which reduce the numerical precision of model weights (quantization) to make LLMs lighter to run. Training LLMs typically requires GPUs, but some composite end-to-end solutions include models that are trained on CPUs. Prediction engines, for instance, are algorithmic rather than built on the transformer architecture.
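To make "reducing the precision of weights" concrete, here is a minimal Python sketch of block-wise 8-bit quantization. It is loosely modeled on the scheme llama.cpp calls Q8_0 (blocks of 32 weights, each sharing one scale), but the function names and code are illustrative assumptions, not llama.cpp's implementation.

```python
import math

BLOCK_SIZE = 32  # weights are quantized in fixed-size blocks, each with its own scale


def quantize_q8(weights):
    """Quantize a list of floats into (scale, int8 values) pairs, one per block."""
    blocks = []
    for start in range(0, len(weights), BLOCK_SIZE):
        block = weights[start:start + BLOCK_SIZE]
        amax = max(abs(w) for w in block)
        # Map the largest magnitude in the block onto 127; all-zero blocks get a dummy scale.
        scale = amax / 127 if amax > 0 else 1.0
        # Round to the nearest integer and clamp into the signed 8-bit range.
        q = [max(-127, min(127, round(w / scale))) for w in block]
        blocks.append((scale, q))
    return blocks


def dequantize_q8(blocks):
    """Reconstruct approximate float weights from the quantized blocks."""
    out = []
    for scale, q in blocks:
        out.extend(v * scale for v in q)
    return out


if __name__ == "__main__":
    original = [math.sin(i) for i in range(100)]
    restored = dequantize_q8(quantize_q8(original))
    worst = max(abs(a - b) for a, b in zip(original, restored))
    print(f"max reconstruction error: {worst:.6f}")
```

Each block of 32 fp32 weights (128 bytes) shrinks to 32 one-byte integers plus a single scale, at the cost of a rounding error of at most half a scale step per weight. That memory and bandwidth saving is what makes CPU inference practical for some models.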

When deploying very large models, the ideal situation is to fit the model on a single GPU. This is the best option for performance, as it eliminates the overhead of communication between GPU devices. Some models are simply too large to fit on a single GPU. Others may fit on one, but it may be more cost-effective to partition them across multiple cheaper GPUs.
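Whether a model fits on one GPU is largely memory arithmetic. The sketch below estimates how many equally sized GPUs are needed just to hold a model's weights. The 20% overhead factor for activations and buffers, and the example model sizes, are hypothetical assumptions, and the estimate ignores the inter-GPU communication cost described above.

```python
import math

# Bytes needed to store one parameter at each numeric precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1, "int4": 0.5}


def weight_memory_gb(num_params, precision):
    """Memory needed just to hold the weights, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def min_gpus(num_params, precision, gpu_memory_gb, overhead=1.2):
    """Smallest number of equally sized GPUs whose combined memory holds the
    weights plus an assumed fractional overhead (activations, caches, buffers)."""
    needed_gb = weight_memory_gb(num_params, precision) * overhead
    return math.ceil(needed_gb / gpu_memory_gb)
```

Under these assumptions, a hypothetical 70-billion-parameter model needs three 80 GB GPUs at fp16 (about 168 GB including overhead) but fits on a single one at 4-bit precision (about 42 GB).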

Because some business use cases do not depend on real-time inference, several CPUs can be deployed to process data in batches overnight or at weekends, with downstream processing continuing during regular business hours.
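Sizing such an off-peak batch job comes down to throughput arithmetic. This minimal sketch assumes a uniform per-item inference time and a fixed number of parallel inference jobs per CPU node; the function name and every number in the example are hypothetical.

```python
import math


def cpu_nodes_needed(num_items, seconds_per_item, window_hours, concurrent_jobs_per_node):
    """Number of CPU nodes needed to finish a batch within an off-peak window.

    Assumes every item takes the same time and that each node runs a fixed
    number of independent inference jobs in parallel.
    """
    total_job_seconds = num_items * seconds_per_item
    capacity_per_node = window_hours * 3600 * concurrent_jobs_per_node
    return math.ceil(total_job_seconds / capacity_per_node)
```

For 200,000 documents at 4 seconds each, with four concurrent jobs per node, six nodes are needed to finish within a 10-hour overnight window, while a single node can finish within a 60-hour weekend.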

What sort of CPU are we talking about? x86, Arm, RISC-V? High core counts? Pure CPU, or APU/CPU plus FPGA?

For inference, x86 CPUs with high core counts (90 or more), such as Intel Xeon paired with Gaudi accelerators. It will take some time for other CPU types to become as pervasive as x86. Intel has the know-how and the engineering strength to articulate and execute a robust strategy to maintain its market position. That said, the Arm and RISC-V architectures adapt quickly to new market needs, but they may not (yet) have the developer support needed for large-scale adoption. Libraries, configurations, and management tools need to be developed, adapted, and rolled out, together with specifications for taking advantage of the flexibility of Arm and RISC-V.

You told me that CPUs have the potential to become much more competitive in the AI training segment with extremely large data volumes. How come?

There are several drivers for this. First, specialized models require fewer parameters than typical LLMs, which reduces the demand for GPU compute. Second, new approaches are being developed to enable training with simplified LLMs such as Llama 2. Third, CPU providers are laser-focused on offering alternatives to GPUs, with accelerators as well as memory and networking. Fourth, new chip types, such as AWS's Trainium, are taking some of the pressure off GPUs.
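Why fewer parameters eases the pressure on compute can be seen from a widely used rule of thumb: training a dense transformer costs roughly 6 floating-point operations per parameter per training token (forward plus backward pass). The sketch below applies that approximation; the function names, model sizes, token count, and sustained throughput are hypothetical.

```python
SECONDS_PER_DAY = 86_400


def training_flops(num_params, num_tokens):
    """Rule-of-thumb training compute for a dense transformer:
    about 6 FLOPs per parameter per training token."""
    return 6 * num_params * num_tokens


def training_days(num_params, num_tokens, sustained_flops_per_second):
    """Wall-clock days at an assumed sustained (not peak) hardware throughput."""
    return training_flops(num_params, num_tokens) / sustained_flops_per_second / SECONDS_PER_DAY
```

Under this approximation, compute scales linearly with parameter count, so a 1-billion-parameter specialized model needs one-seventh of the training compute of a 7-billion-parameter model trained on the same data.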

While it is true that the GPU is at the heart of the generative AI revolution we've experienced over the past year or so, there are a number of serious challengers (e.g. d-Matrix, Cerebras). Does the CPU, with its legacy architecture, still stand a chance against them?

Given the high market demand for training compute, the competitive landscape is evolving rapidly, and new players are bringing platforms and frameworks into the fold. However, many of the new players lack an established developer community, and this will delay adoption. Meanwhile, established providers such as AMD have already come out with a promising new GPU platform, the MI300.

What can the current crop of CPUs do to compete better with GPUs at inference and training?

They need to focus on the end-to-end developer experience within the existing compute footprint. Established providers need to engage with the ML community and offer platforms that work within existing GPU environments. They also need to publish specific guidelines on when CPUs offer better performance per dollar than GPUs.
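A guideline of that kind could start from a simple cost-per-request comparison. This minimal sketch assumes full utilization and uses made-up instance prices and throughputs; the function names are hypothetical, and it ignores latency, which may rule out CPUs for real-time workloads regardless of cost.

```python
def cost_per_1k(hourly_price_usd, requests_per_second):
    """Dollar cost to serve 1,000 requests on one fully utilized instance."""
    requests_per_hour = requests_per_second * 3600
    return hourly_price_usd / requests_per_hour * 1000


def cheaper_option(cpu, gpu):
    """Compare (hourly_price_usd, requests_per_second) pairs for a CPU and a GPU
    instance; return the cheaper label and its cost per 1,000 requests."""
    cpu_cost = cost_per_1k(*cpu)
    gpu_cost = cost_per_1k(*gpu)
    return ("cpu", cpu_cost) if cpu_cost <= gpu_cost else ("gpu", gpu_cost)
```

With a hypothetical $3/hour CPU instance serving 5 requests per second against a $12/hour GPU instance serving 30, the GPU is cheaper per request (about $0.11 versus $0.17 per 1,000 requests); double the GPU's hourly price and the CPU comes out ahead.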

Désiré has been musing and writing about technology during a career spanning four decades. He dabbled in website builders and web hosting when DHTML and frames were in vogue and started writing about the impact of technology on society just before the start of the Y2K hysteria at the turn of the last millennium.

- Keumars Afifi-Sabet, Channel Editor (Technology), Live Science