Nvidia and IBM have a plan to connect GPUs straight to SSDs

Nvidia, IBM, and researchers are trying to make ML training a lot quicker


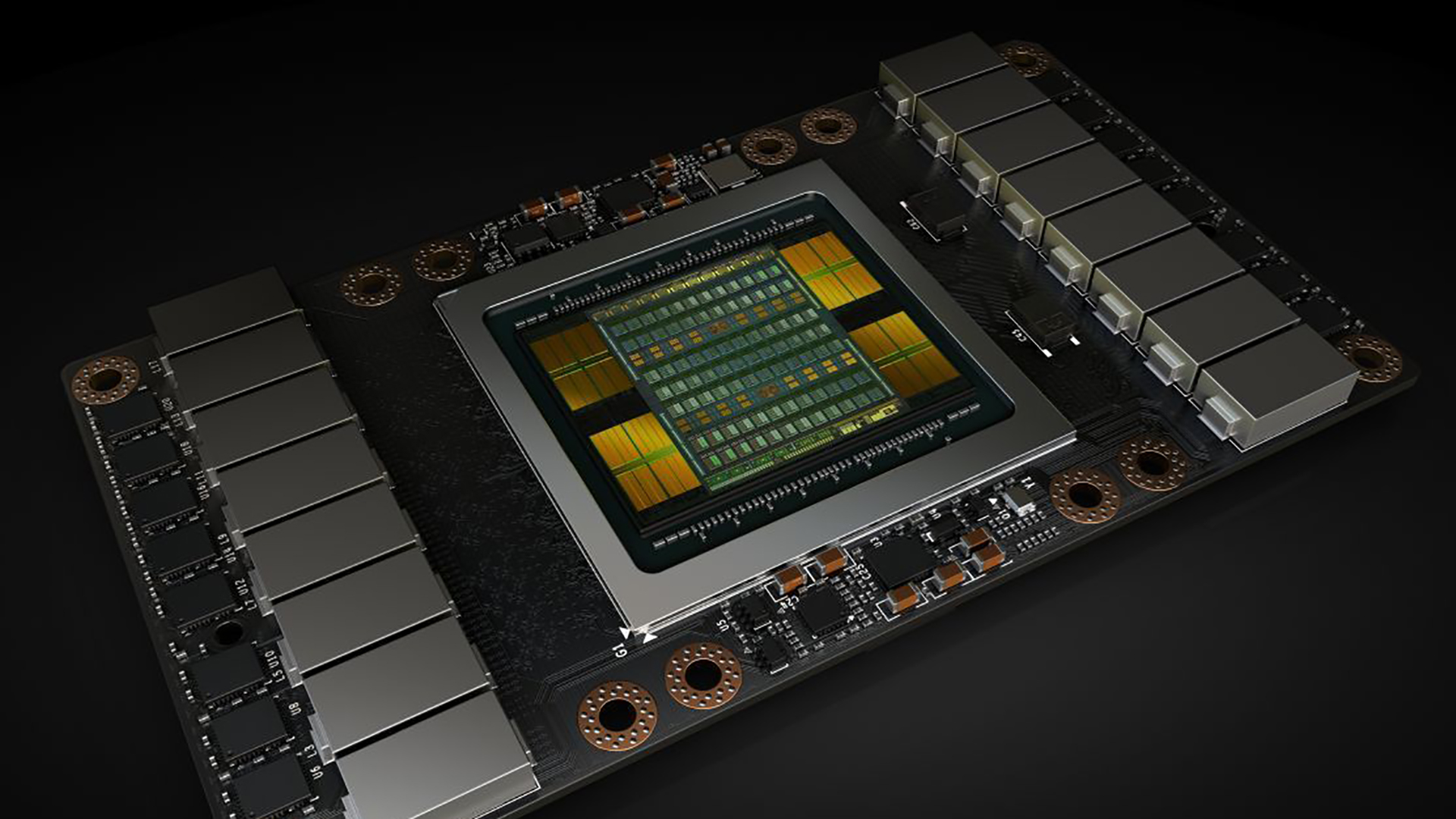

Nvidia, IBM, and researchers have a plan to make the lives of those working in machine learning a bit easier: connecting GPUs directly to SSDs.

Detailed in a research paper, the idea is called Big accelerator Memory (BaM) and involves connecting GPUs directly to large amounts of SSD storage, helping to relieve a bottleneck for ML training and other intensive tasks.

"BaM mitigates the I/O traffic amplification by enabling the GPU threads to read or write small amounts of data on-demand, as determined by the compute," the researchers write.
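To make the idea of I/O traffic amplification concrete, here is a minimal Python sketch. It is not taken from the paper: the function name `bytes_transferred`, the workload, and every size used are illustrative assumptions. It simply compares how many bytes have to be moved when a few small, scattered records are fetched in coarse chunks versus small blocks.

```python
def bytes_transferred(offsets, record_size, transfer_size):
    """Bytes moved when each touched transfer unit is fetched exactly once.

    offsets: byte offsets of the records the computation actually needs.
    record_size: size of each record in bytes.
    transfer_size: granularity of one storage transfer in bytes.
    """
    touched = set()
    for off in offsets:
        first = off // transfer_size
        last = (off + record_size - 1) // transfer_size
        touched.update(range(first, last + 1))
    return len(touched) * transfer_size


# Hypothetical workload: four 64-byte records scattered across a large file.
offsets = [0, 1_000_000, 5_000_000, 9_000_000]
record_size = 64
useful = len(offsets) * record_size  # bytes the compute really needs

coarse = bytes_transferred(offsets, record_size, 1 << 20)  # 1 MiB chunks (assumed)
fine = bytes_transferred(offsets, record_size, 512)        # 512-byte blocks (assumed)

print("amplification with coarse transfers:", coarse / useful)
print("amplification with fine transfers:", fine / useful)
```

The ratio of bytes moved to bytes needed is the amplification; on-demand, fine-grained access keeps that ratio low for sparse access patterns like this one.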

Speed and reliability boost

"The goal of BaM is to extend GPU memory capacity and enhance the effective storage access bandwidth while providing high-level abstractions for the GPU threads to easily make on-demand, fine-grain access to massive data structures in the extended memory hierarchy."

The ultimate goal is to reduce the reliance of Nvidia GPUs on hardware-accelerated general-purpose CPUs. By allowing Nvidia GPUs to directly access storage and then process the data themselves, the work is carried out by the most specialised tools available.

Wham BaM Shang-A-Lang

The technical details are pretty complex (we recommend reading the paper if this is your field) but the gist is twofold.

BaM uses a software-managed cache in GPU memory alongside a library through which GPU threads can request data stored on NVMe SSDs directly. Moving data between the two is handled by the GPUs.
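As a rough illustration of that arrangement, the toy Python sketch below puts a small software-managed cache in front of a block device. It runs on the CPU and is not BaM's API or design: `BlockStore` (standing in for an NVMe SSD), `SoftwareCache` (standing in for the cache in GPU memory), the LRU eviction policy, and the 512-byte block size are all assumptions made for the example.

```python
from collections import OrderedDict


class BlockStore:
    """Stand-in for an NVMe SSD: serves fixed-size blocks and counts reads."""

    def __init__(self, data, block_size):
        self.data = data
        self.block_size = block_size
        self.reads = 0

    def read_block(self, index):
        self.reads += 1
        start = index * self.block_size
        return self.data[start:start + self.block_size]


class SoftwareCache:
    """Stand-in for a software-managed cache; LRU eviction is an assumption."""

    def __init__(self, store, capacity_blocks):
        self.store = store
        self.capacity = capacity_blocks
        self.blocks = OrderedDict()

    def read(self, offset, length):
        """Return `length` bytes at `offset`, fetching only missing blocks."""
        size = self.store.block_size
        out = bytearray()
        end = offset + length
        while offset < end:
            block = self._get_block(offset // size)
            start = offset % size
            take = min(size - start, end - offset)
            out += block[start:start + take]
            offset += take
        return bytes(out)

    def _get_block(self, index):
        if index in self.blocks:
            self.blocks.move_to_end(index)  # mark as most recently used
            return self.blocks[index]
        block = self.store.read_block(index)  # miss: go to storage
        self.blocks[index] = block
        if len(self.blocks) > self.capacity:
            self.blocks.popitem(last=False)  # evict least recently used
        return block


store = BlockStore(bytes(range(256)) * 64, block_size=512)  # 16 KiB of data
cache = SoftwareCache(store, capacity_blocks=4)

first = cache.read(1000, 8)  # miss: one block is fetched from the store
again = cache.read(1004, 8)  # hit: served entirely from the cache
print(first, again, store.reads)
```

The caller asks only for the bytes it needs, and the cache decides whether storage has to be touched at all, which is the general shape of the on-demand access the researchers describe.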

The end result is that ML training and other intensive activities can access data more quickly and, importantly, in specific ways that are helpful to the workloads. In testing, this was borne out: the GPUs and SSDs worked well together, quickly transferring data.

The teams ultimately plan to open-source their hardware and software designs, a big win for the ML community.

Via The Register

Max Slater-Robins has been writing about technology for nearly a decade at various outlets, covering the rise of the technology giants, trends in enterprise and SaaS companies, and much more besides. Originally from Suffolk, he currently lives in London and likes a good night out and walks in the countryside.